Zhongrui Fan

A Multimodal Late Fusion Model for E-Commerce Product Classification

Aug 14, 2020

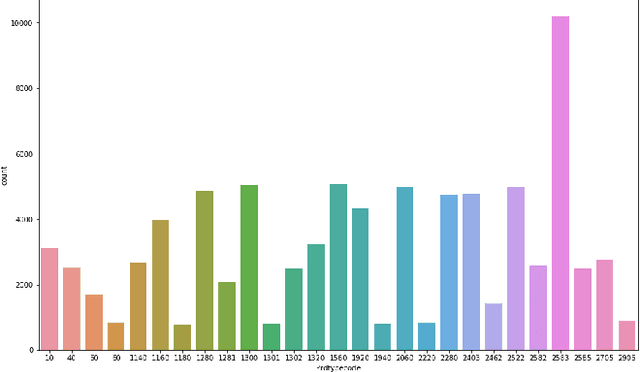

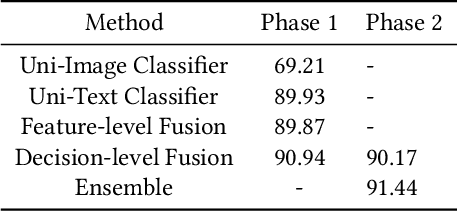

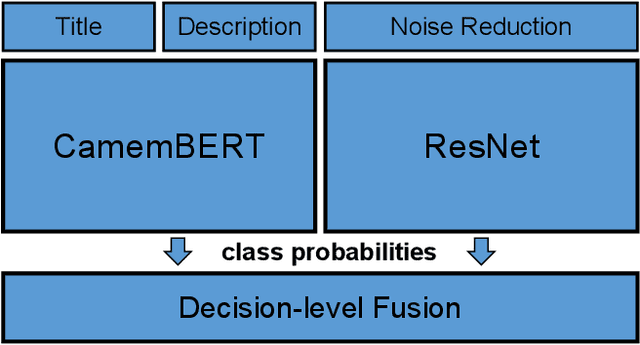

Abstract: The cataloging of product listings is a fundamental problem for most e-commerce platforms. Despite the promising results obtained by unimodal methods, their performance can be expected to improve further when multimodal product information is taken into account. In this study, we investigated a multimodal late fusion approach based on the text and image modalities to categorize e-commerce products on Rakuten. Specifically, we developed a state-of-the-art deep neural network for each input modality and then fused the models at the decision level. Experimental results on the Multimodal Product Classification Task of the SIGIR 2020 E-Commerce Workshop Data Challenge demonstrate the superiority and effectiveness of our proposed method compared with unimodal and other multimodal methods. Our team, pa_curis, won 1st place with a macro-F1 of 0.9144 on the final leaderboard.
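To make "fusion at the decision level" concrete, the sketch below combines the per-class probabilities produced by a text model and an image model and scores the result with macro-F1, the metric used on the leaderboard. The abstract does not state the exact fusion rule, so the weighted average and the `text_weight` parameter are assumptions for illustration, and the function names are hypothetical. The macro-F1 helper averages per-class F1 over the union of true and predicted labels.

```python
from typing import List, Sequence


def late_fusion(text_probs: Sequence[Sequence[float]],
                image_probs: Sequence[Sequence[float]],
                text_weight: float = 0.5) -> List[int]:
    """Decision-level fusion (assumed rule): weighted average of the two
    models' class probabilities, then argmax per item."""
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be in [0, 1]")
    if len(text_probs) != len(image_probs):
        raise ValueError("both models must score the same items")
    predictions = []
    for p_text, p_image in zip(text_probs, image_probs):
        if len(p_text) != len(p_image):
            raise ValueError("both models must output the same classes")
        fused = [text_weight * t + (1.0 - text_weight) * i
                 for t, i in zip(p_text, p_image)]
        # Index of the highest fused probability is the predicted class.
        predictions.append(max(range(len(fused)), key=fused.__getitem__))
    return predictions


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Unweighted mean of per-class F1 over all labels seen in either list."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        # F1 = 2TP / (2TP + FP + FN); the denominator is > 0 for every seen label.
        scores.append(2 * tp / (2 * tp + fp + fn))
    return sum(scores) / len(scores)


if __name__ == "__main__":
    text = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]
    image = [[0.2, 0.7, 0.1], [0.1, 0.2, 0.7]]
    print(late_fusion(text, image))        # equal weights
    print(late_fusion(text, image, 0.9))   # trust the text model more
```

Averaging probabilities keeps each modality's network independent, so either model can be retrained or replaced without touching the other. In practice the weight would be tuned on a validation split.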

A Hybrid BERT and LightGBM based Model for Predicting Emotion GIF Categories on Twitter

Aug 14, 2020

Abstract: Animated Graphics Interchange Format (GIF) images have been widely used on social media as an intuitive way of expressing emotion. Given their expressiveness, GIFs offer a more nuanced and precise way to convey emotions. In this paper, we present our solution for the EmotionGIF 2020 challenge, the shared task of SocialNLP 2020. To recommend GIF categories for unlabeled tweets, we treated the problem as a matching task and proposed a learning-to-rank framework based on Bidirectional Encoder Representations from Transformers (BERT) and LightGBM. Our team won 4th place with a Mean Average Precision at 6 (MAP@6) score of 0.5394 on the round 1 leaderboard.
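Framing recommendation as ranking means each tweet receives a score for every candidate GIF category, the top six are submitted, and the list is graded with MAP@6. The sketch below shows that last step under stated assumptions: the scores would come from the BERT and LightGBM ranker, but here they are hand-written, the category names are hypothetical, and the AP@k formula is the common definition (precision at each hit, divided by min(|gold|, k)), which may differ in detail from the challenge's official scorer.

```python
from typing import Dict, List, Sequence, Set


def top_k_categories(scores: Dict[str, float], k: int = 6) -> List[str]:
    """Rank one tweet's candidate categories by score, highest first."""
    return sorted(scores, key=lambda c: scores[c], reverse=True)[:k]


def average_precision_at_k(ranked: Sequence[str], gold: Set[str], k: int = 6) -> float:
    """AP@k: sum of precision at each relevant rank, normalized by min(|gold|, k)."""
    if not gold:
        return 0.0
    hits = 0
    total = 0.0
    for i, category in enumerate(ranked[:k], start=1):
        # Count each relevant category once, even if it is repeated in the list.
        if category in gold and category not in ranked[:i - 1]:
            hits += 1
            total += hits / i
    return total / min(len(gold), k)


def mean_average_precision_at_k(rankings: Sequence[Sequence[str]],
                                 golds: Sequence[Set[str]], k: int = 6) -> float:
    """MAP@k: mean of AP@k over all tweets."""
    if len(rankings) != len(golds) or not rankings:
        raise ValueError("need one non-empty ranking per gold set")
    return sum(average_precision_at_k(r, g, k)
               for r, g in zip(rankings, golds)) / len(rankings)


if __name__ == "__main__":
    # Hypothetical model scores for one tweet.
    scores = {"happy": 0.9, "sad": 0.1, "dance": 0.5}
    print(top_k_categories(scores, k=2))
    rankings = [["happy", "sad", "dance", "applause", "ok", "wink"],
                ["sad", "happy", "dance", "applause", "ok", "wink"]]
    golds = [{"happy", "dance"}, {"happy"}]
    print(mean_average_precision_at_k(rankings, golds))
```

Because MAP@6 rewards placing correct categories early, a ranking objective fits it more directly than independent per-category classification.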
