Yasser Shoukry

From Dead Neurons to Deep Approximators: Deep Bernstein Networks as a Provable Alternative to Residual Layers

Feb 04, 2026Abstract:Residual connections are the de facto standard for mitigating vanishing gradients, yet they impose structural constraints and fail to address the inherent inefficiencies of piecewise linear activations. We show that Deep Bernstein Networks (which utilizes Bernstein polynomials as activation functions) can act as residual-free architecture while simultaneously optimize trainability and representation power. We provide a two-fold theoretical foundation for our approach. First, we derive a theoretical lower bound on the local derivative, proving it remains strictly bounded away from zero. This directly addresses the root cause of gradient stagnation; empirically, our architecture reduces ``dead'' neurons from 90\% in standard deep networks to less than 5\%, outperforming ReLU, Leaky ReLU, SeLU, and GeLU. Second, we establish that the approximation error for Bernstein-based networks decays exponentially with depth, a significant improvement over the polynomial rates of ReLU-based architectures. By unifying these results, we demonstrate that Bernstein activations provide a superior mechanism for function approximation and signal flow. Our experiments on HIGGS and MNIST confirm that Deep Bernstein Networks achieve high-performance training without skip-connections, offering a principled path toward deep, residual-free architectures with enhanced expressive capacity.

Correct-by-Construction Vision-based Pose Estimation using Geometric Generative Models

Jan 24, 2026Abstract:We consider the problem of vision-based pose estimation for autonomous systems. While deep neural networks have been successfully used for vision-based tasks, they inherently lack provable guarantees on the correctness of their output, which is crucial for safety-critical applications. We present a framework for designing certifiable neural networks (NNs) for perception-based pose estimation that integrates physics-driven modeling with learning-based estimation. The proposed framework begins by leveraging the known geometry of planar objects commonly found in the environment, such as traffic signs and runway markings, referred to as target objects. At its core, it introduces a geometric generative model (GGM), a neural-network-like model whose parameters are derived from the image formation process of a target object observed by a camera. Once designed, the GGM can be used to train NN-based pose estimators with certified guarantees in terms of their estimation errors. We first demonstrate this framework in uncluttered environments, where the target object is the only object present in the camera's field of view. We extend this using ideas from NN reachability analysis to design certified object NN that can detect the presence of the target object in cluttered environments. Subsequently, the framework consolidates the certified object detector with the certified pose estimator to design a multi-stage perception pipeline that generalizes the proposed approach to cluttered environments, while maintaining its certified guarantees. We evaluate the proposed framework using both synthetic and real images of various planar objects commonly encountered by autonomous vehicles. Using images captured by an event-based camera, we show that the trained encoder can effectively estimate the pose of a traffic sign in accordance with the certified bound provided by the framework.

KoALA: KL-L0 Adversarial Detector via Label Agreement

Oct 14, 2025

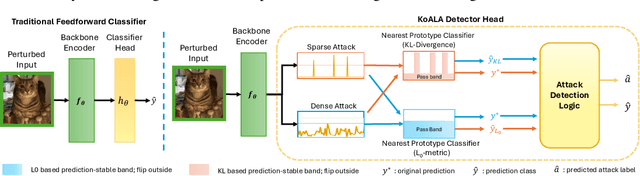

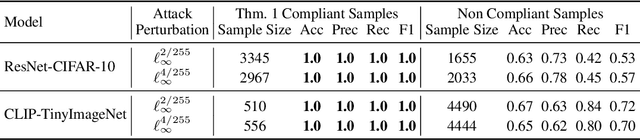

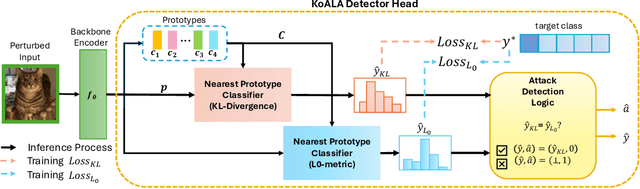

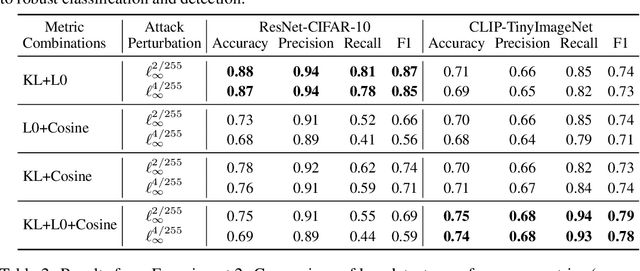

Abstract:Deep neural networks are highly susceptible to adversarial attacks, which pose significant risks to security- and safety-critical applications. We present KoALA (KL-L0 Adversarial detection via Label Agreement), a novel, semantics-free adversarial detector that requires no architectural changes or adversarial retraining. KoALA operates on a simple principle: it detects an adversarial attack when class predictions from two complementary similarity metrics disagree. These metrics-KL divergence and an L0-based similarity-are specifically chosen to detect different types of perturbations. The KL divergence metric is sensitive to dense, low-amplitude shifts, while the L0-based similarity is designed for sparse, high-impact changes. We provide a formal proof of correctness for our approach. The only training required is a simple fine-tuning step on a pre-trained image encoder using clean images to ensure the embeddings align well with both metrics. This makes KOALA a lightweight, plug-and-play solution for existing models and various data modalities. Our extensive experiments on ResNet/CIFAR-10 and CLIP/Tiny-ImageNet confirm our theoretical claims. When the theorem's conditions are met, KoALA consistently and effectively detects adversarial examples. On the full test sets, KoALA achieves a precision of 0.94 and a recall of 0.81 on ResNet/CIFAR-10, and a precision of 0.66 and a recall of 0.85 on CLIP/Tiny-ImageNet.

Extracting Forward Invariant Sets from Neural Network-Based Control Barrier Functions

Jan 25, 2025Abstract:Training Neural Networks (NNs) to serve as Barrier Functions (BFs) is a popular way to improve the safety of autonomous dynamical systems. Despite significant practical success, these methods are not generally guaranteed to produce true BFs in a provable sense, which undermines their intended use as safety certificates. In this paper, we consider the problem of formally certifying a learned NN as a BF with respect to state avoidance for an autonomous system: viz. computing a region of the state space on which the candidate NN is provably a BF. In particular, we propose a sound algorithm that efficiently produces such a certificate set for a shallow NN. Our algorithm combines two novel approaches: it first uses NN reachability tools to identify a subset of states for which the output of the NN does not increase along system trajectories; then, it uses a novel enumeration algorithm for hyperplane arrangements to find the intersection of the NN's zero-sub-level set with the first set of states. In this way, our algorithm soundly finds a subset of states on which the NN is certified as a BF. We further demonstrate the effectiveness of our algorithm at certifying for real-world NNs as BFs in two case studies. We complemented these with scalability experiments that demonstrate the efficiency of our algorithm.

Certified Vision-based State Estimation for Autonomous Landing Systems using Reachability Analysis

Sep 10, 2023Abstract:This paper studies the problem of designing a certified vision-based state estimator for autonomous landing systems. In such a system, a neural network (NN) processes images from a camera to estimate the aircraft relative position with respect to the runway. We propose an algorithm to design such NNs with certified properties in terms of their ability to detect runways and provide accurate state estimation. At the heart of our approach is the use of geometric models of perspective cameras to obtain a mathematical model that captures the relation between the aircraft states and the inputs. We show that such geometric models enjoy mixed monotonicity properties that can be used to design state estimators with certifiable error bounds. We show the effectiveness of the proposed approach using an experimental testbed on data collected from event-based cameras.

DeepBern-Nets: Taming the Complexity of Certifying Neural Networks using Bernstein Polynomial Activations and Precise Bound Propagation

May 22, 2023Abstract:Formal certification of Neural Networks (NNs) is crucial for ensuring their safety, fairness, and robustness. Unfortunately, on the one hand, sound and complete certification algorithms of ReLU-based NNs do not scale to large-scale NNs. On the other hand, incomplete certification algorithms are easier to compute, but they result in loose bounds that deteriorate with the depth of NN, which diminishes their effectiveness. In this paper, we ask the following question; can we replace the ReLU activation function with one that opens the door to incomplete certification algorithms that are easy to compute but can produce tight bounds on the NN's outputs? We introduce DeepBern-Nets, a class of NNs with activation functions based on Bernstein polynomials instead of the commonly used ReLU activation. Bernstein polynomials are smooth and differentiable functions with desirable properties such as the so-called range enclosure and subdivision properties. We design a novel algorithm, called Bern-IBP, to efficiently compute tight bounds on DeepBern-Nets outputs. Our approach leverages the properties of Bernstein polynomials to improve the tractability of neural network certification tasks while maintaining the accuracy of the trained networks. We conduct comprehensive experiments in adversarial robustness and reachability analysis settings to assess the effectiveness of the proposed Bernstein polynomial activation in enhancing the certification process. Our proposed framework achieves high certified accuracy for adversarially-trained NNs, which is often a challenging task for certifiers of ReLU-based NNs. Moreover, using Bern-IBP bounds for certified training results in NNs with state-of-the-art certified accuracy compared to ReLU networks. This work establishes Bernstein polynomial activation as a promising alternative for improving NN certification tasks across various applications.

Model Extraction Attacks Against Reinforcement Learning Based Controllers

Apr 25, 2023Abstract:We introduce the problem of model-extraction attacks in cyber-physical systems in which an attacker attempts to estimate (or extract) the feedback controller of the system. Extracting (or estimating) the controller provides an unmatched edge to attackers since it allows them to predict the future control actions of the system and plan their attack accordingly. Hence, it is important to understand the ability of the attackers to perform such an attack. In this paper, we focus on the setting when a Deep Neural Network (DNN) controller is trained using Reinforcement Learning (RL) algorithms and is used to control a stochastic system. We play the role of the attacker that aims to estimate such an unknown DNN controller, and we propose a two-phase algorithm. In the first phase, also called the offline phase, the attacker uses side-channel information about the RL-reward function and the system dynamics to identify a set of candidate estimates of the unknown DNN. In the second phase, also called the online phase, the attacker observes the behavior of the unknown DNN and uses these observations to shortlist the set of final policy estimates. We provide theoretical analysis of the error between the unknown DNN and the estimated one. We also provide numerical results showing the effectiveness of the proposed algorithm.

SEO: Safety-Aware Energy Optimization Framework for Multi-Sensor Neural Controllers at the Edge

Feb 24, 2023Abstract:Runtime energy management has become quintessential for multi-sensor autonomous systems at the edge for achieving high performance given the platform constraints. Typical for such systems, however, is to have their controllers designed with formal guarantees on safety that precede in priority such optimizations, which in turn limits their application in real settings. In this paper, we propose a novel energy optimization framework that is aware of the autonomous system's safety state, and leverages it to regulate the application of energy optimization methods so that the system's formal safety properties are preserved. In particular, through the formal characterization of a system's safety state as a dynamic processing deadline, the computing workloads of the underlying models can be adapted accordingly. For our experiments, we model two popular runtime energy optimization methods, offloading and gating, and simulate an autonomous driving system (ADS) use-case in the CARLA simulation environment with performance characterizations obtained from the standard Nvidia Drive PX2 ADS platform. Our results demonstrate that through a formal awareness of the perceived risks in the test case scenario, energy efficiency gains are still achieved (reaching 89.9%) while maintaining the desired safety properties.

EnergyShield: Provably-Safe Offloading of Neural Network Controllers for Energy Efficiency

Feb 13, 2023Abstract:To mitigate the high energy demand of Neural Network (NN) based Autonomous Driving Systems (ADSs), we consider the problem of offloading NN controllers from the ADS to nearby edge-computing infrastructure, but in such a way that formal vehicle safety properties are guaranteed. In particular, we propose the EnergyShield framework, which repurposes a controller ''shield'' as a low-power runtime safety monitor for the ADS vehicle. Specifically, the shield in EnergyShield provides not only safety interventions but also a formal, state-based quantification of the tolerable edge response time before vehicle safety is compromised. Using EnergyShield, an ADS can then save energy by wirelessly offloading NN computations to edge computers, while still maintaining a formal guarantee of safety until it receives a response (on-vehicle hardware provides a just-in-time fail safe). To validate the benefits of EnergyShield, we implemented and tested it in the Carla simulation environment. Our results show that EnergyShield maintains safe vehicle operation while providing significant energy savings compared to on-vehicle NN evaluation: from 24% to 54% less energy across a range of wireless conditions and edge delays.

BERN-NN: Tight Bound Propagation For Neural Networks Using Bernstein Polynomial Interval Arithmetic

Nov 22, 2022Abstract:In this paper, we present BERN-NN as an efficient tool to perform bound propagation of Neural Networks (NNs). Bound propagation is a critical step in wide range of NN model checkers and reachability analysis tools. Given a bounded input set, bound propagation algorithms aim to compute tight bounds on the output of the NN. So far, linear and convex optimizations have been used to perform bound propagation. Since neural networks are highly non-convex, state-of-the-art bound propagation techniques suffer from introducing large errors. To circumvent such drawback, BERN-NN approximates the bounds of each neuron using a class of polynomials called Bernstein polynomials. Bernstein polynomials enjoy several interesting properties that allow BERN-NN to obtain tighter bounds compared to those relying on linear and convex approximations. BERN-NN is efficiently parallelized on graphic processing units (GPUs). Extensive numerical results show that bounds obtained by BERN-NN are orders of magnitude tighter than those obtained by state-of-the-art verifiers such as linear programming and linear interval arithmetic. Moreoveer, BERN-NN is both faster and produces tighter outputs compared to convex programming approaches like alpha-CROWN.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge