Yanru Sun

From Absolute to Relative: Rethinking Reward Shaping in Group-Based Reinforcement Learning

Jan 30, 2026

Abstract:Reinforcement learning has become a cornerstone for enhancing the reasoning capabilities of Large Language Models, where group-based approaches such as GRPO have emerged as efficient paradigms that optimize policies by leveraging intra-group performance differences. However, these methods typically rely on absolute numerical rewards, introducing intrinsic limitations. In verifiable tasks, identical group evaluations often result in sparse supervision, while in open-ended scenarios, the score range instability of reward models undermines advantage estimation based on group means. To address these limitations, we propose Reinforcement Learning with Relative Rewards (RLRR), a framework that shifts reward shaping from absolute scoring to relative ranking. Complementing this framework, we introduce the Ranking Reward Model, a listwise preference model tailored for group-based optimization to directly generate relative rankings. By transforming raw evaluations into robust relative signals, RLRR effectively mitigates signal sparsity and reward instability. Experimental results demonstrate that RLRR yields consistent performance improvements over standard group-based baselines across reasoning benchmarks and open-ended generation tasks.
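
The contrast between absolute and relative reward signals can be illustrated with a small sketch. This is not the RLRR algorithm or the Ranking Reward Model; `group_normalized_advantages` and `rank_rewards` are hypothetical helpers, and the mean/std normalization is only assumed to resemble a GRPO-style group baseline. It shows that identical rewards in a group give zero advantages, and that ranks stay unchanged when the score scale is distorted monotonically.

```python
from statistics import mean, pstdev


def group_normalized_advantages(rewards, eps=1e-8):
    """Centre and scale absolute rewards within one group (assumed GRPO-style)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def rank_rewards(scores):
    """Map scores to ranks in [0, 1]; tied scores share their average rank."""
    n = len(scores)
    if n == 1:
        return [0.5]
    order = sorted(range(n), key=lambda i: scores[i])
    ranks = [0.0] * n
    i = 0
    while i < n:
        # Extend j over the run of scores tied with position i.
        j = i
        while j + 1 < n and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_position = (i + j) / 2
        for k in range(i, j + 1):
            ranks[order[k]] = avg_position / (n - 1)
        i = j + 1
    return ranks


# Every response in the group received the same verifiable reward.
identical = [1.0, 1.0, 1.0, 1.0]
flat = group_normalized_advantages(identical)  # all zeros: no learning signal

# The same four responses scored on two differently shaped scales.
raw = [0.2, 0.9, 0.5, 0.7]
stretched = [10 * r ** 3 for r in raw]  # monotone distortion of the scores

print(group_normalized_advantages(raw))
print(group_normalized_advantages(stretched))  # differs from the line above
print(rank_rewards(raw))
print(rank_rewards(stretched))  # same as the line above
```

Ranks alone do not resolve the all-identical case (ties still collapse to one value); how RLRR obtains a usable ordering there is described in the paper, not in this sketch.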

SEED: Spectral Entropy-Guided Evaluation of SpatialTemporal Dependencies for Multivariate Time Series Forecasting

Dec 18, 2025

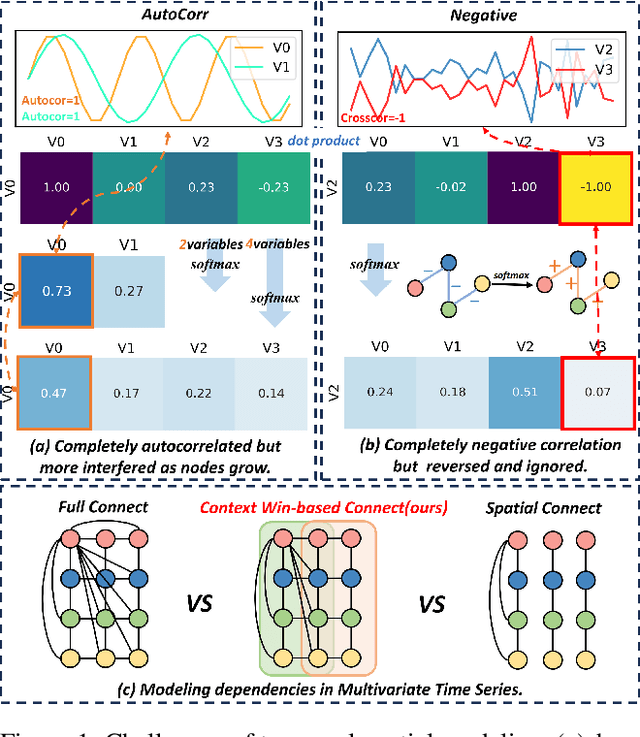

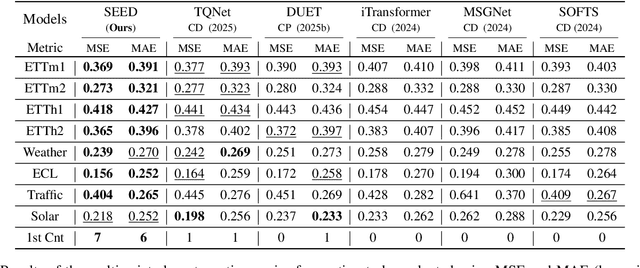

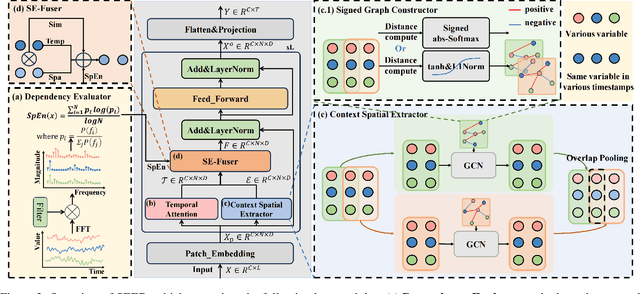

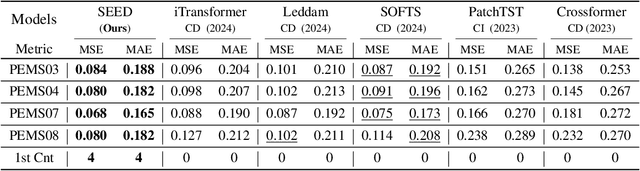

Abstract:Effective multivariate time series forecasting often benefits from accurately modeling complex inter-variable dependencies. However, existing attention- or graph-based methods face three key issues: (a) strong temporal self-dependencies are often disrupted by irrelevant variables; (b) softmax normalization ignores and reverses negative correlations; (c) variables struggle to perceive their temporal positions. To address these, we propose SEED, a Spectral Entropy-guided Evaluation framework for spatial-temporal Dependency modeling. SEED introduces a Dependency Evaluator, a key innovation that leverages spectral entropy to dynamically provide a preliminary evaluation of the spatial and temporal dependencies of each variable, enabling the model to adaptively balance Channel Independence (CI) and Channel Dependence (CD) strategies. To account for temporal regularities originating from the influence of other variables rather than intrinsic dynamics, we propose a Spectral Entropy-based Fuser that further refines the evaluated dependency weights, effectively separating out this externally induced component. Moreover, to preserve negative correlations, we introduce a Signed Graph Constructor that enables signed edge weights, overcoming the limitations of softmax. Finally, to help variables perceive their temporal positions and thereby construct more comprehensive spatial features, we introduce the Context Spatial Extractor, which leverages local contextual windows to extract spatial features. Extensive experiments on 12 real-world datasets from various application domains demonstrate that SEED achieves state-of-the-art performance, validating its effectiveness and generality.
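
For readers unfamiliar with spectral entropy, the following is a minimal sketch of the quantity itself: the Shannon entropy of a series' normalized power spectrum. It does not reproduce SEED's Dependency Evaluator; the function names, the naive DFT, and the normalization to [0, 1] are illustrative assumptions. A strongly periodic variable scores near 0, while a noise-like variable scores near 1.

```python
import cmath
import math
import random


def power_spectrum(x):
    """Power at positive frequencies via a naive DFT (fine for short series)."""
    n = len(x)
    mu = sum(x) / n
    centred = [v - mu for v in x]  # remove the DC component
    spectrum = []
    for k in range(1, n // 2 + 1):
        coeff = sum(
            centred[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        spectrum.append(abs(coeff) ** 2)
    return spectrum


def spectral_entropy(x):
    """Shannon entropy of the normalized power spectrum, scaled to [0, 1]."""
    spectrum = power_spectrum(x)
    total = sum(spectrum)
    if total == 0:
        return 0.0  # constant series: no spectral content
    probs = [p / total for p in spectrum if p > 0]
    entropy = -sum(p * math.log(p) for p in probs)
    return entropy / math.log(len(spectrum))


n = 64
# A clean periodic signal: power concentrated in a single frequency bin.
sine = [math.sin(2 * math.pi * 4 * t / n) for t in range(n)]
# White noise: power spread across all frequency bins.
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(n)]

print("sine :", spectral_entropy(sine))
print("noise:", spectral_entropy(noise))
```

One plausible reading of the abstract is that low-entropy variables, having strong regularity of their own, lean toward channel-independent treatment, but that mapping is an interpretation rather than something this sketch establishes.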

Adapting LLMs to Time Series Forecasting via Temporal Heterogeneity Modeling and Semantic Alignment

Aug 10, 2025

Abstract:Large Language Models (LLMs) have recently demonstrated impressive capabilities in natural language processing owing to their strong generalization and sequence modeling abilities. However, their direct application to time series forecasting remains challenging due to two fundamental issues: the inherent heterogeneity of temporal patterns and the modality gap between continuous numerical signals and discrete language representations. In this work, we propose TALON, a unified framework that enhances LLM-based forecasting by modeling temporal heterogeneity and enforcing semantic alignment. Specifically, we design a Heterogeneous Temporal Encoder that partitions multivariate time series into structurally coherent segments, enabling localized expert modeling across diverse temporal patterns. To bridge the modality gap, we introduce a Semantic Alignment Module that aligns temporal features with LLM-compatible representations, enabling effective integration of time series into language-based models while eliminating the need for handcrafted prompts during inference. Extensive experiments on seven real-world benchmarks demonstrate that TALON achieves superior performance across all datasets, with average MSE improvements of up to 11% over recent state-of-the-art methods. These results underscore the effectiveness of incorporating both pattern-aware and semantic-aware designs when adapting LLMs for time series forecasting. The code is available at: https://github.com/syrGitHub/TALON.

LangTime: A Language-Guided Unified Model for Time Series Forecasting with Proximal Policy Optimization

Mar 11, 2025

Abstract:Recent research has shown an increasing interest in utilizing pre-trained large language models (LLMs) for a variety of time series applications. However, there are three main challenges when using LLMs as foundational models for time series forecasting: (1) cross-domain generalization, (2) cross-modality alignment, and (3) error accumulation in autoregressive frameworks. To address these challenges, we propose LangTime, a language-guided unified model for time series forecasting that incorporates cross-domain pre-training with reinforcement learning-based fine-tuning. Specifically, LangTime constructs Temporal Comprehension Prompts (TCPs), which include dataset-wise and channel-wise instructions, to facilitate domain adaptation and condense time series into a single token, enabling LLMs to better understand and align temporal data. To improve autoregressive forecasting, we introduce TimePPO, a reinforcement learning-based fine-tuning algorithm. TimePPO mitigates error accumulation by leveraging a multidimensional reward function tailored for time series and a repeat-based value estimation strategy. Extensive experiments demonstrate that LangTime achieves state-of-the-art cross-domain forecasting performance, while TimePPO fine-tuning effectively enhances the stability and accuracy of autoregressive forecasting.

Lightweight Channel-wise Dynamic Fusion Model: Non-stationary Time Series Forecasting via Entropy Analysis

Mar 04, 2025

Abstract:Non-stationarity is an intrinsic property of real-world time series and plays a crucial role in time series forecasting. Previous studies primarily adopt instance normalization to attenuate the non-stationarity of original series for better predictability. However, instance normalization that directly removes the inherent non-stationarity can lead to three issues: (1) disrupting global temporal dependencies, (2) ignoring channel-specific differences, and (3) producing over-smoothed predictions. To address these issues, we theoretically demonstrate that variance can be a valid and interpretable proxy for quantifying non-stationarity of time series. Based on the analysis, we propose a novel lightweight Channel-wise Dynamic Fusion Model (CDFM), which selectively and dynamically recovers intrinsic non-stationarity of the original series, while keeping the predictability of normalized series. First, we design a Dual-Predictor Module, which involves two branches: a Time Stationary Predictor for capturing stable patterns and a Time Non-stationary Predictor for modeling global dynamic patterns. Second, we propose a Fusion Weight Learner to dynamically characterize the intrinsic non-stationary information across different samples based on variance. Finally, we introduce a Channel Selector to selectively recover non-stationary information from specific channels by evaluating their non-stationarity, similarity, and distribution consistency, enabling the model to capture relevant dynamic features and avoid overfitting. Comprehensive experiments on seven time series datasets demonstrate the superiority and generalization capabilities of CDFM.
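
The effect of instance normalization described above can be seen in a small sketch. It is not CDFM; `instance_normalize` and `denormalize` are hypothetical helpers using a standard per-window mean/variance normalization. Two windows with very different volatility become nearly identical after normalization, and the per-window variance is the statistic that still tells them apart.

```python
from statistics import mean, pvariance


def instance_normalize(window, eps=1e-8):
    """Normalize one window to zero mean and unit variance; return the stats too."""
    mu = mean(window)
    var = pvariance(window)
    scale = (var + eps) ** 0.5
    return [(v - mu) / scale for v in window], mu, var


def denormalize(normed, mu, var, eps=1e-8):
    """Invert instance_normalize using the stored mean and variance."""
    scale = (var + eps) ** 0.5
    return [v * scale + mu for v in normed]


calm = [10.0, 11.0, 10.0, 11.0]      # small swings
volatile = [10.0, 30.0, 10.0, 30.0]  # large swings, same shape

calm_normed, calm_mu, calm_var = instance_normalize(calm)
vol_normed, vol_mu, vol_var = instance_normalize(volatile)

print(calm_normed)        # approximately [-1, 1, -1, 1]
print(vol_normed)         # approximately [-1, 1, -1, 1]
print(calm_var, vol_var)  # 0.25 vs 100.0: the information normalization removed
```

Restoring the original scale with `denormalize(calm_normed, calm_mu, calm_var)` recovers the window only because the statistics were kept alongside it.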

PPGF: Probability Pattern-Guided Time Series Forecasting

Feb 18, 2025

Abstract:Time series forecasting (TSF) is an essential branch of machine learning with various applications. Most methods for TSF focus on constructing different networks to extract better information and improve performance. However, practical application data contain different internal mechanisms, resulting in a mixture of multiple patterns. That is, a model's ability to fit each pattern differs, so its errors differ across patterns. To solve this problem, we propose an end-to-end framework, namely probability pattern-guided time series forecasting (PPGF). PPGF reformulates the TSF problem as a forecasting task guided by probabilistic pattern classification. First, we propose a grouping strategy to approach forecasting problems as classification and alleviate the impact of data imbalance on classification. Second, we predict in the corresponding class interval to guarantee the consistency of classification and forecasting. In addition, True Class Probability (TCP) is introduced to pay more attention to the difficult samples to improve the classification accuracy. In detail, PPGF classifies the different patterns to determine which one the target value may belong to and estimates it accurately in the corresponding interval. To demonstrate the effectiveness of the proposed framework, we conduct extensive experiments on real-world datasets, and PPGF achieves significant performance improvements over several baseline methods. Furthermore, the effectiveness of TCP and the necessity of consistency between classification and forecasting are demonstrated in the experiments. All data and code are available online: https://github.com/syrGitHub/PPGF.
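
True Class Probability is the probability a classifier assigns to the correct class, so it is low exactly on the samples the model finds difficult. The sketch below computes it from raw logits; the function names are illustrative, and the `1 - TCP` weight at the end is only an assumed example of emphasizing hard samples, not necessarily how PPGF uses TCP.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def true_class_probability(logits, true_class):
    """Probability the classifier assigns to the correct class."""
    return softmax(logits)[true_class]


# Both samples belong to class 0.
easy_tcp = true_class_probability([4.0, 0.0, 0.0], true_class=0)
hard_tcp = true_class_probability([0.0, 2.0, 0.0], true_class=0)

# Hypothetical weighting: samples with low TCP receive more attention.
easy_weight = 1.0 - easy_tcp
hard_weight = 1.0 - hard_tcp

print(easy_tcp, easy_weight)
print(hard_tcp, hard_weight)
```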

Learning Pattern-Specific Experts for Time Series Forecasting Under Patch-level Distribution Shift

Oct 13, 2024

Abstract:Time series forecasting, which aims to predict future values based on historical data, has garnered significant attention due to its broad range of applications. However, real-world time series often exhibit complex non-uniform distributions with varying patterns across segments, such as season, operating condition, or semantic meaning, making accurate forecasting challenging. Existing approaches, which typically train a single model to capture all these diverse patterns, often struggle with the pattern drifts between patches and may lead to poor generalization. To address these challenges, we propose TFPS, a novel architecture that leverages pattern-specific experts for more accurate and adaptable time series forecasting. TFPS employs a dual-domain encoder to capture both time-domain and frequency-domain features, enabling a more comprehensive understanding of temporal dynamics. It then uses subspace clustering to dynamically identify distinct patterns across data patches. Finally, pattern-specific experts model these unique patterns, delivering tailored predictions for each patch. By explicitly learning and adapting to evolving patterns, TFPS achieves significantly improved forecasting accuracy. Extensive experiments on real-world datasets demonstrate that TFPS outperforms state-of-the-art methods, particularly in long-term forecasting, through its dynamic and pattern-aware learning approach. The data and code are available at: https://github.com/syrGitHub/TFPS.

Hierarchical Classification Auxiliary Network for Time Series Forecasting

May 29, 2024

Abstract:Deep learning has significantly advanced time series forecasting through its powerful capacity to capture sequence relationships. However, training these models with the Mean Square Error (MSE) loss often results in over-smoothed predictions, making it challenging to handle the complexity and learn high-entropy features from time series data with high variability and unpredictability. In this work, we introduce a novel approach by tokenizing time series values to train forecasting models via cross-entropy loss, while considering the continuous nature of time series data. Specifically, we propose Hierarchical Classification Auxiliary Network, HCAN, a general model-agnostic component that can be integrated with any forecasting model. HCAN is based on a Hierarchy-Aware Attention module that integrates multi-granularity high-entropy features at different hierarchy levels. At each level, we assign a class label for timesteps to train an Uncertainty-Aware Classifier. This classifier mitigates the over-confidence in softmax loss via evidence theory. We also implement a Hierarchical Consistency Loss to maintain prediction consistency across hierarchy levels. Extensive experiments integrating HCAN with state-of-the-art forecasting models demonstrate substantial improvements over baselines on several real-world datasets. Code is available at: https://github.com/syrGitHub/HCAN.
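
As a rough illustration of tokenizing continuous values at several granularities, the sketch below assigns each timestep a class label per hierarchy level using equal-width bins. The binning scheme, the level sizes `(2, 4, 8)`, and the helper names are assumptions for illustration; the abstract does not specify how HCAN defines its classes.

```python
def bin_index(value, low, high, num_bins):
    """Equal-width bin of `value` in [low, high], clamped to a valid index."""
    if not low < high:
        raise ValueError("low must be smaller than high")
    fraction = (value - low) / (high - low)
    index = int(fraction * num_bins)
    return min(max(index, 0), num_bins - 1)


def hierarchical_labels(series, low, high, bins_per_level=(2, 4, 8)):
    """One list of class labels per hierarchy level, coarse to fine."""
    return [
        [bin_index(v, low, high, bins) for v in series]
        for bins in bins_per_level
    ]


series = [0.05, 0.30, 0.55, 0.95]
coarse, medium, fine = hierarchical_labels(series, low=0.0, high=1.0)

print(coarse)  # [0, 0, 1, 1]
print(medium)  # [0, 1, 2, 3]
print(fine)    # [0, 2, 4, 7]

# Because each level doubles the bin count, a fine label's parent is label // 2,
# which is the kind of cross-level agreement a consistency loss could encourage.
print([label // 2 for label in fine] == medium)
```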
