Xuehao Cui

A Watermark for Auto-Regressive Image Generation Models

Jun 13, 2025

Abstract: The rapid evolution of image generation models has revolutionized visual content creation, enabling the synthesis of highly realistic and contextually accurate images for diverse applications. However, the potential for misuse, such as deepfake generation, image-based phishing attacks, and fabrication of misleading visual evidence, underscores the need for robust authenticity verification mechanisms. While traditional statistical watermarking techniques have proven effective for autoregressive language models, their direct adaptation to image generation models encounters significant challenges due to a phenomenon we term retokenization mismatch, a disparity between original and retokenized sequences during the image generation process. To overcome this limitation, we propose C-reweight, a novel, distortion-free watermarking method explicitly designed for image generation models. By leveraging a clustering-based strategy that treats tokens within the same cluster equivalently, C-reweight mitigates retokenization mismatch while preserving image fidelity. Extensive evaluations on leading image generation platforms reveal that C-reweight not only maintains the visual quality of generated images but also improves detectability over existing distortion-free watermarking techniques, setting a new standard for secure and trustworthy image synthesis.

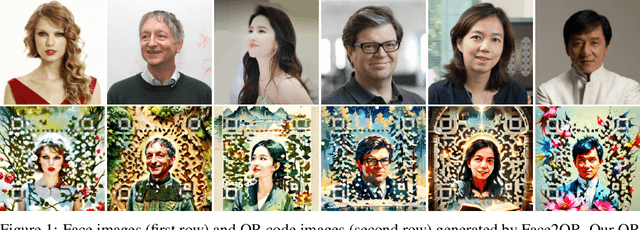

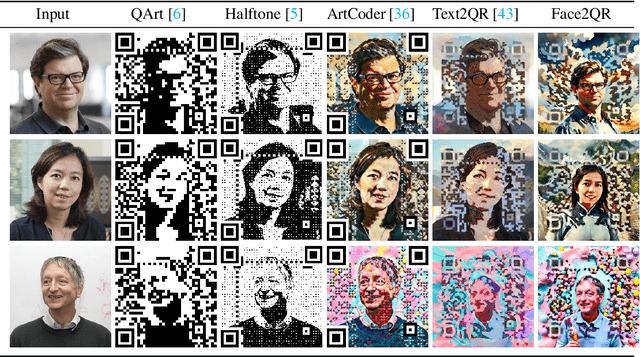

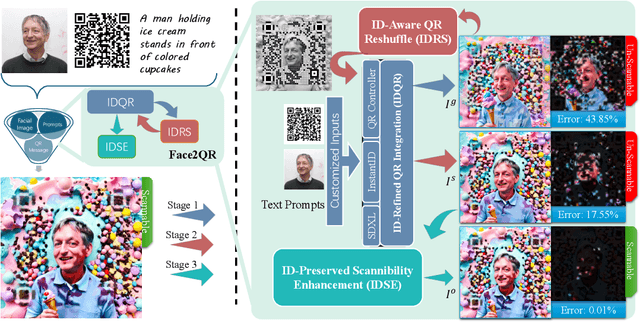

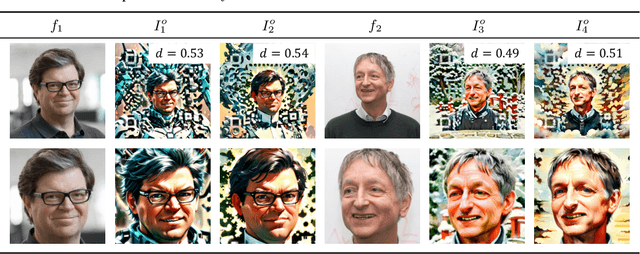

Face2QR: A Unified Framework for Aesthetic, Face-Preserving, and Scannable QR Code Generation

Nov 28, 2024

Abstract: Existing methods to generate aesthetic QR codes, such as image and style transfer techniques, tend to compromise either the visual appeal or the scannability of QR codes when they incorporate human face identity. Addressing these imperfections, we present Face2QR, a novel pipeline specifically designed for generating personalized QR codes that harmoniously blend aesthetics, face identity, and scannability. Our pipeline introduces three innovative components. First, the ID-refined QR integration (IDQR) seamlessly intertwines the background styling with face ID, utilizing a unified Stable Diffusion (SD)-based framework with control networks. Second, the ID-aware QR ReShuffle (IDRS) effectively rectifies the conflicts between face IDs and QR patterns, rearranging QR modules to maintain the integrity of facial features without compromising scannability. Lastly, the ID-preserved Scannability Enhancement (IDSE) markedly boosts scanning robustness through latent code optimization, striking a delicate balance between face ID, aesthetic quality, and QR functionality. In comprehensive experiments, Face2QR demonstrates remarkable performance, outperforming existing approaches, particularly in preserving facial recognition features within custom QR code designs. Code is available at https://github.com/cavosamir/Face2QR.

On the Impact of Feature Heterophily on Link Prediction with Graph Neural Networks

Sep 26, 2024

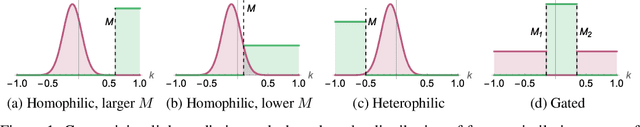

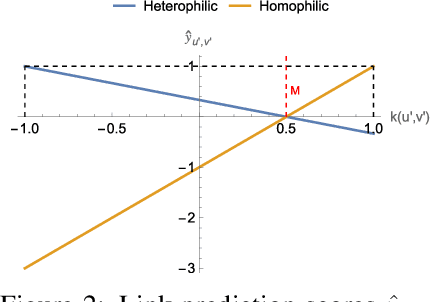

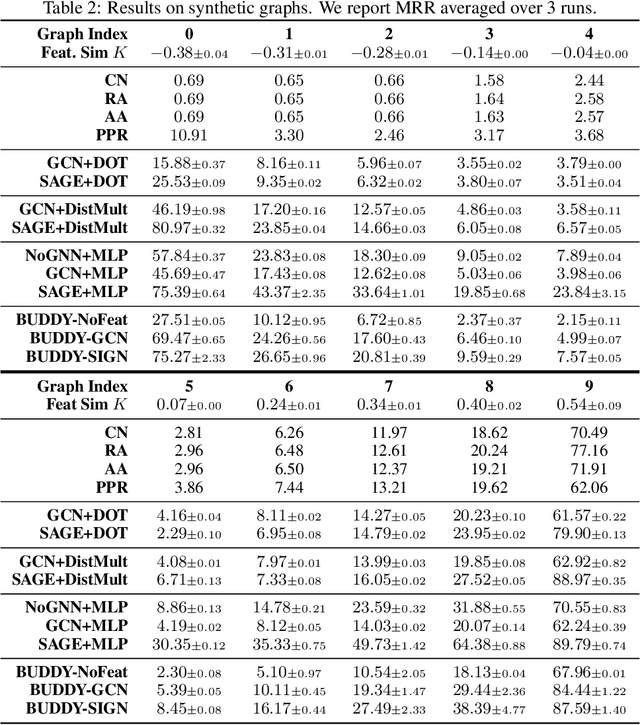

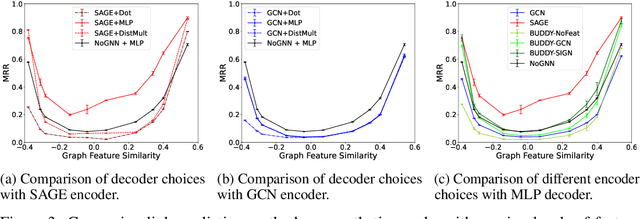

Abstract: Heterophily, or the tendency of connected nodes in networks to have different class labels or dissimilar features, has been identified as challenging for many Graph Neural Network (GNN) models. While the challenges of applying GNNs for node classification when class labels display strong heterophily are well understood, it is unclear how heterophily affects GNN performance in other important graph learning tasks where class labels are not available. In this work, we focus on the link prediction task and systematically analyze the impact of heterophily in node features on GNN performance. Theoretically, we first introduce formal definitions of homophilic and heterophilic link prediction tasks, and present a theoretical framework that highlights the different optimizations needed for the respective tasks. We then analyze how different link prediction encoders and decoders adapt to varying levels of feature homophily and introduce designs for improved performance. Our empirical analysis on a variety of synthetic and real-world datasets confirms our theoretical insights and highlights the importance of adopting learnable decoders and GNN encoders with ego- and neighbor-embedding separation in message passing for link prediction tasks beyond homophily.

Text2QR: Harmonizing Aesthetic Customization and Scanning Robustness for Text-Guided QR Code Generation

Mar 13, 2024

Abstract: In the digital era, QR codes serve as a linchpin connecting virtual and physical realms. Their pervasive integration across various applications highlights the demand for aesthetically pleasing codes without compromised scannability. However, prevailing methods grapple with the intrinsic challenge of balancing customization and scannability. Notably, stable-diffusion models have ushered in an epoch of high-quality, customizable content generation. This paper introduces Text2QR, a pioneering approach leveraging these advancements to address a fundamental challenge: concurrently achieving user-defined aesthetics and scanning robustness. To ensure stable generation of aesthetic QR codes, we introduce the QR Aesthetic Blueprint (QAB) module, which generates a blueprint image that exerts control over the entire generation process. Subsequently, the Scannability Enhancing Latent Refinement (SELR) process refines the output iteratively in the latent space, enhancing scanning robustness. This approach harnesses the potent generation capabilities of stable-diffusion models, navigating the trade-off between image aesthetics and QR code scannability. Our experiments demonstrate the seamless fusion of visual appeal with the practical utility of aesthetic QR codes, markedly outperforming prior methods. Code is available at https://github.com/mulns/Text2QR.
