Xiumin Wang

Surgery: Mitigating Harmful Fine-Tuning for Large Language Models via Attention Sink

Feb 05, 2026

Abstract: Harmful fine-tuning can invalidate the safety alignment of large language models, exposing significant safety risks. In this paper, we utilize the attention sink mechanism to mitigate harmful fine-tuning. Specifically, we first measure a statistic named *sink divergence* for each attention head and observe that *different attention heads exhibit two different signs of sink divergence*. To understand its safety implications, we conduct experiments and find that the number of attention heads with positive sink divergence increases as the model's harmfulness increases during harmful fine-tuning. Based on this finding, we propose a separable sink divergence hypothesis: *attention heads associated with learning harmful patterns during fine-tuning are separable by their sign of sink divergence*. Based on the hypothesis, we propose a fine-tuning-stage defense, dubbed Surgery. Surgery utilizes a regularizer for sink divergence suppression, which steers attention heads toward the negative sink divergence group, thereby reducing the model's tendency to learn and amplify harmful patterns. Extensive experiments demonstrate that Surgery improves defense performance by 5.90%, 11.25%, and 9.55% on the BeaverTails, HarmBench, and SorryBench benchmarks, respectively. Source code is available at https://github.com/Lslland/Surgery.

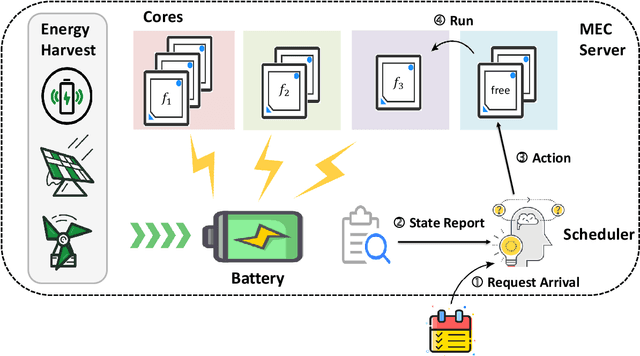

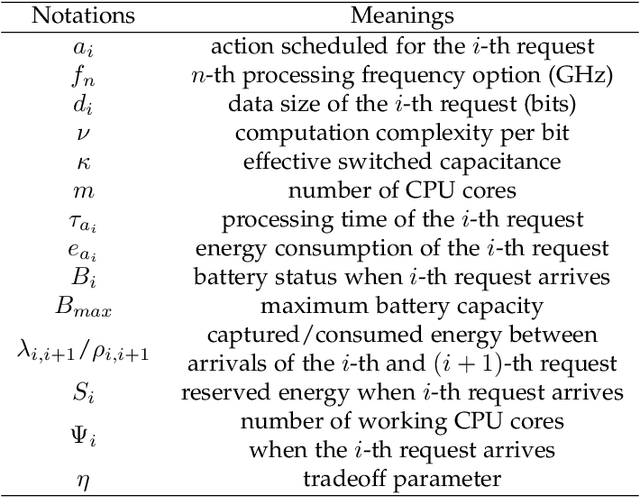

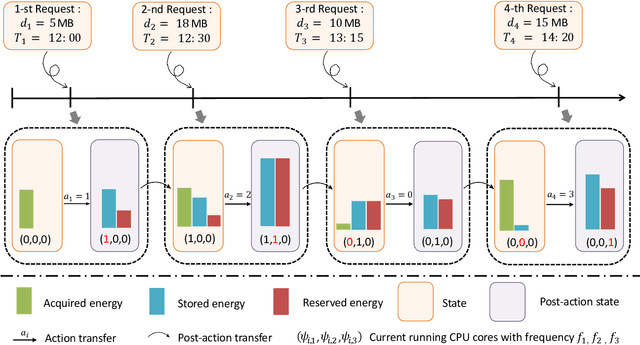

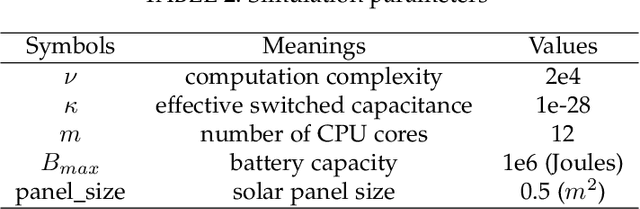

Adaptive Processor Frequency Adjustment for Mobile Edge Computing with Intermittent Energy Supply

Feb 10, 2021

Abstract: With astonishing speed, bandwidth, and scale, Mobile Edge Computing (MEC) has played an increasingly important role in the next generation of connectivity and service delivery. Yet, along with the massive deployment of MEC servers, the ensuing energy issue is becoming increasingly urgent. In the current context, the large-scale deployment of renewable-energy-supplied MEC servers is perhaps the most promising solution to this energy issue. Nonetheless, because of the intermittent nature of their power sources, these specially designed MEC servers must be more cautious about their energy usage in order to maintain both service sustainability and service standards. Targeting optimization in a single-server MEC scenario, in this paper we propose NAFA, an adaptive processor frequency adjustment solution, to enable effective planning of the server's energy usage. By learning from historical data that reveals request arrival and energy harvesting patterns, the deep reinforcement learning-based solution is capable of intelligently scheduling the server's processor frequency, so as to strike a good balance between service sustainability and service quality. The superior performance of NAFA is substantiated by real-data-based experiments, in which NAFA demonstrates up to a 20% increase in average request acceptance ratio and up to a 50% reduction in average request processing time.
