Xiong Chen

SimuSOE: A Simulated Snoring Dataset for Obstructive Sleep Apnea-Hypopnea Syndrome Evaluation during Wakefulness

Jul 10, 2024

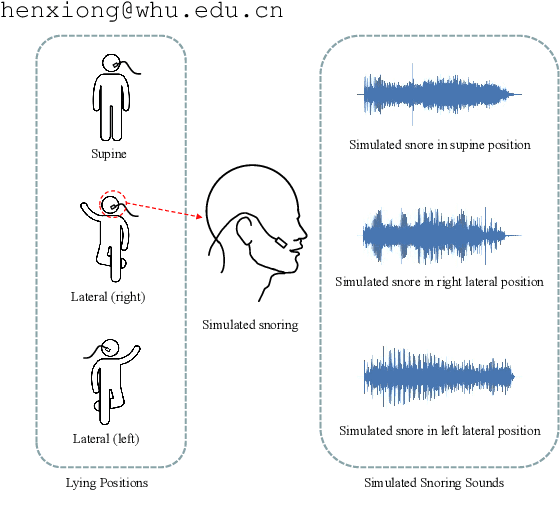

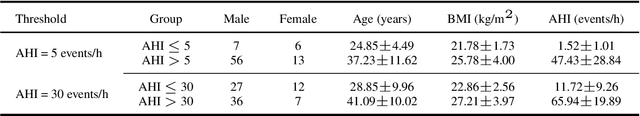

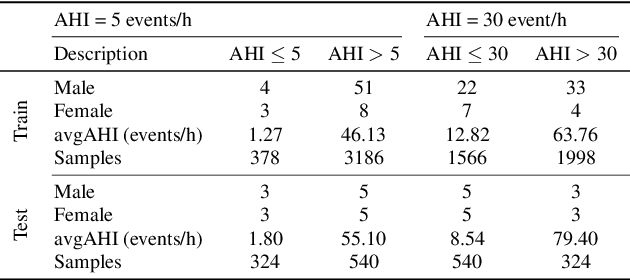

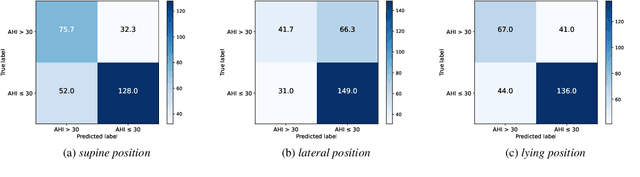

Abstract: Obstructive Sleep Apnea-Hypopnea Syndrome (OSAHS) is a prevalent chronic breathing disorder caused by upper airway obstruction. Previous studies advanced OSAHS evaluation through machine learning-based systems trained on sleep snoring or speech signal datasets. However, constructing datasets for training a precise and rapid OSAHS evaluation system is challenging, since 1) collecting sleep snores is time-consuming and 2) the speech signal is limited in how well it reflects upper airway obstruction. In this paper, we propose a new snoring dataset for OSAHS evaluation, named SimuSOE, which introduces a novel and time-effective snoring collection method to tackle these problems. In particular, we adopt simulated snoring, a type of snore intentionally emitted by patients, in place of natural snoring. Experimental results indicate that the simulated snoring signal during wakefulness can serve as an effective feature in OSAHS preliminary screening.

A Snoring Sound Dataset for Body Position Recognition: Collection, Annotation, and Analysis

Jul 25, 2023

Abstract: Obstructive Sleep Apnea-Hypopnea Syndrome (OSAHS) is a chronic breathing disorder caused by a blockage in the upper airways. Snoring is a prominent symptom of OSAHS, and previous studies have attempted to identify the obstruction site of the upper airways from snoring sounds. Despite some progress, classifying the obstruction site remains challenging in real-world clinical settings because sleep body position influences the upper airways. To address this challenge, this paper proposes a snore-based sleep body position recognition dataset (SSBPR) consisting of 7570 snoring recordings with six distinct labels for sleep body position: supine, supine but left lateral head, supine but right lateral head, left-side lying, right-side lying and prone. Experimental results show that snoring sounds exhibit acoustic features that can be used effectively to identify body posture during sleep in real-world scenarios.

Learning Discriminative Features with Multiple Granularities for Person Re-Identification

Aug 14, 2018

Abstract: The combination of global and partial features has been an essential solution for improving discriminative performance in person re-identification (Re-ID) tasks. Previous part-based methods mainly focus on locating regions with specific pre-defined semantics to learn local representations, which increases learning difficulty and is neither efficient nor robust in scenarios with large variances. In this paper, we propose an end-to-end feature learning strategy integrating discriminative information with various granularities. We carefully design the Multiple Granularity Network (MGN), a multi-branch deep network architecture consisting of one branch for global feature representations and two branches for local feature representations. Instead of learning on semantic regions, we uniformly partition the images into several stripes, and vary the number of parts in different local branches to obtain local feature representations with multiple granularities. Comprehensive experiments on mainstream evaluation datasets, including Market-1501, DukeMTMC-reid and CUHK03, indicate that our method robustly achieves state-of-the-art performance and outperforms existing approaches by a large margin. For example, on the Market-1501 dataset in single query mode, we achieve a state-of-the-art result of Rank-1/mAP=96.6%/94.2% after re-ranking.
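
The uniform stripe partitioning described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a single-channel feature map stored as nested lists, uses max pooling as a stand-in for whatever pooling the network applies, and the part counts (1, 2, 3) and the names `split_into_stripes` and `multi_granularity_features` are hypothetical choices made for illustration.

```python
from typing import Dict, List, Sequence

# Assumption: one channel, H rows by W columns, as plain nested lists.
FeatureMap = List[List[float]]


def split_into_stripes(fmap: FeatureMap, num_parts: int) -> List[FeatureMap]:
    """Uniformly partition a feature map into horizontal stripes."""
    height = len(fmap)
    if num_parts <= 0 or height % num_parts != 0:
        raise ValueError("height must be a positive multiple of num_parts")
    stripe_height = height // num_parts
    return [
        fmap[i * stripe_height:(i + 1) * stripe_height]
        for i in range(num_parts)
    ]


def max_pool(stripe: FeatureMap) -> float:
    """Reduce one stripe to a single value (illustrative pooling choice)."""
    return max(max(row) for row in stripe)


def multi_granularity_features(
    fmap: FeatureMap, part_counts: Sequence[int] = (1, 2, 3)
) -> Dict[int, List[float]]:
    """Pool the same map at several granularities, one entry per branch.

    A part count of 1 plays the role of the global branch; larger counts
    play the role of local branches with finer stripes.
    """
    return {
        n: [max_pool(s) for s in split_into_stripes(fmap, n)]
        for n in part_counts
    }


if __name__ == "__main__":
    # Toy 6x2 map whose values increase from top to bottom.
    toy = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10], [11, 12]]
    print(multi_granularity_features(toy))
```

Each key in the returned dictionary corresponds to one branch, so varying the part counts changes how finely the same input is divided without requiring any semantic region detection.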