Xinglu Wang

Artificial Intelligence for Operations Research: Revolutionizing the Operations Research Process

Jan 06, 2024

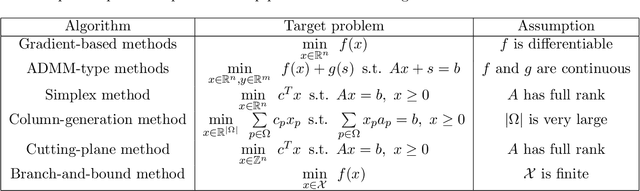

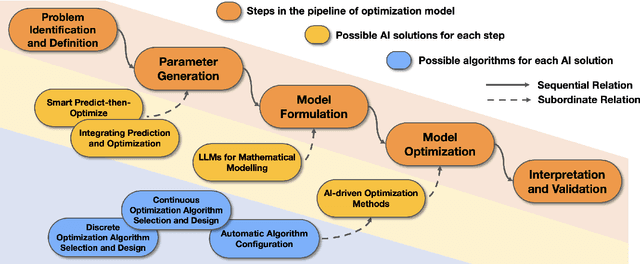

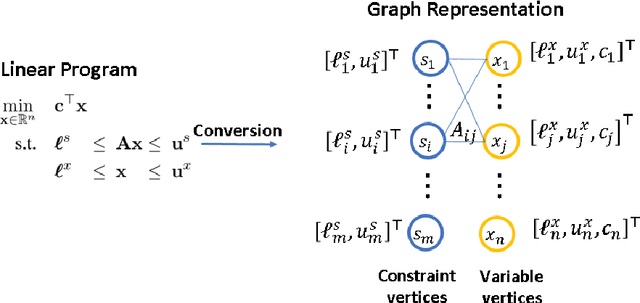

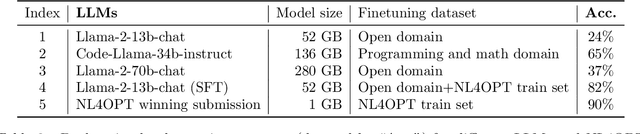

Abstract: The rapid advancement of artificial intelligence (AI) techniques has opened up new opportunities to revolutionize various fields, including operations research (OR). This survey paper explores the integration of AI within the OR process (AI4OR) to enhance its effectiveness and efficiency across multiple stages, such as parameter generation, model formulation, and model optimization. By providing a comprehensive overview of the state-of-the-art and examining the potential of AI to transform OR, this paper aims to inspire further research and innovation in the development of AI-enhanced OR methods and tools. The synergy between AI and OR is poised to drive significant advancements and novel solutions in a multitude of domains, ultimately leading to more effective and efficient decision-making.

ETran: Energy-Based Transferability Estimation

Aug 03, 2023

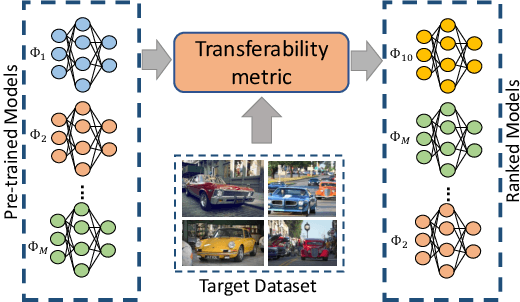

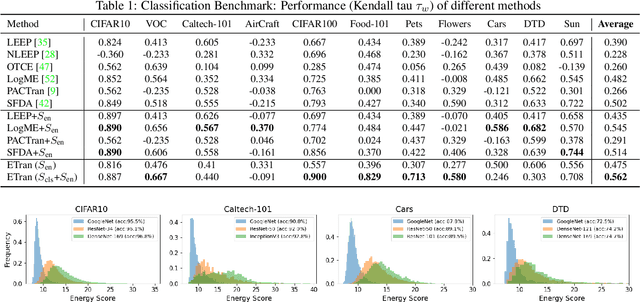

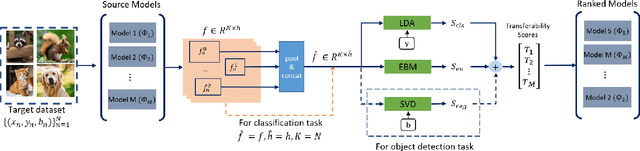

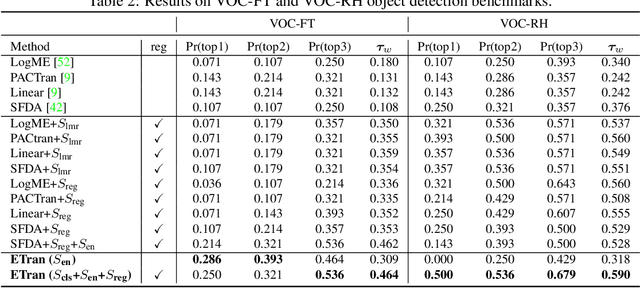

Abstract: This paper addresses the problem of ranking pre-trained models for object detection and image classification. Selecting the best pre-trained model by fine-tuning is an expensive and time-consuming task. Previous works have proposed transferability estimation based on features extracted by the pre-trained models. We argue that quantifying whether the target dataset is in-distribution (IND) or out-of-distribution (OOD) for the pre-trained model is an important factor in transferability estimation. To this end, we propose ETran, an energy-based transferability assessment metric that includes three scores: 1) an energy score, 2) a classification score, and 3) a regression score. We use energy-based models to determine whether the target dataset is OOD or IND for the pre-trained model. In contrast to prior works, ETran is applicable to a wide range of tasks, including classification, regression, and object detection (classification + regression). This is the first work to propose transferability estimation for the object detection task. Our extensive experiments on four benchmarks and two tasks show that ETran outperforms previous works on object detection and classification benchmarks by an average of 21% and 12%, respectively, and achieves state-of-the-art (SOTA) performance in transferability assessment.
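
The energy score that ETran builds on can be illustrated with the standard free-energy function used in energy-based OOD detection, E(x) = -T * log(sum_i exp(f_i(x) / T)), where lower energy indicates a more in-distribution input. The sketch below is a minimal illustration under stated assumptions: it takes some per-sample output vector (called `logits` here), and the dataset-level aggregation in the hypothetical `dataset_energy_score` (a mean of negative energies) is an assumption, not ETran's published formula.

```python
import math
from typing import List, Sequence


def free_energy(logits: Sequence[float], temperature: float = 1.0) -> float:
    """Free energy E(x) = -T * log(sum_i exp(f_i(x) / T)).

    Uses the max-subtraction trick so large logits do not overflow exp().
    """
    if not logits:
        raise ValueError("logits must be non-empty")
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scaled))
    return -temperature * log_sum_exp


def dataset_energy_score(all_logits: List[Sequence[float]], temperature: float = 1.0) -> float:
    """Hypothetical dataset-level score: mean negative energy over target samples.

    Higher values suggest the target data looks more in-distribution (IND)
    to the pre-trained model; this aggregation rule is an assumption.
    """
    return sum(-free_energy(x, temperature) for x in all_logits) / len(all_logits)


# Toy outputs from two hypothetical pre-trained models on the same target set.
confident_model = [[8.0, 0.5, 0.1], [0.2, 7.5, 0.3]]
uncertain_model = [[0.4, 0.3, 0.5], [0.1, 0.2, 0.0]]

for name, outputs in [("confident", confident_model), ("uncertain", uncertain_model)]:
    print(name, round(dataset_energy_score(outputs), 3))
```

Ranking candidate models by such a score (higher first) is one way an energy term could feed a transferability ranking; ETran additionally combines its energy score with classification and regression scores, which are not sketched here.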

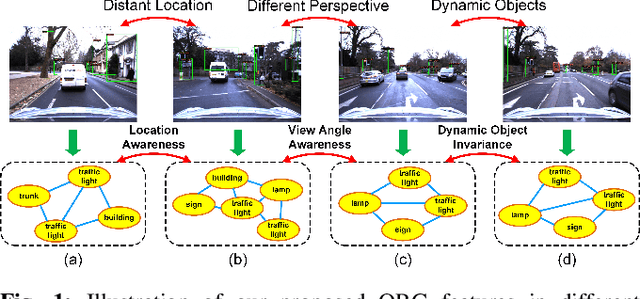

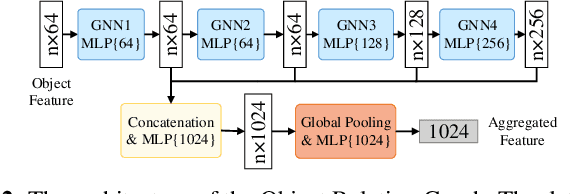

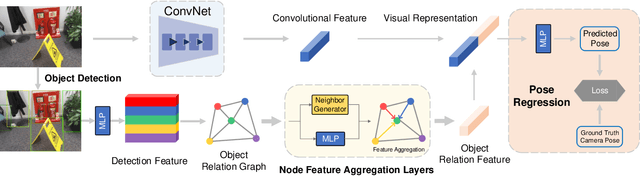

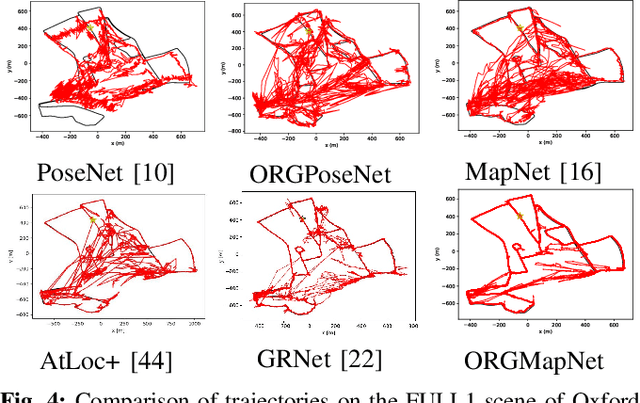

Objects Matter: Learning Object Relation Graph for Robust Camera Relocalization

May 26, 2022

Abstract: Visual relocalization aims to estimate the pose of a camera from one or more images. In recent years, deep-learning-based pose regression methods have attracted considerable attention. They predict absolute poses without relying on any prebuilt maps or stored images, which makes relocalization very efficient. However, robust relocalization in environments with complex appearance changes and real-world dynamics remains very challenging. In this paper, we propose to enhance the distinctiveness of image features by extracting the deep relationships among objects. In particular, we extract objects in the image and construct a deep object relation graph (ORG) that incorporates the semantic connections and relative spatial clues of the objects. We integrate our ORG module into several popular pose regression models. Extensive experiments on various public indoor and outdoor datasets demonstrate that our method significantly improves performance and outperforms previous approaches.
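
To make the idea of an object relation graph concrete, the sketch below builds a fully connected directed graph over detected objects: each edge records the semantic pair of object classes and a relative spatial clue. The spatial encoding (center offsets scaled by the source box size, plus log width and height ratios) is a simple hand-crafted choice assumed here, and `DetectedObject` and `build_relation_graph` are hypothetical names; the paper's ORG learns deep relation features rather than using fixed ones like these.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (center_x, center_y, width, height)


@dataclass
class DetectedObject:
    label: str  # semantic class from a (hypothetical) object detector
    box: Box


def relative_geometry(a: DetectedObject, b: DetectedObject) -> Tuple[float, float, float, float]:
    """Spatial clue of b relative to a: center offsets scaled by a's size, log size ratios."""
    ax, ay, aw, ah = a.box
    bx, by, bw, bh = b.box
    return ((bx - ax) / aw, (by - ay) / ah, math.log(bw / aw), math.log(bh / ah))


def build_relation_graph(objects: List[DetectedObject]) -> Dict[Tuple[int, int], dict]:
    """Fully connected directed graph: one edge per ordered pair of distinct objects."""
    edges = {}
    for i, a in enumerate(objects):
        for j, b in enumerate(objects):
            if i != j:
                edges[(i, j)] = {
                    "semantic": (a.label, b.label),  # which object classes are related
                    "spatial": relative_geometry(a, b),
                }
    return edges


scene = [
    DetectedObject("chair", (100.0, 100.0, 50.0, 50.0)),
    DetectedObject("table", (150.0, 100.0, 100.0, 50.0)),
    DetectedObject("lamp", (120.0, 40.0, 20.0, 40.0)),
]
graph = build_relation_graph(scene)
print(len(graph), graph[(0, 1)])
```

In a learned model, these edge attributes would typically be embedded and processed by a graph network so that the resulting object-aware features can augment the image features used for pose regression; that learned step is omitted here.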

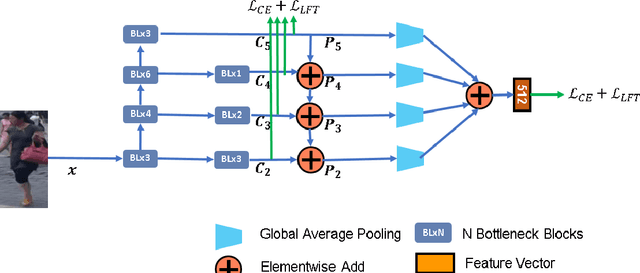

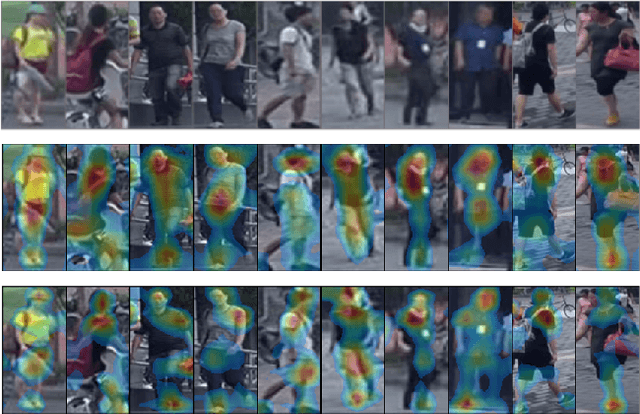

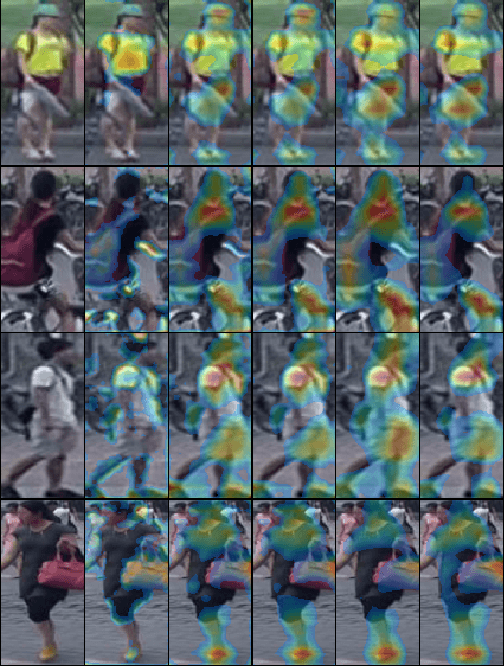

Adversarial Multi-scale Feature Learning for Person Re-identification

Dec 28, 2020

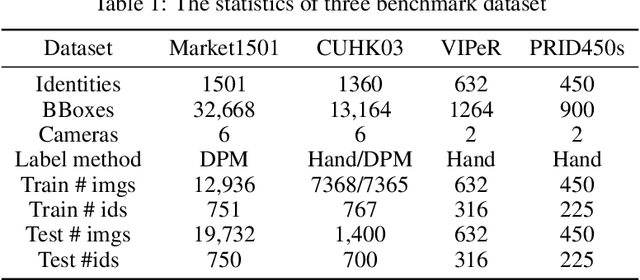

Abstract: Person re-identification (Person ReID) is an important topic in intelligent surveillance and computer vision. It aims to accurately measure visual similarities between person images to determine whether two images correspond to the same person. The key to accurately measuring visual similarity is learning discriminative features that not only capture clues at different spatial scales but also support joint inference across multiple scales, with the ability to determine the reliability and ID-relevance of each clue. To achieve these goals, we propose to improve Person ReID performance from two perspectives: (1) multi-scale feature learning (MSFL), which consists of cross-scale information propagation (CSIP) and multi-scale feature fusion (MSFF), to dynamically fuse features across different scales; and (2) a multi-scale gradient regularizer (MSGR), to emphasize ID-related factors and ignore irrelevant factors in an adversarial manner. Combining MSFL and MSGR, our method achieves state-of-the-art performance on four commonly used person ReID datasets with negligible test-time computational overhead.
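
One generic way to "dynamically fuse features across different scales" is to weight each scale's feature vector by a softmax over per-scale relevance scores, so the fusion weights can change from image to image. The sketch below assumes the per-scale features and scores are already computed (in a real network the scores would come from a learned gating branch); it is not the paper's CSIP/MSFF design, and `fuse_scales` is a hypothetical name.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_scales(features: Sequence[Sequence[float]], scores: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Weighted sum of per-scale feature vectors, with weights = softmax(scores).

    features[k] is the feature vector from scale k; all must share one dimension.
    """
    if len(features) != len(scores):
        raise ValueError("need one score per scale")
    dim = len(features[0])
    if any(len(f) != dim for f in features):
        raise ValueError("all scales must have the same feature dimension")
    weights = softmax(scores)
    fused = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
    return fused, weights


# Toy example: three scales (e.g. global, part-level, fine-grained), 4-d features.
per_scale = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
fused, weights = fuse_scales(per_scale, scores=[2.0, 0.5, 0.5])
print([round(x, 3) for x in fused], [round(w, 3) for w in weights])
```

Because the weights are computed per input, a scale whose clue is unreliable for a particular image can be down-weighted; the adversarial MSGR component is not sketched.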

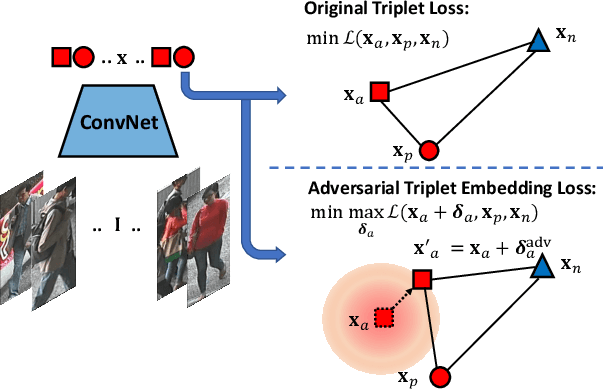

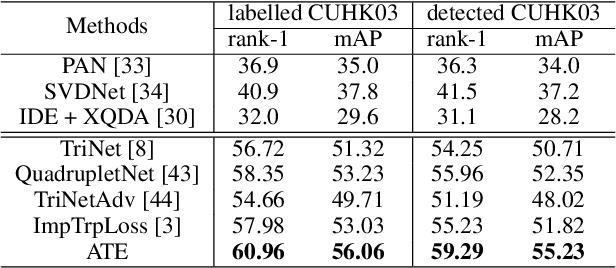

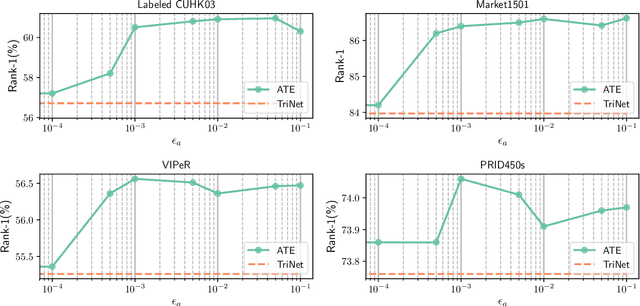

Person Re-identification with Adversarial Triplet Embedding

Dec 28, 2020

Abstract: Person re-identification is an important task with widespread applications in video surveillance for public security. In the past few years, deep learning networks trained with triplet loss have become popular for this problem. However, the triplet loss often converges to poor local optima and relies heavily on the strategy of hard example mining. In this paper, we propose to address this problem with a new deep metric learning method called Adversarial Triplet Embedding (ATE), in which we simultaneously generate adversarial triplets and learn a discriminative feature embedding in a unified framework. In particular, adversarial triplets are generated by introducing adversarial perturbations into the training process. This adversarial game is converted into a minimax problem so as to have an optimal solution from a theoretical point of view. Extensive experiments on several benchmark datasets demonstrate the effectiveness of the approach against state-of-the-art methods.
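
The minimax idea behind adversarial triplets can be sketched in embedding space with the standard triplet loss max(0, d(a, p) - d(a, n) + m). Under the simplifying assumption that the adversary may move the positive and negative embeddings by at most eps in L2 norm (rather than perturbing input images, as a full training pipeline would), the inner maximization has a closed form: push the positive directly away from the anchor and pull the negative directly toward it. The function names and the margin value are illustrative assumptions, not ATE's exact formulation.

```python
import math
from typing import List, Sequence


def l2(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def triplet_loss(a, p, n, margin: float = 0.3) -> float:
    """Standard triplet margin loss with Euclidean distance."""
    return max(0.0, l2(a, p) - l2(a, n) + margin)


def shift_radially(x: Sequence[float], anchor: Sequence[float], step: float) -> List[float]:
    """Move x along the anchor->x direction by `step` (a negative step moves toward the anchor)."""
    d = l2(x, anchor)
    if d == 0.0:
        # Direction is undefined when x coincides with the anchor; pick the first axis.
        direction = [1.0] + [0.0] * (len(x) - 1)
    else:
        direction = [(xi - ai) / d for xi, ai in zip(x, anchor)]
    return [xi + step * di for xi, di in zip(x, direction)]


def adversarial_triplet_loss(a, p, n, eps: float, margin: float = 0.3) -> float:
    """Worst-case triplet loss when p and n may each move by at most eps (L2 ball)."""
    p_adv = shift_radially(p, a, eps)                  # positive pushed away: d(a, p) grows by eps
    n_adv = shift_radially(n, a, -min(eps, l2(n, a)))  # negative pulled in, never past the anchor
    return triplet_loss(a, p_adv, n_adv, margin)


anchor, positive, negative = [0.0, 0.0], [1.0, 0.0], [2.0, 0.0]
print(triplet_loss(anchor, positive, negative))                       # clean triplet
print(adversarial_triplet_loss(anchor, positive, negative, eps=0.5))  # adversarial triplet
```

In the toy triplet, the clean loss is already zero, while the adversarial version is positive, so the outer minimization still receives a training signal without explicitly mining hard examples. In a full minimax setup, the embedding network would then be updated to minimize this worst-case loss.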