Xiaoye Miao

E2PL: Effective and Efficient Prompt Learning for Incomplete Multi-view Multi-Label Class Incremental Learning

Jan 23, 2026

Abstract:Multi-view multi-label classification (MvMLC) is indispensable for modern web applications aggregating information from diverse sources. However, real-world web-scale settings are rife with missing views and continuously emerging classes, which pose significant obstacles to robust learning. Prevailing methods are ill-equipped for this reality, as they either lack adaptability to new classes or incur exponential parameter growth when handling all possible missing-view patterns, severely limiting their scalability in web environments. To systematically address this gap, we formally introduce a novel task, termed incomplete multi-view multi-label class incremental learning (IMvMLCIL), which requires models to simultaneously address heterogeneous missing views and dynamic class expansion. To tackle this task, we propose E2PL, an Effective and Efficient Prompt Learning framework for IMvMLCIL. E2PL unifies two novel prompt designs: task-tailored prompts for class-incremental adaptation and missing-aware prompts for the flexible integration of arbitrary view-missing scenarios. To address the exponential parameter explosion inherent in missing-aware prompts, we devise an efficient prototype tensorization module, which leverages atomic tensor decomposition to reduce the prompt parameter complexity from exponential to linear w.r.t. the number of views. We further incorporate a dynamic contrastive learning strategy to explicitly model the complex dependencies among diverse missing-view patterns, thus enhancing the model's robustness. Extensive experiments on three benchmarks demonstrate that E2PL consistently outperforms state-of-the-art methods in both effectiveness and efficiency. The codes and datasets are available at https://anonymous.4open.science/r/code-for-E2PL.
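To make the exponential-versus-linear claim concrete, the following is a minimal sketch and not the E2PL implementation. It assumes (hypothetically) that a prompt for any missing-view pattern is composed as an elementwise product of small per-view "atom" vectors, one for each view in each state (present or missing). The function names, the composition rule, and the parameter counts are illustrative assumptions; the abstract does not specify the actual decomposition.

```python
def naive_param_count(num_views, prompt_dim):
    # One dedicated prompt per non-empty missing-view pattern: (2^V - 1) prompts.
    return (2 ** num_views - 1) * prompt_dim


def factorized_param_count(num_views, prompt_dim):
    # Assumed scheme: two atom vectors per view (present / missing): 2 * V vectors.
    return 2 * num_views * prompt_dim


def compose_prompt(atoms, pattern):
    """Compose a prompt for one pattern from per-view atoms.

    atoms[v][s] is the atom vector of view v in state s (1 = present, 0 = missing).
    pattern is a tuple of 0/1 flags, one per view.
    The elementwise product is an illustrative choice, not the paper's rule.
    """
    dim = len(atoms[0][0])
    prompt = [1.0] * dim
    for view, state in enumerate(pattern):
        prompt = [p * a for p, a in zip(prompt, atoms[view][state])]
    return prompt


# Two views, prompt dimension 2 (toy values).
atoms = [
    [[1.0, 2.0], [3.0, 4.0]],  # view 0: missing atom, present atom
    [[0.5, 0.5], [2.0, 1.0]],  # view 1: missing atom, present atom
]
prompt_view0_only = compose_prompt(atoms, (1, 0))
```

Under these assumptions, six views with an 8-dimensional prompt need 504 parameters in the naive scheme but 96 in the factorized one, and the gap widens exponentially as views are added.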

ZeroED: Hybrid Zero-shot Error Detection through Large Language Model Reasoning

Apr 06, 2025

Abstract:Error detection (ED) in tabular data is crucial yet challenging due to diverse error types and the need for contextual understanding. Traditional ED methods often rely heavily on manual criteria and labels, making them labor-intensive. Large language models (LLMs) can minimize human effort but struggle with errors requiring a comprehensive understanding of data context. In this paper, we propose ZeroED, a novel hybrid zero-shot error detection framework, which combines LLM reasoning ability with the manual label-based ED pipeline. ZeroED operates in four steps, i.e., feature representation, error labeling, training data construction, and detector training. Initially, to enhance error distinction, ZeroED generates rich data representations using error reason-aware binary features, pre-trained embeddings, and statistical features. Then, ZeroED employs an LLM to label errors holistically through in-context learning, guided by a two-step reasoning process for detailed error detection guidelines. To reduce token costs, LLMs are applied only to representative data selected via clustering-based sampling. High-quality training data is constructed through in-cluster label propagation and LLM augmentation with verification. Finally, a classifier is trained to detect all errors. Extensive experiments on seven public datasets demonstrate that ZeroED substantially outperforms state-of-the-art methods, with up to a 30% improvement in F1 score and up to a 90% reduction in token cost.
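The token-saving step described above (label only cluster representatives, then propagate within each cluster) can be sketched as follows. This is an illustration under stated assumptions, not ZeroED's code: the cluster assignments are taken as given, the representative is simply the first member of each cluster, and `label_fn` is a hypothetical stand-in for the LLM call.

```python
from collections import defaultdict


def propagate_labels(values, cluster_ids, label_fn):
    """Label one representative per cluster and copy its label to all members.

    values      : list of cell values
    cluster_ids : cluster id for each value (same length as values)
    label_fn    : stand-in for the expensive labeler (e.g. an LLM call)
    Returns (labels, number_of_labeler_calls).
    """
    clusters = defaultdict(list)
    for idx, cid in enumerate(cluster_ids):
        clusters[cid].append(idx)

    labels = [None] * len(values)
    calls = 0
    for members in clusters.values():
        representative = members[0]  # assumption: first member represents the cluster
        rep_label = label_fn(values[representative])
        calls += 1
        for idx in members:
            labels[idx] = rep_label  # in-cluster label propagation
    return labels, calls


# Toy column: numeric ages plus placeholder strings that should be flagged as errors.
values = ["42", "41", "N/A", "n/a", "40"]
cluster_ids = [0, 0, 1, 1, 0]
labels, calls = propagate_labels(values, cluster_ids, lambda v: not v.isdigit())
```

Here five cells are labeled with two labeler calls. The propagated labels are only as good as the clustering, which is presumably why the abstract pairs propagation with a verification step.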

Lossless Privacy-Preserving Aggregation for Decentralized Federated Learning

Jan 08, 2025

Abstract:Privacy concerns arise as sensitive data proliferate. Although decentralized federated learning (DFL) aggregates gradients from neighbors to avoid direct data transmission, it still risks indirect data leakage through the transmitted gradients. Existing privacy-preserving methods for DFL add noise to gradients. They either diminish the model predictive accuracy or suffer from ineffective gradient protection. In this paper, we propose a novel lossless privacy-preserving aggregation rule named LPPA to enhance gradient protection as much as possible without loss of DFL model predictive accuracy. LPPA subtly injects the noise difference between the sent and received noise into transmitted gradients for gradient protection. The noise difference incorporates neighbors' randomness for each client, effectively safeguarding against data leaks. LPPA employs the noise flow conservation theory to ensure that the noise impact can be globally eliminated. The global sum of all noise differences remains zero, ensuring that accurate gradient aggregation is unaffected and the model accuracy remains intact. We theoretically prove that the privacy-preserving capacity of LPPA is $\sqrt{2}$ times greater than that of noise addition, while maintaining comparable model accuracy to the standard DFL aggregation without noise injection. Experimental results verify the theoretical findings and show that LPPA achieves a 13% mean improvement in accuracy over noise addition. We also demonstrate the effectiveness of LPPA in protecting raw data and guaranteeing lossless model accuracy.
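The zero-sum property of the noise differences can be checked with a small sketch. This is an illustration of the conservation idea under assumptions, not the LPPA rule itself: the ring topology, the Gaussian noise, and the function name `noise_differences` are hypothetical choices. Each client sends an independent noise vector to every neighbor and perturbs its gradient by (noise sent) minus (noise received).

```python
import random


def noise_differences(neighbors, dim, rng):
    """Return, per client, the sum of noise it sent minus the noise it received.

    neighbors : dict mapping client id -> list of neighbor ids (symmetric graph)
    dim       : gradient dimension
    rng       : random.Random instance
    """
    # One independent noise vector per directed edge (sender, receiver).
    sent = {
        (i, j): [rng.gauss(0.0, 1.0) for _ in range(dim)]
        for i in neighbors
        for j in neighbors[i]
    }
    diffs = {}
    for i in neighbors:
        diff = [0.0] * dim
        for j in neighbors[i]:
            for k in range(dim):
                diff[k] += sent[(i, j)][k] - sent[(j, i)][k]
        diffs[i] = diff
    return diffs


# Four clients on a ring, 2-dimensional toy gradients.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
grads = {i: [float(i), 2.0 * i] for i in ring}
diffs = noise_differences(ring, dim=2, rng=random.Random(0))
masked = {i: [g + d for g, d in zip(grads[i], diffs[i])] for i in ring}

true_sum = [sum(grads[i][k] for i in ring) for k in range(2)]
masked_sum = [sum(masked[i][k] for i in ring) for k in range(2)]
```

Every noise vector appears once with a plus sign (at its sender) and once with a minus sign (at its receiver), so the perturbations cancel in the global sum, up to floating-point rounding, while each individual transmitted gradient is still masked.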

Gradient Purification: Defense Against Poisoning Attack in Decentralized Federated Learning

Jan 08, 2025

Abstract:Decentralized federated learning (DFL) is inherently vulnerable to poisoning attacks, as malicious clients can transmit manipulated model gradients to neighboring clients. Existing defense methods either reject suspicious gradients per iteration or restart DFL aggregation after detecting all malicious clients. They overlook the potential accuracy benefit from the discarded malicious gradients. In this paper, we propose a novel gradient purification defense, named GPD, that integrates seamlessly with existing DFL aggregation to defend against poisoning attacks. It aims to mitigate the harm in model gradients while retaining the benefit in model weights for enhancing accuracy. For each benign client in GPD, a recording variable is designed to track the historically aggregated gradients from one of its neighbors. It allows benign clients to precisely detect malicious neighbors and swiftly mitigate aggregated malicious gradients via historical consistency checks. Upon mitigation, GPD optimizes model weights by aggregating gradients solely from benign clients. This retains the previously beneficial portions from malicious clients and exploits the contributions from benign clients, thereby significantly enhancing the model accuracy. We analyze the convergence of GPD, as well as its ability to attain high accuracy. Extensive experiments over three datasets demonstrate that GPD is capable of mitigating poisoning attacks under both iid and non-iid data distributions. It significantly outperforms state-of-the-art defenses in terms of accuracy against various poisoning attacks.

Differentiable and Scalable Generative Adversarial Models for Data Imputation

Jan 10, 2022

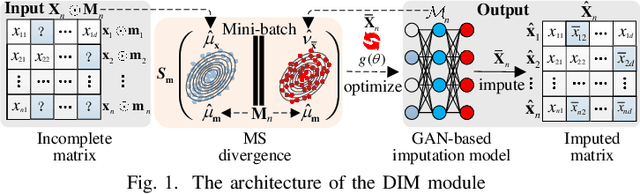

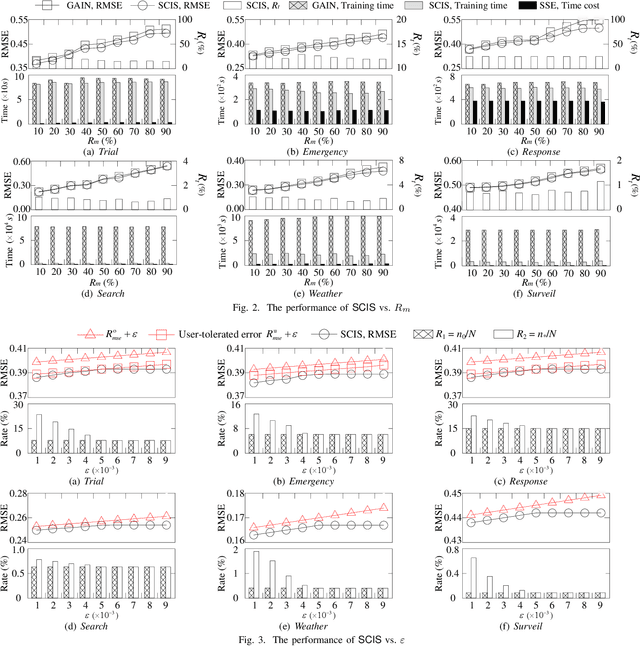

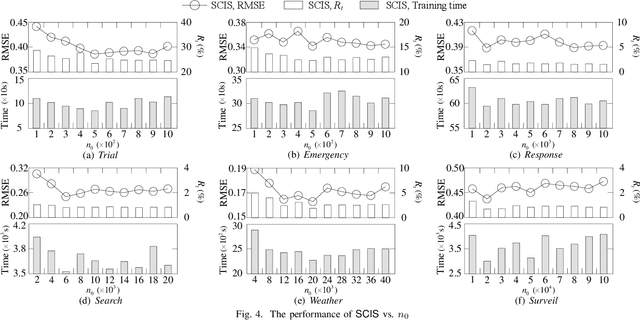

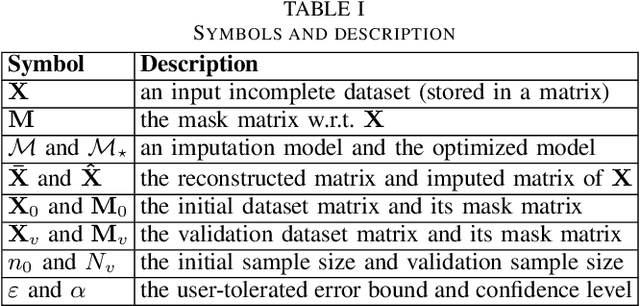

Abstract:Data imputation has been extensively explored to solve the missing data problem. The dramatically increasing volume of incomplete data makes the imputation models computationally infeasible in many real-life applications. In this paper, we propose an effective scalable imputation system named SCIS to significantly speed up the training of the differentiable generative adversarial imputation models under accuracy guarantees for large-scale incomplete data. SCIS consists of two modules, differentiable imputation modeling (DIM) and sample size estimation (SSE). DIM leverages a new masking Sinkhorn divergence function to make an arbitrary generative adversarial imputation model differentiable, while for such a differentiable imputation model, SSE can estimate an appropriate sample size to ensure the user-specified imputation accuracy of the final model. Extensive experiments on several real-life large-scale datasets demonstrate that our proposed system can accelerate the generative adversarial model training by 7.1x. Using around 7.6% of the samples, SCIS yields competitive accuracy with the state-of-the-art imputation methods in a much shorter computation time.