Xiaoyang Tang

Multimodal-Enhanced Objectness Learner for Corner Case Detection in Autonomous Driving

Feb 03, 2024

Abstract: Previous works on object detection have achieved high accuracy in closed-set scenarios, but their performance in open-world scenarios is not satisfactory. One of the challenging open-world problems is corner case detection in autonomous driving. Existing detectors struggle with these cases because they rely heavily on visual appearance and generalize poorly. In this paper, we propose a solution that reduces the discrepancy between known and unknown classes, and we introduce a multimodal-enhanced objectness notion learner. Leveraging both vision-centric and image-text modalities, our semi-supervised learning framework imparts objectness knowledge to the student model, enabling class-aware detection. Our approach, Multimodal-Enhanced Objectness Learner (MENOL) for Corner Case Detection, significantly improves recall for novel classes with lower training costs. By achieving 76.6% mAR-corner and 79.8% mAR-agnostic on the CODA-val dataset with just 5100 labeled training images, MENOL outperforms the baseline ORE by 71.3% and 60.6%, respectively. The code will be available at https://github.com/tryhiseyyysum/MENOL.

The Automatic Identification of Butterfly Species

Mar 18, 2018

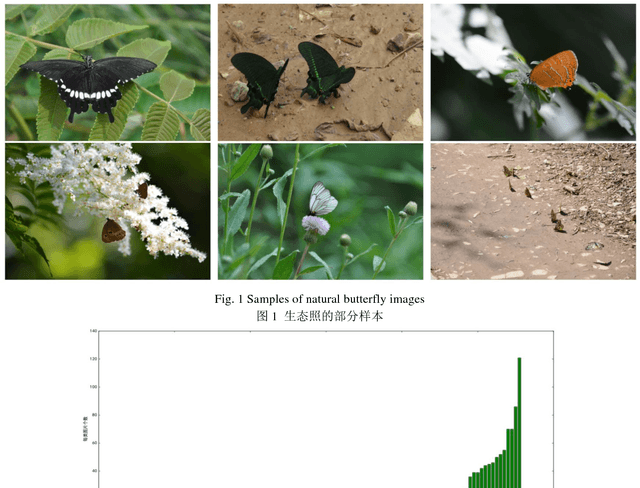

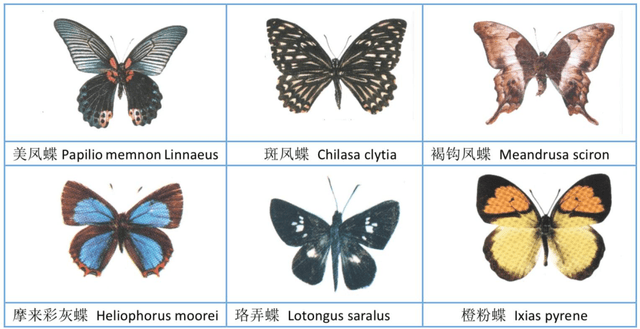

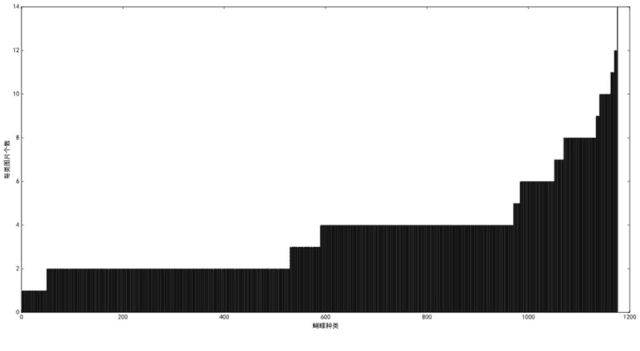

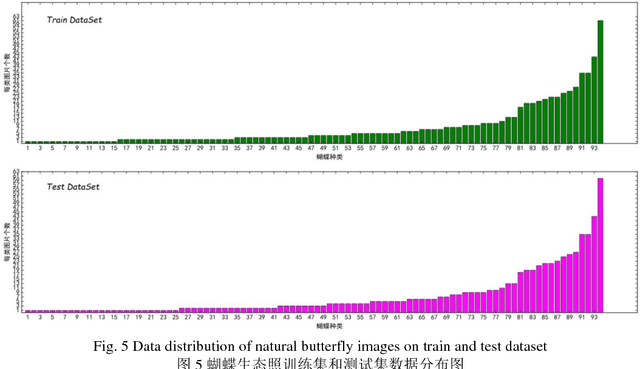

Abstract: The available butterfly data sets cover only a limited number of species, and their images are always standard patterns, with no images of butterflies in their living environment. To overcome these limitations, we build a butterfly data set composed of all species of butterflies in China, with 4270 standard pattern images of 1176 butterfly species and 1425 living-environment images of 111 species. We propose to use the deep learning technique Faster R-CNN to train an automatic butterfly identification system that performs both butterfly position detection and species recognition. We remove species with only one living-environment image from the data set, then partition the remaining living-environment images into two subsets: one serves as the test subset, and the other serves as a training subset, combined either with all standard pattern images or with only the standard pattern images of the species that also appear in the living-environment images. To construct the training subset for Faster R-CNN, nine methods were used to augment the training images, including vertical and horizontal flipping, rotation by different angles, adding noise, blurring, and contrast adjustment. Three prediction models were trained. The mAP (mean Average Precision) criterion was used to evaluate the performance of the prediction models. The experimental results demonstrate that our Faster R-CNN based automatic butterfly identification system performs well, with its worst mAP reaching 60%, and that it can simultaneously detect the positions of multiple butterflies in a single living-environment image and recognize their species.
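The augmentation step described in this abstract can be illustrated with a minimal NumPy sketch. It covers only four of the listed operations (horizontal flip, vertical flip, additive noise, contrast adjustment) and is not the authors' implementation. The function names, the `[x_min, y_min, x_max, y_max]` pixel box layout, and the default `sigma` and `factor` values are all assumptions made for illustration. For a detector, the flips must also update the bounding boxes, which is what the first two functions show.

```python
import numpy as np


def flip_left_right(image, boxes):
    """Mirror an H x W x C image horizontally and update its boxes.

    Assumes boxes are rows of [x_min, y_min, x_max, y_max] in pixels.
    """
    width = image.shape[1]
    flipped = image[:, ::-1].copy()
    new_boxes = boxes.copy()
    # The old right edge becomes the new left edge, and vice versa.
    new_boxes[:, 0] = width - boxes[:, 2]
    new_boxes[:, 2] = width - boxes[:, 0]
    return flipped, new_boxes


def flip_up_down(image, boxes):
    """Mirror an H x W x C image vertically and update its boxes."""
    height = image.shape[0]
    flipped = image[::-1].copy()
    new_boxes = boxes.copy()
    # The old bottom edge becomes the new top edge, and vice versa.
    new_boxes[:, 1] = height - boxes[:, 3]
    new_boxes[:, 3] = height - boxes[:, 1]
    return flipped, new_boxes


def add_gaussian_noise(image, sigma=10.0, seed=0):
    """Add zero-mean Gaussian noise; sigma is an illustrative default."""
    rng = np.random.default_rng(seed)
    noisy = image.astype(np.float32) + rng.normal(0.0, sigma, image.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


def adjust_contrast(image, factor=1.5):
    """Scale pixel deviations from the global mean by `factor`."""
    pixels = image.astype(np.float32)
    mean = pixels.mean()
    out = (pixels - mean) * factor + mean
    return np.clip(out, 0, 255).astype(np.uint8)
```

Noise and contrast changes leave the boxes untouched, so only the geometric operations need to return updated annotations. Rotation by arbitrary angles and blurring are omitted here because they need interpolation and box re-fitting choices that the abstract does not specify.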
