Xiaoyan Cao

TOPIC: A Parallel Association Paradigm for Multi-Object Tracking under Complex Motions and Diverse Scenes

Aug 22, 2023

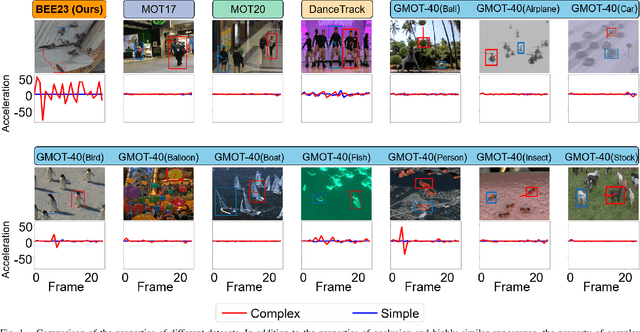

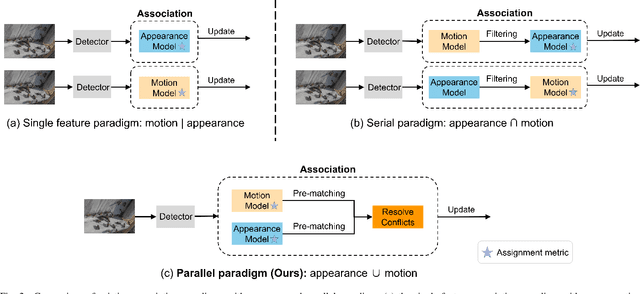

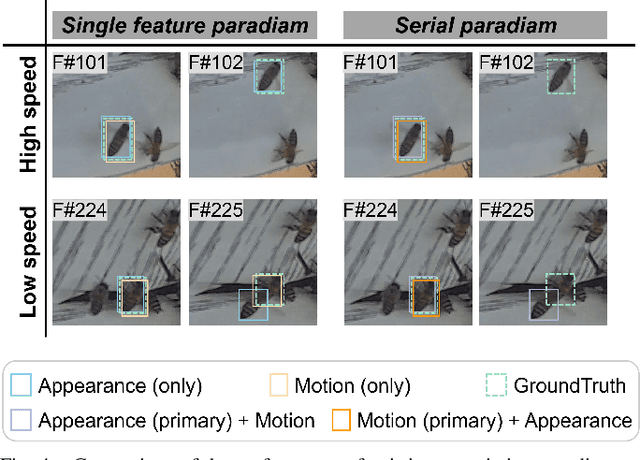

Abstract: Video data and algorithms have been driving advances in multi-object tracking (MOT). While existing MOT datasets focus on occlusion and appearance similarity, complex motion patterns are widespread yet overlooked. To address this issue, we introduce a new dataset called BEE23 to highlight complex motions. Identity association algorithms have long been the focus of MOT research. Existing trackers can be categorized into two association paradigms: the single-feature paradigm (based on either motion or appearance features) and the serial paradigm (one feature serves as primary while the other is secondary). However, these paradigms cannot fully utilize different features. In this paper, we propose a parallel paradigm and present the Two rOund Parallel matchIng meChanism (TOPIC) to implement it. TOPIC leverages both motion and appearance features and adaptively selects the preferable one as the assignment metric based on motion level. Moreover, we provide an Attention-based Appearance Reconstruct Module (AARM) to reconstruct appearance feature embeddings, thus enhancing the representation of appearance features. Comprehensive experiments show that our approach achieves state-of-the-art performance on four public datasets and BEE23. Notably, our proposed parallel paradigm surpasses existing association paradigms by a large margin, e.g., reducing false negatives by 12% to 51% compared to the single-feature association paradigm. The dataset and association paradigm introduced in this work offer a fresh perspective for advancing the MOT field. The source code and dataset are available at https://github.com/holmescao/TOPICTrack.
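As a rough illustration of the parallel idea in this abstract, the sketch below matches tracks to detections twice, once by motion (IoU) and once by appearance (cosine distance), and then lets each track's motion level decide which result to trust when the two disagree. This is not the authors' TOPIC implementation: the greedy matcher, the `motion_level` field, the thresholds, and the rule that fast-moving tracks prefer appearance are all assumptions made for the example.

```python
from math import sqrt


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def cosine_distance(u, v):
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(u, v))
    nu = sqrt(sum(x * x for x in u))
    nv = sqrt(sum(y * y for y in v))
    if nu == 0 or nv == 0:
        return 1.0
    return 1.0 - dot / (nu * nv)


def greedy_match(cost, max_cost):
    """One-to-one matching that repeatedly takes the cheapest remaining pair.

    A simple stand-in for an optimal assignment solver (assumption).
    """
    pairs = sorted((c, i, j) for i, row in enumerate(cost) for j, c in enumerate(row))
    used_tracks, used_dets, match = set(), set(), {}
    for c, i, j in pairs:
        if c > max_cost:
            break  # all remaining pairs are too costly
        if i in used_tracks or j in used_dets:
            continue
        match[i] = j
        used_tracks.add(i)
        used_dets.add(j)
    return match


def parallel_associate(tracks, detections, motion_threshold=0.5, max_cost=0.7):
    """Return {track_index: detection_index} using both features in parallel."""
    motion_cost = [[1.0 - iou(t["box"], d["box"]) for d in detections] for t in tracks]
    appearance_cost = [
        [cosine_distance(t["feat"], d["feat"]) for d in detections] for t in tracks
    ]
    by_motion = greedy_match(motion_cost, max_cost)
    by_appearance = greedy_match(appearance_cost, max_cost)

    final, taken = {}, set()
    # Round 1: accept pairs on which both matchings agree.
    for i in range(len(tracks)):
        j = by_motion.get(i)
        if j is not None and by_appearance.get(i) == j:
            final[i] = j
            taken.add(j)
    # Round 2: for the rest, pick the metric by motion level (hypothetical rule).
    for i, t in enumerate(tracks):
        if i in final:
            continue
        preferred = by_appearance if t["motion_level"] > motion_threshold else by_motion
        j = preferred.get(i)
        if j is not None and j not in taken:
            final[i] = j
            taken.add(j)
    return final
```

In this toy setup, a track that jumps far between frames has no overlapping box, so a motion-only matcher leaves it unmatched, while the parallel version can still recover it through appearance.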

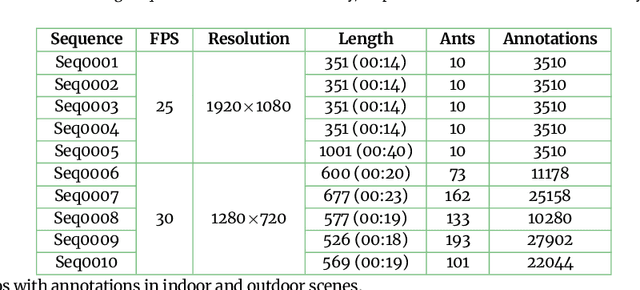

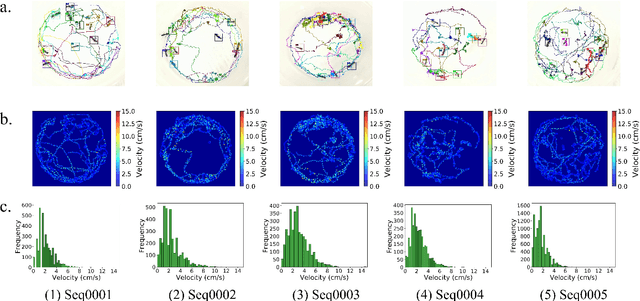

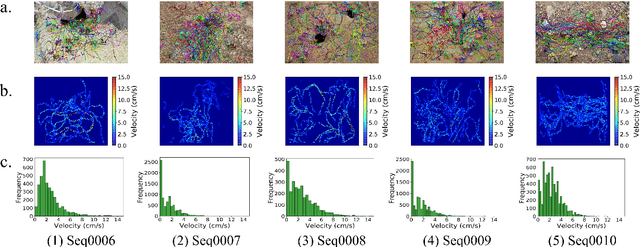

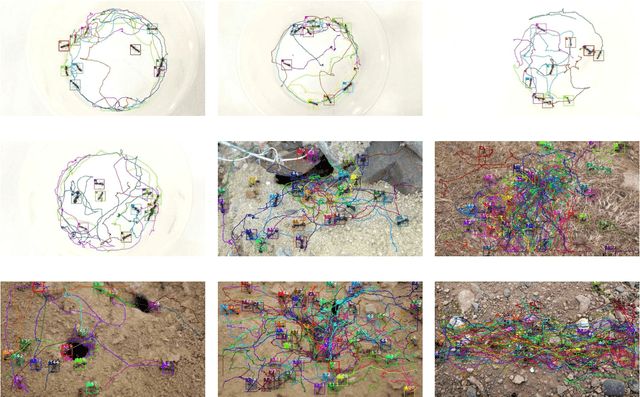

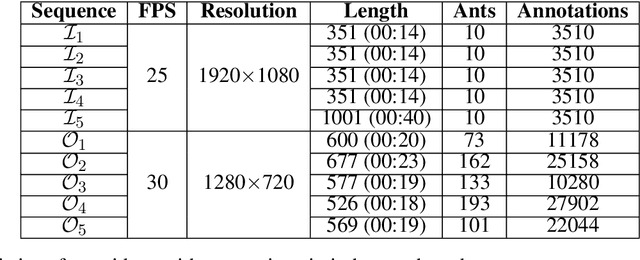

A dataset of ant colonies motion trajectories in indoor and outdoor scenes for social cluster behavior study

Apr 09, 2022

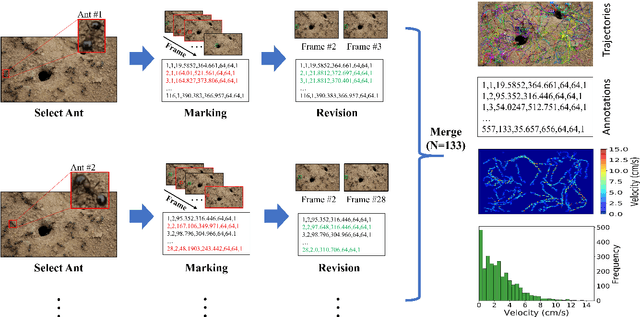

Abstract: Motion and interaction of social insects (such as ants) have been studied by many researchers to understand the clustering mechanism. Most studies of ant behavior have focused only on indoor environments, while outdoor environments remain underexplored. In this paper, we collect 10 videos of ant colonies from different indoor and outdoor scenes. We also develop an image sequence marking software named VisualMarkData, which enables us to annotate the ants in the videos. Across all 5354 frames, the location and identification number of each ant are recorded, for a total of 712 ants and 114112 annotations. Moreover, we provide visual analysis tools to assess and validate the technical quality and reproducibility of our data. We hope that this dataset will contribute to a deeper exploration of the behavior of ant colonies.

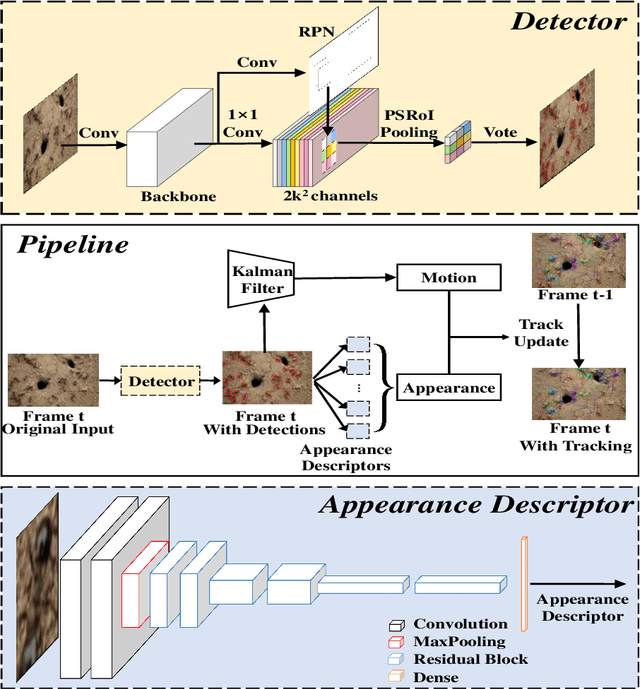

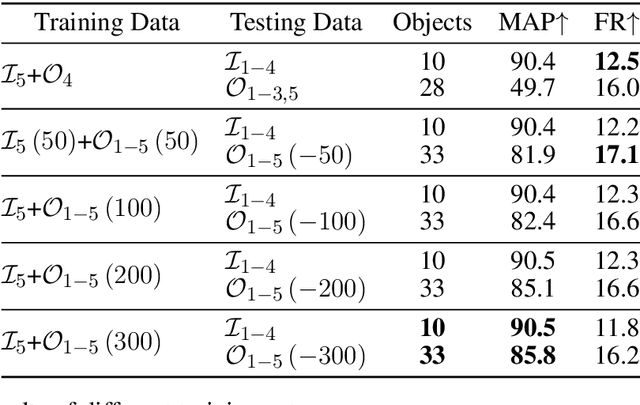

Swarm behavior tracking based on a deep vision algorithm

Apr 07, 2022

Abstract: The intelligent swarm behavior of social insects (such as ants) emerges in different environments and promises to provide insights for the study of embodied intelligence. Researching swarm behavior requires accurately tracking each individual over time. Manually labeling individual insects in a video is labor-intensive. Automatic tracking methods, however, also pose serious challenges: (1) individuals are small and similar in appearance; (2) frequent interactions with each other cause severe and long-term occlusion. With the advances in artificial intelligence and computer vision technologies, we hope to provide a tool that automatically monitors multiple insects and addresses the above challenges. In this paper, we propose a detection and tracking framework for multi-ant tracking in videos by: (1) adopting a two-stage object detection framework using ResNet-50 as the backbone and encoding the position of regions of interest to locate ants accurately; (2) using the ResNet model to develop the appearance descriptors of ants; (3) constructing long-term appearance sequences and combining them with motion information to achieve online tracking. To validate our method, we construct an ant database including 10 videos of ants from different indoor and outdoor scenes. We achieve state-of-the-art performance of 95.7% mMOTA and 81.1% mMOTP in indoor videos, and 81.8% mMOTA and 81.9% mMOTP in outdoor videos. Additionally, our method runs 6-10 times faster than existing methods for insect tracking. Experimental results demonstrate that our method provides a powerful tool for accelerating the unraveling of the mechanisms underlying the swarm behavior of social insects.
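For readers unfamiliar with the metric quoted above, the sketch below computes MOTA with the standard CLEAR MOT formula, MOTA = 1 - (FN + FP + IDSW) / GT, and averages it over videos. Reading "mMOTA" as a plain per-video mean is an assumption here, and the error counts in the usage note are made-up numbers, not results from the paper.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """Multi-object tracking accuracy: 1 - (FN + FP + IDSW) / GT.

    num_gt is the total number of ground-truth objects over all frames.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_gt


def mean_mota(per_video_counts):
    """Unweighted mean of per-video MOTA (one reading of 'mMOTA'; an assumption)."""
    if not per_video_counts:
        raise ValueError("need at least one video")
    scores = [mota(**counts) for counts in per_video_counts]
    return sum(scores) / len(scores)
```

For example, a hypothetical video with 10 misses, 5 false positives, and 5 identity switches over 400 ground-truth boxes has 20 errors in total, so `mota(10, 5, 5, 400)` evaluates to 1 - 20/400. MOTA can be negative when errors outnumber ground-truth objects.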

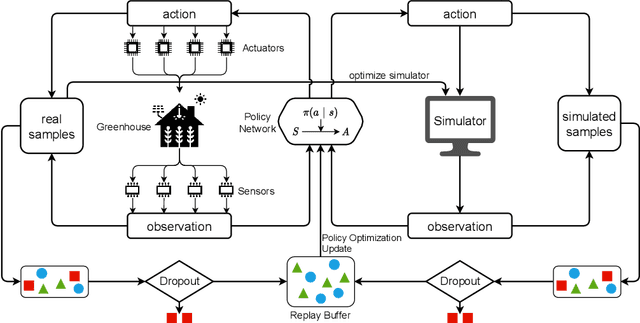

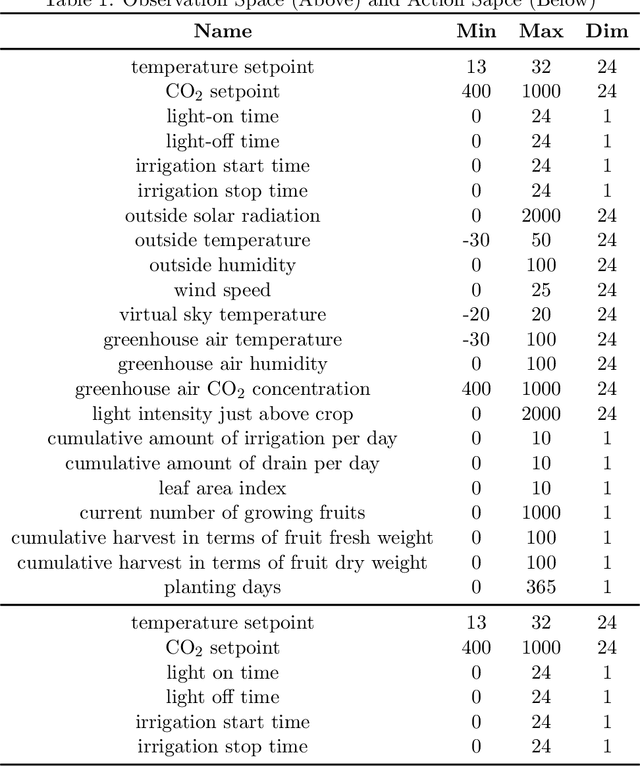

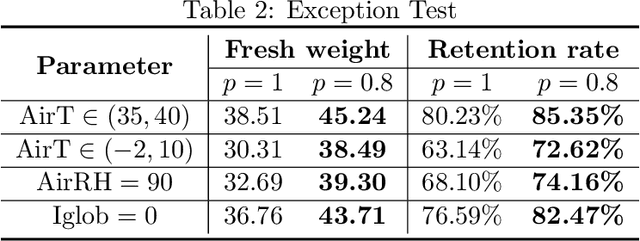

Robust Model-based Reinforcement Learning for Autonomous Greenhouse Control

Aug 26, 2021

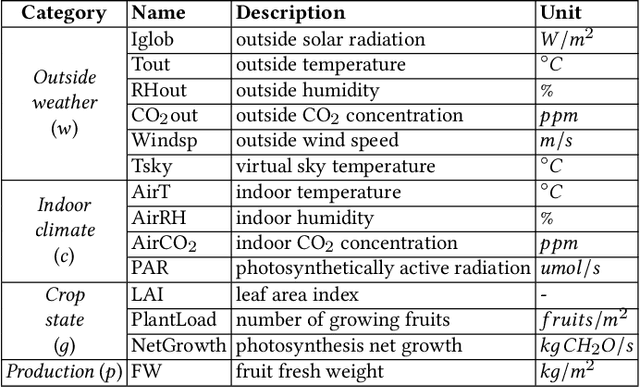

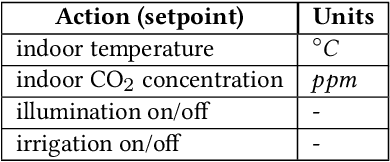

Abstract: Owing to their high efficiency and lower weather dependency, autonomous greenhouses provide an ideal solution to meet the increasing demand for fresh food. However, managers face challenges in finding appropriate control strategies for crop growth, since the decision space of the greenhouse control problem is astronomically large. Therefore, an intelligent closed-loop control framework is highly desirable for generating an automatic control policy. As a powerful tool for optimal control, reinforcement learning (RL) algorithms can surpass human decision-making and can also be seamlessly integrated into the closed-loop control framework. However, in complex real-world scenarios such as agricultural automation control, where interaction with the environment is time-consuming and expensive, the application of RL algorithms encounters two main challenges: sample efficiency and safety. Although model-based RL methods can greatly mitigate the efficiency problem of greenhouse control, the safety problem has received little attention. In this paper, we present a model-based robust RL framework for autonomous greenhouse control to meet the sample efficiency and safety challenges. Specifically, our framework introduces an ensemble of environment models to work as a simulator and assist in policy optimization, thereby addressing the low sample efficiency problem. As for the safety concern, we propose a sample dropout module to focus more on worst-case samples, which can help improve the adaptability of the greenhouse planting policy in extreme cases. Experimental results demonstrate that our approach can learn a more effective greenhouse planting policy with better robustness than existing methods.
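One way to picture the "sample dropout" idea mentioned above is to rank simulated rollouts by return and keep only the worst-performing share for the policy update. The sketch below does exactly that and nothing more. It is an illustrative assumption rather than the paper's module: the `ret` field, the `keep_fraction` parameter, and the ranking rule are all hypothetical.

```python
def sample_dropout(samples, keep_fraction=0.5):
    """Keep the lowest-return share of samples, dropping the rest.

    samples: list of dicts with a numeric "ret" (return) field (hypothetical schema).
    keep_fraction: share of samples to keep, in (0, 1].
    """
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    ranked = sorted(samples, key=lambda s: s["ret"])  # worst returns first
    n_keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[:n_keep]
```

With four rollouts whose returns are 5, 1, 3, and 2, `sample_dropout(rollouts, 0.5)` keeps the two with returns 1 and 2, so a subsequent update would be driven by the pessimistic cases.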

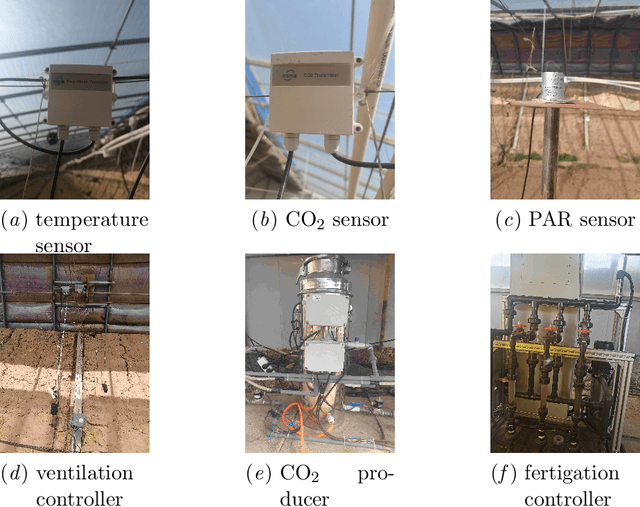

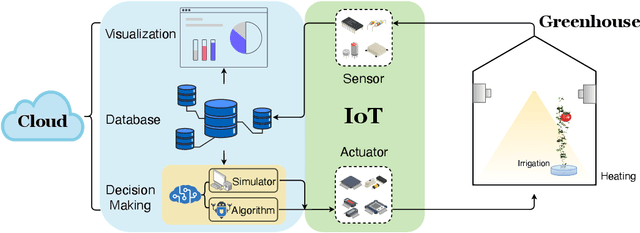

IGrow: A Smart Agriculture Solution to Autonomous Greenhouse Control

Jul 06, 2021

Abstract: Agriculture is the foundation of human civilization. However, the rapid growth and aging of the global population challenge this cornerstone by demanding more healthy and fresh food. Internet of Things (IoT) technology makes the modern autonomous greenhouse a viable and reliable engine of food production. However, educated and skilled labor capable of overseeing high-tech greenhouses is scarce. Artificial intelligence (AI) and cloud computing technologies are promising solutions for precision control and high-efficiency production in such controlled environments. In this paper, we propose a smart agriculture solution, namely iGrow: (1) we use IoT and cloud computing technologies to measure, collect, and manage growing data, to support iteration of our decision-making AI module, which consists of an incremental model and an optimization algorithm; (2) we propose a three-stage incremental model based on accumulating data, enabling growers/central computers to schedule control strategies conveniently and at low cost; (3) we propose a model-based iterative optimization algorithm, which can dynamically optimize the greenhouse control strategy in real-time production. In the simulated experiment, evaluation results show that the accuracy of our incremental model is comparable to an advanced tomato simulator, while our optimization algorithms can beat the champion of the 2nd Autonomous Greenhouse Challenge. Compelling results from the A/B test in real greenhouses demonstrate that, compared to planting experts, our solution increases production of commercially sellable fruits (+10.15%) and net profit (+87.07%) with statistical significance.
