Xiaoxin Li

Leveraging Persistence Image to Enhance Robustness and Performance in Curvilinear Structure Segmentation

Jan 25, 2026

Abstract:Segmenting curvilinear structures in medical images is essential for analyzing morphological patterns in clinical applications. Integrating topological properties, such as connectivity, improves segmentation accuracy and consistency. However, extracting and embedding such properties, especially from Persistence Diagrams (PDs), is challenging due to their non-differentiability and computational cost. Existing approaches mostly encode topology through handcrafted loss functions, which generalize poorly across tasks. In this paper, we propose PIs-Regressor, a simple yet effective module that learns persistence images (PIs), which are finite, differentiable representations of topological features, directly from data. Together with Topology SegNet, which fuses these features in both the downsampling and upsampling stages, our framework integrates topology into the network architecture itself rather than into auxiliary losses, leading to more robust segmentation. Our design is flexible and can be seamlessly combined with other topology-based methods to further enhance segmentation performance. Experimental results show that integrating topological features enhances model robustness, effectively handling challenges such as overexposure and blurring in medical imaging. On three curvilinear benchmarks, our approach demonstrates state-of-the-art performance in both pixel-level accuracy and topological fidelity.
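For readers unfamiliar with persistence images, the following is a minimal sketch of the standard construction from a persistence diagram: each (birth, death) pair is mapped to (birth, persistence), weighted by its persistence, and smoothed with a Gaussian on a fixed grid. It is not the PIs-Regressor described in the abstract, which learns PIs from data; the function name, grid resolution, `sigma`, and the linear weighting are assumptions chosen for illustration.

```python
import numpy as np

def persistence_image(diagram, resolution=20, sigma=0.1, extent=(0.0, 1.0)):
    """Rasterize a persistence diagram into a fixed-size image (illustrative sketch).

    diagram: iterable of (birth, death) pairs with death >= birth.
    Rows of the result index persistence, columns index birth.
    """
    diagram = np.asarray(diagram, dtype=float).reshape(-1, 2)
    birth = diagram[:, 0]
    persistence = diagram[:, 1] - diagram[:, 0]

    lo, hi = extent
    # Pixel centers along each axis of the (birth, persistence) plane.
    centers = lo + (np.arange(resolution) + 0.5) * (hi - lo) / resolution
    grid_birth, grid_pers = np.meshgrid(centers, centers, indexing="xy")

    image = np.zeros((resolution, resolution))
    for b, p in zip(birth, persistence):
        gauss = np.exp(-((grid_birth - b) ** 2 + (grid_pers - p) ** 2) / (2 * sigma ** 2))
        # Assumed linear weighting: short-lived (noisy) features contribute little.
        image += p * gauss
    return image

# Two topological features: one long-lived, one short-lived.
pi = persistence_image([(0.1, 0.8), (0.4, 0.45)])
print(pi.shape)
```

Because the output has a fixed size regardless of how many points the diagram contains, it can be used as a regression target or fed to ordinary network layers, which is what makes it a convenient representation for learning.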

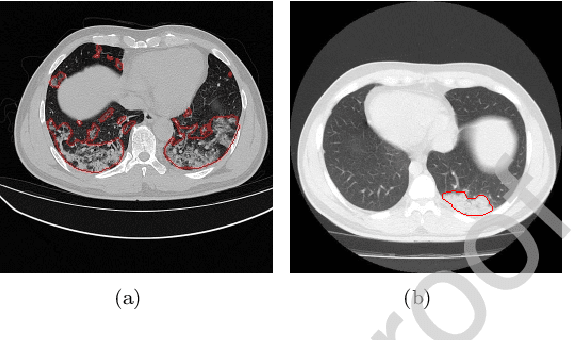

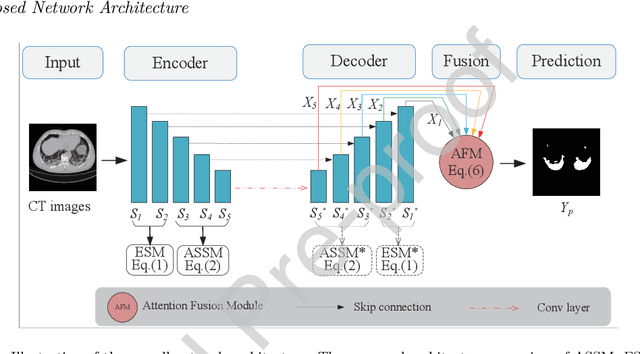

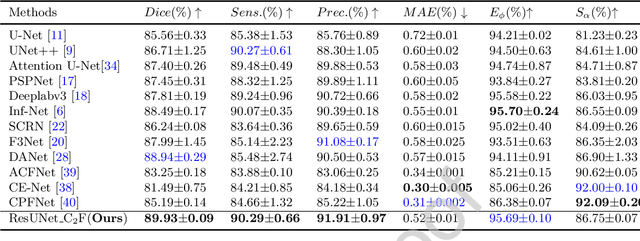

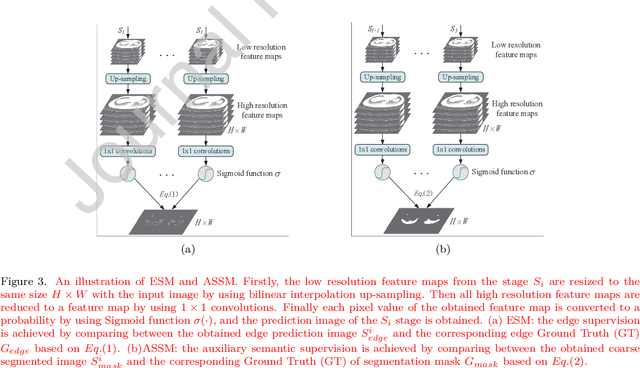

Deep Co-supervision and Attention Fusion Strategy for Automatic COVID-19 Lung Infection Segmentation on CT Images

Dec 20, 2021

Abstract:Due to the irregular shapes, varying sizes, and indistinguishable boundaries between normal and infected tissues, accurately segmenting COVID-19 infected lesions on CT images remains a challenging task. In this paper, a novel segmentation scheme is proposed for COVID-19 infections that enhances the supervised information and fuses multi-scale feature maps of different levels within an encoder-decoder architecture. To this end, a deep collaborative supervision (Co-supervision) scheme is proposed to guide the network to learn edge and semantic features. More specifically, an Edge Supervised Module (ESM) is first designed to highlight low-level boundary features by incorporating edge supervised information into the initial stage of down-sampling. Meanwhile, an Auxiliary Semantic Supervised Module (ASSM) is proposed to strengthen high-level semantic information by integrating mask supervised information into the later stage. Then an Attention Fusion Module (AFM) is developed to fuse multi-scale feature maps of different levels, using an attention mechanism to reduce the semantic gaps between high-level and low-level feature maps. Finally, the effectiveness of the proposed scheme is demonstrated on four different COVID-19 CT datasets. The results show that all three proposed modules are promising. Relative to the baseline (ResUnet), using ESM, ASSM, or AFM alone increases the Dice metric by 1.12%, 1.95%, and 1.63%, respectively, on our dataset, while integrating all three modules increases it by 3.97%. Compared with existing approaches on various datasets, the proposed method obtains better segmentation performance on several main metrics and achieves the best generalization and overall performance.
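The Dice metric reported above is the standard overlap score 2|A∩B| / (|A| + |B|) between a predicted mask A and a ground-truth mask B. The snippet below is a minimal sketch of that definition for binary masks; the function name and the small `eps` smoothing term (which makes two empty masks score 1) are assumptions, not details taken from the paper.

```python
import numpy as np

def dice_coefficient(pred, target, eps=1e-7):
    """Dice overlap between two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    # eps keeps the ratio defined when both masks are empty.
    return (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)

pred = np.array([[1, 1], [0, 0]])
target = np.array([[1, 0], [0, 0]])
print(dice_coefficient(pred, target))  # one shared pixel out of 2 + 1 foreground pixels
```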

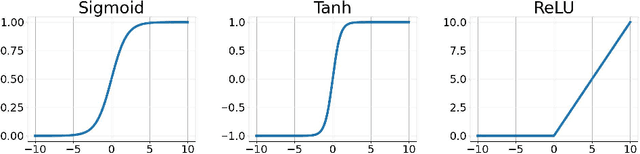

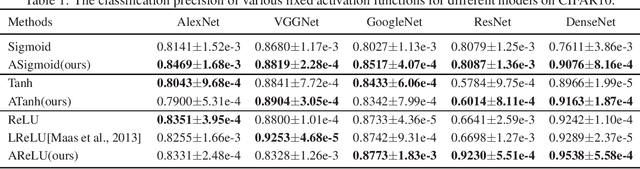

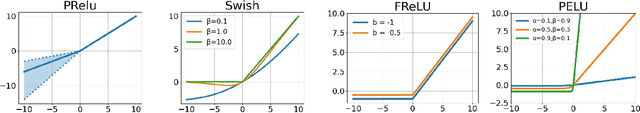

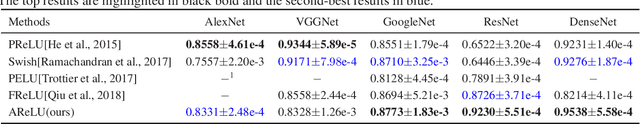

Adaptively Customizing Activation Functions for Various Layers

Dec 17, 2021

Abstract:Activation functions play a crucial role in enhancing the nonlinearity of neural networks and increasing their ability to map inputs to response variables, allowing them to model more complex relationships and patterns in the data. In this work, a novel methodology is proposed to adaptively customize activation functions by adding only a few parameters to traditional activation functions such as Sigmoid, Tanh, and ReLU. To verify the effectiveness of the proposed methodology, theoretical and experimental analyses of its effect on convergence speed and performance are presented, and a series of experiments is conducted on various network models (such as AlexNet, VGGNet, GoogLeNet, ResNet and DenseNet) and various datasets (such as CIFAR10, CIFAR100, miniImageNet, PASCAL VOC and COCO). To further verify its validity and suitability under different optimization strategies and usage scenarios, comparison experiments are also carried out with different optimization strategies (such as SGD, Momentum, AdaGrad, AdaDelta and ADAM) and different recognition tasks such as classification and detection. The results show that the proposed methodology is very simple yet yields significant improvements in convergence speed, precision and generalization, and that it surpasses other popular methods like ReLU and adaptive functions like Swish in almost all experiments in terms of overall performance. The code is publicly available at https://github.com/HuHaigen/Adaptively-Customizing-Activation-Functions. The package includes the three proposed adaptive activation functions for reproducibility purposes.
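To make the idea of "adding very few parameters" concrete, here is a minimal sketch of one possible adaptive activation. The form f(x) = a · tanh(b · x) with two trainable scalars per layer is an assumption made purely for illustration; the abstract does not state the parametrization the authors use, and the class and method names below are hypothetical. The authors' actual functions are in the linked repository.

```python
import numpy as np

class ScaledTanh:
    """Hypothetical adaptive activation f(x) = a * tanh(b * x).

    a and b are per-layer scalars that would be trained with the network
    weights; a = b = 1 recovers the ordinary Tanh.
    """

    def __init__(self, a=1.0, b=1.0):
        self.a = a
        self.b = b

    def forward(self, x):
        return self.a * np.tanh(self.b * x)

    def param_grads(self, x, upstream):
        """Gradients of a scalar loss w.r.t. a and b, given upstream = dLoss/df."""
        t = np.tanh(self.b * x)
        grad_a = np.sum(upstream * t)
        grad_b = np.sum(upstream * self.a * (1.0 - t ** 2) * x)
        return grad_a, grad_b

act = ScaledTanh(a=1.5, b=0.5)
x = np.linspace(-2.0, 2.0, 5)
print(act.forward(x))
```

Each layer gets its own pair (a, b), so the shape of the nonlinearity can differ across layers at the cost of only two extra parameters per layer.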

Training Deep Neural Networks for Wireless Sensor Networks Using Loosely and Weakly Labeled Images

Oct 06, 2020

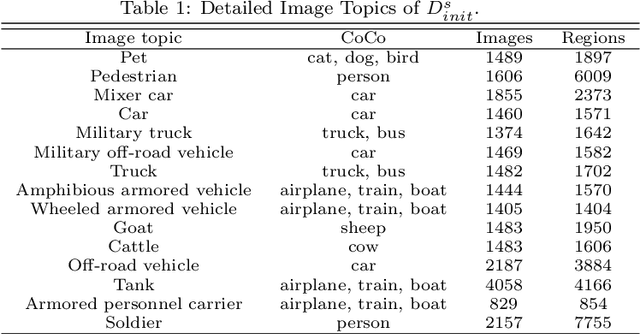

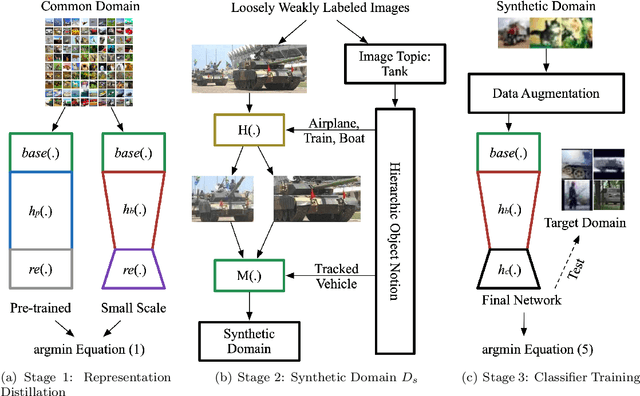

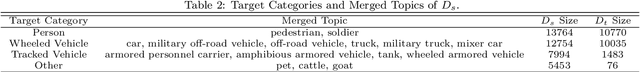

Abstract:Although deep learning has achieved remarkable success in recent years, few reports have been published on applying deep neural networks to image target recognition in Wireless Sensor Networks (WSNs), where data, energy, and computation resources are limited. In this work, a Cost-Effective Domain Generalization (CEDG) algorithm is proposed to train an efficient network with minimal labor requirements. CEDG transfers networks from a publicly available source domain to an application-specific target domain through an automatically allocated synthetic domain. The target domain is isolated from parameter tuning and used for model selection and testing only. The target domain differs significantly from the source domain because it has new target categories and consists of low-quality images that are out of focus, low in resolution, poorly illuminated, and taken from low camera angles. The trained network requires about 7M multiplications per prediction (ResNet-20 requires about 41M), which is small enough to allow a digital signal processor chip to perform real-time recognition in our WSN. The category-level averaged error on the unseen and unbalanced target domain is decreased by 41.12%.

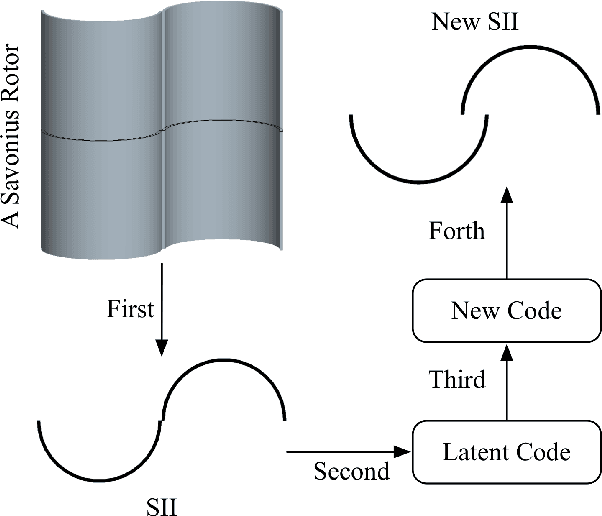

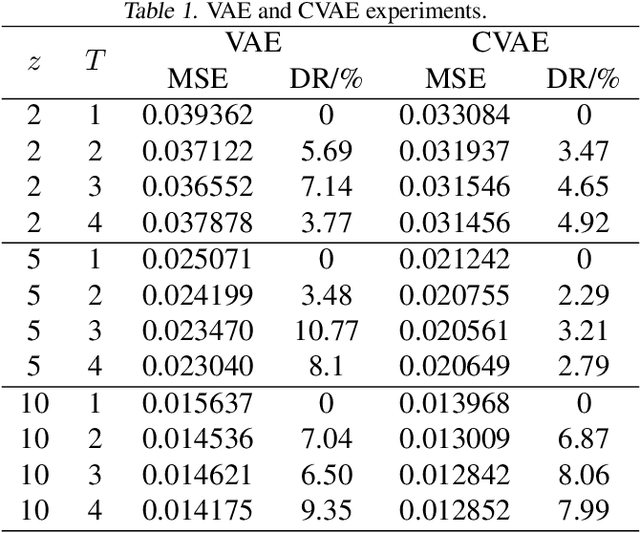

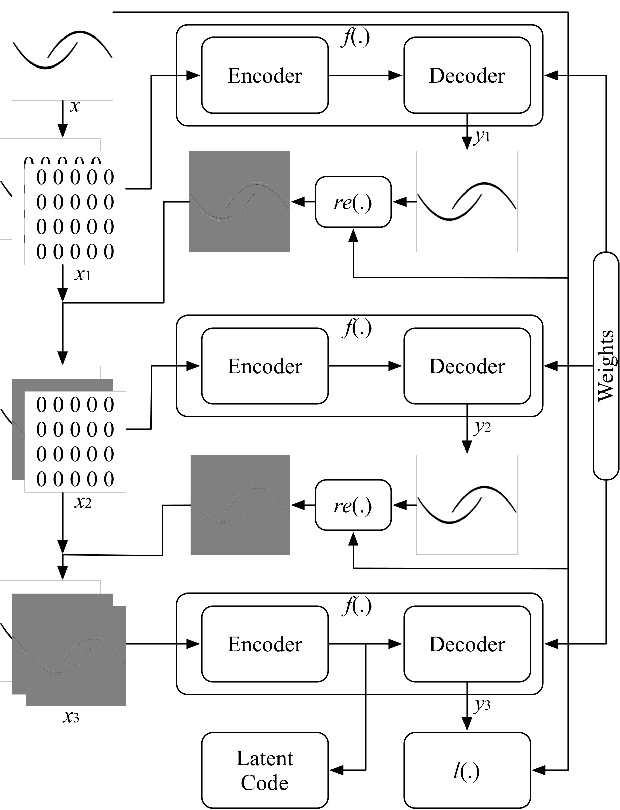

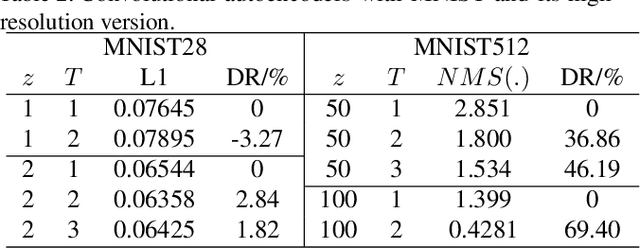

Residual-Recursion Autoencoder for Shape Illustration Images

Feb 06, 2020

Abstract:Shape illustration images (SIIs) are common and important in describing the cross-sections of industrial products. Like the handwritten digit images of MNIST, SIIs are grayscale or binary and contain shapes surrounded by large blank areas. In this work, the Residual-Recursion Autoencoder (RRAE) is proposed to extract low-dimensional features from SIIs while keeping reconstruction accuracy as high as possible. RRAE reconstructs the original image several times, recursively filling the latest residual image into a reserved channel of the encoder's input before the next reconstruction attempt. As a neural network training framework, RRAE can wrap around other autoencoders and improve their performance. Experimental results show that the reconstruction loss decreases by 86.47% for a convolutional autoencoder on high-resolution SIIs, and by 10.77% for a variational autoencoder and 8.06% for a conditional variational autoencoder on MNIST.
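The recursion described above can be sketched as a simple loop. This is an illustrative outline only: `autoencoder` stands in for any callable that maps a two-channel input (image plus reserved residual channel) to a single-channel reconstruction, and the choices of a zero initial residual, the residual being `image - reconstruction`, and the function and parameter names are all assumptions rather than details from the paper.

```python
import numpy as np

def residual_recursion(image, autoencoder, num_passes=3):
    """Run several reconstruction passes, feeding the latest residual back in.

    image: 2-D array (H, W).
    autoencoder: callable taking an array of shape (2, H, W) and returning (H, W).
    """
    residual = np.zeros_like(image)        # assumed: reserved channel starts empty
    reconstruction = np.zeros_like(image)
    for _ in range(num_passes):
        # Channel 0 holds the image, channel 1 the reserved residual channel.
        stacked = np.stack([image, residual], axis=0)
        reconstruction = autoencoder(stacked)
        # Assumed definition of the residual: what the last pass failed to reproduce.
        residual = image - reconstruction
    return reconstruction, residual
```

Because the loop only requires a callable with this input and output shape, it can wrap different autoencoder variants without changing their internals, in line with the abstract's description of RRAE as a wrapper framework.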

Towards the Automation of Deep Image Prior

Nov 17, 2019

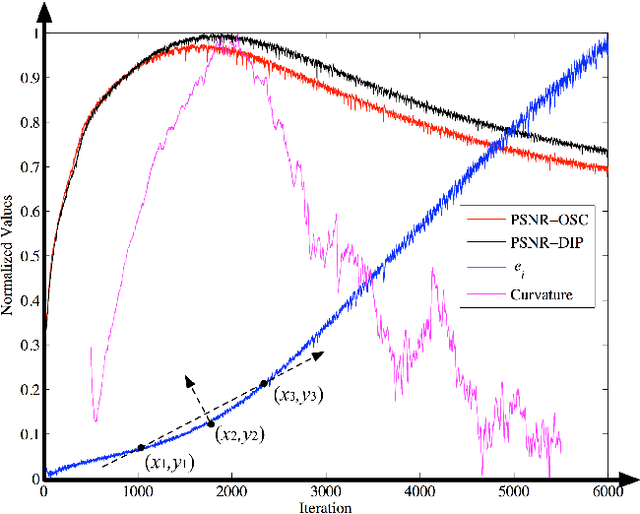

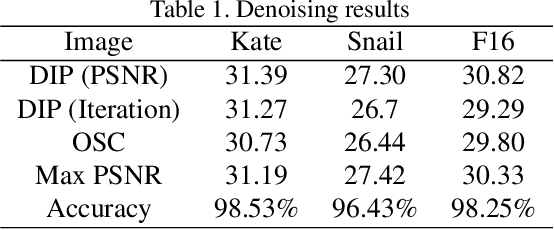

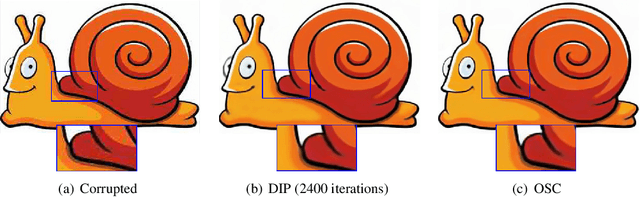

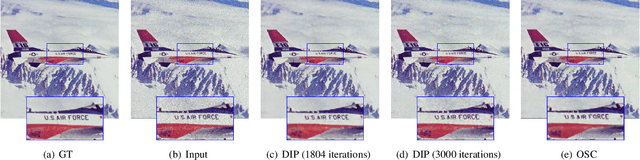

Abstract:The single-image inverse problem is a notoriously challenging ill-posed problem that aims to restore the original image from one of its corrupted versions. Recently, this field has been strongly influenced by the emergence of deep-learning techniques. Deep Image Prior (DIP) offers a new approach that forces the recovered image to be synthesized from a given deep architecture. While DIP is quite an effective unsupervised approach, its adoption in real-world applications is hindered by the need for human assistance. In this work, we aim to find the best recovered image without human assistance by adding a stopping criterion that reaches its maximum when further iterations no longer improve the image quality. More specifically, we propose to add pseudo noise to the corrupted image and to measure the pseudo-noise component in the recovered image using the orthogonality between signal and noise. The accuracy of the orthogonal stopping criterion has been demonstrated on several tested problems such as denoising, super-resolution, and inpainting, with accuracy above 95% in 38 out of 40 experiments.
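The measurement step can be illustrated with a projection. If the clean signal is assumed orthogonal to the injected pseudo noise, then the inner product of the recovered image with the noise, normalized by the noise energy, estimates how much of the pseudo noise the recovered image has absorbed. The sketch below shows only this projection; it is not the authors' stopping criterion, whose exact formula is not given in the abstract, and the function name is hypothetical.

```python
import numpy as np

def noise_fraction(recovered, pseudo_noise):
    """Estimate the share of pseudo noise present in the recovered image.

    Assumes the clean signal is orthogonal to pseudo_noise, so that
    <recovered, noise> / <noise, noise> isolates the noise coefficient.
    """
    recovered = np.asarray(recovered, dtype=float)
    pseudo_noise = np.asarray(pseudo_noise, dtype=float)
    return np.sum(recovered * pseudo_noise) / np.sum(pseudo_noise * pseudo_noise)

rng = np.random.default_rng(0)
noise = rng.standard_normal((8, 8))
signal = rng.standard_normal((8, 8))
signal -= noise_fraction(signal, noise) * noise   # make the toy signal exactly orthogonal
print(noise_fraction(signal + 0.3 * noise, noise))
```

Tracking such a quantity over iterations would indicate when the network starts fitting the injected noise rather than the signal, which is the intuition behind stopping without human inspection.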
