Jingchang Huang

Training Deep Neural Networks for Wireless Sensor Networks Using Loosely and Weakly Labeled Images

Oct 06, 2020

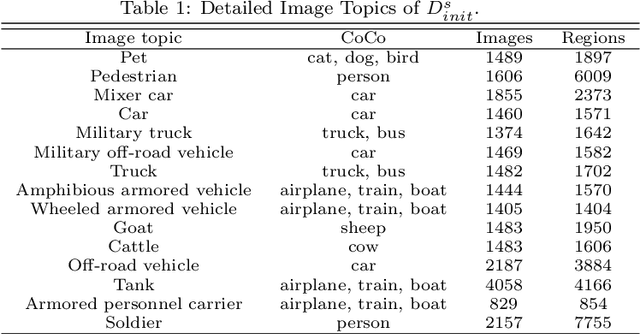

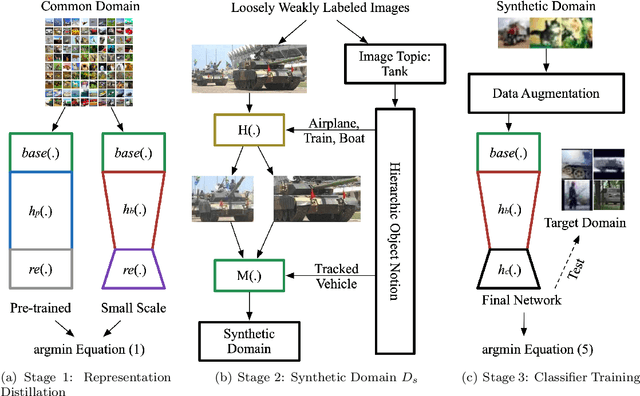

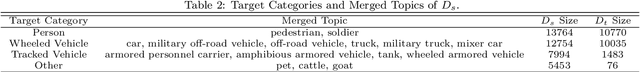

Abstract: Although deep learning has achieved remarkable successes in recent years, few reports have been published on applying deep neural networks to Wireless Sensor Networks (WSNs) for image target recognition, where data, energy, and computation resources are limited. In this work, a Cost-Effective Domain Generalization (CEDG) algorithm is proposed to train an efficient network with minimal labor requirements. CEDG transfers networks from a publicly available source domain to an application-specific target domain through an automatically allocated synthetic domain. The target domain is isolated from parameter tuning and used for model selection and testing only. The target domain differs significantly from the source domain because it has new target categories and consists of low-quality images that are out of focus, low in resolution, low in illumination, and taken from low photographing angles. The trained network requires about 7M multiplications per prediction (ResNet-20 requires about 41M), which is small enough to allow a digital signal processor chip to perform real-time recognition in our WSN. The category-level averaged error on the unseen and unbalanced target domain is decreased by 41.12%.

Exploring Spatial Significance via Hybrid Pyramidal Graph Network for Vehicle Re-identification

Jun 05, 2020

Abstract: Existing vehicle re-identification methods commonly use spatial pooling operations to aggregate feature maps extracted via off-the-shelf backbone networks. They do not explore the spatial significance of feature maps, which eventually degrades vehicle re-identification performance. In this paper, firstly, an innovative spatial graph network (SGN) is proposed to explore the spatial significance of feature maps. The SGN stacks multiple spatial graphs (SGs). Each SG treats the elements of a feature map as nodes and uses spatial neighborhood relationships to determine the edges among nodes. During the SGN's propagation, each node and its spatial neighbors on an SG are aggregated to the next SG. On the next SG, each aggregated node is re-weighted with a learnable parameter to find the significance at the corresponding location. Secondly, a novel pyramidal graph network (PGN) is designed to explore the spatial significance of feature maps at multiple scales. The PGN organizes multiple SGNs in a pyramidal manner and makes each SGN handle feature maps of a specific scale. Finally, a hybrid pyramidal graph network (HPGN) is developed by embedding the PGN behind a ResNet-50 based backbone network. Extensive experiments on three large-scale vehicle databases (i.e., VeRi776, VehicleID, and VeRi-Wild) demonstrate that the proposed HPGN is superior to state-of-the-art vehicle re-identification approaches.
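The SGN propagation described in the abstract (aggregate each node with its spatial neighbors, then re-weight the result per location) can be illustrated with a minimal sketch. This is not the authors' implementation: the 4-connected neighborhood, the use of a plain sum for aggregation, the single-channel feature map, and the names `spatial_graph_step` and `spatial_graph_network` are all assumptions made for illustration.

```python
# Illustrative sketch only: neighborhood shape, sum aggregation, and all
# function names are assumptions, not details taken from the paper.

def spatial_graph_step(fmap, weights):
    """One spatial graph (SG) to the next.

    Each node (feature-map element) is summed with its 4-connected spatial
    neighbors, then scaled by a per-location weight that stands in for the
    learnable re-weighting parameter.
    """
    h, w = len(fmap), len(fmap[0])
    offsets = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]  # self + 4 neighbors
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di, dj in offsets:
                ni, nj = i + di, j + dj
                if 0 <= ni < h and 0 <= nj < w:  # skip neighbors off the map
                    total += fmap[ni][nj]
            out[i][j] = weights[i][j] * total
    return out


def spatial_graph_network(fmap, weights_per_layer):
    """Stack several SGs: apply one aggregation step per weight map."""
    for weights in weights_per_layer:
        fmap = spatial_graph_step(fmap, weights)
    return fmap


fmap = [[1.0, 2.0], [3.0, 4.0]]
ones = [[1.0, 1.0], [1.0, 1.0]]
result = spatial_graph_network(fmap, [ones])
print(result)
```

In a trained model the weight maps would be learned, so locations with larger weights would correspond to more significant spatial positions. A pyramidal variant would run one such stack per feature-map scale.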

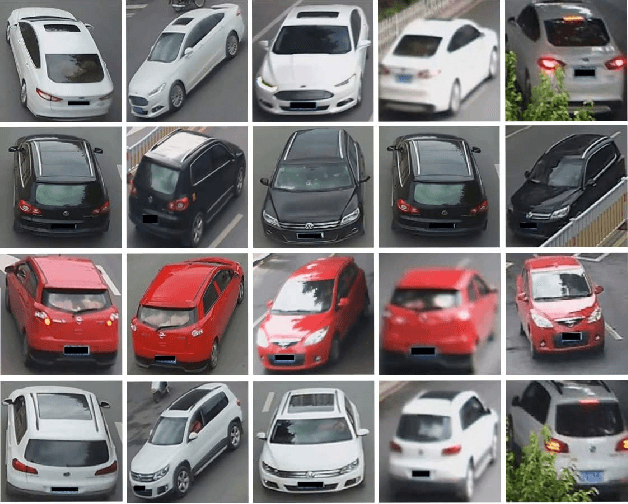

Vehicle Re-identification Using Quadruple Directional Deep Learning Features

Nov 13, 2018

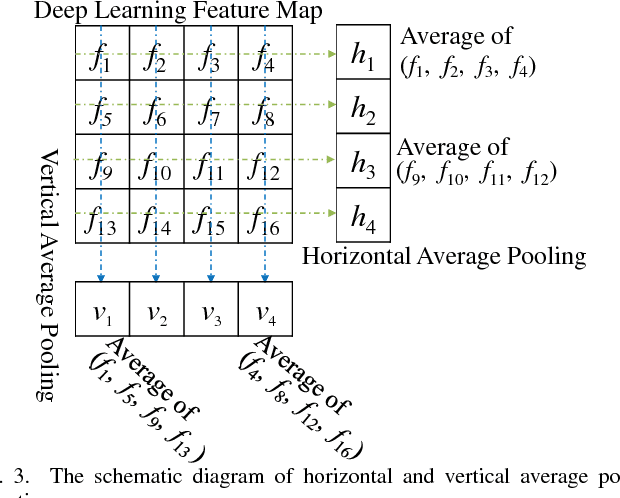

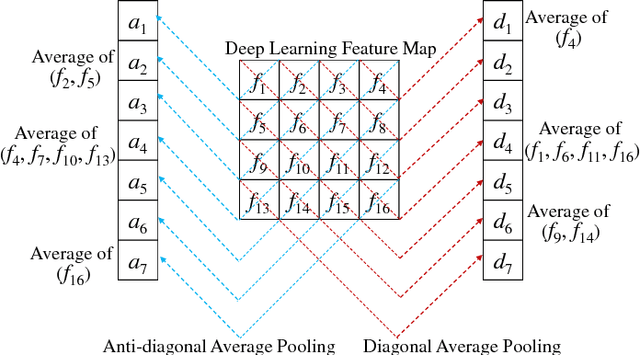

Abstract: To counter the adverse effect of viewpoint variations and improve vehicle re-identification performance, we design quadruple directional deep learning networks to extract quadruple directional deep learning features (QD-DLF) of vehicle images. The quadruple directional deep learning networks share a similar overall architecture: the same basic deep learning architecture but different directional feature pooling layers. Specifically, the basic deep learning architecture is a shortly and densely connected convolutional neural network that extracts basic feature maps of an input square vehicle image in the first stage. Then, the quadruple directional deep learning networks use different directional pooling layers, i.e., a horizontal average pooling (HAP) layer, a vertical average pooling (VAP) layer, a diagonal average pooling (DAP) layer, and an anti-diagonal average pooling (AAP) layer, to compress the basic feature maps into horizontal, vertical, diagonal, and anti-diagonal directional feature maps, respectively. Finally, these directional feature maps are spatially normalized and concatenated together as a quadruple directional deep learning feature for vehicle re-identification. Extensive experiments on both the VeRi and VehicleID databases show that the proposed QD-DLF approach outperforms multiple state-of-the-art vehicle re-identification methods.
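The four directional pooling layers can be sketched on a single square feature map as follows. This is a hedged illustration, not the paper's code. It assumes that HAP averages along each row, VAP along each column, DAP along each diagonal (constant `i - j`), and AAP along each anti-diagonal (constant `i + j`), and that "spatially normalized" means L2 normalization of each pooled vector. The abstract does not specify these details, and all function names are hypothetical.

```python
import math

# Illustrative sketch only: the pooling definitions and the L2 normalization
# are assumptions; the abstract does not give the exact formulas.

def horizontal_avg_pool(fmap):
    """Average along each row (assumed meaning of HAP)."""
    return [sum(row) / len(row) for row in fmap]


def vertical_avg_pool(fmap):
    """Average along each column (assumed meaning of VAP)."""
    return [sum(col) / len(col) for col in zip(*fmap)]


def _pool_by_key(fmap, key):
    """Average the elements that share the same key(i, j) value."""
    groups = {}
    for i, row in enumerate(fmap):
        for j, value in enumerate(row):
            groups.setdefault(key(i, j), []).append(value)
    return [sum(v) / len(v) for _, v in sorted(groups.items())]


def diagonal_avg_pool(fmap):
    """Average along each diagonal, i.e. constant i - j (assumed DAP)."""
    return _pool_by_key(fmap, lambda i, j: i - j)


def anti_diagonal_avg_pool(fmap):
    """Average along each anti-diagonal, i.e. constant i + j (assumed AAP)."""
    return _pool_by_key(fmap, lambda i, j: i + j)


def l2_normalize(vec, eps=1e-12):
    """Scale a vector to unit L2 norm (assumed normalization)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / (norm + eps) for x in vec]


def qd_feature(fmap):
    """Normalize the four pooled vectors and concatenate them."""
    pooled = [
        horizontal_avg_pool(fmap),
        vertical_avg_pool(fmap),
        diagonal_avg_pool(fmap),
        anti_diagonal_avg_pool(fmap),
    ]
    return [x for vec in pooled for x in l2_normalize(vec)]


fmap = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
print(horizontal_avg_pool(fmap))     # [2.0, 5.0, 8.0]
print(vertical_avg_pool(fmap))       # [4.0, 5.0, 6.0]
print(diagonal_avg_pool(fmap))       # [3.0, 4.0, 5.0, 6.0, 7.0]
print(anti_diagonal_avg_pool(fmap))  # [1.0, 3.0, 5.0, 7.0, 9.0]
```

In the described approach each pooling layer belongs to its own network, and the pooling is applied to multi-channel feature maps. The sketch collapses this to one channel to show only how the four directions differ.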
