Xi-Qiao Feng

Discrete Solution Operator Learning for Geometry-Dependent PDEs

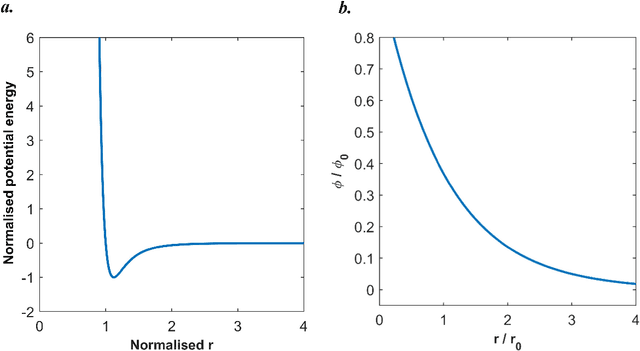

Jan 15, 2026

Abstract: Neural operator learning accelerates PDE solution by approximating operators as mappings between continuous function spaces. Yet in many engineering settings, varying geometry induces discrete structural changes, including topological changes, abrupt changes in boundary conditions or boundary types, and changes in the computational domain, which break the smooth-variation premise. Here we introduce Discrete Solution Operator Learning (DiSOL), a complementary paradigm that learns discrete solution procedures rather than continuous function-space operators. DiSOL factorizes the solver into learnable stages that mirror classical discretizations: local contribution encoding, multiscale assembly, and implicit solution reconstruction on an embedded grid, thereby preserving procedure-level consistency while adapting to geometry-dependent discrete structures. Across geometry-dependent Poisson, advection-diffusion, linear elasticity, as well as spatiotemporal heat conduction problems, DiSOL produces stable and accurate predictions under both in-distribution and strongly out-of-distribution geometries, including discontinuous boundaries and topological changes. These results highlight the need for procedural operator representations in geometry-dominated problems and position discrete solution operator learning as a distinct, complementary direction in scientific machine learning.

Energy-based physics-informed neural network for frictionless contact problems under large deformation

Nov 06, 2024

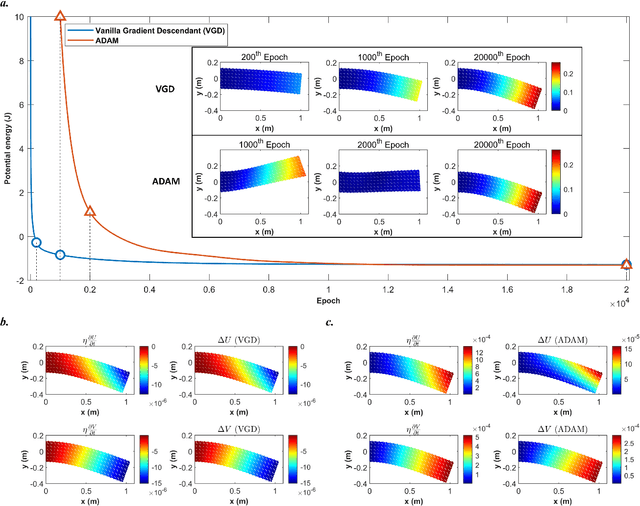

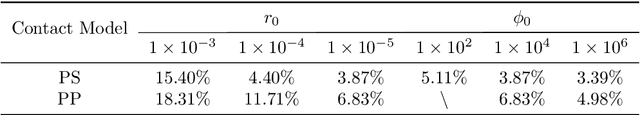

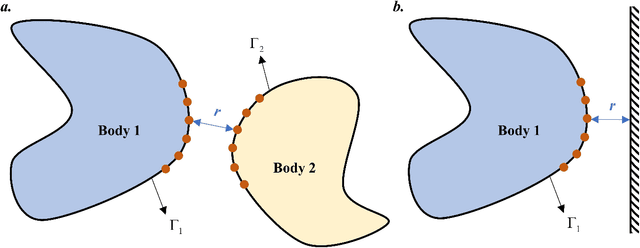

Abstract: Numerical methods for contact mechanics are of great importance in engineering applications, enabling the prediction and analysis of complex surface interactions under various conditions. In this work, we propose an energy-based physics-informed neural network (PINN) framework for solving frictionless contact problems under large deformation. Inspired by the microscopic Lennard-Jones potential, a surface contact energy is used to describe the contact phenomena. To ensure the robustness of the proposed PINN framework, relaxation, gradual loading, and output scaling techniques are introduced. In the numerical examples, the well-known Hertz contact benchmark problem is solved, demonstrating the effectiveness and robustness of the proposed PINN framework. Moreover, challenging contact problems involving geometrical and material nonlinearities are tested. The results show that the proposed PINN framework provides a reliable and powerful tool for nonlinear contact mechanics. More importantly, the proposed PINN framework exhibits computational efficiency competitive with commercial FEM software when dealing with those complex contact problems. The codes used in this manuscript are available at https://github.com/JinshuaiBai/energy_PINN_Contact. (The code will be available after acceptance.)
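For background only: the classical Lennard-Jones pair potential that the abstract names as inspiration is usually written as below, where $r$ is the separation between two particles, $\varepsilon$ is the well depth, and $\sigma$ is the separation at which the potential is zero. The abstract does not state the form of the surface contact energy actually used, so this should not be read as the paper's formulation.

$$
V_{\mathrm{LJ}}(r) = 4\varepsilon\left[\left(\frac{\sigma}{r}\right)^{12} - \left(\frac{\sigma}{r}\right)^{6}\right]
$$

The steep $r^{-12}$ term penalizes close approach, which is the feature a contact energy would plausibly borrow to discourage interpenetration.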

Physics-informed radial basis network: A local approximating neural network for solving nonlinear PDEs

Apr 20, 2023

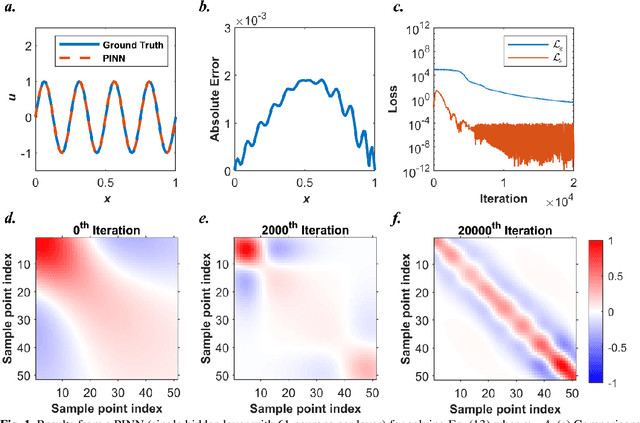

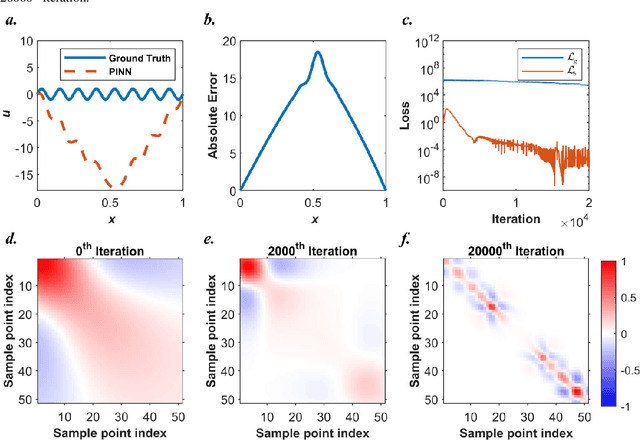

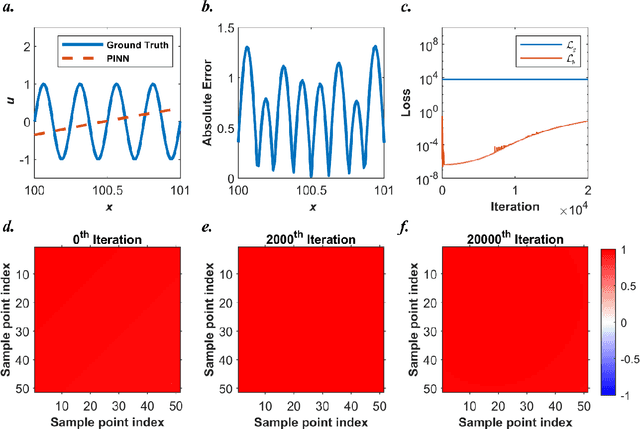

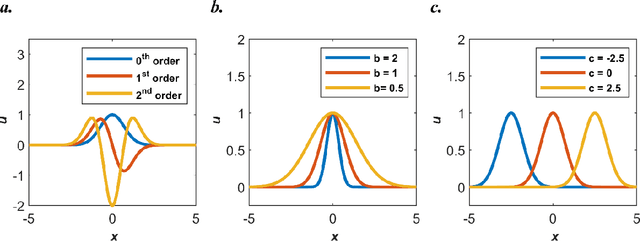

Abstract: Our recent intensive study has found that physics-informed neural networks (PINNs) tend to be local approximators after training. This observation leads to the novel physics-informed radial basis network (PIRBN), which can maintain the local property throughout the entire training process. Compared to deep neural networks, a PIRBN comprises only one hidden layer and a radial basis "activation" function. Under appropriate conditions, we demonstrated that the training of PIRBNs using gradient descent methods can converge to Gaussian processes. Besides, we studied the training dynamics of PIRBN via the neural tangent kernel (NTK) theory. In addition, comprehensive investigations regarding the initialisation strategies of PIRBN were conducted. Based on numerical examples, PIRBN has been demonstrated to be more effective and efficient than PINN in solving PDEs with high-frequency features and ill-posed computational domains. Moreover, the existing PINN numerical techniques, such as adaptive learning, decomposition and different types of loss functions, are applicable to PIRBN. The programs that can regenerate all numerical results can be found at https://github.com/JinshuaiBai/PIRBN.
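To make the "one hidden layer with a radial basis activation" idea concrete, here is a minimal NumPy sketch of a forward pass through such a network. It is an illustration under stated assumptions, not the code from the linked repository: the Gaussian form of the basis function, the 1D input, and the names `rbf_forward`, `centers`, `widths`, and `weights` are all hypothetical choices made for this example.

```python
import numpy as np

def rbf_forward(x, centers, widths, weights):
    """Single-hidden-layer Gaussian RBF network (illustrative, 1D input).

    Computes u(x) = sum_i weights[i] * exp(-(widths[i] * (x - centers[i]))**2).
    The Gaussian basis is an assumption made for this sketch.
    """
    x = np.asarray(x, dtype=float).reshape(-1, 1)      # shape (n_points, 1)
    hidden = np.exp(-(widths * (x - centers)) ** 2)    # shape (n_points, n_neurons)
    return hidden @ weights                            # shape (n_points,)

# Eleven neurons with centers spread over [0, 1]; only the middle one is active.
centers = np.linspace(0.0, 1.0, 11)
widths = np.full(11, 10.0)
weights = np.zeros(11)
weights[5] = 1.0  # neuron centered at x = 0.5

u = rbf_forward([0.5, 0.0], centers, widths, weights)
```

With these values the active neuron contributes fully at its own center and almost nothing at `x = 0.0`, which is the sense in which each basis function is a local approximator.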
