Woong Bae

Resource Optimized Neural Architecture Search for 3D Medical Image Segmentation

Sep 02, 2019

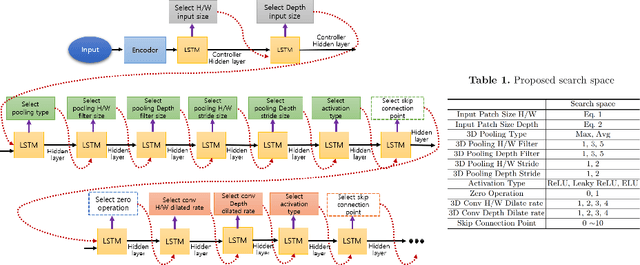

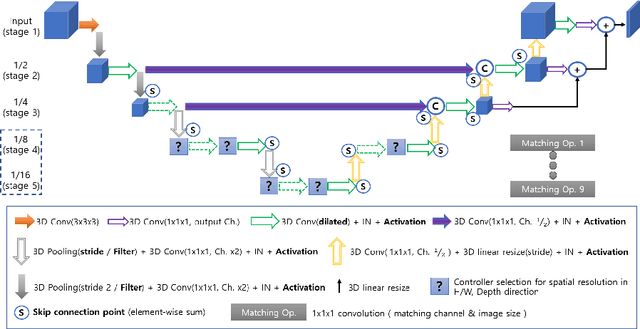

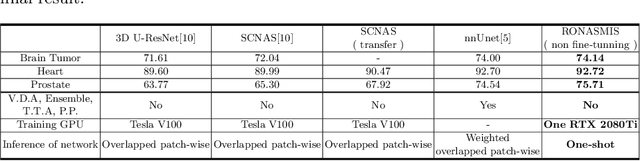

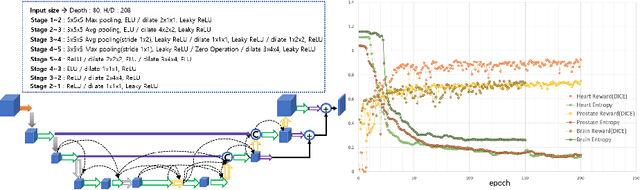

Abstract: Neural Architecture Search (NAS), a framework that automates the design of neural networks, has recently been actively studied in deep learning. However, only a few NAS methods are suitable for 3D medical image segmentation. 3D medical images are generally very large, so previous NAS methods are difficult to apply because of their GPU computational burden and long training times. We propose a resource-optimized neural architecture search method that can be applied to 3D medical segmentation tasks with a short training time (1.39 days for a 1 GB dataset) and a small amount of computing power (one RTX 2080Ti with 10.8 GB of GPU memory). Excellent performance can also be achieved without the retraining (fine-tuning) that is essential in most NAS methods. These advantages are achieved by using a reinforcement learning-based controller with parameter sharing and by focusing on the optimal search space configuration of macro search rather than micro search. Our experiments demonstrate that the proposed NAS method outperforms manually designed networks with state-of-the-art performance in 3D medical image segmentation.

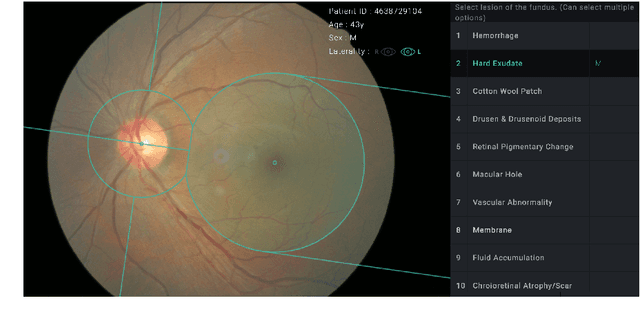

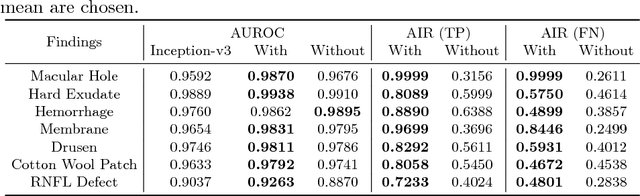

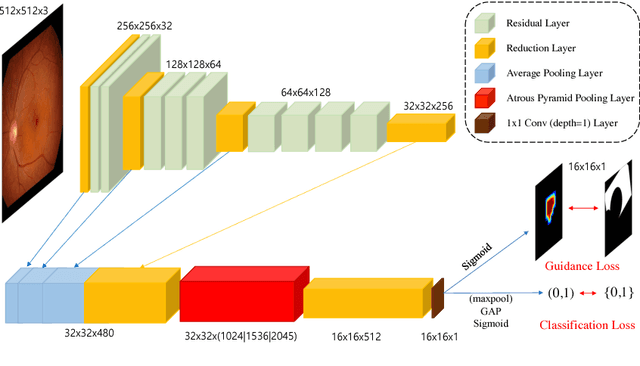

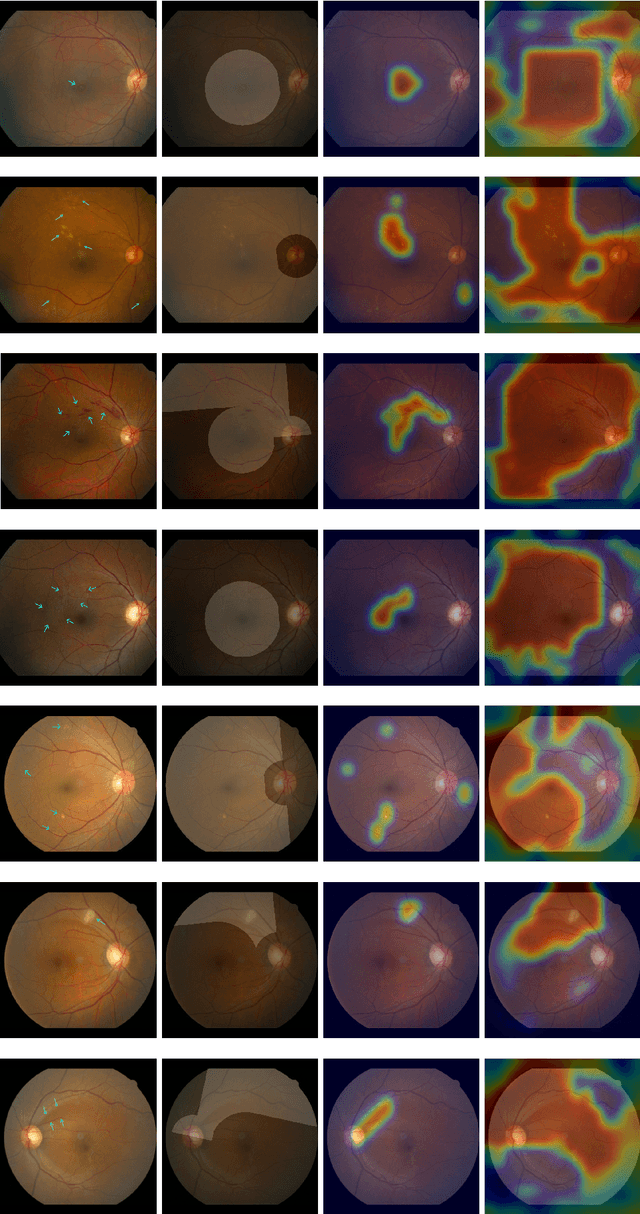

Classification of Findings with Localized Lesions in Fundoscopic Images using a Regionally Guided CNN

Nov 02, 2018

Abstract: Ophthalmologists often examine fundoscopic images to spot abnormal lesions and make diagnoses. Recent successes of convolutional neural networks are confined to diagnosing a few diseases without proper localization of lesions. In this paper, we propose an efficient annotation method for localizing lesions and a CNN architecture that can classify an individual finding and localize its lesions at the same time. We also introduce a new loss function that uses the regional annotations to guide the network toward learning meaningful patterns. In experiments, we demonstrate that our network performs better than a widely used network and that the guidance loss helps achieve an AUROC up to 4.1% higher along with superior localization capability.

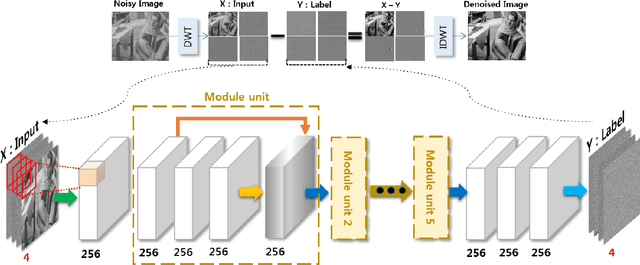

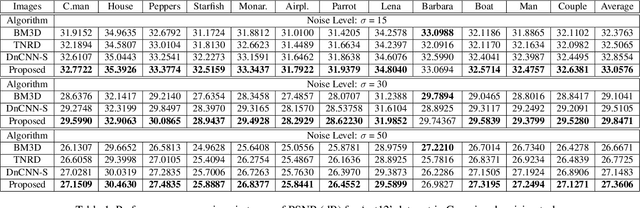

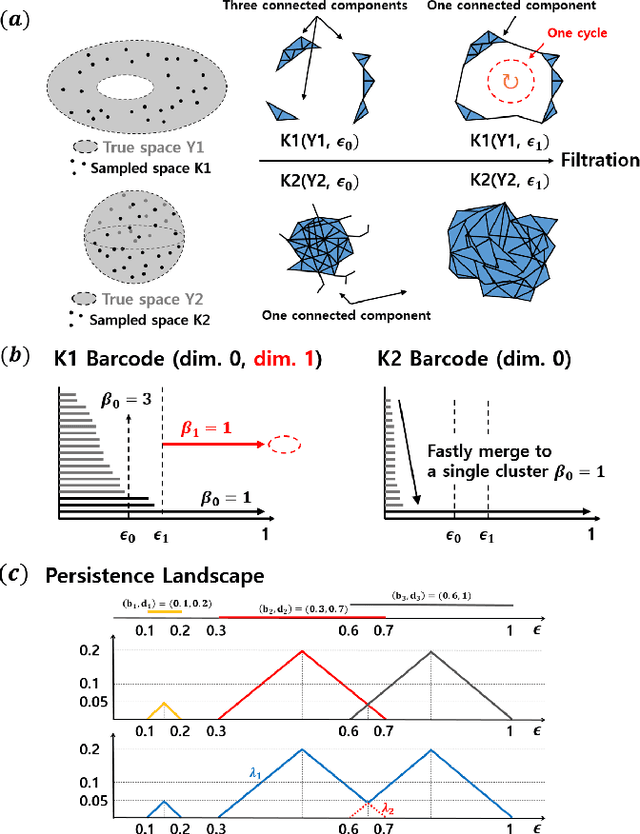

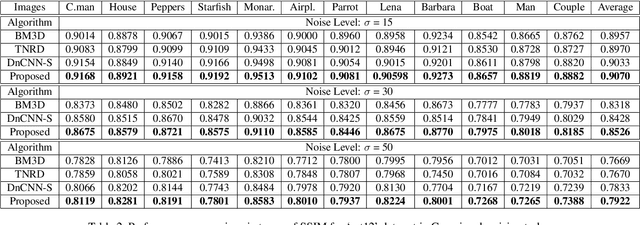

Beyond Deep Residual Learning for Image Restoration: Persistent Homology-Guided Manifold Simplification

Jun 08, 2017

Abstract: The latest deep learning approaches perform better than state-of-the-art signal processing approaches in various image restoration tasks. However, if an image contains many patterns and structures, the performance of these CNNs is still inferior. To address this issue, we propose a novel feature space deep residual learning algorithm that outperforms existing residual learning. The main idea originates from the observation that the performance of a learning algorithm can be improved if the input and/or label manifolds can be made topologically simpler by an analytic mapping to a feature space. Our extensive numerical studies using denoising experiments and the NTIRE single-image super-resolution (SISR) competition demonstrate that the proposed feature space residual learning outperforms existing state-of-the-art approaches. Moreover, our algorithm was ranked third in the NTIRE competition while running 5-10 times faster than the top-ranked teams. The source code is available at https://github.com/iorism/CNN.git
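The idea of residual learning in a feature space can be illustrated with a minimal sketch. It assumes a one-level Haar wavelet transform as the analytic mapping and uses a 1D signal in place of an image; the function names `haar`, `ihaar`, `residual_target`, and `restore` are hypothetical, and this is not the authors' implementation. A network would be trained to predict the per-band residual, and here the exact residual stands in for that prediction.

```python
import math

S = math.sqrt(2.0)

def haar(x):
    """One-level orthonormal Haar transform of an even-length signal."""
    low = [(x[i] + x[i + 1]) / S for i in range(0, len(x), 2)]
    high = [(x[i] - x[i + 1]) / S for i in range(0, len(x), 2)]
    return low, high

def ihaar(low, high):
    """Inverse of haar(): interleave the reconstructed sample pairs."""
    x = []
    for a, d in zip(low, high):
        x.extend([(a + d) / S, (a - d) / S])
    return x

def residual_target(degraded, clean):
    """Per-band residual (degraded minus clean) in the feature space.
    This is the label a residual-learning network would regress."""
    return tuple(
        [n - c for n, c in zip(nb, cb)]
        for nb, cb in zip(haar(degraded), haar(clean))
    )

def restore(degraded, predicted_residual):
    """Subtract the predicted residual band by band, then map back."""
    bands = [
        [n - r for n, r in zip(nb, rb)]
        for nb, rb in zip(haar(degraded), predicted_residual)
    ]
    return ihaar(*bands)

clean = [1.0, 2.0, 4.0, 3.0]
degraded = [1.2, 1.9, 4.3, 2.8]   # clean signal plus made-up noise
oracle = residual_target(degraded, clean)  # stand-in for a trained network
restored = restore(degraded, oracle)
```

With the exact residual, `restored` matches `clean` up to floating-point error; a trained network would only approximate that residual, so the restoration quality depends on how well it is learned.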
