William Yerazunis

Learning Object Manipulation With Under-Actuated Impulse Generator Arrays

Mar 06, 2023

Abstract: For more than half a century, vibratory bowl feeders have been the standard in automated assembly for singulation, orientation, and manipulation of small parts. Unfortunately, these feeders are expensive, noisy, and highly specialized to a single part design. We consider an alternative device and learning control method for singulation, orientation, and manipulation by means of seven fixed-position, variable-energy solenoid impulse actuators located beneath a semi-rigid part-supporting surface. Using computer vision to provide part pose information, we tested various machine learning (ML) algorithms to generate a control policy that selects the optimal actuator and actuation energy. Our manipulation test object is a 6-sided craps-style die. Using the most suitable ML algorithm, we were able to flip the die to any desired face 30.4% of the time with a single impulse, and 51.3% of the time with two chosen impulses, versus 5.1% for a random policy (that is, a randomly chosen impulse delivered by a randomly chosen solenoid).
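The policy described in this abstract selects one actuator and one energy per impulse. A minimal sketch of that selection step follows, assuming a trained model that returns a success probability for each candidate. The abstract does not say which ML algorithm, energy discretization, or solenoid layout was used, so `ENERGY_LEVELS`, `toy_predictor`, and the row layout are hypothetical stand-ins.

```python
NUM_SOLENOIDS = 7                        # stated in the abstract
ENERGY_LEVELS = (0.25, 0.5, 0.75, 1.0)   # hypothetical normalized energies


def select_impulse(predict_success, pose, target_face):
    """Return the (solenoid, energy) pair with the highest predicted success."""
    candidates = [(s, e) for s in range(NUM_SOLENOIDS) for e in ENERGY_LEVELS]
    return max(candidates,
               key=lambda c: predict_success(pose, target_face, c[0], c[1]))


def toy_predictor(pose, target_face, solenoid, energy):
    """Stand-in for a trained model (assumption, not the paper's model).

    It favors the solenoid nearest the die and a mid-range energy.
    """
    x, _y, _face = pose
    # Hypothetical layout: die x in [0, 1] mapped onto a row of solenoids.
    nearest = round(x * (NUM_SOLENOIDS - 1))
    return 1.0 / (1.0 + (solenoid - nearest) ** 2 + 4.0 * (energy - 0.5) ** 2)


# Pose is (x, y, face currently up); the target is the face we want on top.
choice = select_impulse(toy_predictor, pose=(0.5, 0.2, 3), target_face=6)
```

In a two-impulse setting, the same selection would simply be repeated on the pose observed after the first impulse.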

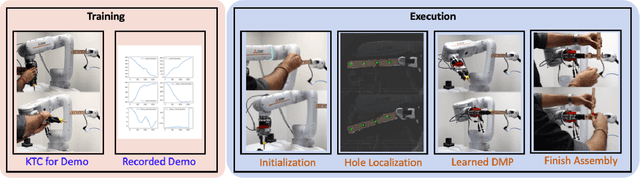

Generalizable Human-Robot Collaborative Assembly Using Imitation Learning and Force Control

Dec 02, 2022

Abstract: Robots have been steadily increasing their presence in our daily lives, where they can work alongside humans to provide assistance in various tasks on industry floors, in offices, and in homes. Automated assembly is one of the key applications of robots, and next-generation assembly systems could become much more efficient by creating collaborative human-robot systems. However, although collaborative robots have been around for decades, their application in truly collaborative systems has been limited. This is because a truly collaborative human-robot system needs to adjust its operation to the uncertainty and imprecision in human actions, ensure safety during interaction, and so on. In this paper, we present a system for human-robot collaborative assembly using learning from demonstration and pose estimation, so that the robot can adapt to the uncertainty caused by the operation of humans. Learning from demonstration is used to generate motion trajectories for the robot based on the pose estimates of different goal locations from a deep learning-based vision system. The proposed system is demonstrated using a physical 6-DoF manipulator in a collaborative human-robot assembly scenario. We show successful generalization of the system's operation to changes in the initial and final goal locations through various experiments.

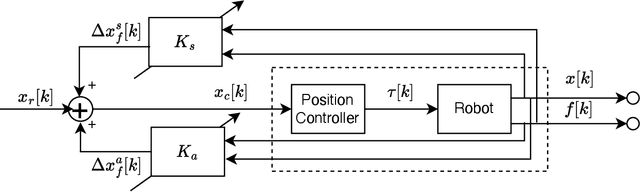

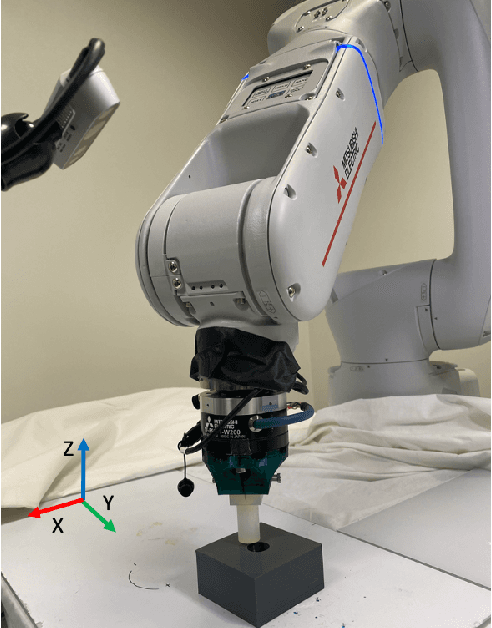

Design of Adaptive Compliance Controllers for Safe Robotic Assembly

Apr 22, 2022

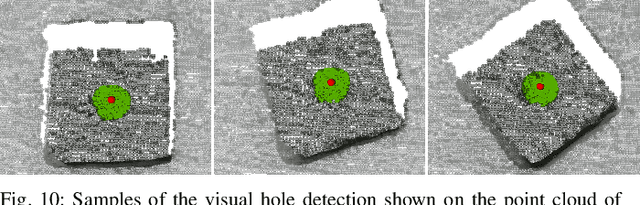

Abstract: Insertion operations are a critical element of most robotic assembly operations, and peg-in-hole (PiH) insertion is one of the most widely studied tasks in the industrial and academic manipulation communities. PiH insertion is in fact an entire class of problems, where the complexity of the problem can depend on the type of misalignment and contact formation during an insertion attempt. In this paper, we present the design and analysis of adaptive compliance controllers that can be used in insertion-type assembly tasks, including learning-based compliance controllers for insertion problems in the presence of uncertainty in the goal location during robotic assembly. We first present the design of compliance controllers that ensure safe operation of the robot by limiting the contact forces experienced during contact formation. We then analyze the force signature obtained during contact formation to learn the corrective action needed to perform insertion. Finally, we use the proposed compliance controllers and learned models to design a policy that can successfully perform insertion in novel test conditions with an almost perfect success rate. We validate the proposed approach on a physical robotic test-bed using a 6-DoF manipulator arm.

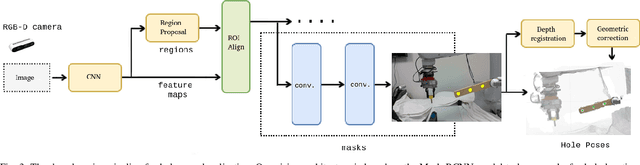

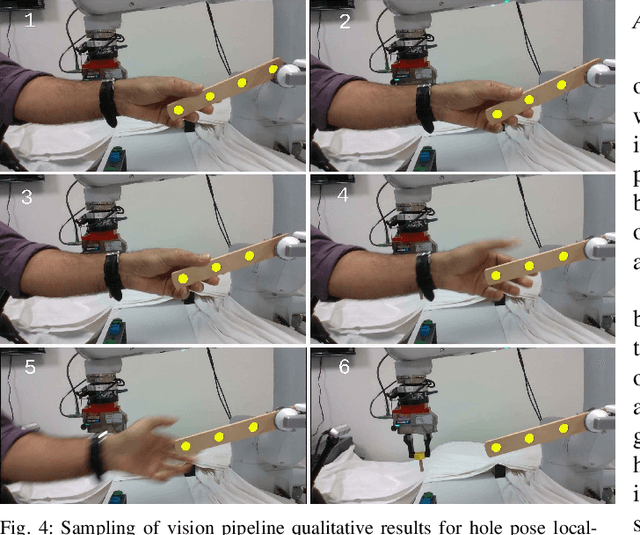

Imitation and Supervised Learning of Compliance for Robotic Assembly

Nov 20, 2021

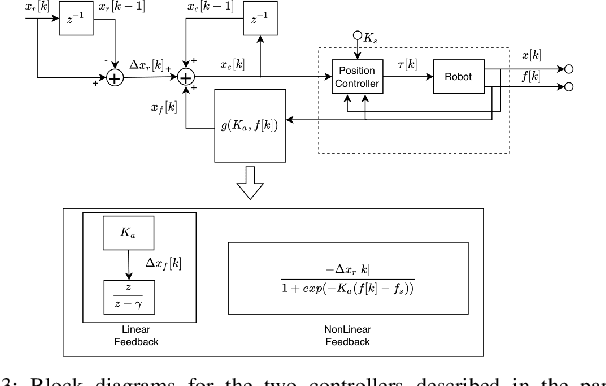

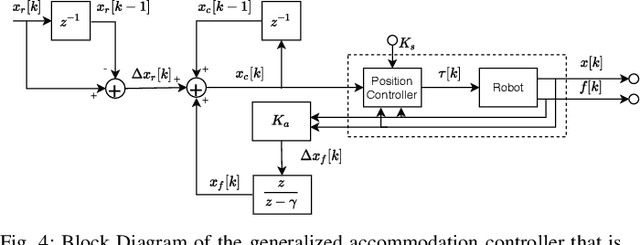

Abstract: We present the design of a learning-based compliance controller for assembly operations for industrial robots. We propose a solution within the general setting of learning from demonstration (LfD), where a nominal trajectory is provided through demonstration by an expert teacher. This can be used to learn a suitable representation of the skill that can be generalized to novel positions of one of the parts involved in the assembly, for example the hole in a peg-in-hole (PiH) insertion task. Under the expectation that this novel position might not be estimated entirely accurately by a vision or other sensing system, the robot will need to further modify the generated trajectory in response to force readings measured by a force-torque (F/T) sensor mounted at the wrist of the robot or another suitable location. Under the assumption of a constant velocity when traversing the reference trajectory during assembly, we propose a novel accommodation force controller that allows the robot to safely explore different contact configurations. The data collected using this controller is used to train a Gaussian process model to predict the misalignment in the position of the peg with respect to the target hole. We show that the proposed learning-based approach can correct various contact configurations caused by misalignment between the assembled parts in a PiH task, achieving a high success rate during insertion. We show results using an industrial manipulator arm, and demonstrate that the proposed method can perform adaptive insertion using force feedback from the trained machine learning models.
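To illustrate why an accommodation-style controller bounds contact force, here is a one-dimensional sketch of the classic accommodation (damping) law, in which the commanded velocity is the reference velocity reduced in proportion to the measured force. This is a textbook form used for illustration; the abstract does not give the authors' controller, and the gains, contact stiffness, and spring contact model below are assumed values.

```python
def simulate_accommodation(v_ref=0.01, accommodation=0.001, stiffness=1.0e4,
                           x0=-0.005, dt=0.001, steps=2000):
    """Simulate a peg approaching a stiff surface at x = 0 (all values assumed).

    v_ref          constant reference velocity along the trajectory [m/s]
    accommodation  velocity reduction per unit contact force [(m/s)/N]
    stiffness      linear contact stiffness of the surface [N/m]
    Returns the contact force measured at every time step.
    """
    x = x0
    forces = []
    for _ in range(steps):
        force = stiffness * max(0.0, x)             # zero force before contact
        velocity = v_ref - accommodation * force    # accommodation law
        x += velocity * dt                          # explicit Euler integration
        forces.append(force)
    return forces


forces = simulate_accommodation()
# In steady state the commanded velocity is zero, so force = v_ref / accommodation.
expected_steady_force = 0.01 / 0.001
```

With these assumed values the force rises smoothly toward `v_ref / accommodation` and does not overshoot it, which is the property that lets a robot probe contact configurations without large force spikes.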

Semiparametrical Gaussian Processes Learning of Forward Dynamical Models for Navigating in a Circular Maze

Sep 18, 2018

Abstract: This paper presents a model learning problem: learning how to navigate a ball to a goal state in a circular maze environment with two degrees of freedom. The motion of the ball in the maze environment is influenced by several non-linear effects, such as dry friction and contacts, which are difficult to model physically. We propose a semiparametric model to estimate the motion dynamics of the ball based on Gaussian Process Regression equipped with basis functions obtained from physics first principles. The accuracy of this semiparametric model is demonstrated not only in estimation but also in n-step-ahead prediction, and it is compared with standard algorithms for model learning. The learned model is then used in a trajectory optimization algorithm to compute ball trajectories. We propose the system presented in the paper as a benchmark problem for reinforcement and robot learning, for its interesting and challenging dynamics and its relative ease of reproducibility.
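One common way to build a semiparametric Gaussian process is to add a kernel induced by physics-derived basis functions to a generic nonparametric kernel. The sketch below shows that construction on a one-dimensional toy problem. The basis function (`sin` of an angle), the hyperparameters, and the toy dynamics are all assumptions made for illustration and are not taken from the paper.

```python
import math


def physics_features(x):
    """Hypothetical first-principles basis: a gravity-like sin(angle) term."""
    return [math.sin(x)]


def kernel(a, b, prior_var=10.0, rbf_var=1.0, length=0.5):
    """Parametric (physics basis) kernel plus a nonparametric RBF kernel."""
    parametric = prior_var * sum(
        p * q for p, q in zip(physics_features(a), physics_features(b)))
    nonparametric = rbf_var * math.exp(-0.5 * ((a - b) / length) ** 2)
    return parametric + nonparametric


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit(xs, ys, noise_var=1e-4):
    """Return the weights alpha = (K + noise_var * I)^-1 y."""
    K = [[kernel(a, b) + (noise_var if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    return solve(K, ys)


def predict(x_new, xs, alpha):
    """Posterior mean of the GP at x_new."""
    return sum(kernel(x_new, x) * w for x, w in zip(xs, alpha))


def true_dynamics(x):
    """Toy target: a modeled physics term plus an unmodeled friction-like term."""
    return 2.0 * math.sin(x) + 0.3 * math.tanh(2.0 * x)


train_x = [-3.0 + 0.5 * i for i in range(13)]
train_y = [true_dynamics(x) for x in train_x]
alpha = fit(train_x, train_y)
estimate = predict(0.75, train_x, alpha)   # query between training points
```

The parametric part lets the model capture the structure that physics already explains, while the RBF part absorbs effects such as friction that the basis functions do not cover.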
