Wenyuan Gao

GenEM: Physics-Informed Generative Cryo-Electron Microscopy

Dec 04, 2023

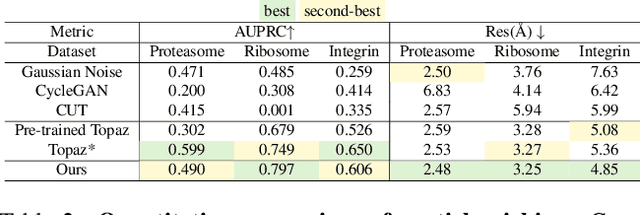

Abstract:In the past decade, deep conditional generative models have revolutionized the generation of realistic images, extending their application from entertainment to scientific domains. Single-particle cryo-electron microscopy (cryo-EM) is crucial in resolving near-atomic resolution 3D structures of proteins, such as the SARS-COV-2 spike protein. To achieve high-resolution reconstruction, AI models for particle picking and pose estimation have been adopted. However, their performance is still limited as they lack high-quality annotated datasets. To address this, we introduce physics-informed generative cryo-electron microscopy (GenEM), which for the first time integrates physical-based cryo-EM simulation with a generative unpaired noise translation to generate physically correct synthetic cryo-EM datasets with realistic noises. Initially, GenEM simulates the cryo-EM imaging process based on a virtual specimen. To generate realistic noises, we leverage an unpaired noise translation via contrastive learning with a novel mask-guided sampling scheme. Extensive experiments show that GenEM is capable of generating realistic cryo-EM images. The generated dataset can further enhance particle picking and pose estimation models, eventually improving the reconstruction resolution. We will release our code and annotated synthetic datasets.

Interactive Variance Attention based Online Spoiler Detection for Time-Sync Comments

Aug 21, 2019

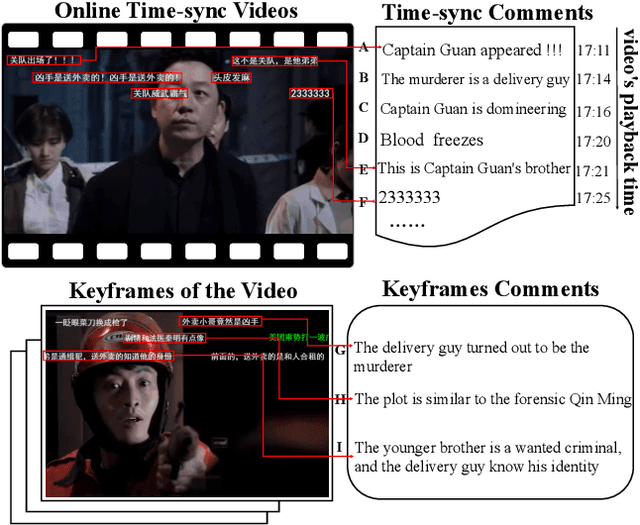

Abstract:Nowadays, time-sync comment (TSC), a new form of interactive comments, has become increasingly popular in Chinese video websites. By posting TSCs, people can easily express their feelings and exchange their opinions with others when watching online videos. However, some spoilers appear among the TSCs. These spoilers reveal crucial plots in videos that ruin people's surprise when they first watch the video. In this paper, we proposed a novel Similarity-Based Network with Interactive Variance Attention (SBN-IVA) to classify comments as spoilers or not. In this framework, we firstly extract textual features of TSCs through the word-level attentive encoder. We design Similarity-Based Network (SBN) to acquire neighbor and keyframe similarity according to semantic similarity and timestamps of TSCs. Then, we implement Interactive Variance Attention (IVA) to eliminate the impact of noise comments. Finally, we obtain the likelihood of spoiler based on the difference between the neighbor and keyframe similarity. Experiments show SBN-IVA is on average 11.2\% higher than the state-of-the-art method on F1-score in baselines.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge