Weijian Ruan

An Efficient and Mixed Heterogeneous Model for Image Restoration

Apr 15, 2025

Abstract:Image restoration (IR), as a fundamental multimedia data processing task, has a significant impact on downstream visual applications. In recent years, researchers have focused on developing general-purpose IR models capable of handling diverse degradation types, thereby reducing the cost and complexity of model development. Current mainstream approaches are based on three architectural paradigms: CNNs, Transformers, and Mambas. CNNs excel in efficient inference, whereas Transformers and Mamba excel at capturing long-range dependencies and modeling global contexts. While each architecture has demonstrated success in specialized, single-task settings, limited efforts have been made to effectively integrate heterogeneous architectures to jointly address diverse IR challenges. To bridge this gap, we propose RestorMixer, an efficient and general-purpose IR model based on mixed-architecture fusion. RestorMixer adopts a three-stage encoder-decoder structure, where each stage is tailored to the resolution and feature characteristics of the input. In the initial high-resolution stage, CNN-based blocks are employed to rapidly extract shallow local features. In the subsequent stages, we integrate a refined multi-directional scanning Mamba module with a multi-scale window-based self-attention mechanism. This hierarchical and adaptive design enables the model to leverage the strengths of CNNs in local feature extraction, Mamba in global context modeling, and attention mechanisms in dynamic feature refinement. Extensive experimental results demonstrate that RestorMixer achieves leading performance across multiple IR tasks while maintaining high inference efficiency. The official code can be accessed at https://github.com/ClimBin/RestorMixer.

Mixed Degradation Image Restoration via Local Dynamic Optimization and Conditional Embedding

Nov 25, 2024

Abstract:Multiple-in-one image restoration (IR) has made significant progress, aiming to handle all types of single degraded image restoration with a single model. However, in real-world scenarios, images often suffer from combinations of multiple degradation factors. Existing multiple-in-one IR models encounter challenges related to degradation diversity and prompt singularity when addressing this issue. In this paper, we propose a novel multiple-in-one IR model that can effectively restore images with both single and mixed degradations. To address degradation diversity, we design a Local Dynamic Optimization (LDO) module which dynamically processes degraded areas of varying types and granularities. To tackle the prompt singularity issue, we develop an efficient Conditional Feature Embedding (CFE) module that guides the decoder in leveraging degradation-type-related features, significantly improving the model's performance in mixed degradation restoration scenarios. To validate the effectiveness of our model, we introduce a new dataset containing both single and mixed degradation elements. Experimental results demonstrate that our proposed model achieves state-of-the-art (SOTA) performance not only on mixed degradation tasks but also on classic single-task restoration benchmarks.

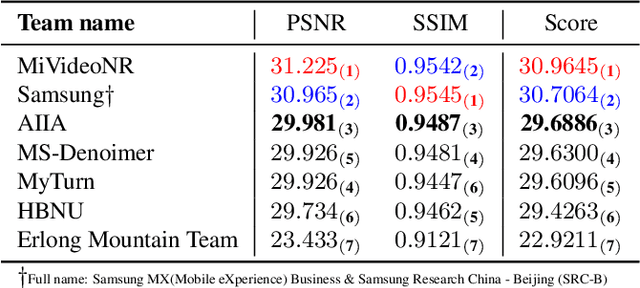

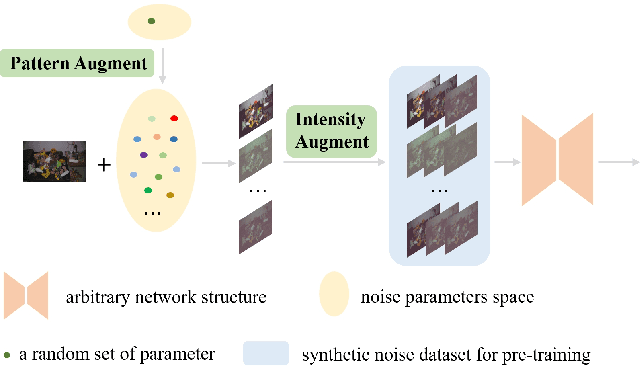

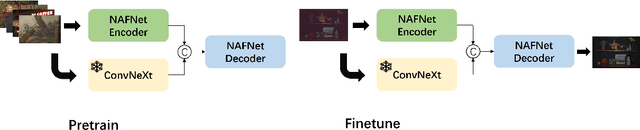

MIPI 2024 Challenge on Few-shot RAW Image Denoising: Methods and Results

Jun 11, 2024

Abstract:The increasing demand for computational photography and imaging on mobile platforms has led to the widespread development and integration of advanced image sensors with novel algorithms in camera systems. However, the scarcity of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). Building on the achievements of the previous MIPI Workshops held at ECCV 2022 and CVPR 2023, we introduce our third MIPI challenge including three tracks focusing on novel image sensors and imaging algorithms. In this paper, we summarize and review the Few-shot RAW Image Denoising track on MIPI 2024. In total, 165 participants were successfully registered, and 7 teams submitted results in the final testing phase. The developed solutions in this challenge achieved state-of-the-art performance on Few-shot RAW Image Denoising. More details of this challenge and the link to the dataset can be found at https://mipichallenge.org/MIPI2024.

Semantic-guided Pixel Sampling for Cloth-Changing Person Re-identification

Jul 24, 2021

Abstract:Cloth-changing person re-identification (re-ID) is an emerging research topic that aims at retrieving pedestrians whose clothes have changed. This task is quite challenging and has not been fully studied to date. Current works mainly focus on body shape or contour sketch, but they are not robust enough due to view and posture variations. The key to this task is to exploit cloth-irrelevant cues. This paper proposes a semantic-guided pixel sampling approach for the cloth-changing person re-ID task. We do not explicitly define which feature to extract but force the model to automatically learn cloth-irrelevant cues. Specifically, we first recognize the pedestrian's upper clothes and pants, then randomly change them by sampling pixels from other pedestrians. The changed samples retain the identity labels but exchange the pixels of clothes or pants among different pedestrians. Besides, we adopt a loss function to constrain the learned features to remain consistent before and after the changes. In this way, the model is forced to learn cues that are irrelevant to upper clothes and pants. We conduct extensive experiments on the recently released PRCC dataset. Our method achieved 65.8% Rank-1 accuracy, which outperforms previous methods by a large margin. The code is available at https://github.com/shuxjweb/pixel_sampling.git.
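The pixel-sampling idea in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation (see the linked repository for that). It assumes the clothing regions are already available as boolean masks from some human-parsing step, that pixels are drawn with replacement, and that the consistency constraint is a mean squared difference between features; the abstract specifies none of these details. The function names `swap_clothes_pixels` and `consistency_loss` are hypothetical.

```python
import numpy as np


def swap_clothes_pixels(img_a, mask_a, img_b, mask_b, rng):
    """Fill the clothing region of img_a with pixels sampled from img_b.

    img_a, img_b: uint8 arrays of shape (H, W, 3).
    mask_a, mask_b: boolean arrays of shape (H, W) marking a clothing
        region (e.g. upper clothes or pants); assumed to come from a
        human-parsing model that is not shown here.
    rng: a numpy.random.Generator.
    The identity label of img_a is kept by the caller; only pixels change.
    """
    out = img_a.copy()
    source_pixels = img_b[mask_b]          # shape (K, 3)
    n_target = int(mask_a.sum())
    if n_target == 0 or len(source_pixels) == 0:
        return out                         # nothing to replace or sample
    # Assumption: sample source pixels uniformly with replacement.
    idx = rng.integers(0, len(source_pixels), size=n_target)
    out[mask_a] = source_pixels[idx]
    return out


def consistency_loss(feat_orig, feat_changed):
    """Assumed consistency term: mean squared feature difference."""
    diff = np.asarray(feat_orig, dtype=float) - np.asarray(feat_changed, dtype=float)
    return float(np.mean(diff ** 2))
```

In training, the original and the clothes-swapped image would both pass through the re-ID network, and a term like `consistency_loss` would penalize feature differences between the two, which pushes the model toward cues other than clothing appearance.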
