Wei Zhen Teoh

Salient Facial Features from Humans and Deep Neural Networks

Mar 08, 2020

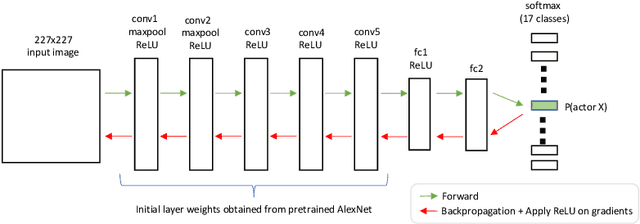

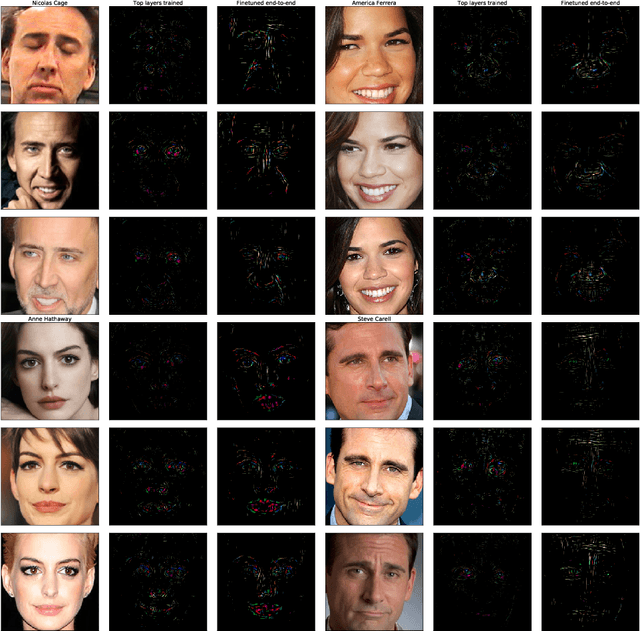

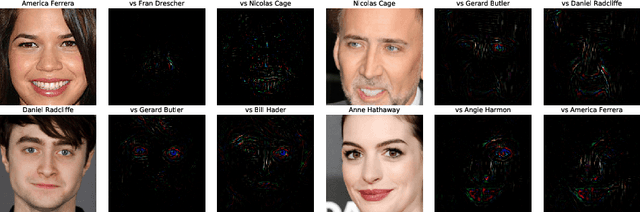

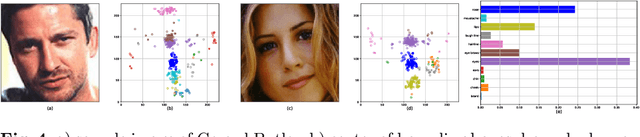

Abstract: In this work, we explore the features that are used by humans and by convolutional neural networks (ConvNets) to classify faces. We use Guided Backpropagation (GB) to visualize the facial features that influence the output of a ConvNet the most when identifying specific individuals; we explore how to best use GB for that purpose. We use a human intelligence task to find out which facial features humans find to be the most important for identifying specific individuals. We explore the differences between the saliency information gathered from humans and from ConvNets. Humans develop biases in employing available information on facial features to discriminate across faces. Studies show these biases are influenced both by neurological development and by each individual's social experience. In recent years the computer vision community has achieved human-level performance in many face processing tasks with deep neural network-based models. These face processing systems are also subject to systematic biases due to model architectural choices and training data distribution.

MelGAN: Generative Adversarial Networks for Conditional Waveform Synthesis

Oct 28, 2019

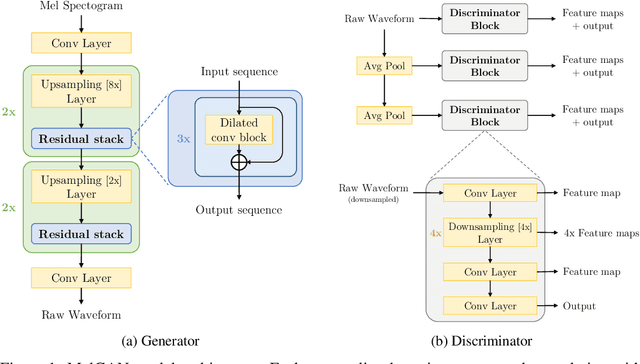

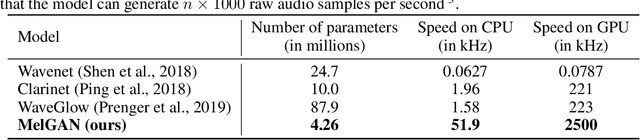

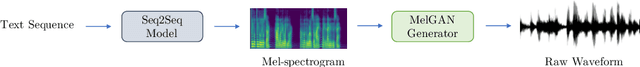

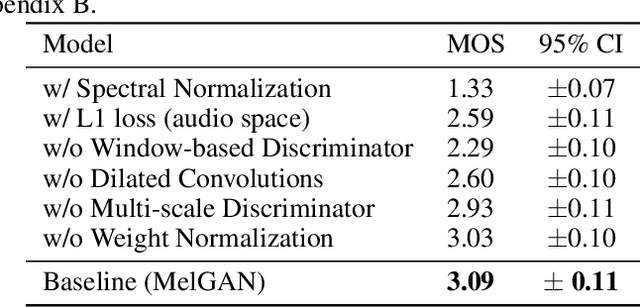

Abstract: Previous works (Donahue et al., 2018a; Engel et al., 2019a) have found that generating coherent raw audio waveforms with GANs is challenging. In this paper, we show that it is possible to train GANs reliably to generate high-quality coherent waveforms by introducing a set of architectural changes and simple training techniques. A subjective evaluation metric (Mean Opinion Score, or MOS) shows the effectiveness of the proposed approach for high-quality mel-spectrogram inversion. To establish the generality of the proposed techniques, we show qualitative results of our model in speech synthesis, music domain translation, and unconditional music synthesis. We evaluate the various components of the model through ablation studies and suggest a set of guidelines for designing general-purpose discriminators and generators for conditional sequence synthesis tasks. Our model is non-autoregressive and fully convolutional, has significantly fewer parameters than competing models, and generalizes to unseen speakers for mel-spectrogram inversion. Our PyTorch implementation runs more than 100x faster than real time on a GTX 1080Ti GPU and more than 2x faster than real time on CPU, without any hardware-specific optimization tricks.
