Shanmeng Sun

Salient Facial Features from Humans and Deep Neural Networks

Mar 08, 2020

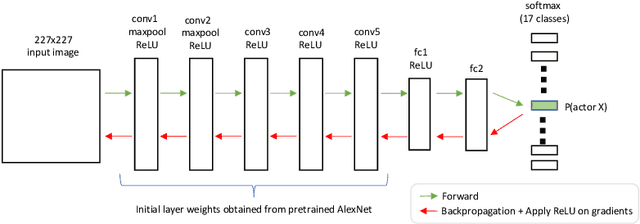

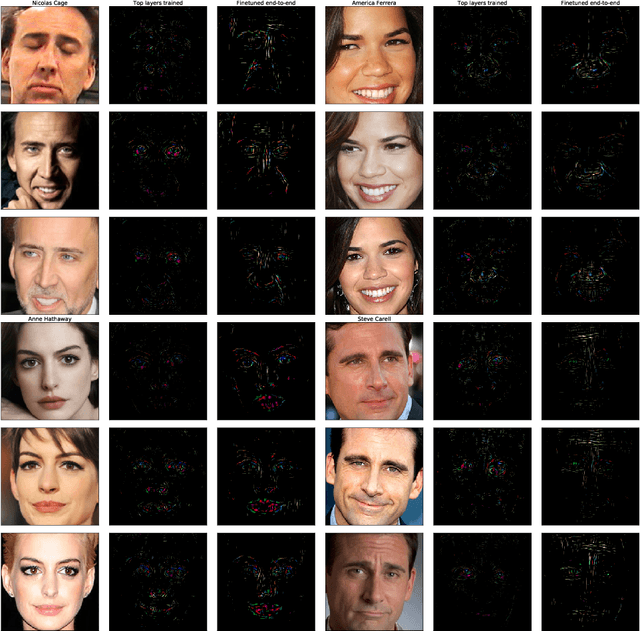

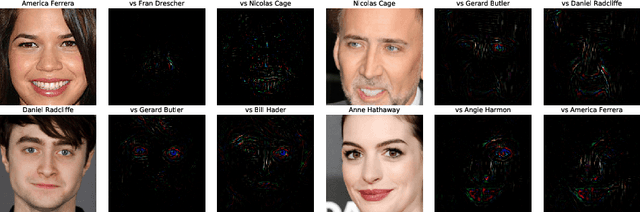

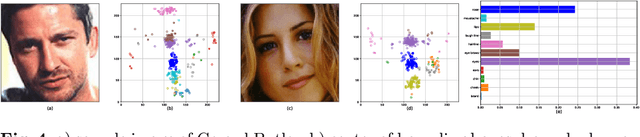

Abstract: In this work, we explore the features that humans and convolutional neural networks (ConvNets) use to classify faces. We use Guided Backpropagation (GB) to visualize the facial features that most strongly influence the output of a ConvNet when it identifies specific individuals, and we explore how best to use GB for that purpose. We use a human intelligence task to find out which facial features humans consider most important for identifying specific individuals. We then explore the differences between the saliency information gathered from humans and from ConvNets. Humans develop biases in how they use the available information on facial features to discriminate between faces. Studies show that these biases are influenced both by neurological development and by each individual's social experience. In recent years, the computer vision community has achieved human-level performance on many face processing tasks with models based on deep neural networks. These face processing systems are also subject to systematic biases arising from model architecture choices and the distribution of the training data.
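For readers unfamiliar with Guided Backpropagation, the rule it applies at each ReLU can be illustrated on a toy network. The sketch below is not the authors' ConvNet or their code: it assumes a tiny fully connected network with one hidden ReLU layer, and the names `guided_backprop`, `W1`, and `w2` are hypothetical. It shows only the core idea: a gradient passes back through a ReLU when the unit was active in the forward pass and, in the guided variant, only if the incoming gradient is also positive.

```python
def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * a for w, a in zip(row, x)) for row in W]


def guided_backprop(x, W1, w2, guided=True):
    """Return (score, saliency) for score = w2 . relu(W1 x).

    Toy illustration only (assumed architecture, not the paper's model).
    With guided=False this is the ordinary gradient of the score w.r.t. x.
    With guided=True, negative gradients are also zeroed at the ReLU.
    """
    # Forward pass: pre-activations, ReLU, then a scalar class score.
    pre = matvec(W1, x)
    hidden = [max(0.0, p) for p in pre]
    score = sum(w * h for w, h in zip(w2, hidden))

    # Backward pass: gradient of the score w.r.t. each hidden activation.
    grad_hidden = list(w2)

    # ReLU backward rule. Plain backprop keeps the gradient where the unit
    # was active (p > 0); the guided rule additionally requires g > 0.
    grad_pre = []
    for g, p in zip(grad_hidden, pre):
        passes = p > 0 and (g > 0 or not guided)
        grad_pre.append(g if passes else 0.0)

    # Propagate back through the linear layer to the input.
    saliency = [
        sum(W1[j][i] * grad_pre[j] for j in range(len(W1)))
        for i in range(len(x))
    ]
    return score, saliency
```

Calling `guided_backprop(x, W1, w2)` and `guided_backprop(x, W1, w2, guided=False)` on the same input shows how the guided rule suppresses negative contributions, which is what tends to make the resulting saliency maps easier to read. In a real ConvNet the same rule would be applied at every ReLU layer.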
