Walter Vinci

HP SCDS, León, Spain

Anomaly Detection in Aeronautics Data with Quantum-compatible Discrete Deep Generative Model

Mar 22, 2023

Abstract: Deep generative learning can be used not only for generating new data with statistical characteristics derived from input data but also for anomaly detection, by separating nominal and anomalous instances based on their reconstruction quality. In this paper, we explore the performance of three unsupervised deep generative models -- variational autoencoders (VAEs) with Gaussian, Bernoulli, and Boltzmann priors -- in detecting anomalies in flight-operations data of commercial flights consisting of multivariate time series. We devised two VAE models with discrete latent variables (DVAEs), one with a factorized Bernoulli prior and one with a restricted Boltzmann machine (RBM) as prior, because of the demand for discrete-variable models in machine-learning applications and because the integration of quantum devices based on two-level quantum systems requires such models. The DVAE with RBM prior, using a relatively simple -- and classically or quantum-mechanically enhanceable -- sampling technique for the evolution of the RBM's negative phase, performed better than the Bernoulli DVAE and on par with the Gaussian model, which has a continuous latent space. Our studies demonstrate the competitiveness of a discrete deep generative model with its Gaussian counterpart on anomaly-detection tasks. Moreover, the DVAE model with RBM prior can be easily integrated with quantum sampling by outsourcing its generative process to measurements of quantum states obtained from a quantum annealer or gate-model device.
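The idea of separating nominal from anomalous instances by reconstruction quality can be sketched in a few lines. This is a minimal illustration, not the paper's pipeline: the reconstructions are hand-written stand-ins for the output of a trained VAE, and the names `reconstruction_error`, `fit_threshold`, and `is_anomalous`, as well as the mean-squared-error score and the quantile threshold, are assumptions chosen for the example.

```python
from statistics import mean


def reconstruction_error(window, reconstruction):
    """Mean squared error between a multivariate time-series window and its
    reconstruction. Both are lists of time steps; each step is a list of features."""
    squared = [
        (x - x_hat) ** 2
        for step, step_hat in zip(window, reconstruction)
        for x, x_hat in zip(step, step_hat)
    ]
    return mean(squared)


def fit_threshold(nominal_errors, quantile=0.9):
    """Pick a cut-off from errors measured on data assumed to be nominal."""
    ordered = sorted(nominal_errors)
    index = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[index]


def is_anomalous(error, threshold):
    """Flag an instance whose reconstruction error exceeds the cut-off."""
    return error > threshold


# Hypothetical errors observed on nominal flights (made-up numbers).
nominal_errors = [0.010, 0.012, 0.009, 0.015, 0.011,
                  0.013, 0.008, 0.014, 0.010, 0.016]
threshold = fit_threshold(nominal_errors)

# One window with two time steps and two features, plus two stand-in
# reconstructions: one faithful, one poor.
window = [[0.0, 1.0], [1.0, 0.0]]
good_reconstruction = [[0.05, 0.95], [0.95, 0.05]]
bad_reconstruction = [[2.0, -1.0], [-1.0, 2.0]]

good_error = reconstruction_error(window, good_reconstruction)
bad_error = reconstruction_error(window, bad_reconstruction)
print(is_anomalous(good_error, threshold), is_anomalous(bad_error, threshold))
```

In practice the threshold would be tuned on held-out data, and the score could equally be a negative log-likelihood rather than a squared error.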

RBM-Flow and D-Flow: Invertible Flows with Discrete Energy Base Spaces

Jan 28, 2021

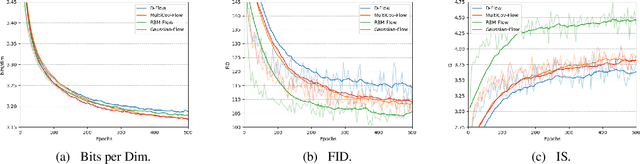

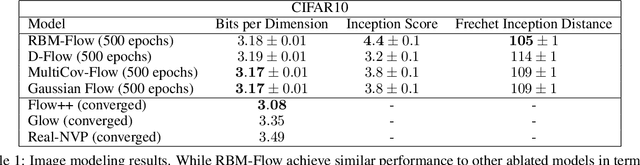

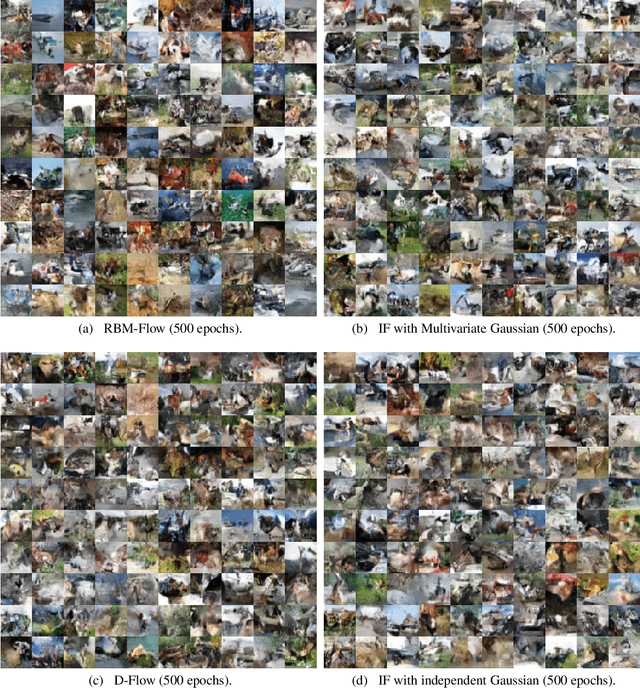

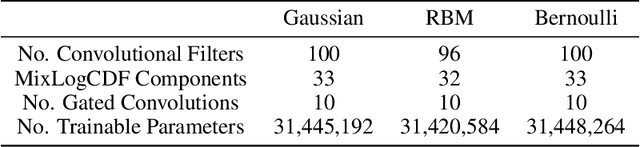

Abstract: Efficient sampling of complex data distributions can be achieved using trained invertible flows (IF), where the model distribution is generated by pushing a simple base distribution through multiple non-linear bijective transformations. However, the iterative nature of the transformations in IFs can limit the approximation to the target distribution. In this paper we seek to mitigate this by implementing RBM-Flow, an IF model whose base distribution is a Restricted Boltzmann Machine (RBM) with a continuous smoothing applied. We show that by using RBM-Flow we are able to improve the quality of samples generated, quantified by the Inception Score (IS) and Fréchet Inception Distance (FID), over baseline models with the same IF transformations, but with less expressive base distributions. Furthermore, we also obtain D-Flow, an IF model with uncorrelated discrete latent variables. We show that D-Flow achieves similar likelihoods and FID/IS scores to those of a typical IF with Gaussian base variables, but with the additional benefit that global features are meaningfully encoded as discrete labels in the latent space.
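For readers unfamiliar with invertible flows, the standard change-of-variables identity behind "pushing a base distribution through bijective transformations" is written out here. The symbols are assumptions introduced for illustration and are not taken from the paper: $p_Z$ is the base density (Gaussian in a typical IF, a smoothed RBM in RBM-Flow), $f_1, \dots, f_K$ are the bijections, $z_0 \sim p_Z$, and $z_k = f_k(z_{k-1})$ with $x = z_K$.

```latex
\log p_X(x) \;=\; \log p_Z(z_0) \;-\; \sum_{k=1}^{K} \log \left| \det \frac{\partial f_k}{\partial z_{k-1}} \right|,
\qquad z_0 = f_1^{-1} \circ \cdots \circ f_K^{-1}(x).
```

Only the first term depends on the base distribution, which is why a more expressive base can change the fit while the transformations stay the same.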

High-Dimensional Similarity Search with Quantum-Assisted Variational Autoencoder

Jun 13, 2020

Abstract: Recent progress in quantum algorithms and hardware indicates the potential importance of quantum computing in the near future. However, finding suitable application areas remains an active area of research. Quantum machine learning is touted as a potential approach to demonstrate quantum advantage within both the gate-model and the adiabatic schemes. For instance, the Quantum-assisted Variational Autoencoder has been proposed as a quantum enhancement to the discrete VAE. We extend previous work and study the real-world applicability of a QVAE by presenting a proof-of-concept for similarity search in large-scale high-dimensional datasets. While exact and fast similarity search algorithms are available for low-dimensional datasets, scaling to high-dimensional data is non-trivial. We show how to construct a space-efficient search index based on the latent space representation of a QVAE. Our experiments show a correlation between the Hamming distance in the embedded space and the Euclidean distance in the original space on the Moderate Resolution Imaging Spectroradiometer (MODIS) dataset. Further, we find real-world speedups compared to linear search and demonstrate memory-efficient scaling to half a billion data points.
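To make the role of the Hamming distance concrete, here is a small sketch of nearest-neighbour lookup over binary latent codes. It is an assumption-laden toy: the codes are written by hand rather than produced by a QVAE encoder, the helper names (`pack_bits`, `hamming`, `nearest_codes`) are hypothetical, and the lookup is a brute-force scan rather than the index structure described in the paper.

```python
def pack_bits(bits):
    """Pack a sequence of 0/1 latent units into one Python int (one bit per unit)."""
    code = 0
    for bit in bits:
        code = (code << 1) | (1 if bit else 0)
    return code


def hamming(a, b):
    """Number of latent units in which two packed codes differ."""
    return bin(a ^ b).count("1")


def nearest_codes(query, index, k=3):
    """Return the k (distance, item_id) pairs closest to the query code.

    Brute-force scan over all stored codes, used here only to show the metric.
    """
    scored = sorted((hamming(query, code), item_id) for item_id, code in index.items())
    return scored[:k]


# Hand-written 8-bit codes standing in for encoder outputs.
index = {
    "a": pack_bits([1, 0, 1, 1, 0, 0, 1, 0]),
    "b": pack_bits([1, 0, 1, 0, 0, 0, 1, 0]),
    "c": pack_bits([0, 1, 0, 0, 1, 1, 0, 1]),
}
query = pack_bits([1, 0, 1, 1, 0, 0, 1, 1])

results = nearest_codes(query, index, k=2)
print(results)  # [(1, 'a'), (2, 'b')]
```

Storing one bit per latent unit is what makes such an index compact; a production system would replace the scan with a structure that avoids touching every code.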

A Path Towards Quantum Advantage in Training Deep Generative Models with Quantum Annealers

Dec 04, 2019

Abstract: The development of quantum-classical hybrid (QCH) algorithms is critical to achieve state-of-the-art computational models. A QCH variational autoencoder (QVAE) was introduced in Ref. [1] by some of the authors of this paper. QVAE consists of a classical auto-encoding structure realized by traditional deep neural networks to perform inference to, and generation from, a discrete latent space. The latent generative process is formalized as thermal sampling from either a quantum or classical Boltzmann machine (QBM or BM). This setup allows quantum-assisted training of deep generative models by physically simulating the generative process with quantum annealers. In this paper, we have successfully employed D-Wave quantum annealers as Boltzmann samplers to perform quantum-assisted, end-to-end training of QVAE. The hybrid structure of QVAE allows us to deploy current-generation quantum annealers in QCH generative models to achieve competitive performance on datasets such as MNIST. The results presented in this paper suggest that commercially available quantum annealers can be deployed, in conjunction with well-crafted classical deep neural networks, to achieve competitive results in unsupervised and semisupervised tasks on large-scale datasets. We also provide evidence that our setup is able to exploit large latent-space (Q)BMs, which develop slowly mixing modes. This expressive latent space results in slow and inefficient classical sampling, and paves the way to achieve quantum advantage with quantum annealing in realistic sampling applications.
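As a point of comparison for the quantum samplers discussed in this abstract, the classical baseline for drawing thermal samples from a restricted Boltzmann machine is block Gibbs sampling. The sketch below assumes the usual energy $E(v, h) = -a \cdot v - b \cdot h - v^\top W h$ with binary units; the function names, the toy weights, and the chain length are illustrative choices, not values from the paper.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sample_hidden(visible, weights, hidden_bias, rng):
    """Sample every hidden unit independently given the visible layer."""
    hidden = []
    for j, bias in enumerate(hidden_bias):
        activation = bias + sum(v * weights[i][j] for i, v in enumerate(visible))
        hidden.append(1 if rng.random() < sigmoid(activation) else 0)
    return hidden


def sample_visible(hidden, weights, visible_bias, rng):
    """Sample every visible unit independently given the hidden layer."""
    visible = []
    for i, bias in enumerate(visible_bias):
        activation = bias + sum(h * weights[i][j] for j, h in enumerate(hidden))
        visible.append(1 if rng.random() < sigmoid(activation) else 0)
    return visible


def gibbs_chain(weights, visible_bias, hidden_bias, steps, seed=0):
    """Run `steps` alternating block updates from a random start; return (v, h)."""
    rng = random.Random(seed)
    visible = [rng.randint(0, 1) for _ in visible_bias]
    hidden = sample_hidden(visible, weights, hidden_bias, rng)
    for _ in range(steps):
        visible = sample_visible(hidden, weights, visible_bias, rng)
        hidden = sample_hidden(visible, weights, hidden_bias, rng)
    return visible, hidden


# Toy RBM with 3 visible and 2 hidden units; weights[i][j] couples v_i and h_j.
weights = [[0.5, -0.3], [-0.2, 0.8], [0.1, 0.4]]
visible_bias = [0.0, 0.1, -0.1]
hidden_bias = [0.2, -0.2]

v, h = gibbs_chain(weights, visible_bias, hidden_bias, steps=50, seed=1)
print(v, h)
```

When the energy landscape has well-separated modes, a chain like this needs many steps to move between them, which is the slow-mixing behaviour the abstract refers to.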

PixelVAE++: Improved PixelVAE with Discrete Prior

Aug 26, 2019

Abstract: Constructing powerful generative models for natural images is a challenging task. PixelCNN models capture details and local information in images very well but have a limited receptive field. Variational autoencoders with a factorial decoder can capture global information easily, but they often fail to reconstruct details faithfully. PixelVAE combines the best features of the two models and constructs a generative model that is able to learn local and global structures. Here we introduce PixelVAE++, a VAE with three types of latent variables and a PixelCNN++ for the decoder. We introduce a novel architecture that reuses a part of the decoder as an encoder. We achieve state-of-the-art performance on binary data sets such as MNIST and Omniglot, and state-of-the-art performance on CIFAR-10 among latent variable models, while keeping the latent variables informative.

Quantum Variational Autoencoder

Feb 15, 2018

Abstract: Variational autoencoders (VAEs) are powerful generative models with the salient ability to perform inference. Here, we introduce a quantum variational autoencoder (QVAE): a VAE whose latent generative process is implemented as a quantum Boltzmann machine (QBM). We show that our model can be trained end-to-end by maximizing a well-defined loss function: a "quantum" lower-bound to a variational approximation of the log-likelihood. We use quantum Monte Carlo (QMC) simulations to train and evaluate the performance of QVAEs. To achieve the best performance, we first create a VAE platform with discrete latent space generated by a restricted Boltzmann machine (RBM). Our model achieves state-of-the-art performance on the MNIST dataset when compared against similar approaches that only involve discrete variables in the generative process. We consider QVAEs with a smaller number of latent units to be able to perform QMC simulations, which are computationally expensive. We show that QVAEs can be trained effectively in regimes where quantum effects are relevant despite training via the quantum bound. Our findings open the way to the use of quantum computers to train QVAEs to achieve competitive performance for generative models. Placing a QBM in the latent space of a VAE leverages the full potential of current and next-generation quantum computers as sampling devices.
