Vladyslav Usenko

Square Root Marginalization for Sliding-Window Bundle Adjustment

Sep 05, 2021

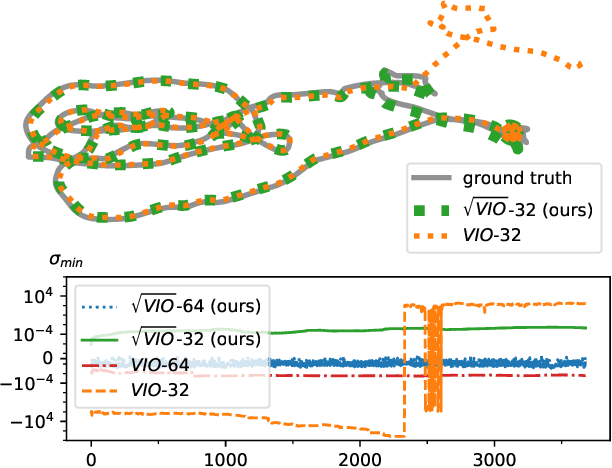

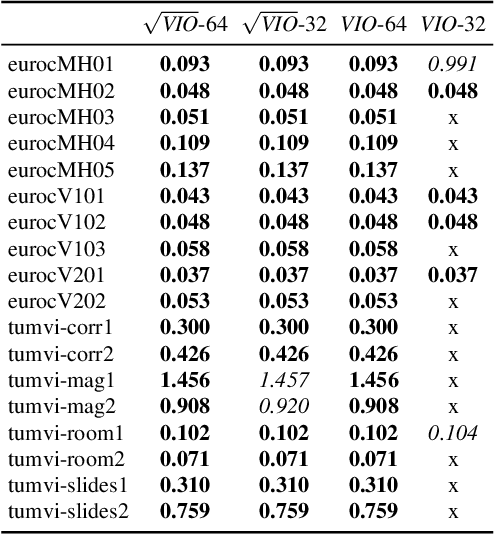

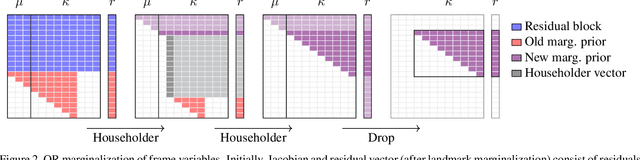

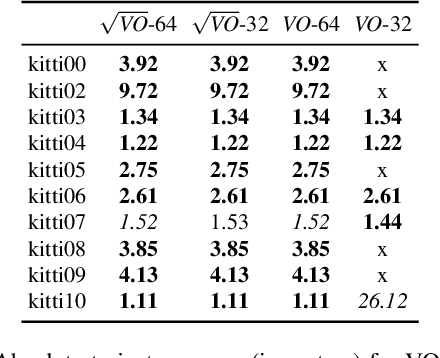

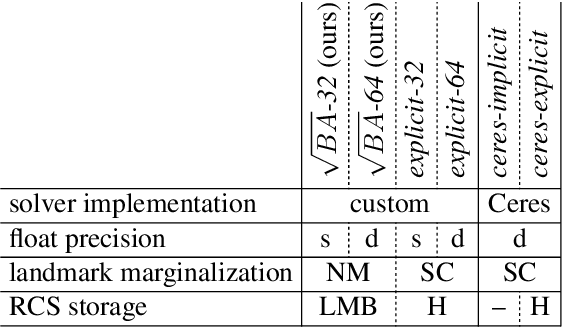

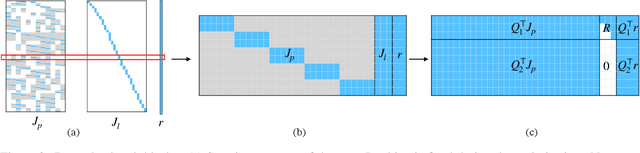

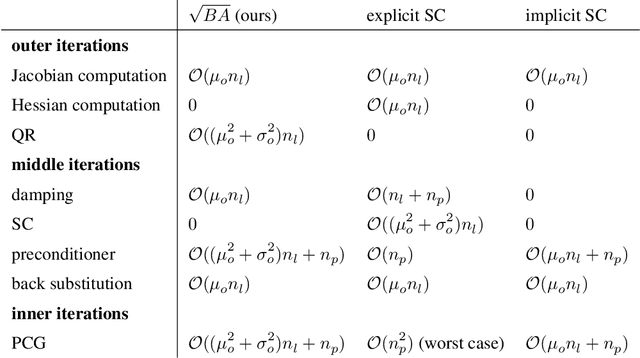

Abstract: In this paper we propose a novel square root sliding-window bundle adjustment suitable for real-time odometry applications. The square root formulation pervades three major aspects of our optimization-based sliding-window estimator: for bundle adjustment we eliminate landmark variables with nullspace projection; to store the marginalization prior we employ a matrix square root of the Hessian; and when marginalizing old poses we avoid forming normal equations and update the square root prior directly with a specialized QR decomposition. We show that the proposed square root marginalization is algebraically equivalent to the conventional use of Schur complement (SC) on the Hessian. Moreover, it elegantly deals with rank-deficient Jacobians, producing a prior equivalent to SC with Moore-Penrose inverse. Our evaluation of visual and visual-inertial odometry on real-world datasets demonstrates that the proposed estimator is 36% faster than the baseline. It furthermore shows that in single precision, conventional Hessian-based marginalization leads to numeric failures and reduced accuracy. We analyse numeric properties of the marginalization prior to explain why our square root form does not suffer from the same effect and therefore entails superior performance.

Square Root Bundle Adjustment for Large-Scale Reconstruction

Mar 30, 2021

Abstract: We propose a new formulation for the bundle adjustment problem which relies on nullspace marginalization of landmark variables by QR decomposition. Our approach, which we call square root bundle adjustment, is algebraically equivalent to the commonly used Schur complement trick, improves the numeric stability of computations, and allows for solving large-scale bundle adjustment problems with single-precision floating-point numbers. We show in real-world experiments with the BAL datasets that even in single precision the proposed solver achieves on average equally accurate solutions compared to Schur complement solvers using double precision. It runs significantly faster, but can require larger amounts of memory on dense problems. The proposed formulation relies on simple linear algebra operations and opens the way for efficient implementations of bundle adjustment on hardware platforms optimized for single-precision linear algebra processing.
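
The stated equivalence between nullspace projection and the Schur complement can be checked numerically on a toy problem. The following is a minimal NumPy sketch, not the authors' implementation: the function names, matrix sizes, and random Jacobians are illustrative assumptions, and the landmark Jacobian `J_l` is assumed to have full column rank.

```python
import numpy as np


def schur_complement_system(J_p, J_l, r):
    """Reduced pose system via the Schur complement on the Hessian."""
    H_pp = J_p.T @ J_p
    H_pl = J_p.T @ J_l
    H_ll = J_l.T @ J_l
    b_p = J_p.T @ r
    b_l = J_l.T @ r
    # Solve with H_ll instead of forming its inverse explicitly.
    H_red = H_pp - H_pl @ np.linalg.solve(H_ll, H_pl.T)
    b_red = b_p - H_pl @ np.linalg.solve(H_ll, b_l)
    return H_red, b_red


def nullspace_projection_system(J_p, J_l, r):
    """Reduced pose system via projection onto the left nullspace of J_l."""
    n_landmark = J_l.shape[1]
    # Complete QR: the trailing columns of Q span the left nullspace of J_l.
    Q, _ = np.linalg.qr(J_l, mode="complete")
    Q2 = Q[:, n_landmark:]
    J_proj = Q2.T @ J_p
    r_proj = Q2.T @ r
    return J_proj.T @ J_proj, J_proj.T @ r_proj


# Hypothetical toy sizes: 8 residual rows, 6 pose columns, 3 landmark columns.
rng = np.random.default_rng(0)
J_p = rng.standard_normal((8, 6))
J_l = rng.standard_normal((8, 3))
r = rng.standard_normal(8)

H_sc, b_sc = schur_complement_system(J_p, J_l, r)
H_ns, b_ns = nullspace_projection_system(J_p, J_l, r)
print(np.allclose(H_sc, H_ns), np.allclose(b_sc, b_ns))
```

The product `J_proj.T @ J_proj` is formed here only to compare against the Schur complement; a square root solver would presumably keep working with the projected Jacobian itself.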

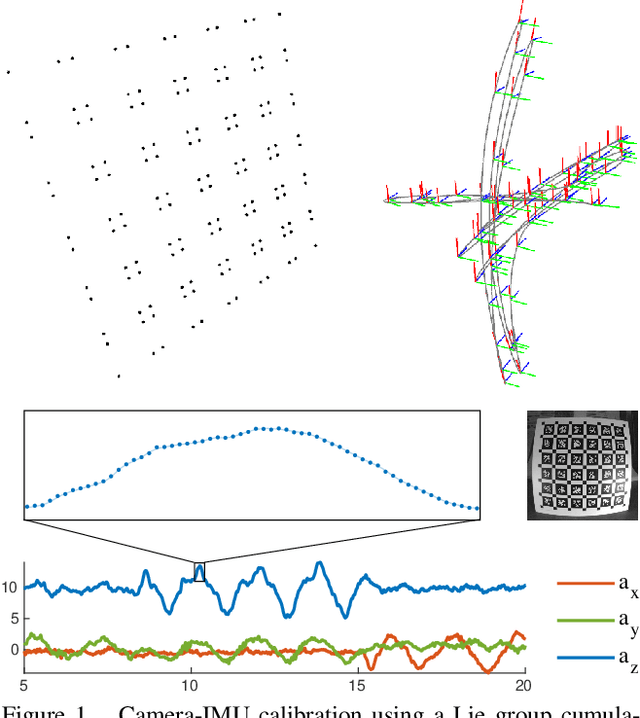

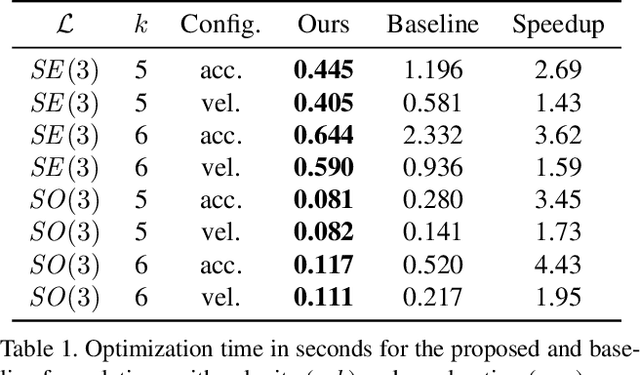

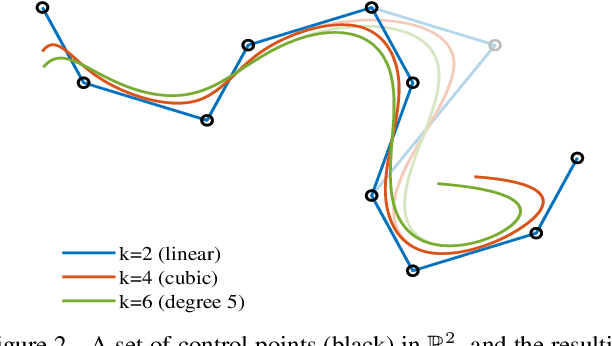

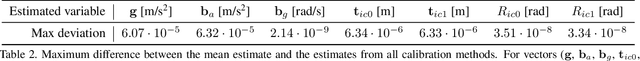

Efficient Derivative Computation for Cumulative B-Splines on Lie Groups

Nov 20, 2019

Abstract: Continuous-time trajectory representation has recently gained popularity for tasks where the fusion of high-frame-rate sensors and multiple unsynchronized devices is required. Lie group cumulative B-splines are a popular way of representing continuous trajectories without singularities. They have been used in near real-time SLAM and odometry systems with IMU, LiDAR, regular, RGB-D and event cameras, as well as for offline calibration. These applications require efficient computation of time derivatives (velocity, acceleration), but all prior works rely on a computationally suboptimal formulation. In this work we present an alternative derivation of time derivatives based on recurrence relations that needs $\mathcal{O}(k)$ instead of $\mathcal{O}(k^2)$ matrix operations (for a spline of order $k$) and results in simple and elegant expressions. While producing the same result, the proposed approach significantly speeds up the trajectory optimization and allows for computing simple analytic derivatives with respect to spline knots. The results presented in this paper pave the way for incorporating continuous-time trajectory representations into more applications where real-time performance is required.

Rolling-Shutter Modelling for Direct Visual-Inertial Odometry

Nov 04, 2019

Abstract: We present a direct visual-inertial odometry (VIO) method which estimates the motion of the sensor setup and sparse 3D geometry of the environment based on measurements from a rolling-shutter camera and an inertial measurement unit (IMU). The visual part of the system performs a photometric bundle adjustment on a sparse set of points. This direct approach does not extract feature points and is able to track not only corners, but any pixels with sufficient gradient magnitude. Neglecting rolling-shutter effects in the visual part severely degrades accuracy and robustness of the system. In this paper, we incorporate a rolling-shutter model into the photometric bundle adjustment that estimates a set of recent keyframe poses and the inverse depth of a sparse set of points. IMU information is accumulated between several frames using measurement preintegration, and is inserted into the optimization as an additional constraint between selected keyframes. For every keyframe we estimate not only the pose but also velocity and biases to correct the IMU measurements. Unlike systems with global-shutter cameras, we use both IMU measurements and rolling-shutter effects of the camera to estimate velocity and biases for every state. Last, we evaluate our system on a novel dataset that contains global-shutter and rolling-shutter images, IMU data and ground-truth poses for ten different sequences, which we make publicly available. Evaluation shows that the proposed method outperforms a system where rolling shutter is not modelled and achieves similar accuracy to the global-shutter method on global-shutter data.

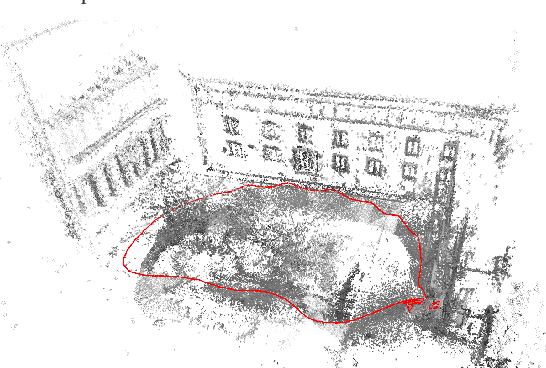

Visual-Inertial Mapping with Non-Linear Factor Recovery

Apr 29, 2019

Abstract: Cameras and inertial measurement units are complementary sensors for ego-motion estimation and environment mapping. Their combination makes visual-inertial odometry (VIO) systems more accurate and robust. For globally consistent mapping, however, combining visual and inertial information is not straightforward. To estimate the motion and geometry with a set of images, large baselines are required. Because of that, most systems operate on keyframes that have large time intervals between each other. Inertial data, on the other hand, quickly degrades with the duration of the intervals, and after several seconds of integration it typically contains little useful information. In this paper, we propose to extract relevant information for visual-inertial mapping from visual-inertial odometry using non-linear factor recovery. We reconstruct a set of non-linear factors that make an optimal approximation of the information on the trajectory accumulated by VIO. To obtain a globally consistent map we combine these factors with loop-closing constraints using bundle adjustment. The VIO factors make the roll and pitch angles of the global map observable, and improve the robustness and the accuracy of the mapping. In experiments on a public benchmark, we demonstrate superior performance of our method over the state-of-the-art approaches.

The Double Sphere Camera Model

Oct 29, 2018

Abstract: Vision-based motion estimation and 3D reconstruction, which have numerous applications (e.g., autonomous driving, navigation systems for airborne devices and augmented reality), are receiving significant research attention. To increase the accuracy and robustness, several researchers have recently demonstrated the benefit of using large field-of-view cameras for such applications. In this paper, we provide an extensive review of existing models for large field-of-view cameras. For each model we provide projection and unprojection functions and the subspace of points that result in valid projection. Then, we propose the Double Sphere camera model, which fits large field-of-view lenses well, is computationally inexpensive and has a closed-form inverse. We evaluate the model using a calibration dataset with several different lenses and compare the models using the metrics that are relevant for Visual Odometry, i.e., reprojection error, as well as computation time for projection and unprojection functions and their Jacobians. We also provide qualitative results and discuss the performance of all models.
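
The projection function and closed-form inverse mentioned in the abstract can be sketched in a few lines. This is an illustrative sketch that follows the standard statement of the Double Sphere model, not the reference implementation: the function names and the intrinsic values (`fx`, `fy`, `cx`, `cy`, `xi`, `alpha`) are hypothetical, and the check for the valid projection region is omitted.

```python
import math


def ds_project(point, fx, fy, cx, cy, xi, alpha):
    """Project a 3D point (x, y, z) to pixel coordinates (u, v)."""
    x, y, z = point
    d1 = math.sqrt(x * x + y * y + z * z)
    z_shifted = xi * d1 + z
    d2 = math.sqrt(x * x + y * y + z_shifted * z_shifted)
    denom = alpha * d2 + (1.0 - alpha) * z_shifted
    return fx * x / denom + cx, fy * y / denom + cy


def ds_unproject(pixel, fx, fy, cx, cy, xi, alpha):
    """Closed-form inverse: map a pixel to a unit-length bearing vector."""
    u, v = pixel
    mx = (u - cx) / fx
    my = (v - cy) / fy
    r2 = mx * mx + my * my
    mz = (1.0 - alpha * alpha * r2) / (
        alpha * math.sqrt(1.0 - (2.0 * alpha - 1.0) * r2) + 1.0 - alpha
    )
    scale = (mz * xi + math.sqrt(mz * mz + (1.0 - xi * xi) * r2)) / (mz * mz + r2)
    return scale * mx, scale * my, scale * mz - xi


# Hypothetical intrinsics and test point, chosen only for illustration.
params = dict(fx=350.0, fy=350.0, cx=512.0, cy=512.0, xi=-0.2, alpha=0.6)
point = (0.3, -0.2, 1.0)

pixel = ds_project(point, **params)
bearing = ds_unproject(pixel, **params)
norm = math.sqrt(sum(c * c for c in point))
print(pixel)
print(bearing, tuple(c / norm for c in point))
```

Unprojecting the projected pixel should recover the original point's direction, normalized to unit length, since depth is lost in projection.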

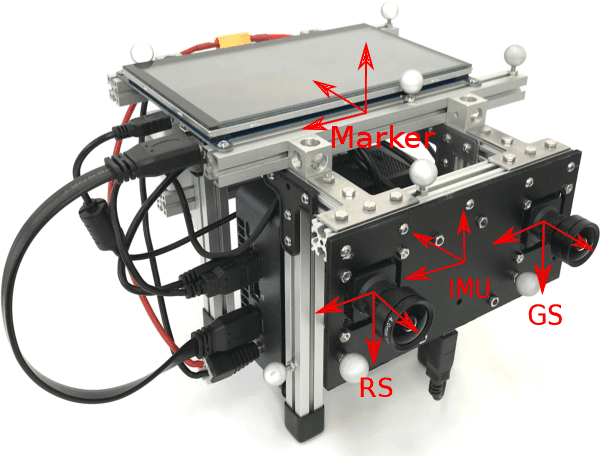

The TUM VI Benchmark for Evaluating Visual-Inertial Odometry

Sep 20, 2018

Abstract: Visual odometry and SLAM methods have a large variety of applications in domains such as augmented reality or robotics. Complementing vision sensors with inertial measurements tremendously improves tracking accuracy and robustness, and thus has spawned large interest in the development of visual-inertial (VI) odometry approaches. In this paper, we propose the TUM VI benchmark, a novel dataset with a diverse set of sequences in different scenes for evaluating VI odometry. It provides camera images with 1024x1024 resolution at 20 Hz, high dynamic range and photometric calibration. An IMU measures accelerations and angular velocities on 3 axes at 200 Hz, while the cameras and IMU sensors are time-synchronized in hardware. For trajectory evaluation, we also provide accurate pose ground truth from a motion capture system at high frequency (120 Hz) at the start and end of the sequences, which we accurately aligned with the camera and IMU measurements. The full dataset with raw and calibrated data is publicly available. We also evaluate state-of-the-art VI odometry approaches on our dataset.

Omnidirectional DSO: Direct Sparse Odometry with Fisheye Cameras

Aug 08, 2018

Abstract: We propose a novel real-time direct monocular visual odometry for omnidirectional cameras. Our method extends direct sparse odometry (DSO) by using the unified omnidirectional model as a projection function, which can be applied to fisheye cameras with a field-of-view (FoV) well above 180 degrees. This formulation allows for using the full area of the input image even with strong distortion, while most existing visual odometry methods can only use a rectified and cropped part of it. Model parameters within an active keyframe window are jointly optimized, including the intrinsic/extrinsic camera parameters, 3D position of points, and affine brightness parameters. Thanks to the wide FoV, image overlap between frames becomes bigger and points are more spatially distributed. Our results demonstrate that our method provides increased accuracy and robustness over state-of-the-art visual odometry algorithms.

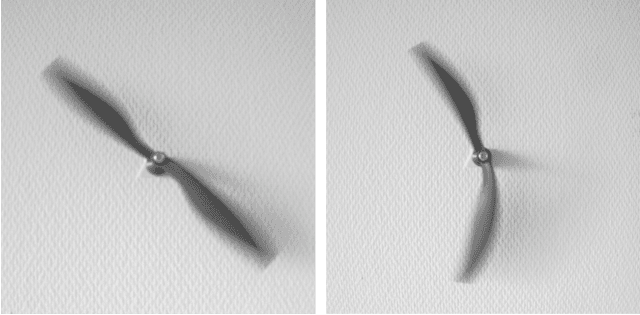

Direct Sparse Odometry with Rolling Shutter

Aug 01, 2018

Abstract: Neglecting the effects of rolling-shutter cameras for visual odometry (VO) severely degrades accuracy and robustness. In this paper, we propose a novel direct monocular VO method that incorporates a rolling-shutter model. Our approach extends direct sparse odometry which performs direct bundle adjustment of a set of recent keyframe poses and the depths of a sparse set of image points. We estimate the velocity at each keyframe and impose a constant-velocity prior for the optimization. In this way, we obtain a near real-time, accurate direct VO method. Our approach achieves improved results on challenging rolling-shutter sequences over state-of-the-art global-shutter VO.

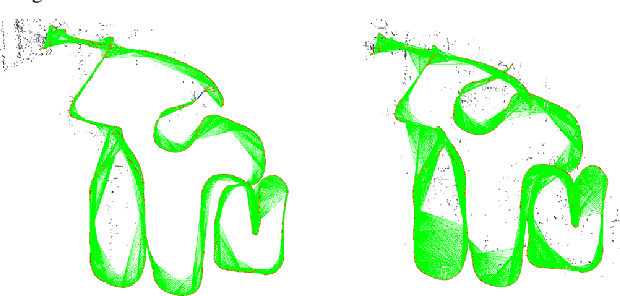

Direct Sparse Visual-Inertial Odometry using Dynamic Marginalization

Apr 16, 2018

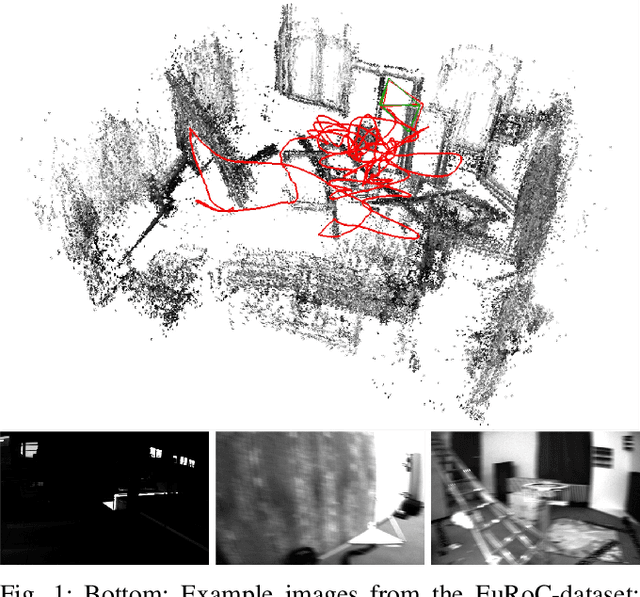

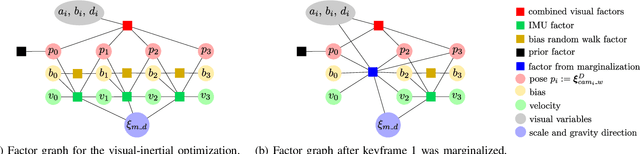

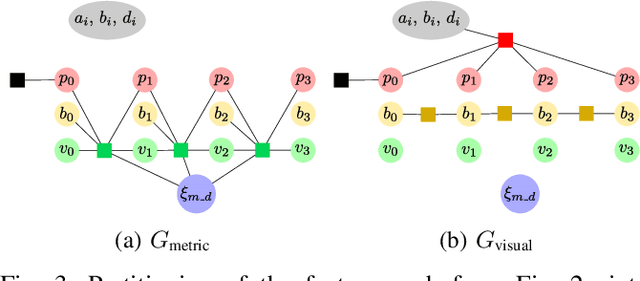

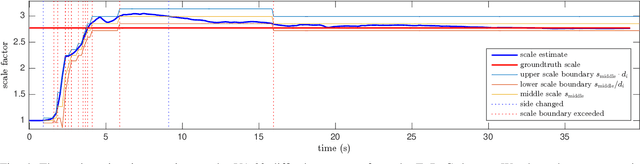

Abstract: We present VI-DSO, a novel approach for visual-inertial odometry, which jointly estimates camera poses and sparse scene geometry by minimizing photometric and IMU measurement errors in a combined energy functional. The visual part of the system performs a bundle-adjustment-like optimization on a sparse set of points, but unlike keypoint-based systems it directly minimizes a photometric error. This makes it possible for the system to track not only corners, but any pixels with large enough intensity gradients. IMU information is accumulated between several frames using measurement preintegration, and is inserted into the optimization as an additional constraint between keyframes. We explicitly include scale and gravity direction in our model and jointly optimize them together with other variables such as poses. As the scale is often not immediately observable using IMU data, this allows us to initialize our visual-inertial system with an arbitrary scale instead of having to delay the initialization until everything is observable. We perform partial marginalization of old variables so that updates can be computed in a reasonable time. In order to keep the system consistent we propose a novel strategy which we call "dynamic marginalization". This technique allows us to use partial marginalization even in cases where the initial scale estimate is far from the optimum. We evaluate our method on the challenging EuRoC dataset, showing that VI-DSO outperforms the state of the art.
