Vishesh Mistry

Liveness Detection Competition -- Noncontact-based Fingerprint Algorithms and Systems (LivDet-2023 Noncontact Fingerprint)

Oct 01, 2023

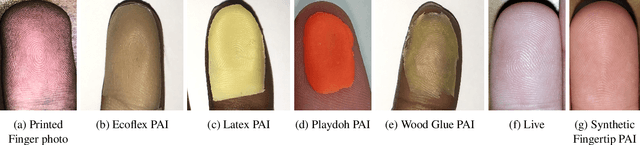

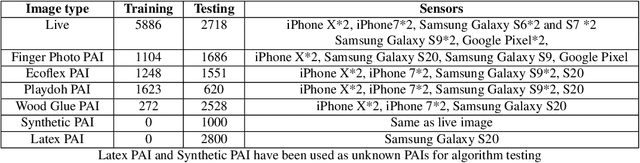

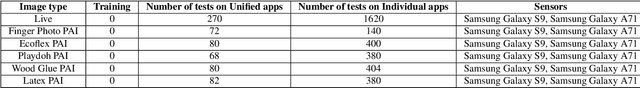

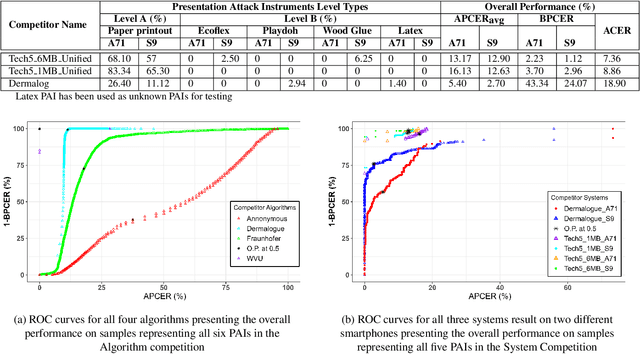

Abstract: Liveness Detection (LivDet) is an international competition series open to academia and industry with the objective to assess and report the state of the art in Presentation Attack Detection (PAD). LivDet-2023 Noncontact Fingerprint is the first edition of the noncontact fingerprint-based PAD competition for algorithms and systems. The competition serves as an important benchmark in noncontact-based fingerprint PAD, offering (a) an independent assessment of the state of the art in noncontact-based fingerprint PAD for algorithms and systems, (b) a common evaluation protocol, which includes finger photos of a variety of Presentation Attack Instruments (PAIs) and live fingers, made available to the biometric research community, and (c) standard algorithm and system evaluation protocols, along with a comparative analysis of state-of-the-art algorithms from academia and industry on both old and new Android smartphones. The winning algorithm achieved an APCER of 11.35% averaged over all PAIs and a BPCER of 0.62%. The winning system achieved an APCER of 13.0.4%, averaged over all PAIs tested over all the smartphones, and a BPCER of 1.68% over all smartphones tested. Four-finger systems that make individual finger-based PAD decisions were also tested. The dataset used for the competition will be available to all researchers as per the data share protocol.
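The abstract reports APCER averaged over PAIs alongside a single BPCER. As a rough illustration of how such figures are typically computed, the sketch below takes APCER as the fraction of attack presentations accepted as live (per PAI, then averaged) and BPCER as the fraction of live presentations rejected. The function name, the input layout, and the PAI names in the usage note are hypothetical and are not taken from the competition protocol.

```python
from collections import defaultdict


def pad_error_rates(samples):
    """Compute per-PAI APCER, mean APCER over PAIs, and BPCER.

    `samples` is an iterable of (label, predicted_live) pairs, where
    `label` is "live" for a bona fide presentation or a PAI name for an
    attack, and `predicted_live` is the detector's boolean decision.
    This input layout is an assumption made for illustration.
    """
    attack_total = defaultdict(int)   # attack presentations seen per PAI
    attack_missed = defaultdict(int)  # attacks wrongly accepted as live
    live_total = 0
    live_rejected = 0                 # live fingers wrongly flagged as attacks

    for label, predicted_live in samples:
        if label == "live":
            live_total += 1
            if not predicted_live:
                live_rejected += 1
        else:
            attack_total[label] += 1
            if predicted_live:
                attack_missed[label] += 1

    apcer_per_pai = {
        pai: attack_missed[pai] / attack_total[pai] for pai in attack_total
    }
    # Average over PAIs (each PAI weighted equally), not over samples.
    mean_apcer = (
        sum(apcer_per_pai.values()) / len(apcer_per_pai) if apcer_per_pai else 0.0
    )
    bpcer = live_rejected / live_total if live_total else 0.0
    return apcer_per_pai, mean_apcer, bpcer
```

For example, with four live samples of which one is rejected, and two hypothetical PAIs `"ecoflex"` (one of two attacks accepted) and `"playdoh"` (no attacks accepted), the function returns a mean APCER of 0.25 and a BPCER of 0.25. Averaging per PAI rather than per sample keeps a heavily sampled PAI from dominating the reported figure.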

AdvBiom: Adversarial Attacks on Biometric Matchers

Jan 10, 2023

Abstract: With the advent of deep learning models, face recognition systems have achieved impressive recognition rates. The workhorses behind this success are Convolutional Neural Networks (CNNs) and the availability of large training datasets. However, we show that small human-imperceptible changes to face samples can evade most prevailing face recognition systems. Even more alarming is the fact that the same generator can be extended to other traits in the future. In this work, we present how such a generator can be trained and also extended to other biometric modalities, such as fingerprint recognition systems.

Fingerprint Synthesis: Search with 100 Million Prints

Dec 16, 2019

Abstract: Evaluation of large-scale fingerprint search algorithms has been limited due to the lack of publicly available datasets. A solution to this problem is to synthesize a dataset of fingerprints with characteristics similar to those of real fingerprints. We propose a Generative Adversarial Network (GAN) to synthesize a fingerprint dataset consisting of 100 million fingerprint images. In comparison to published methods, our approach incorporates an identity loss which guides the generator to synthesize a diverse set of fingerprints corresponding to more distinct identities. To demonstrate that the characteristics of our synthesized fingerprints are similar to those of real fingerprints, we show that (i) the NFIQ quality value distribution of the synthetic fingerprints follows the corresponding distribution of real fingerprints and (ii) the synthetic fingerprints are more distinct than existing synthetic fingerprints (and more closely align with the distinctiveness of real fingerprints). We use our synthesis algorithm to generate 100 million fingerprint images in 17.5 hours on 100 Tesla K80 GPUs when executed in parallel. Finally, we report, for the first time in the open literature, search accuracy (DeepPrint rank-1 accuracy of 91.4%) against a gallery of 100 million fingerprint images (using 2,000 NIST SD4 rolled prints as the queries).
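The rank-1 accuracy quoted above is the fraction of query prints whose top-scoring gallery candidate has the correct identity. The sketch below illustrates that metric on toy data. It assumes each print is represented by a fixed-length embedding compared with cosine similarity; the matcher details are not given in the abstract, so the scoring function, the function names, and the data layout here are illustrative assumptions only.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank1_accuracy(queries, gallery):
    """Fraction of queries whose best-scoring gallery entry shares their identity.

    `queries` and `gallery` are lists of (identity, embedding) pairs.
    This layout is hypothetical and chosen only for illustration.
    """
    if not queries or not gallery:
        raise ValueError("queries and gallery must both be non-empty")
    hits = 0
    for query_id, query_vec in queries:
        # Exhaustive search: score the query against every gallery entry.
        best_id, _ = max(gallery, key=lambda item: cosine(query_vec, item[1]))
        if best_id == query_id:
            hits += 1
    return hits / len(queries)
```

With a three-entry gallery and three queries of which two retrieve their own identity first, `rank1_accuracy` returns 2/3. An exhaustive scan like this is linear in gallery size per query, so a gallery of 100 million prints would in practice call for batched or indexed search rather than this loop.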

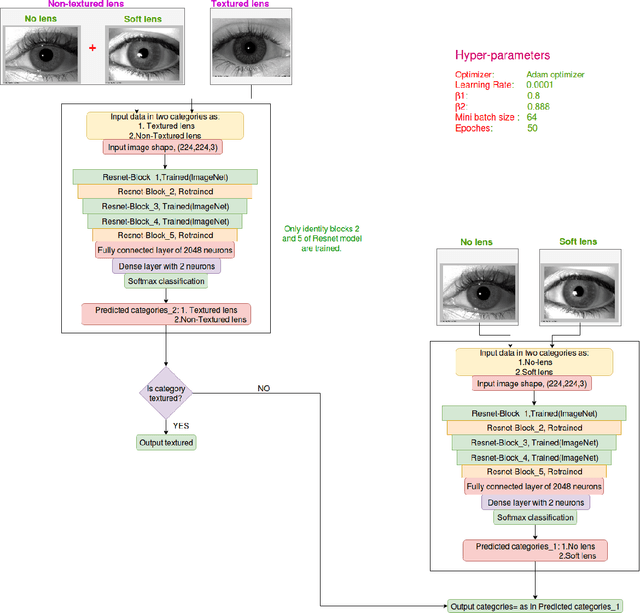

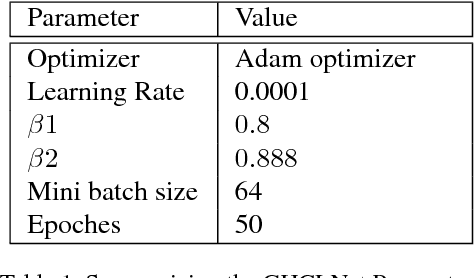

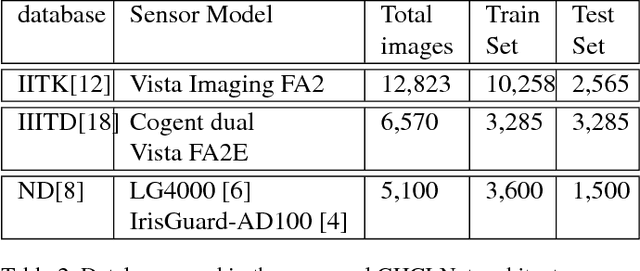

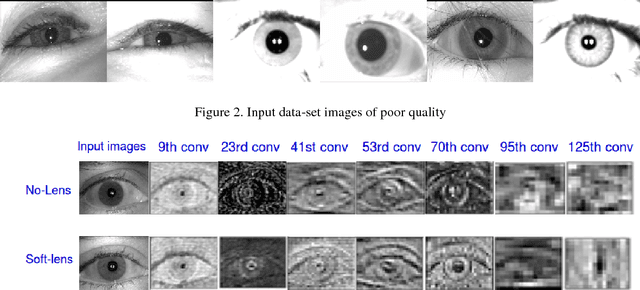

GHCLNet: A Generalized Hierarchically tuned Contact Lens detection Network

Oct 14, 2017

Abstract: The iris is one of the best biometric modalities owing to its complex, unique and stable structure. However, it can still be spoofed using fabricated eyeballs and contact lenses. Accurate identification of contact lenses is a must for reliable performance of any biometric authentication system based on this modality. In this paper, we present a novel approach for detecting contact lenses using a Generalized Hierarchically tuned Contact Lens detection Network (GHCLNet). We propose a hierarchical architecture for three-class oculus classification, namely: no lens, soft lens and cosmetic lens. Our network architecture is inspired by the ResNet-50 model. The network works on raw input iris images without any pre-processing or segmentation requirement, which is one of its main strengths. We have performed extensive experimentation on two publicly available datasets, namely (1) IIIT-D and (2) ND, and on the IIT-K dataset (not publicly available) to ensure the generalizability of our network. The results of the proposed architecture are quite promising and outperform the available state-of-the-art lens detection algorithms.
