Virgile Mison

Adversarial recovery of agent rewards from latent spaces of the limit order book

Dec 09, 2019

Abstract: Inverse reinforcement learning has proved its ability to explain state-action trajectories of expert agents by recovering their underlying reward functions in increasingly challenging environments. Recent advances in adversarial learning have extended inverse RL to applications with non-stationary environment dynamics unknown to the agents and arbitrary structures of reward functions, and have improved the handling of the ambiguities inherent to the ill-posed nature of inverse RL. This is particularly relevant in real-time applications in stochastic environments involving risk, such as volatile financial markets. Moreover, recent work on simulation of complex environments enables learning algorithms to engage with real market data through simulations of its latent space representations, avoiding a costly exploration of the original environment. In this paper, we explore whether adversarial inverse RL algorithms can be adapted and trained within such latent space simulations from real market data, while maintaining their ability to recover agent rewards that are robust to variations in the underlying dynamics and to transfer them to new regimes of the original environment.

Sensitivity based Neural Networks Explanations

Dec 03, 2018

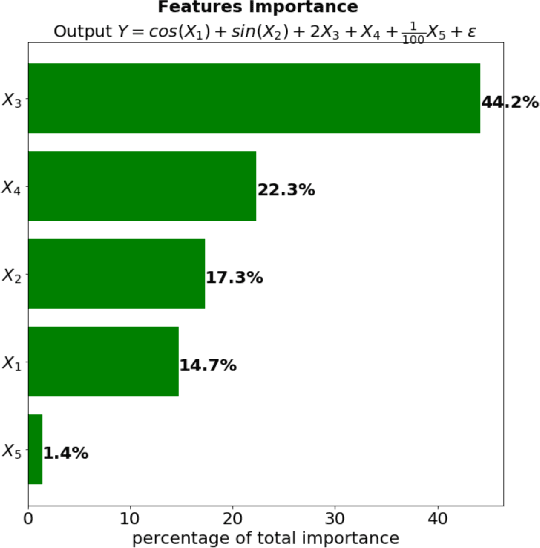

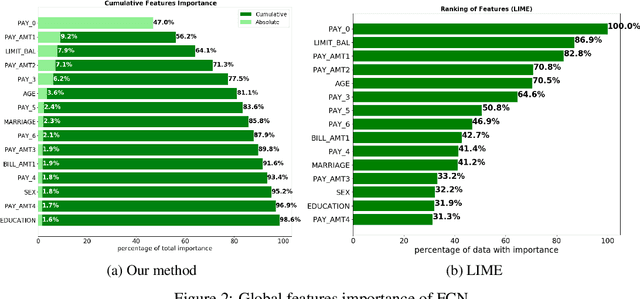

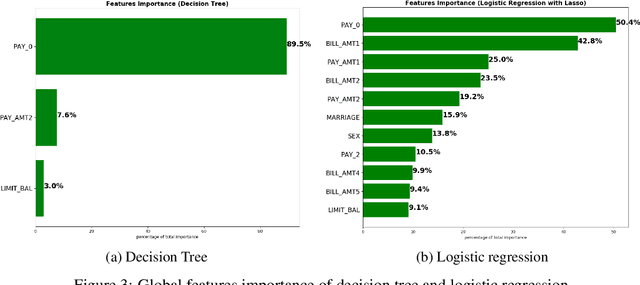

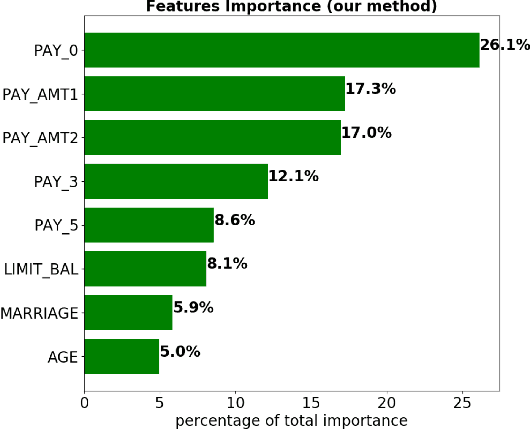

Abstract: Although neural networks can achieve very high predictive performance on various tasks such as image recognition or natural language processing, they are often considered opaque "black boxes". The difficulty of interpreting the predictions of a neural network often prevents its use in fields where explainability is important, such as the financial industry, where regulators and auditors often insist on this aspect. In this paper, we present a way to assess the relative importance of the input features of a neural network based on the sensitivity of the model output with respect to its input. This method is fast to compute, can provide both global and local levels of explanation, and is applicable to many types of neural network architectures. We illustrate the performance of this method on both synthetic and real data and compare it with other interpretation techniques. This method is implemented in an open-source Python package that allows its users to easily generate and visualize explanations for their neural networks.
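The idea of ranking input features by the sensitivity of the model output can be illustrated with a minimal sketch. It is not the paper's package or its API: the function names `local_sensitivities` and `global_importance` are hypothetical, the derivative is approximated by central finite differences rather than computed by the authors' method, the root-mean-square aggregation is an assumed choice, and the "network" is a toy with fixed random weights.

```python
import numpy as np

def local_sensitivities(predict, X, eps=1e-4):
    """Central finite-difference estimate of d predict / d x_j at each row of X.

    Assumption: `predict` maps an (n, d) array to an (n,) array of outputs.
    """
    X = np.asarray(X, dtype=float)
    grads = np.zeros_like(X)
    for j in range(X.shape[1]):
        step = np.zeros(X.shape[1])
        step[j] = eps
        grads[:, j] = (predict(X + step) - predict(X - step)) / (2 * eps)
    return grads

def global_importance(grads):
    """Aggregate local sensitivities into one score per feature.

    Assumed aggregation: root mean square over samples, normalized to sum to 1.
    """
    rms = np.sqrt(np.mean(grads ** 2, axis=0))
    total = rms.sum()
    return rms / total if total > 0 else rms

# Toy stand-in for a trained network: one tanh hidden layer, fixed weights.
rng = np.random.default_rng(0)
W1 = rng.normal(size=(3, 8))
W1[2, :] = 0.0  # the third input feature is deliberately ignored
w2 = rng.normal(size=8)

def toy_network(X):
    return np.tanh(X @ W1) @ w2

X = rng.normal(size=(200, 3))
local = local_sensitivities(toy_network, X)   # local explanation: one row per sample
importance = global_importance(local)          # global explanation: one score per feature
```

Each row of `local` explains a single prediction, while `importance` summarizes the whole dataset. The ignored third feature receives a score of essentially zero, which is the behavior a sensitivity-based ranking is expected to show.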
