Varun Shenoy

A Novel Domain Adaptation Framework for Medical Image Segmentation

Oct 11, 2018

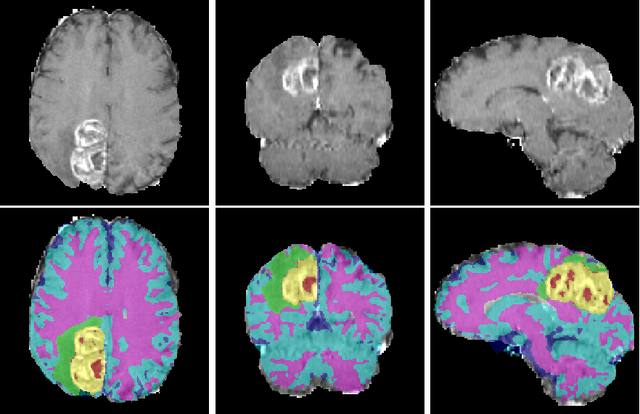

Abstract: We propose a segmentation framework that uses deep neural networks and introduce two innovations. First, we describe a biophysics-based domain adaptation method. Second, we propose an automatic method to segment white matter, gray matter, and cerebrospinal fluid in addition to tumorous tissue. For the first innovation, we use a domain adaptation framework that combines a novel multispecies biophysical tumor growth model with a generative adversarial model to create realistic-looking synthetic multimodal MR images with known segmentation. For the second innovation, we propose an automatic approach that enriches the available segmentation data by computing the segmentation for healthy tissues. This segmentation, which is done using diffeomorphic image registration between the BraTS training data and a set of prelabeled atlases, provides more information for training and reduces the class imbalance problem. Our overall approach is not specific to any particular neural network and can be used in conjunction with existing solutions. We demonstrate the performance improvement using a 2D U-Net for the BraTS'18 segmentation challenge. Our biophysics-based domain adaptation achieves better results than the existing state-of-the-art GAN model used to create synthetic data for training.
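The class imbalance point in the abstract can be illustrated with a small sketch. The voxel counts below are made up for illustration and are not taken from BraTS; the helper `class_fractions` and the label names are hypothetical. The sketch only shows how splitting a single dominant "background" class into healthy-tissue classes lowers the share of the largest class.

```python
from collections import Counter


def class_fractions(labels):
    """Return the fraction of voxels assigned to each class label."""
    counts = Counter(labels)
    total = len(labels)
    return {name: count / total for name, count in counts.items()}


# Toy flattened label map (made-up numbers): only tumor is annotated,
# so every other voxel falls into one large background class.
tumor_only = ["background"] * 90 + ["tumor"] * 10

# The same 100 voxels after healthy-tissue labels are added
# (hypothetical split; a real split would come from atlas registration).
enriched = (
    ["background"] * 40
    + ["white_matter"] * 25
    + ["gray_matter"] * 20
    + ["csf"] * 5
    + ["tumor"] * 10
)

before = class_fractions(tumor_only)
after = class_fractions(enriched)

print("largest class share before:", max(before.values()))
print("largest class share after: ", max(after.values()))
```

The tumor share is unchanged in this toy case; what changes is that the remaining voxels carry informative labels instead of one undifferentiated class.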

Deepwound: Automated Postoperative Wound Assessment and Surgical Site Surveillance through Convolutional Neural Networks

Jul 11, 2018

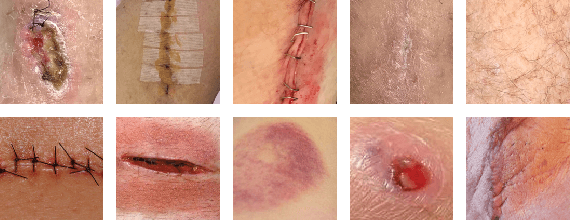

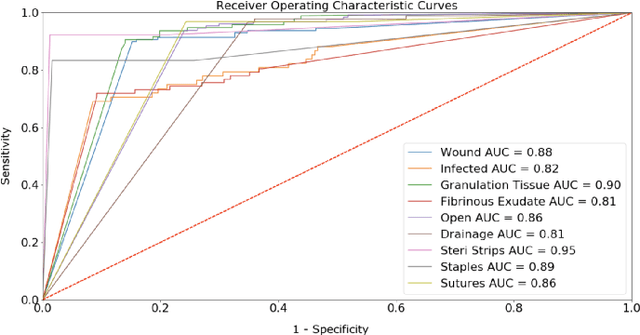

Abstract: Postoperative wound complications are a significant cause of expense for hospitals, doctors, and patients. Hence, an effective method to diagnose the onset of wound complications is strongly desired. Algorithmically classifying wound images is a difficult task due to the variability in the appearance of wound sites. Convolutional neural networks (CNNs), a subgroup of artificial neural networks that have shown great promise in analyzing visual imagery, can be leveraged to categorize surgical wounds. We present a multi-label CNN ensemble, Deepwound, trained to classify wound images using only image pixels and corresponding labels as inputs. Our final computational model can accurately identify the presence of nine labels: drainage, fibrinous exudate, granulation tissue, surgical site infection, open wound, staples, steri strips, and sutures. Our model achieves receiver operating characteristic (ROC) area under the curve (AUC) scores, sensitivity, specificity, and F1 scores superior to prior work in this area. Because of their increasing ubiquity, smartphones provide a means to deliver accessible wound care. Paired with deep neural networks, they offer the capability to provide clinical insight to assist surgeons during postoperative care. We also present a mobile application frontend to Deepwound that assists patients in tracking their wound and surgical recovery from the comfort of their home.
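As a rough illustration of multi-label ensemble prediction, the sketch below averages per-label probabilities from several models and thresholds each label independently. The abstract does not say how Deepwound combines its ensemble members, so the averaging rule, the 0.5 threshold, the function name `ensemble_predict`, and the probability values are all assumptions. The label list uses the names spelled out in the abstract.

```python
# Label names as listed in the abstract.
LABELS = [
    "drainage",
    "fibrinous exudate",
    "granulation tissue",
    "surgical site infection",
    "open wound",
    "staples",
    "steri strips",
    "sutures",
]


def ensemble_predict(member_probs, threshold=0.5):
    """Average per-label probabilities across ensemble members.

    member_probs: one list per model, each holding one probability per
    label (for example, sigmoid outputs). Averaging and the threshold
    are assumed choices, not taken from the paper.
    Returns (mean probabilities, labels whose mean reaches the threshold).
    """
    n_members = len(member_probs)
    means = [
        sum(probs[i] for probs in member_probs) / n_members
        for i in range(len(LABELS))
    ]
    present = [label for label, m in zip(LABELS, means) if m >= threshold]
    return means, present


# Made-up outputs from two hypothetical ensemble members for one image.
model_a = [0.1, 0.2, 0.1, 0.05, 0.3, 0.9, 0.2, 0.8]
model_b = [0.2, 0.1, 0.3, 0.15, 0.1, 0.7, 0.4, 0.6]

means, present = ensemble_predict([model_a, model_b])
print(present)
```

Unlike single-label classification with a softmax, each label is decided on its own, so an image can carry several labels at once or none.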
