Vandad Davoodnia

UPose3D: Uncertainty-Aware 3D Human Pose Estimation with Cross-View and Temporal Cues

Apr 23, 2024

Abstract: We introduce UPose3D, a novel approach for multi-view 3D human pose estimation that addresses challenges in accuracy and scalability. Our method advances existing pose estimation frameworks by improving robustness and flexibility without requiring direct 3D annotations. At the core of our method, a pose compiler module refines the predictions of a single-image 2D keypoint estimator by leveraging temporal and cross-view information. Our novel cross-view fusion strategy scales to any number of cameras, while our synthetic data generation strategy ensures generalization across diverse actors, scenes, and viewpoints. Finally, UPose3D leverages the prediction uncertainty of both the 2D keypoint estimator and the pose compiler module. This provides robustness to outliers and noisy data, resulting in state-of-the-art performance in out-of-distribution settings. In in-distribution settings, UPose3D rivals methods that rely on 3D-annotated data while achieving state-of-the-art results among methods that rely only on 2D supervision.
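
UPose3D's pose compiler and fusion are learned, so the sketch below does not reproduce them. It only illustrates the general idea of uncertainty-aware cross-view fusion with a classical technique swapped in for illustration, inverse-variance weighting, assuming each camera yields a 3D estimate of one joint plus a scalar variance. The function name `fuse_views` and its inputs are hypothetical.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def fuse_views(estimates: List[Point3D], variances: List[float]) -> Tuple[Point3D, float]:
    """Fuse per-view 3D estimates of one joint by inverse-variance weighting.

    Views with lower predicted variance (higher confidence) receive larger
    weights, and the loop works for any number of cameras.
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance per estimate")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = tuple(
        sum(w * p[axis] for w, p in zip(weights, estimates)) / total
        for axis in range(3)
    )
    # Variance of the weighted mean, assuming independent per-view errors.
    fused_variance = 1.0 / total
    return fused, fused_variance
```

For example, `fuse_views([(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)], [1.0, 100.0])` keeps the fused point close to the confident first view, which is the sense in which uncertainty weighting adds robustness to outlier views.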

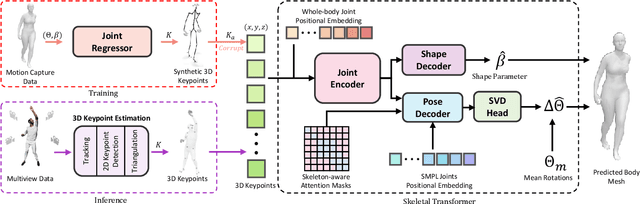

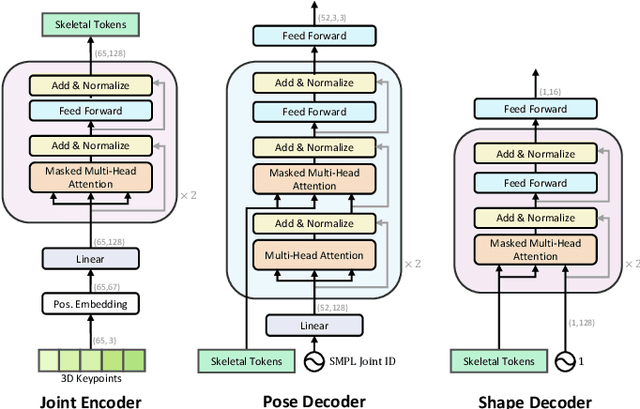

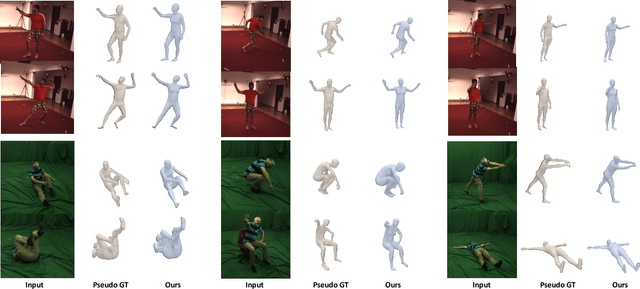

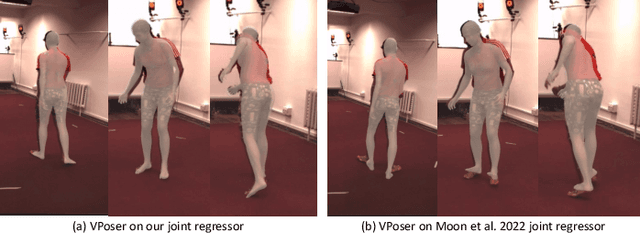

SkelFormer: Markerless 3D Pose and Shape Estimation using Skeletal Transformers

Apr 19, 2024

Abstract: We introduce SkelFormer, a novel markerless motion capture pipeline for multi-view human pose and shape estimation. Our method first uses off-the-shelf 2D keypoint estimators, pre-trained on large-scale in-the-wild data, to obtain 3D joint positions. Next, we design a regression-based inverse-kinematic skeletal transformer that maps these joint positions to pose and shape representations, even from heavily noisy observations. This module integrates prior knowledge about the pose space and infers the full pose state at runtime. Separating the 3D keypoint detection and inverse-kinematic problems, together with the expressive representations learned by our skeletal transformer, improves the generalization of our method to unseen noisy data. We evaluate our method on three public datasets in both in-distribution and out-of-distribution settings and observe strong performance relative to prior work. Moreover, ablation experiments demonstrate the contribution of each module in our architecture. Finally, we study how our method handles noise and heavy occlusions and observe considerable robustness compared with other solutions.
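
SkelFormer learns the inverse-kinematic mapping with a transformer. As a much simpler, hypothetical illustration of what "inverse kinematics from joint positions" means, the sketch below analytically recovers bone lengths (a shape-like quantity) and an elbow angle (a pose-like quantity) for a single arm chain. Unlike the method described above, it performs no denoising and uses no learned pose prior; the function names are assumptions.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _norm(v: Vec3) -> float:
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def arm_ik(shoulder: Vec3, elbow: Vec3, wrist: Vec3) -> Dict[str, float]:
    """Recover bone lengths and elbow flexion from three 3D joint positions."""
    upper = _sub(elbow, shoulder)  # shoulder -> elbow bone vector
    lower = _sub(wrist, elbow)     # elbow -> wrist bone vector
    l_upper, l_lower = _norm(upper), _norm(lower)
    cos_angle = sum(u * v for u, v in zip(upper, lower)) / (l_upper * l_lower)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding outside [-1, 1]
    flexion = math.degrees(math.acos(cos_angle))  # 0 degrees means a fully straight arm
    return {"upper_arm": l_upper, "forearm": l_lower, "elbow_flexion_deg": flexion}
```

With noisy keypoints the recovered bone lengths would fluctuate from frame to frame, which hints at why a learned module with a pose prior is attractive for this step.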

Human Pose Estimation from Ambiguous Pressure Recordings with Spatio-temporal Masked Transformers

Mar 10, 2023

Abstract: Despite the impressive performance of vision-based pose estimators, they generally perform poorly under adverse visual conditions and often fail to satisfy customers' privacy demands. As a result, researchers have begun to study tactile sensing systems as an alternative. However, these systems suffer from noisy and ambiguous recordings. To tackle this problem, we propose a novel solution for pose estimation from ambiguous pressure data. Our method comprises a spatio-temporal vision transformer with an encoder-decoder architecture. Detailed experiments on two popular public datasets reveal that our model outperforms existing solutions in the area. Moreover, we observe that increasing the number of temporal crops in the early stages of the network improves performance, and that pre-training the network in a self-supervised setting with a masked auto-encoder approach further improves the results.
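
The abstract does not give the masking details. The sketch below shows the generic masked auto-encoder recipe applied to spatio-temporal pressure patches, assuming non-overlapping tubelets and a uniform random mask; the function names, the frame and patch sizes, and the 75% mask ratio in the usage note are illustrative assumptions, not values from the paper.

```python
import random
from typing import List, Tuple


def tubelet_indices(frames: int, height: int, width: int,
                    t_patch: int, s_patch: int) -> List[Tuple[int, int, int]]:
    """Enumerate (t, y, x) origins of non-overlapping spatio-temporal patches."""
    return [
        (t, y, x)
        for t in range(0, frames - t_patch + 1, t_patch)
        for y in range(0, height - s_patch + 1, s_patch)
        for x in range(0, width - s_patch + 1, s_patch)
    ]


def random_mask(num_patches: int, mask_ratio: float,
                seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split patch ids into (visible, masked) lists for masked auto-encoder pre-training.

    The encoder would see only the visible patches; the decoder would be
    trained to reconstruct the masked ones.
    """
    rng = random.Random(seed)
    ids = list(range(num_patches))
    rng.shuffle(ids)
    num_masked = int(round(mask_ratio * num_patches))
    return sorted(ids[num_masked:]), sorted(ids[:num_masked])
```

For instance, 8 frames of a 64×32 pressure grid cut into 2×8×8 tubelets give 128 patches, and a 0.75 mask ratio hides 96 of them, leaving 32 for the encoder.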

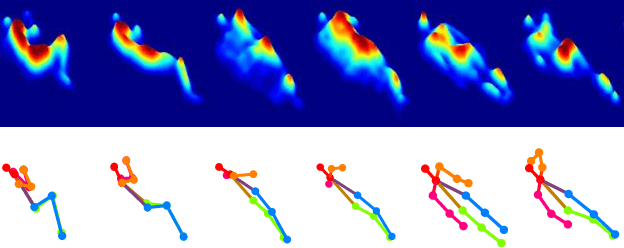

Estimating Pose from Pressure Data for Smart Beds with Deep Image-based Pose Estimators

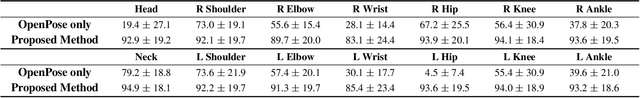

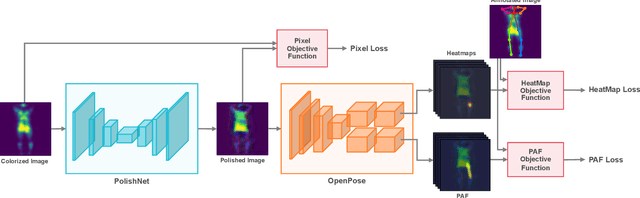

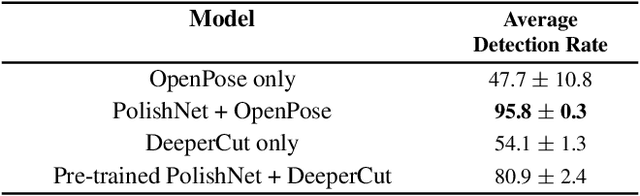

Jun 13, 2022

Abstract: In-bed pose estimation has shown value in fields such as hospital patient monitoring, sleep studies, and smart homes. In this paper, we explore different strategies for detecting body pose from highly ambiguous pressure data with the aid of pre-existing pose estimators. We examine the performance of pre-trained pose estimators by using them either directly or after re-training them on two pressure datasets. We also explore strategies that use a learnable pre-processing domain adaptation step, which transforms the vague pressure maps into a representation closer to the expected input space of general-purpose pose estimation modules. To this end, we use a fully convolutional network with multiple scales to provide the pose-specific characteristics of the pressure maps to the pre-trained pose estimation module. Our complete analysis of the different approaches shows that combining the learnable pre-processing module with re-training pre-existing image-based pose estimators on the pressure data overcomes issues such as highly vague pressure points and achieves very high pose estimation accuracy.

* The version of record of this article, first published in Applied Intelligence, is available online at the publisher's website: https://doi.org/10.1007/s10489-021-02418-y. arXiv admin note: substantial text overlap with arXiv:1908.08919
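
The multi-scale network itself is learned and is not reproduced here. The sketch below only illustrates the multi-scale idea by building a resolution pyramid of a pressure map with 2×2 average pooling, the kind of multi-resolution view a multi-scale fully convolutional network can process; the pooling choice and function names are assumptions.

```python
from typing import List

Grid = List[List[float]]


def avg_pool2x(grid: Grid) -> Grid:
    """Downsample a 2D map by averaging non-overlapping 2x2 blocks (odd edges are dropped)."""
    rows, cols = len(grid) // 2, len(grid[0]) // 2
    return [
        [
            (grid[2 * r][2 * c] + grid[2 * r][2 * c + 1]
             + grid[2 * r + 1][2 * c] + grid[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def pyramid(grid: Grid, levels: int) -> List[Grid]:
    """Return [full resolution, 1/2, 1/4, ...] versions of a pressure map."""
    out = [grid]
    for _ in range(levels - 1):
        out.append(avg_pool2x(out[-1]))
    return out
```

Coarser levels smooth out isolated noisy sensor readings, while the full-resolution level keeps fine contact detail.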

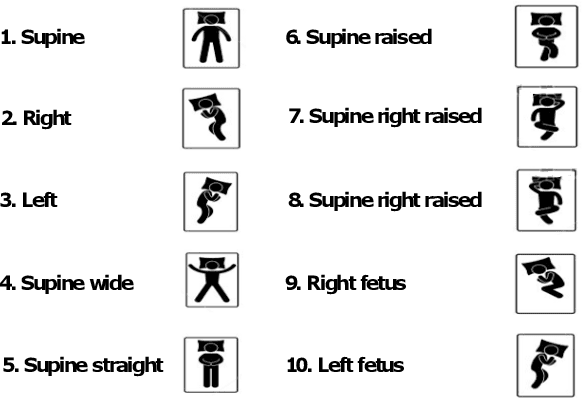

Identity and Posture Recognition in Smart Beds with Deep Multitask Learning

Apr 05, 2021

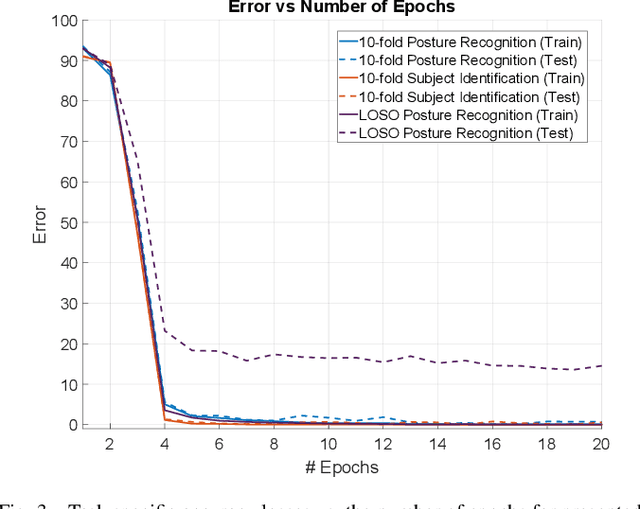

Abstract: Sleep posture analysis is widely used for clinical patient monitoring and sleep studies. Earlier research has revealed that sleep posture strongly influences symptoms of diseases such as apnea and pressure ulcers. In this study, we propose a robust deep learning model capable of accurately identifying subjects and their sleeping postures using publicly available data acquired from a commercial pressure mapping system. A combination of loss functions is used to discriminate subjects and their sleeping postures simultaneously. The experimental results show that our proposed method can identify the patients and their in-bed postures with almost no errors in a 10-fold cross-validation scheme. Furthermore, we show that our network achieves an average accuracy of up to 99% when faced with new subjects in a leave-one-subject-out validation procedure on the three most common sleeping posture categories. We examine the effect of the combined cost function's parameter and show that learning both tasks simultaneously improves performance significantly. Finally, we evaluate our proposed pipeline by testing it on augmented images from our dataset. The proposed algorithm can ultimately be used in clinical and smart home environments as a complementary tool alongside other available automated patient monitoring systems.

* © 2019 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.
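
The leave-one-subject-out protocol mentioned above can be sketched as follows, assuming each sample carries a subject identifier; the function name and data layout are hypothetical. scikit-learn's `LeaveOneGroupOut` implements the same kind of split.

```python
from typing import Dict, Hashable, Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[Hashable],
) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    Every sample of the held-out subject goes to the test fold, so the model
    is always evaluated on a person it never saw during training.
    """
    by_subject: Dict[Hashable, List[int]] = {}
    for idx, sid in enumerate(subject_ids):
        by_subject.setdefault(sid, []).append(idx)
    for held_out, test_idx in by_subject.items():
        train_idx = [i for i, sid in enumerate(subject_ids) if sid != held_out]
        yield held_out, train_idx, test_idx
```

Unlike a random 10-fold split, this protocol prevents samples from the same person from appearing in both training and test folds, which is why it is the stricter test of generalization to new subjects.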

Deep Multitask Learning for Pervasive BMI Estimation and Identity Recognition in Smart Beds

Jun 18, 2020

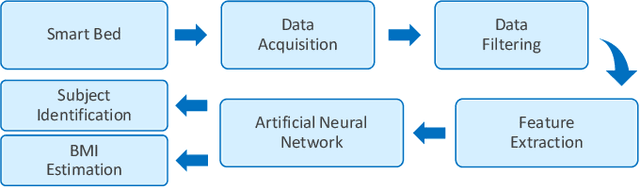

Abstract: Smart devices in the Internet of Things (IoT) paradigm provide a variety of unobtrusive and pervasive means for the continuous monitoring of biometrics and health information. Furthermore, automated personalization and authentication through such smart systems can enable a better user experience and stronger security. In this paper, we explore the simultaneous estimation and monitoring of body mass index (BMI) and user identity recognition through a unified machine learning framework using smart beds. To this end, we use pressure data collected from textile-based sensor arrays integrated into a mattress to estimate subjects' BMI values and classify their identities in different positions with a deep multitask neural network. First, we filter the data and extract 14 features, and then employ deep neural networks for BMI estimation and subject identification on two different public datasets. Finally, we demonstrate that our proposed solution outperforms prior work and several machine learning benchmarks by a considerable margin, while also estimating users' BMI in a 10-fold cross-validation scheme.
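
The abstract does not specify the training objective. As a hedged sketch, assuming the common setup of a regression head for BMI and a classification head for identity, a multitask network could be trained on a weighted sum of squared error and cross-entropy. BMI itself is body mass in kilograms divided by the square of height in meters. The function names and the `weight` parameter are hypothetical.

```python
import math
from typing import Sequence


def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: mass (kg) divided by height (m) squared."""
    return weight_kg / height_m ** 2


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for one sample, computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def multitask_loss(bmi_pred: float, bmi_true: float,
                   id_logits: Sequence[float], id_true: int,
                   weight: float = 0.5) -> float:
    """Hypothetical joint objective: weighted BMI regression plus identity classification."""
    regression = (bmi_pred - bmi_true) ** 2  # squared error on the BMI estimate
    classification = cross_entropy(id_logits, id_true)
    return weight * regression + (1.0 - weight) * classification
```

Because BMI errors and cross-entropy live on different scales, the weighting between the two terms would normally be tuned rather than fixed at 0.5.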

In-bed Pressure-based Pose Estimation using Image Space Representation Learning

Aug 21, 2019

Abstract: In-bed pose estimation has shown value in fields such as hospital patient monitoring, sleep studies, and smart homes. In this paper, we present a novel in-bed pressure-based pose estimation approach capable of accurately detecting body parts from highly ambiguous pressure data. We exploit the idea of a learnable pre-processing step that transforms the vague pressure maps into a representation close to the expected input space of general-purpose pose identification modules, which fail when used on the pressure data alone. To this end, a fully convolutional network with multiple scales serves as the learnable pre-processing step, providing the pose-specific characteristics of the pressure maps to the pre-trained pose identification module. A combination of loss functions models the constraints, ensuring that unclear body parts are reconstructed correctly while preventing the pre-processing block from generating arbitrary images. The evaluation results show high visual fidelity in the generated pre-processed images as well as high detection rates in pose estimation. Furthermore, we show that the trained pre-processing block is also effective for pose identification models it was not trained with.
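
Keypoint detection rates are commonly reported with the Percentage of Correct Keypoints (PCK) metric. The abstract does not state which metric or threshold the paper uses, so the sketch below is a generic, hedged illustration with a hypothetical function name. In practice the threshold is often normalized by a body-size reference (PCKh, for example, normalizes by head-segment length), which is left to the caller here.

```python
import math
from typing import Sequence, Tuple

Point2D = Tuple[float, float]


def pck(pred: Sequence[Point2D], gt: Sequence[Point2D], threshold: float) -> float:
    """Percentage of Correct Keypoints: share of joints within `threshold` of ground truth."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and the same length")
    hits = sum(
        1
        for (px, py), (gx, gy) in zip(pred, gt)
        if math.hypot(px - gx, py - gy) <= threshold
    )
    return 100.0 * hits / len(gt)
```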

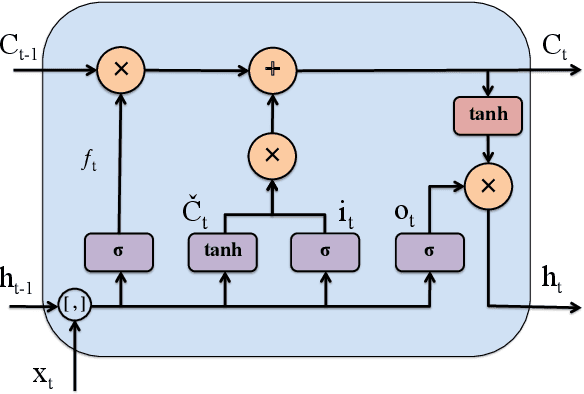

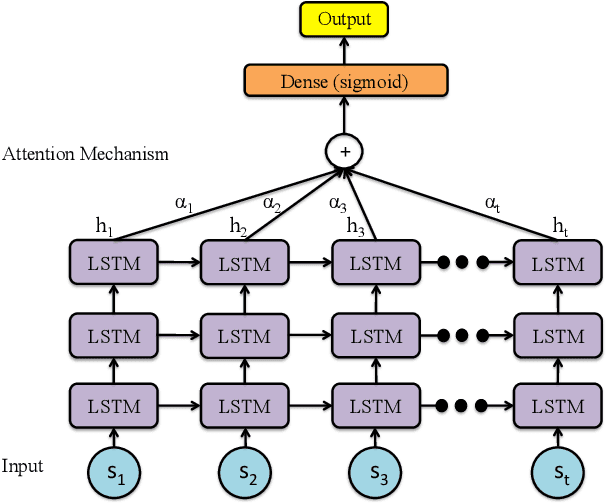

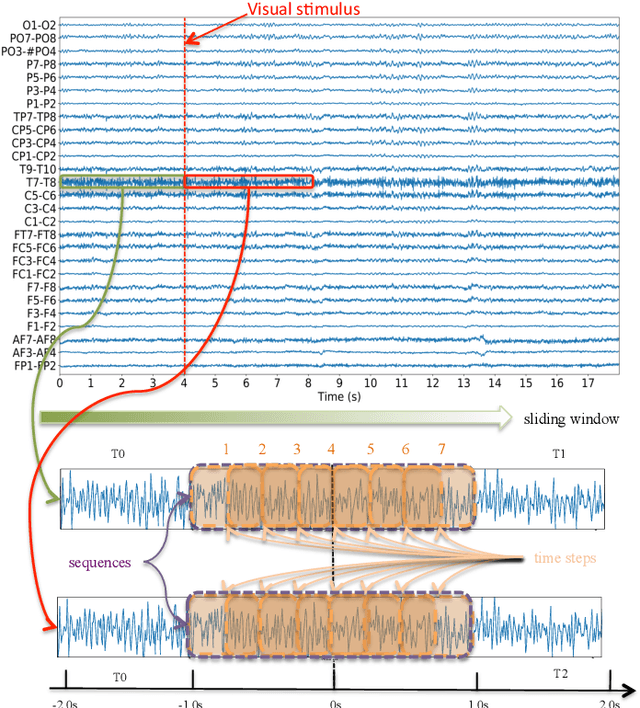

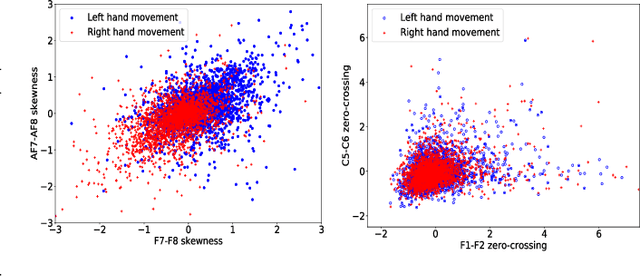

Classification of Hand Movements from EEG using a Deep Attention-based LSTM Network

Aug 06, 2019

Abstract: Classifying limb movements from brain activity is an important task in Brain-Computer Interfaces (BCI) that has been successfully used in multiple application domains, ranging from human-computer interaction to medical and biomedical applications. This paper proposes a novel solution for classifying left/right hand movements by exploiting a Long Short-Term Memory (LSTM) network with an attention mechanism to learn from the sequential data available in electroencephalogram (EEG) signals. In this context, a wide range of time- and frequency-domain features is first extracted from the EEG signal and then evaluated using a Random Forest (RF) to select the most important features. The selected features are arranged as a spatio-temporal sequence and fed to the LSTM network, which learns from the sequential data to perform the classification task. We conduct extensive experiments with the EEG motor movement/imagery database and show that our proposed solution achieves effective results, outperforming baseline methods and the state of the art in both intra-subject and cross-subject evaluation schemes. Moreover, we use the proposed framework to analyze the information received by the sensors and monitor the activated regions of the brain by tracking EEG topography throughout the experiments.
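
The abstract does not give the exact attention formulation. The sketch below shows one common variant, attention pooling with a scoring vector `w` over LSTM hidden states: score each time step, normalize the scores with a softmax, and take the weighted sum as the context vector for classification. It assumes the hidden states are already computed (the LSTM itself is omitted) and that `w` would be learned; both names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden: Sequence[Sequence[float]],
                   w: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Return (attention weights per time step, context vector)."""
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden]
    alphas = softmax(scores)
    dim = len(hidden[0])
    context = [sum(a * h[d] for a, h in zip(alphas, hidden)) for d in range(dim)]
    return alphas, context
```

A side benefit of this design is that the attention weights show which time steps of the EEG feature sequence the classifier relies on.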