Vaibhav Srivastava

Velocity-Form Data-Enabled Predictive Control of Soft Robots under Unknown External Payloads

Oct 06, 2025

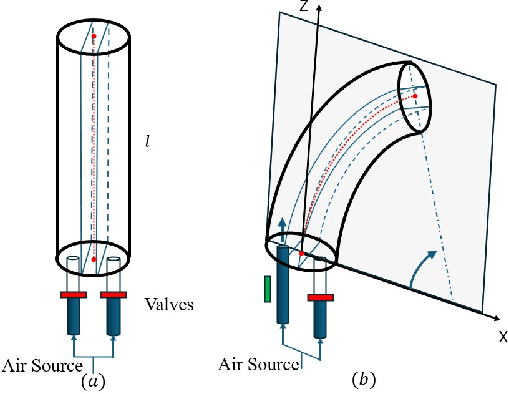

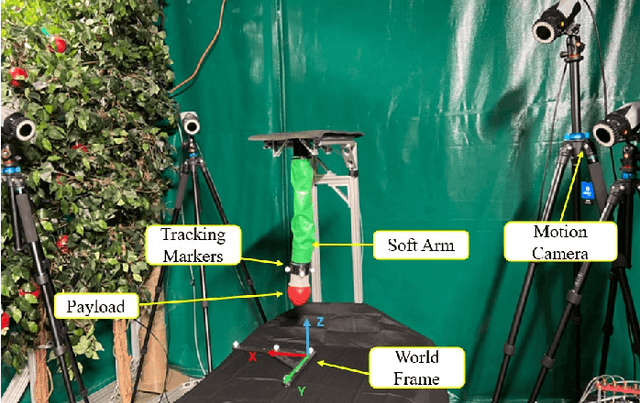

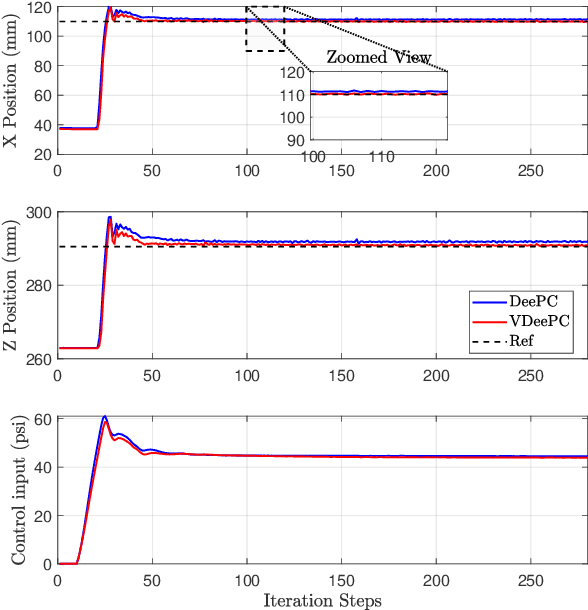

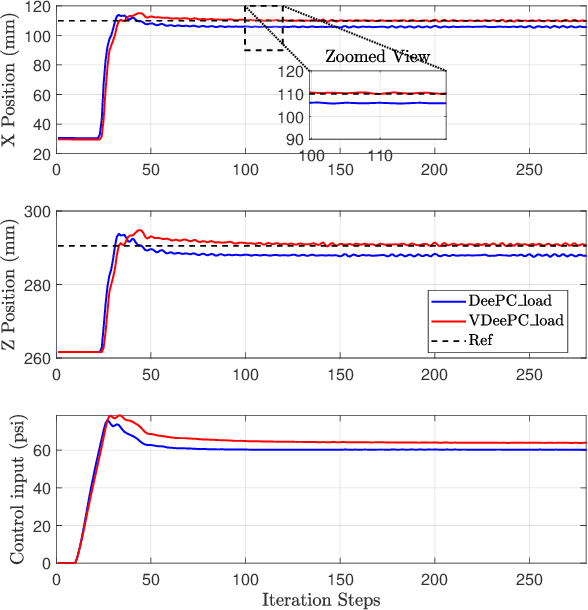

Abstract: Data-driven control methods such as data-enabled predictive control (DeePC) have shown strong potential in efficient control of soft robots without explicit parametric models. However, in object manipulation tasks, unknown external payloads and disturbances can significantly alter the system dynamics and behavior, leading to offset error and degraded control performance. In this paper, we present a novel velocity-form DeePC framework that achieves robust and optimal control of soft robots under unknown payloads. The proposed framework leverages input-output data in an incremental representation to mitigate performance degradation induced by unknown payloads, eliminating the need for weighted datasets or disturbance estimators. We validate the method experimentally on a planar soft robot and demonstrate its superior performance compared to standard DeePC in scenarios involving unknown payloads.
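The benefit of an incremental (velocity-form) representation can be illustrated on a toy problem. The sketch below is not the paper's DeePC formulation: it assumes a hypothetical scalar linear system `y[k+1] = a*y[k] + b*u[k] + d`, where the constant `d` stands in for an unknown payload. Differencing consecutive samples cancels `d`, so the increments obey `Δy[k+1] = a*Δy[k] + b*Δu[k]` regardless of the payload. The function names and numerical values are illustrative assumptions.

```python
import numpy as np

def simulate(a, b, d, u, y0=0.0):
    """Simulate y[k+1] = a*y[k] + b*u[k] + d (hypothetical toy system)."""
    y = np.empty(len(u) + 1)
    y[0] = y0
    for k, uk in enumerate(u):
        y[k + 1] = a * y[k] + b * uk + d
    return y

def fit_incremental(u, y):
    """Least-squares fit of (a, b) using only input-output increments."""
    du = np.diff(u)  # du[j] = u[j+1] - u[j]
    dy = np.diff(y)  # dy[j] = y[j+1] - y[j]
    # The constant offset d cancels: dy[k] = a*dy[k-1] + b*du[k-1].
    regressors = np.column_stack([dy[:-1], du])
    targets = dy[1:]
    coeffs, *_ = np.linalg.lstsq(regressors, targets, rcond=None)
    return coeffs[0], coeffs[1]

rng = np.random.default_rng(0)
u = rng.standard_normal(50)
# Two data sets that differ only in the unknown constant offset d.
y_light = simulate(0.8, 0.5, 0.0, u)
y_heavy = simulate(0.8, 0.5, 0.3, u)
print(fit_incremental(u, y_light))
print(fit_incremental(u, y_heavy))
```

Both fits recover the same `(a, b)` up to numerical precision, which is the property an incremental data representation exploits: the offset never has to be estimated.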

Modeling Trust Dynamics in Robot-Assisted Delivery: Impact of Trust Repair Strategies

Jun 12, 2025

Abstract: With increasing efficiency and reliability, autonomous systems are becoming valuable assistants to humans in various tasks. In the context of robot-assisted delivery, we investigate how robot performance and trust repair strategies impact human trust. In this task, while handling a secondary task, humans can choose to either send the robot to deliver autonomously or manually control it. The trust repair strategies examined include short and long explanations, apology and promise, and denial. Using data from human participants, we model human behavior using an Input-Output Hidden Markov Model (IOHMM) to capture the dynamics of trust and human action probabilities. Our findings indicate that humans are more likely to deploy the robot autonomously when their trust is high. Furthermore, state transition estimates show that long explanations are the most effective at repairing trust following a failure, while denial is most effective at preventing trust loss. We also demonstrate that the trust estimates generated by our model are isomorphic to self-reported trust values, making them interpretable. This model lays the groundwork for developing optimal policies that facilitate real-time adjustment of human trust in autonomous systems.
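To make the IOHMM idea concrete, here is a minimal sketch of one filtering step over a two-state hidden trust variable (low, high). Everything numerical is an assumption made for illustration: the transition matrices, the emission probabilities, and the input labels `"success"` and `"failure"` are hypothetical and are not the estimates reported in the paper.

```python
import numpy as np

# Hypothetical input-dependent transition matrices; rows = current state,
# columns = next state, with states ordered (low trust, high trust).
TRANSITIONS = {
    "success": np.array([[0.8, 0.2],
                         [0.1, 0.9]]),
    "failure": np.array([[0.95, 0.05],
                         [0.40, 0.60]]),
}

# Hypothetical emission probabilities P(action | state);
# columns = (manual control, autonomous deployment).
EMISSIONS = np.array([[0.7, 0.3],
                      [0.2, 0.8]])

def belief_update(belief, robot_input, action):
    """One forward-filter step: predict with the input, correct with the action."""
    predicted = belief @ TRANSITIONS[robot_input]
    unnormalized = predicted * EMISSIONS[:, action]
    return unnormalized / unnormalized.sum()

belief = np.array([0.5, 0.5])
# The robot succeeded, then the human chose autonomous deployment (action index 1).
belief = belief_update(belief, "success", 1)
print(belief)
```

Under these assumed numbers, observing an autonomous deployment shifts probability mass toward the high-trust state, in line with the abstract's finding that autonomous deployment is more likely when trust is high.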

Fast Online Adaptive Neural MPC via Meta-Learning

Apr 24, 2025

Abstract: Data-driven model predictive control (MPC) has demonstrated significant potential for improving robot control performance in the presence of model uncertainties. However, existing approaches often require extensive offline data collection and computationally intensive training, limiting their ability to adapt online. To address these challenges, this paper presents a fast online adaptive MPC framework that leverages neural networks integrated with Model-Agnostic Meta-Learning (MAML). Our approach focuses on few-shot adaptation of residual dynamics - capturing the discrepancy between nominal and true system behavior - using minimal online data and gradient steps. By embedding these meta-learned residual models into a computationally efficient L4CasADi-based MPC pipeline, the proposed method enables rapid model correction, enhances predictive accuracy, and improves real-time control performance. We validate the framework through simulation studies on a Van der Pol oscillator, a Cart-Pole system, and a 2D quadrotor. Results show significant gains in adaptation speed and prediction accuracy over both nominal MPC and nominal MPC augmented with a freshly initialized neural network, underscoring the effectiveness of our approach for real-time adaptive robot control.
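The few-shot residual adaptation described above can be sketched in a heavily simplified form. The example below replaces the neural network with a linear residual model and shows only the inner-loop adaptation (a few gradient steps from an initialization `theta0`); the MAML outer loop and the L4CasADi MPC integration are omitted. The nominal model, the true residual, the learning rate, and all names are assumptions chosen for illustration.

```python
import numpy as np

def nominal_step(x, u, dt=0.1):
    """Hypothetical nominal model: a scalar integrator x_next = x + dt*u."""
    return x + dt * u

def mse(theta, X, U, X_next):
    """Mean squared error of the linear residual model [x, u] @ theta."""
    features = np.column_stack([X, U])
    return np.mean((features @ theta - (X_next - nominal_step(X, U))) ** 2)

def adapt_residual(theta0, X, U, X_next, lr=0.1, steps=5):
    """Few-shot adaptation: a handful of gradient steps on the residual MSE."""
    features = np.column_stack([X, U])
    target = X_next - nominal_step(X, U)  # what the nominal model misses
    theta = np.array(theta0, dtype=float)
    for _ in range(steps):
        grad = 2.0 * features.T @ (features @ theta - target) / len(target)
        theta = theta - lr * grad
    return theta

rng = np.random.default_rng(0)
X = rng.standard_normal(20)
U = rng.standard_normal(20)
# Assumed "true" system: nominal model plus an unknown linear residual.
X_next = nominal_step(X, U) + 0.05 * X - 0.02 * U

theta0 = np.zeros(2)  # stand-in for a meta-learned initialization
theta = adapt_residual(theta0, X, U, X_next)
print(mse(theta0, X, U, X_next), mse(theta, X, U, X_next))
```

In a meta-learning setting, `theta0` would be trained so that these few steps already yield a good residual model on a new task; here it is simply set to zero to keep the sketch self-contained.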

Trust-Aware Assistance Seeking in Human-Supervised Autonomy

Oct 27, 2024

Abstract: Our goal is to model and experimentally assess trust evolution to predict future beliefs and behaviors of human-robot teams in dynamic environments. Research suggests that maintaining trust among team members in a human-robot team is vital for successful team performance. It also suggests that trust is a multi-dimensional and latent entity that relates to past experiences and future actions in a complex manner. Employing a human-robot collaborative task, we design an optimal assistance-seeking strategy for the robot using a POMDP framework. In the task, the human supervises an autonomous mobile manipulator collecting objects in an environment. The supervisor's task is to ensure that the robot safely executes its task. The robot can either choose to attempt to collect the object or seek human assistance. The human supervisor actively monitors the robot's activities, offering assistance upon request, and intervening if they perceive the robot may fail. In this setting, human trust is the hidden state, and the primary objective is to optimize team performance. We execute two sets of human-robot interaction experiments. The data from the first experiment are used to estimate POMDP parameters, which are used to compute an optimal assistance-seeking policy evaluated in the second experiment. The estimated POMDP parameters reveal that, for most participants, human intervention is more probable when trust is low, particularly in high-complexity tasks. Our estimates suggest that the robot's action of asking for assistance in high-complexity tasks can positively impact human trust. Our experimental results show that the proposed trust-aware policy is better than an optimal trust-agnostic policy. By comparing model estimates of human trust, obtained using only behavioral data, with the collected self-reported trust values, we show that model estimates are isomorphic to self-reported responses.

Assistance-Seeking in Human-Supervised Autonomy: Role of Trust and Secondary Task Engagement (Extended Version)

May 30, 2024

Abstract: Using a dual-task paradigm, we explore how robot actions, performance, and the introduction of a secondary task influence human trust and engagement. In our study, a human supervisor simultaneously engages in a target-tracking task while supervising a mobile manipulator performing an object collection task. The robot can either autonomously collect the object or ask for human assistance. The human supervisor also has the choice to rely upon or interrupt the robot. Using data from initial experiments, we model the dynamics of human trust and engagement using a linear dynamical system (LDS). Furthermore, we develop a human action model to define the probability of human reliance on the robot. Our model suggests that participants are more likely to interrupt the robot when their trust and engagement are low during high-complexity collection tasks. Using Model Predictive Control (MPC), we design an optimal assistance-seeking policy. Evaluation experiments demonstrate the superior performance of the MPC policy over the baseline policy for most participants.

On Multi-Fidelity Impedance Tuning for Human-Robot Cooperative Manipulation

Oct 09, 2023

Abstract: We examine how a human-robot interaction (HRI) system may be designed when input-output data from previous experiments are available. In particular, we consider how to select an optimal impedance in the assistance design for a cooperative manipulation task with a new operator. Due to the variability between individuals, the design parameters that best suit one operator of the robot may not be the best parameters for another one. However, by incorporating historical data using a linear auto-regressive (AR-1) Gaussian process, the search for a new operator's optimal parameters can be accelerated. We lay out a framework for optimizing the human-robot cooperative manipulation that only requires input-output data. We establish how the AR-1 model improves the bound on the regret and numerically simulate a human-robot cooperative manipulation task to show the regret improvement. Further, we show how our approach's input-output nature provides robustness against modeling error through an additional numerical study.

Deterministic Sequencing of Exploration and Exploitation for Reinforcement Learning

Sep 15, 2022

Abstract: We propose the Deterministic Sequencing of Exploration and Exploitation (DSEE) algorithm, which interleaves exploration and exploitation epochs, for model-based RL problems that aim to simultaneously learn the system model, i.e., a Markov decision process (MDP), and the associated optimal policy. During exploration, DSEE explores the environment and updates the estimates for expected reward and transition probabilities. During exploitation, the latest estimates of the expected reward and transition probabilities are used to obtain a robust policy with high probability. We design the lengths of the exploration and exploitation epochs such that the cumulative regret grows as a sub-linear function of time.
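The abstract does not state the epoch lengths, so the following is only a sketch of what a deterministic interleaving could look like. It assumes a hypothetical schedule with fixed-length exploration epochs and exploitation epochs that double in length, which makes the total exploration time grow roughly logarithmically in the horizon. The schedule, the function names, and the parameters are assumptions, not the design analyzed in the paper.

```python
def dsee_schedule(horizon, explore_len=10, first_exploit_len=10):
    """Return (phase, start, end) epochs covering [0, horizon).

    Hypothetical schedule: exploration epochs keep a fixed length while
    exploitation epochs double, so exploration takes a vanishing share of time.
    """
    epochs = []
    t = 0
    exploit_len = first_exploit_len
    while t < horizon:
        end = min(t + explore_len, horizon)
        epochs.append(("explore", t, end))
        t = end
        if t >= horizon:
            break
        end = min(t + exploit_len, horizon)
        epochs.append(("exploit", t, end))
        t = end
        exploit_len *= 2  # assumed growth rule for exploitation epochs
    return epochs

def exploration_steps(epochs):
    """Total number of time steps spent in exploration epochs."""
    return sum(end - start for phase, start, end in epochs if phase == "explore")

for horizon in (1_000, 10_000):
    steps = exploration_steps(dsee_schedule(horizon))
    print(horizon, steps, steps / horizon)
```

If regret were incurred mainly during exploration, a schedule of this shape would keep that contribution sub-linear in the horizon; the actual regret analysis also has to account for errors made during exploitation, which this sketch does not model.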

Towards Modeling Human Motor Learning Dynamics in High-Dimensional Spaces

Feb 06, 2022

Abstract: Designing effective rehabilitation strategies for upper extremities, particularly hands and fingers, requires a computational model of human motor learning. The large number of degrees of freedom (DoFs) available in these systems makes it difficult to balance the trade-off between learning the full dexterity and accomplishing manipulation goals. The motor learning literature argues that humans use motor synergies to reduce the dimension of control space. Using the low-dimensional space spanned by these synergies, we develop a computational model based on the internal model theory of motor control. We analyze the proposed model in terms of its convergence properties and fit it to the data collected from human experiments. We compare the performance of the fitted model to the experimental data and show that it captures human motor learning behavior well.

Online Estimation and Coverage Control with Heterogeneous Sensing Information

Jun 28, 2021

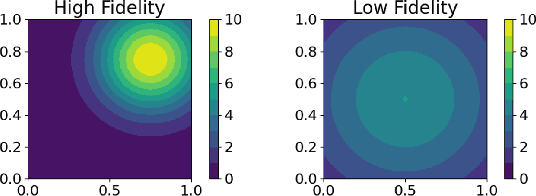

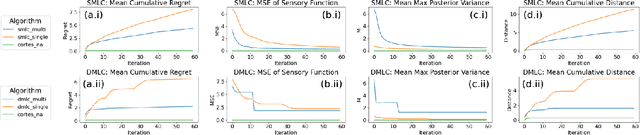

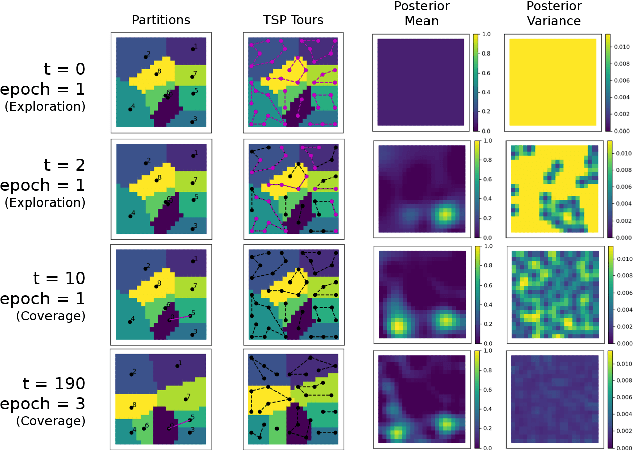

Abstract: Heterogeneous multi-robot sensing systems are able to characterize physical processes more comprehensively than homogeneous systems. Access to multiple modalities of sensory data allows such systems to fuse information between complementary sources and learn richer representations of a phenomenon of interest. Often, these data are correlated but vary in fidelity, i.e., accuracy (bias) and precision (noise). Low-fidelity data may be more plentiful, while high-fidelity data may be more trustworthy. In this paper, we address the problem of multi-robot online estimation and coverage control by combining low- and high-fidelity data to learn and cover a sensory function of interest. We propose two algorithms for this task of heterogeneous learning and coverage -- namely Stochastic Sequencing of Multi-fidelity Learning and Coverage (SMLC) and Deterministic Sequencing of Multi-fidelity Learning and Coverage (DMLC) -- and prove that they converge asymptotically. In addition, we demonstrate the empirical efficacy of SMLC and DMLC through numerical simulations.

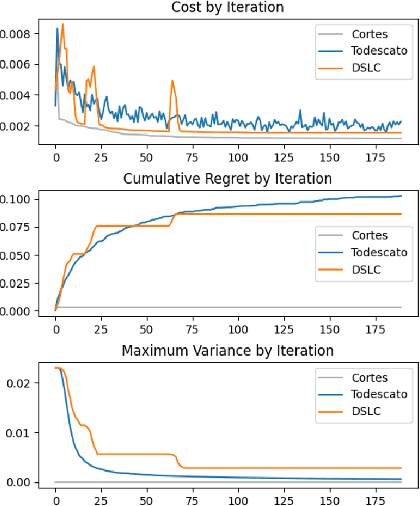

Regret Analysis of Distributed Gaussian Process Estimation and Coverage

Feb 05, 2021

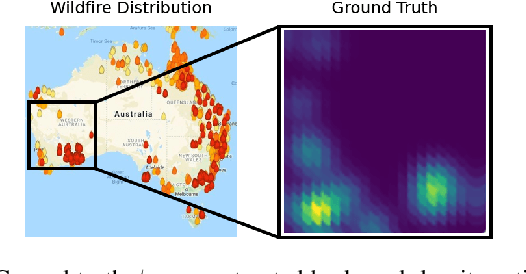

Abstract: We study the problem of distributed multi-robot coverage over an unknown, nonuniform sensory field. Modeling the sensory field as a realization of a Gaussian Process and using Bayesian techniques, we devise a policy which aims to balance the tradeoff between learning the sensory function and covering the environment. We propose an adaptive coverage algorithm called Deterministic Sequencing of Learning and Coverage (DSLC) that schedules learning and coverage epochs such that its emphasis gradually shifts from exploration to exploitation while never fully ceasing to learn. Using a novel definition of coverage regret which characterizes overall coverage performance of a multi-robot team over a time horizon $T$, we analyze DSLC to provide an upper bound on expected cumulative coverage regret. Finally, we illustrate the empirical performance of the algorithm through simulations of the coverage task over an unknown distribution of wildfires.
