Todd C. Hollon

Learning complete and explainable visual representations from itemized text supervision

Dec 11, 2025

Abstract:Training vision models with language supervision enables general and transferable representations. However, many visual domains, especially non-object-centric domains such as medical imaging and remote sensing, contain itemized text annotations: multiple text items describing distinct and semantically independent findings within a single image. Such supervision differs from standard multi-caption supervision, where captions are redundant or highly overlapping. Here, we introduce ItemizedCLIP, a framework for learning complete and explainable visual representations from itemized text supervision. ItemizedCLIP employs a cross-attention module to produce text item-conditioned visual embeddings and a set of tailored objectives that jointly enforce item independence (distinct regions for distinct items) and representation completeness (coverage of all items). Across four domains with naturally itemized text supervision (brain MRI, head CT, chest CT, remote sensing) and one additional synthetically itemized dataset, ItemizedCLIP achieves substantial improvements in zero-shot performance and fine-grained interpretability over baselines. The resulting ItemizedCLIP representations are semantically grounded, item-differentiable, complete, and visually interpretable. Our code is available at https://github.com/MLNeurosurg/ItemizedCLIP.
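
The idea of a text item-conditioned visual embedding can be illustrated with a minimal sketch of cross-attention pooling, in which one text item acts as the query over a set of image patch tokens. This is not the ItemizedCLIP implementation: the function names, the absence of learned projections, and the toy vectors are all assumptions made for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def item_conditioned_embedding(text_item, patch_tokens):
    """Attend over patch tokens using one text item as the query.

    Hypothetical helper: returns (attention weights over patches,
    attention-weighted visual embedding for this text item).
    """
    d = len(text_item)
    # Scaled dot-product score between the text item and every patch.
    scores = [sum(q * k for q, k in zip(text_item, patch)) / math.sqrt(d)
              for patch in patch_tokens]
    weights = softmax(scores)
    # Weighted sum of patch tokens gives the item-conditioned embedding.
    pooled = [sum(w * patch[i] for w, patch in zip(weights, patch_tokens))
              for i in range(d)]
    return weights, pooled

# Toy data (made up): three patches, two independent text items.
patches = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
items = {"finding A": [1.0, 0.0], "finding B": [0.0, 1.0]}
for name, vec in items.items():
    weights, pooled = item_conditioned_embedding(vec, patches)
    print(name, [round(w, 3) for w in weights])
```

In this toy setup each item concentrates its attention on a different patch, which is the behavior the item-independence objective described in the abstract is meant to encourage.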

Extending SEEDS to a Supervoxel Algorithm for Medical Image Analysis

Feb 04, 2025

Abstract:In this work, we extend the SEEDS superpixel algorithm from 2D images to 3D volumes, resulting in 3D SEEDS, a faster, better, and open-source supervoxel algorithm for medical image analysis. We compare 3D SEEDS with the widely used supervoxel algorithm SLIC on 13 segmentation tasks across 10 organs. 3D SEEDS accelerates supervoxel generation by a factor of 10, improves the achievable Dice score by 6.5%, and reduces the under-segmentation error by 0.16%. The code is available at https://github.com/Zch0414/3d_seeds
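
For readers unfamiliar with the metric, the sketch below shows one way an achievable Dice score could be estimated for a supervoxel partition. It assumes a majority-vote rule, by analogy with achievable segmentation accuracy for superpixels: a supervoxel is kept when most of its voxels are foreground. The paper's exact definition may differ, and the function name and toy data are hypothetical.

```python
from collections import defaultdict

def achievable_dice(supervoxel_ids, ground_truth):
    """Majority-vote estimate of the Dice reachable by selecting supervoxels.

    supervoxel_ids: flat list of supervoxel ids, one per voxel.
    ground_truth:   flat list of 0/1 labels, one per voxel.
    """
    counts = defaultdict(lambda: [0, 0])  # id -> [background, foreground]
    for sv, gt in zip(supervoxel_ids, ground_truth):
        counts[sv][gt] += 1
    # Keep a supervoxel when most of its voxels are foreground (assumption).
    kept = {sv for sv, (bg, fg) in counts.items() if fg > bg}
    pred = [1 if sv in kept else 0 for sv in supervoxel_ids]
    intersection = sum(p and g for p, g in zip(pred, ground_truth))
    denom = sum(pred) + sum(ground_truth)
    return 2.0 * intersection / denom if denom else 1.0

# Toy volume flattened to 8 voxels and 3 supervoxels (made-up values).
sv = [0, 0, 0, 1, 1, 1, 2, 2]
gt = [1, 1, 0, 0, 0, 0, 1, 1]
print(round(achievable_dice(sv, gt), 3))
```

A partition whose supervoxels follow organ boundaries more closely leaks fewer background voxels into the kept supervoxels, so this score rises.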

Step-Calibrated Diffusion for Biomedical Optical Image Restoration

Mar 26, 2024

Abstract:High-quality, high-resolution medical imaging is essential for clinical care. Raman-based biomedical optical imaging uses non-ionizing infrared radiation to evaluate human tissues in real time and is used for early cancer detection, brain tumor diagnosis, and intraoperative tissue analysis. Unfortunately, optical imaging is vulnerable to image degradation due to laser scattering and absorption, which can result in diagnostic errors and misguided treatment. Restoration of optical images is a challenging computer vision task because the sources of image degradation are multi-factorial, stochastic, and tissue-dependent, preventing a straightforward method to obtain paired low-quality/high-quality data. Here, we present Restorative Step-Calibrated Diffusion (RSCD), an unpaired image restoration method that views the image restoration problem as completing the finishing steps of a diffusion-based image generation task. RSCD uses a step calibrator model to dynamically determine the severity of image degradation and the number of steps required to complete the reverse diffusion process for image restoration. RSCD outperforms other widely used unpaired image restoration methods on both image quality and perceptual evaluation metrics for restoring optical images. Medical imaging experts consistently prefer images restored using RSCD in blinded comparison experiments and report minimal to no hallucinations. Finally, we show that RSCD improves performance on downstream clinical imaging tasks, including automated brain tumor diagnosis and deep tissue imaging. Our code is available at https://github.com/MLNeurosurg/restorative_step-calibrated_diffusion.
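
The control flow described in the abstract, where a step calibrator chooses how many reverse diffusion steps to run, can be sketched as follows. This is a toy illustration under stated assumptions, not the RSCD code: `restore`, `toy_calibrator`, and `toy_denoise_step` are hypothetical names, and the two toy callables stand in for trained models.

```python
def restore(image, step_calibrator, denoise_step):
    """Run only the final reverse-diffusion steps on a degraded image.

    step_calibrator(image) -> int: predicted number of remaining steps.
    denoise_step(image, t) -> image: one reverse step at timestep t.
    Both callables are hypothetical stand-ins for trained models.
    """
    t_start = step_calibrator(image)
    for t in range(t_start, 0, -1):
        image = denoise_step(image, t)
    return image, t_start

def toy_calibrator(image):
    # Toy rule: more severe degradation (larger mean magnitude) -> more steps.
    severity = sum(abs(x) for x in image) / len(image)
    return min(10, max(1, round(severity)))

def toy_denoise_step(image, t):
    # Toy reverse step: shrink every value toward zero.
    return [x * 0.5 for x in image]

mild, mild_steps = restore([1.0, 1.0], toy_calibrator, toy_denoise_step)
severe, severe_steps = restore([4.0, 4.0], toy_calibrator, toy_denoise_step)
print(mild_steps, severe_steps)
```

The point of the design is that lightly degraded images receive few steps and are changed little, while heavily degraded images receive more.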

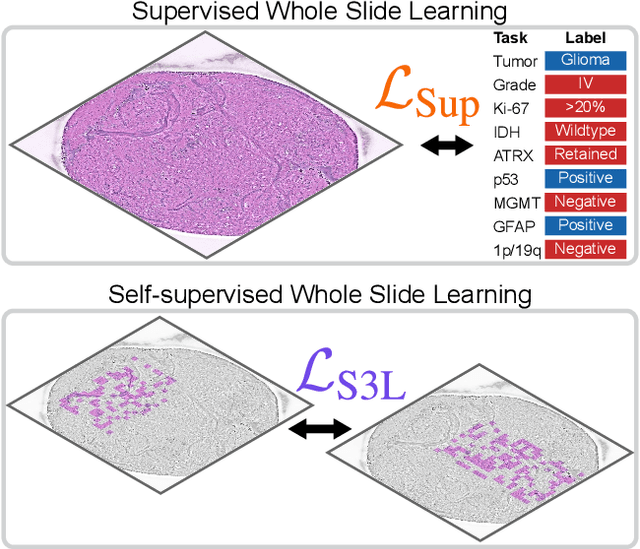

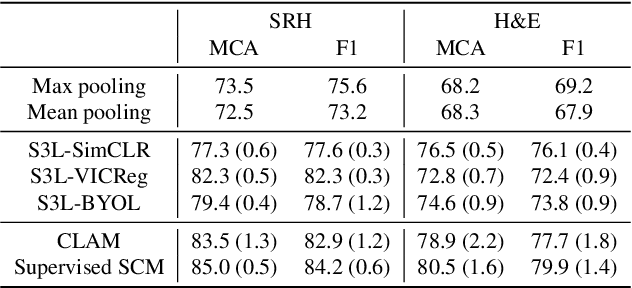

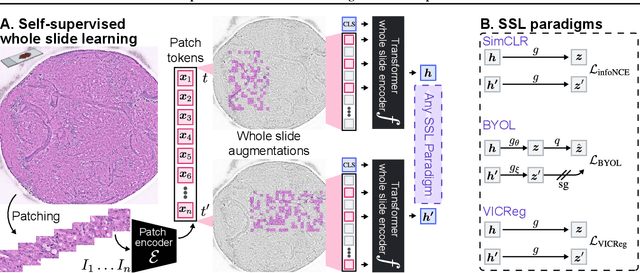

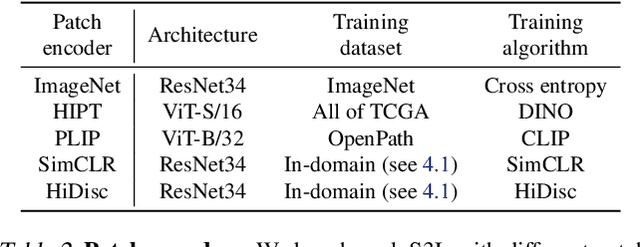

A self-supervised framework for learning whole slide representations

Feb 09, 2024

Abstract:Whole slide imaging is fundamental to biomedical microscopy and computational pathology. However, whole slide images (WSIs) present a complex computer vision challenge due to their gigapixel size, diverse histopathologic features, spatial heterogeneity, and limited/absent data annotations. These challenges highlight that supervised training alone can result in suboptimal whole slide representations. Self-supervised representation learning can achieve high-quality WSI visual feature learning for downstream diagnostic tasks, such as cancer diagnosis or molecular genetic prediction. Here, we present a general self-supervised whole slide learning (S3L) framework for gigapixel-scale self-supervision of WSIs. S3L combines data transformation strategies from transformer-based vision and language modeling into a single unified framework to generate paired views for self-supervision. S3L leverages the inherent regional heterogeneity, histologic feature variability, and information redundancy within WSIs to learn high-quality whole-slide representations. We benchmark S3L visual representations on two diagnostic tasks for two biomedical microscopy modalities. S3L significantly outperforms WSI baselines for cancer diagnosis and genetic mutation prediction. Additionally, S3L achieves good performance using both in-domain and out-of-distribution patch encoders, demonstrating good flexibility and generalizability.

Development and validation of an artificial intelligence model to accurately predict spinopelvic parameters

Feb 09, 2024

Abstract:Objective. Achieving appropriate spinopelvic alignment has been shown to be associated with improved clinical symptoms. However, measurement of spinopelvic radiographic parameters is time-intensive and interobserver reliability is a concern. Automated measurement tools have the promise of rapid and consistent measurements, but existing tools are still limited by some degree of manual user-entry requirements. This study presents a novel artificial intelligence (AI) tool called SpinePose that automatically predicts spinopelvic parameters with high accuracy without the need for manual entry. Methods. SpinePose was trained and validated on 761 sagittal whole-spine X-rays to predict sagittal vertical axis (SVA), pelvic tilt (PT), pelvic incidence (PI), sacral slope (SS), lumbar lordosis (LL), T1-pelvic angle (T1PA), and L1-pelvic angle (L1PA). A separate test set of 40 X-rays was labeled by 4 reviewers, including fellowship-trained spine surgeons and a fellowship-trained radiologist with neuroradiology subspecialty certification. Median errors relative to the most senior reviewer were calculated to determine model accuracy on test images. Intraclass correlation coefficients (ICC) were used to assess inter-rater reliability. Results. SpinePose exhibited the following median (interquartile range) parameter errors: SVA: 2.2 (2.3) mm, p=0.93; PT: 1.3 (1.2)°, p=0.48; SS: 1.7 (2.2)°, p=0.64; PI: 2.2 (2.1)°, p=0.24; LL: 2.6 (4.0)°, p=0.89; T1PA: 1.1 (0.9)°, p=0.42; and L1PA: 1.4 (1.6)°, p=0.49. Model predictions also exhibited excellent reliability at all parameters (ICC: 0.91-1.0). Conclusions. SpinePose accurately predicted spinopelvic parameters with excellent reliability comparable to fellowship-trained spine surgeons and neuroradiologists. Utilization of predictive AI tools in spinal imaging can substantially aid in patient selection and surgical planning.
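
The median (interquartile range) error format reported above can be reproduced with a short sketch like the one below. The quartile convention (`method="inclusive"`) is an assumption, since the abstract does not state which one was used, and the angle values are made up for illustration.

```python
import statistics

def median_iqr_error(predictions, reference):
    """Median and interquartile range of absolute errors against a reference.

    Hypothetical helper; the quartile method is an assumption.
    """
    errors = [abs(p - r) for p, r in zip(predictions, reference)]
    q1, _, q3 = statistics.quantiles(errors, n=4, method="inclusive")
    return statistics.median(errors), q3 - q1

# Made-up pelvic tilt values in degrees: model vs. most senior reviewer.
model_pt = [10, 12, 15, 20, 11]
senior_pt = [11, 10, 15, 17, 12]
median_err, iqr_err = median_iqr_error(model_pt, senior_pt)
print(f"PT error: {median_err} ({iqr_err}) deg")
```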

Fine-grained Text Style Transfer with Diffusion-Based Language Models

Jun 12, 2023

Abstract:Diffusion probabilistic models have shown great success in generating high-quality images controllably, and researchers have tried to bring this controllability to the text generation domain. Previous works on diffusion-based language models have shown that they can be trained without external knowledge (such as pre-trained weights) and still achieve stable performance and controllability. In this paper, we trained a diffusion-based model on the StylePTB dataset, the standard benchmark for fine-grained text style transfer. The tasks in StylePTB require much more refined control over the output text than tasks evaluated in previous works, and our model was able to achieve state-of-the-art performance on StylePTB on both individual and compositional transfers. Moreover, our model, trained on limited data from StylePTB without external knowledge, outperforms previous works that utilized pretrained weights, embeddings, and external grammar parsers. This may indicate that diffusion-based language models have great potential under low-resource settings.

Artificial-intelligence-based molecular classification of diffuse gliomas using rapid, label-free optical imaging

Mar 23, 2023

Abstract:Molecular classification has transformed the management of brain tumors by enabling more accurate prognostication and personalized treatment. However, timely molecular diagnostic testing for patients with brain tumors is limited, complicating surgical and adjuvant treatment and obstructing clinical trial enrollment. In this study, we developed DeepGlioma, a rapid ($< 90$ seconds), artificial-intelligence-based diagnostic screening system to streamline the molecular diagnosis of diffuse gliomas. DeepGlioma is trained using a multimodal dataset that includes stimulated Raman histology (SRH); a rapid, label-free, non-consumptive, optical imaging method; and large-scale, public genomic data. In a prospective, multicenter, international testing cohort of patients with diffuse glioma ($n=153$) who underwent real-time SRH imaging, we demonstrate that DeepGlioma can predict the molecular alterations used by the World Health Organization to define the adult-type diffuse glioma taxonomy (IDH mutation, 1p19q co-deletion and ATRX mutation), achieving a mean molecular classification accuracy of $93.3\pm 1.6\%$. Our results demonstrate how artificial intelligence and optical histology can be used to provide a rapid and scalable adjunct to wet lab methods for the molecular screening of patients with diffuse glioma.

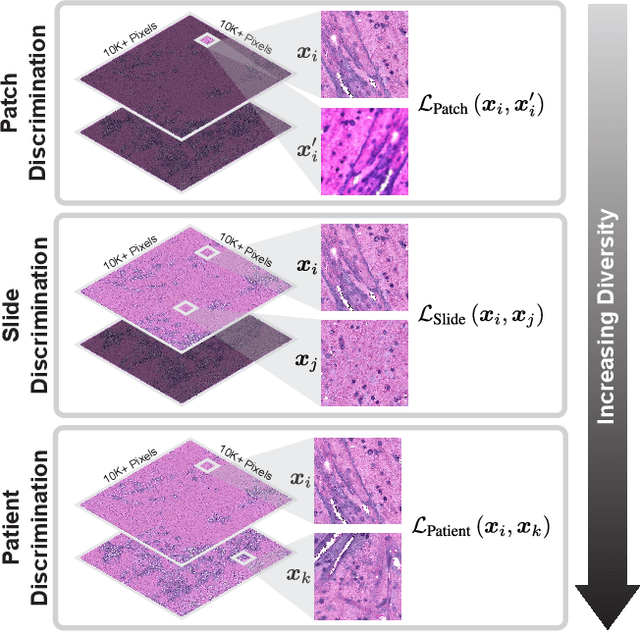

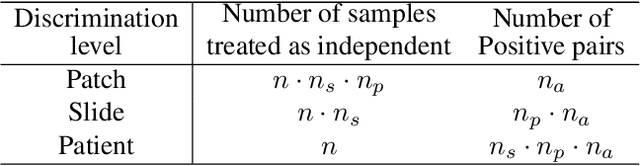

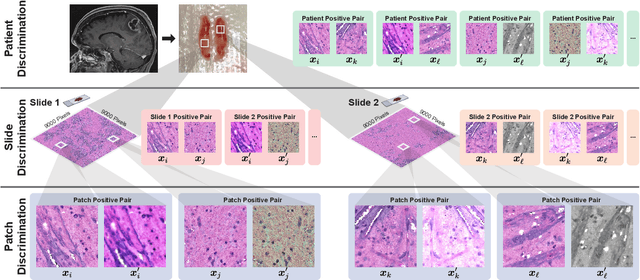

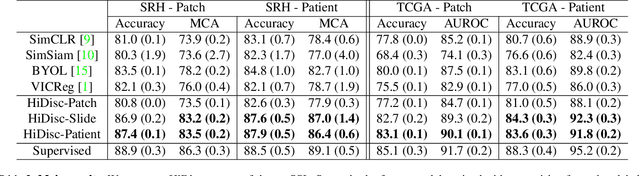

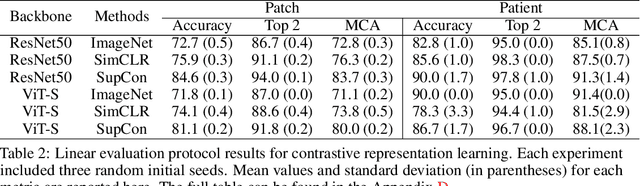

Hierarchical discriminative learning improves visual representations of biomedical microscopy

Mar 02, 2023

Abstract:Learning high-quality, self-supervised, visual representations is essential to advance the role of computer vision in biomedical microscopy and clinical medicine. Previous work has focused on self-supervised representation learning (SSL) methods developed for instance discrimination and applied them directly to image patches, or fields-of-view, sampled from gigapixel whole-slide images (WSIs) used for cancer diagnosis. However, this strategy is limited because it (1) assumes patches from the same patient are independent, (2) neglects the patient-slide-patch hierarchy of clinical biomedical microscopy, and (3) requires strong data augmentations that can degrade downstream performance. Importantly, sampled patches from WSIs of a patient's tumor are a diverse set of image examples that capture the same underlying cancer diagnosis. This motivated HiDisc, a data-driven method that leverages the inherent patient-slide-patch hierarchy of clinical biomedical microscopy to define a hierarchical discriminative learning task that implicitly learns features of the underlying diagnosis. HiDisc uses a self-supervised contrastive learning framework in which positive patch pairs are defined based on a common ancestry in the data hierarchy, and a unified patch, slide, and patient discriminative learning objective is used for visual SSL. We benchmark HiDisc visual representations on two vision tasks using two biomedical microscopy datasets, and demonstrate that (1) HiDisc pretraining outperforms current state-of-the-art self-supervised pretraining methods for cancer diagnosis and genetic mutation prediction, and (2) HiDisc learns high-quality visual representations using natural patch diversity without strong data augmentations.
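
The notion of positive pairs defined by common ancestry in the patient-slide-patch hierarchy can be made concrete with a small sketch. It only enumerates which sample pairs count as positives at each level and does not implement the HiDisc loss. The `Sample` type, the identifiers, and the assumption that repeated patch ids denote two views of the same patch are all hypothetical.

```python
from typing import NamedTuple

class Sample(NamedTuple):
    patient: str
    slide: str
    patch: str

def positive_pairs(samples):
    """Return index pairs (i, j), i < j, that are positives at each level.

    Assumes slide ids are unique across patients and patch ids are
    unique across slides.
    """
    levels = {"patch": [], "slide": [], "patient": []}
    for i in range(len(samples)):
        for j in range(i + 1, len(samples)):
            a, b = samples[i], samples[j]
            if a.patient != b.patient:
                continue  # no common ancestor: treated as a negative pair
            levels["patient"].append((i, j))
            if a.slide != b.slide:
                continue
            levels["slide"].append((i, j))
            if a.patch == b.patch:
                levels["patch"].append((i, j))
    return levels

# Made-up batch: two views of patch "a", plus other patches and patients.
batch = [
    Sample("P1", "S1", "a"),
    Sample("P1", "S1", "a"),
    Sample("P1", "S1", "b"),
    Sample("P1", "S2", "c"),
    Sample("P2", "S3", "d"),
]
for level, pairs in positive_pairs(batch).items():
    print(level, pairs)
```

Moving up the hierarchy enlarges the positive set, so patches from different slides of the same patient are pulled together at the patient level even though they are never positives at the patch level.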

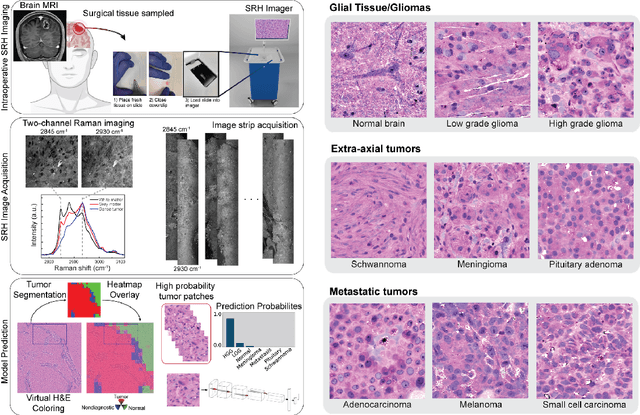

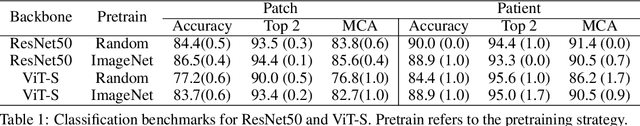

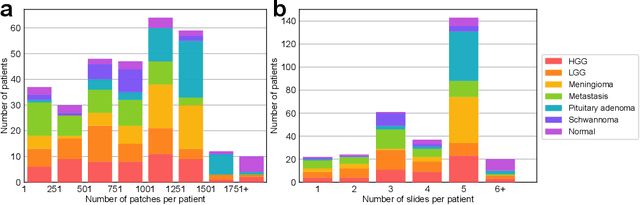

OpenSRH: optimizing brain tumor surgery using intraoperative stimulated Raman histology

Jun 16, 2022

Abstract:Accurate intraoperative diagnosis is essential for providing safe and effective care during brain tumor surgery. Our standard-of-care diagnostic methods are time, resource, and labor intensive, which restricts access to optimal surgical treatments. To address these limitations, we propose an alternative workflow that combines stimulated Raman histology (SRH), a rapid optical imaging method, with deep learning-based automated interpretation of SRH images for intraoperative brain tumor diagnosis and real-time surgical decision support. Here, we present OpenSRH, the first public dataset of clinical SRH images from 300+ brain tumor patients and 1300+ unique whole slide optical images. OpenSRH contains data from the most common brain tumor diagnoses, full pathologic annotations, whole slide tumor segmentations, and raw and processed optical imaging data for end-to-end model development and validation. We provide a framework for patch-based whole slide SRH classification and inference using weak (i.e. patient-level) diagnostic labels. Finally, we benchmark two computer vision tasks: multiclass histologic brain tumor classification and patch-based contrastive representation learning. We hope OpenSRH will facilitate the clinical translation of rapid optical imaging and real-time ML-based surgical decision support in order to improve the access, safety, and efficacy of cancer surgery in the era of precision medicine. Dataset access, code, and benchmarks are available at opensrh.mlins.org.
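
Patch-based classification with weak, patient-level labels can be sketched in two steps: every patch inherits its patient's diagnosis for training, and patch predictions are pooled into a slide-level prediction at inference. The sketch below is an illustration only. Mean pooling of patch probabilities is an assumption rather than the documented OpenSRH aggregation rule, and all names, labels, and probabilities are made up.

```python
def weak_patch_labels(patches_by_patient, patient_labels):
    """Give every patch the diagnosis of the patient it came from."""
    return [(patch, patient_labels[pid])
            for pid, patches in patches_by_patient.items()
            for patch in patches]

def slide_prediction(patch_probs):
    """Average per-patch class probabilities and return (class index, mean)."""
    n_classes = len(patch_probs[0])
    mean = [sum(p[c] for p in patch_probs) / len(patch_probs)
            for c in range(n_classes)]
    return max(range(n_classes), key=mean.__getitem__), mean

# Training side: hypothetical patch ids and patient-level diagnoses.
train_pairs = weak_patch_labels(
    {"p1": ["a", "b"], "p2": ["c"]},
    {"p1": "glioma", "p2": "meningioma"},
)

# Inference side: made-up softmax outputs for three patches of one slide.
predicted_class, mean_probs = slide_prediction(
    [[0.9, 0.1], [0.6, 0.4], [0.3, 0.7]]
)
print(train_pairs, predicted_class)
```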
