Ting Ren

OMR-NET: a two-stage octave multi-scale residual network for screen content image compression

Jul 11, 2024

Abstract: Screen content (SC) differs from natural scene (NS) content in characteristics such as the absence of noise, repetitive patterns, and high contrast. To address the inadequacies of current learned image compression (LIC) methods on SC, we propose improved two-stage octave convolutional residual blocks (IToRB) for high- and low-frequency feature extraction and cascaded two-stage multi-scale residual blocks (CTMSRB) for improved multi-scale learning and nonlinearity in SC. Additionally, we employ a window-based attention module (WAM) to capture pixel correlations, especially in high-contrast regions of the image. We also construct a diverse SC image compression dataset (SDU-SCICD2K) for training, which includes text, charts, graphics, animation, movies, games, and mixtures of SC and NS images. Experimental results show that our method, which is better suited to SC than to NS data, outperforms existing LIC methods in rate-distortion performance on SC images. The code is publicly available at https://github.com/SunshineSki/OMR Net.git.

* 7 figures, 2 tables

Lossless Attention in Convolutional Networks for Facial Expression Recognition in the Wild

Jan 31, 2020

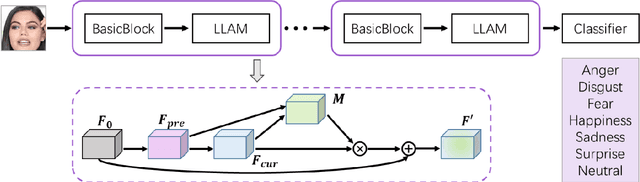

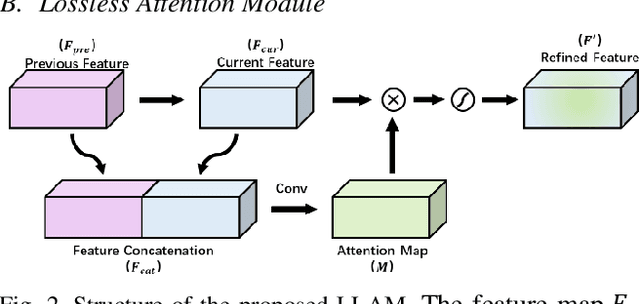

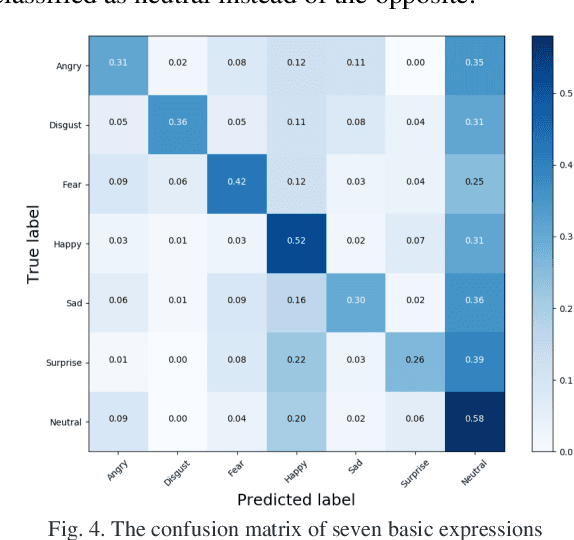

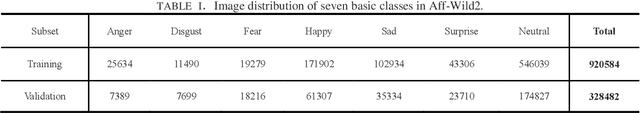

Abstract: Unlike faces captured under constrained frontal conditions, faces in the wild are subject to various unconstrained interference factors, such as complex illumination, changing perspective, and various occlusions. Facial expression recognition (FER) in the wild is a challenging task on which existing methods do not perform well. However, for occluded faces (covering both occlusion caused by other objects and self-occlusion caused by head posture changes), the attention mechanism is able to focus on the non-occluded regions automatically. In this paper, we propose a Lossless Attention Model (LLAM) for convolutional neural networks (CNNs) to extract attention-aware features from faces. Our module avoids information decay while generating attention maps by using the information of the previous layer and not reducing the dimensionality. Subsequently, we adaptively refine the feature responses by fusing the attention map with the feature map. We participate in the seven basic expression classification sub-challenge of the FG-2020 Affective Behavior Analysis in-the-wild Challenge, and we validate our method on the Aff-Wild2 dataset released by the Challenge. The total accuracy (Accuracy) and the unweighted mean (F1) of our method on the validation set are 0.49 and 0.38 respectively, and the final result is 0.42 (0.67 F1-Score + 0.33 Accuracy).
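The final result quoted in the abstract can be checked with a short calculation. The sketch below assumes the parenthetical "0.67 F1-Score + 0.33 Accuracy" means a weighted sum of the two validation metrics; the function name `challenge_score` is hypothetical and is not taken from the paper or the challenge toolkit.

```python
def challenge_score(f1: float, accuracy: float,
                    f1_weight: float = 0.67,
                    acc_weight: float = 0.33) -> float:
    """Weighted sum of F1 and accuracy (assumed reading of the abstract's formula)."""
    return f1_weight * f1 + acc_weight * accuracy


# Validation-set values reported in the abstract.
score = challenge_score(f1=0.38, accuracy=0.49)
print(round(score, 2))  # 0.67 * 0.38 + 0.33 * 0.49 = 0.4163, which rounds to 0.42
```

Under that assumption, the reported 0.42 is consistent with the reported F1 of 0.38 and accuracy of 0.49.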
