Thomas Pöllabauer

Semantic Segmentation of Transparent and Opaque Drinking Glasses with the Help of Zero-shot Learning

Mar 19, 2025

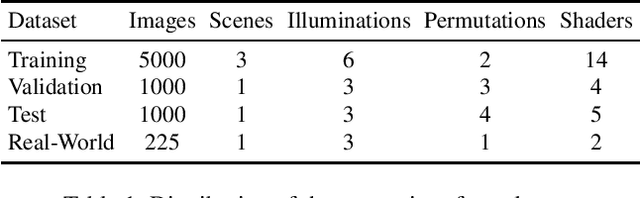

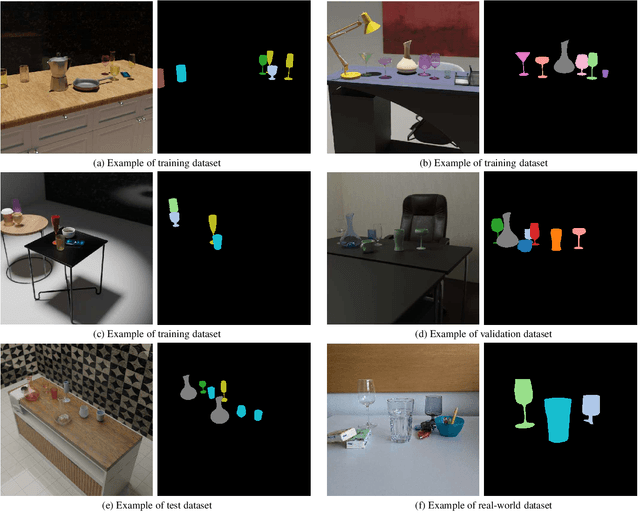

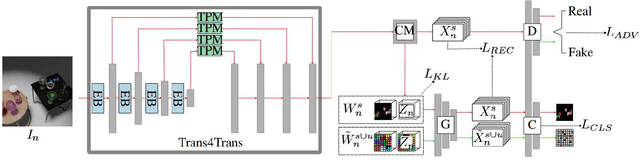

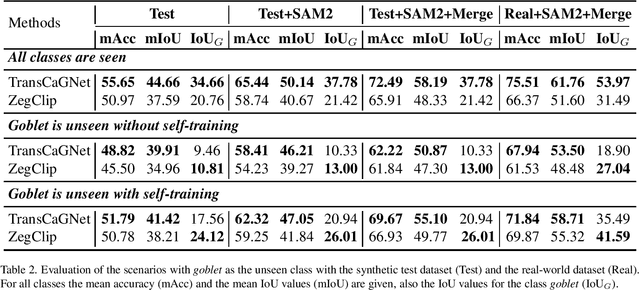

Abstract: Segmenting transparent structures in images is challenging since they are difficult to distinguish from the background. Common examples are drinking glasses, which are a ubiquitous part of our lives and appear in many different shapes and sizes. In this work we propose TransCaGNet, a modified version of the zero-shot model CaGNet. We exchange the segmentation backbone with the architecture of Trans4Trans to be capable of segmenting transparent objects. Since some glasses are rarely captured, we use zero-shot learning to create semantic segmentations of glass categories not given during training. We propose a novel synthetic dataset covering a diverse set of environmental conditions. Additionally, we capture a real-world evaluation dataset since most applications take place in the real world. Comparing our model with ZegClip, we show that TransCaGNet produces better mean IoU and accuracy values, while ZegClip outperforms it mostly for unseen classes. To improve the segmentation results, we combine the semantic segmentation of the models with the segmentation results of SAM 2. Our evaluation emphasizes that distinguishing between different classes is challenging for the models due to similarity, points of view, or coverings. Taking this behavior into account, we assign glasses multiple possible categories. The modification leads to an improvement of up to 13.68% for the mean IoU and up to 17.88% for the mean accuracy values on the synthetic dataset. Using our difficult synthetic dataset for training, the models produce even better results on the real-world dataset. The mean IoU is improved by up to 5.55% and the mean accuracy by up to 5.72% on the real-world dataset.
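The abstract reports results as mean IoU and mean accuracy. As a rough illustration of how these two metrics are commonly computed for a semantic segmentation (not the authors' evaluation code), here is a minimal sketch in plain Python. The function names, the nested-list label maps, and the choice to average only over classes present in the ground truth are assumptions made for this example.

```python
from collections import defaultdict


def per_class_counts(pred, target, ignore_label=None):
    """Count intersection, predicted and ground-truth pixels per class.

    `pred` and `target` are label maps given as nested lists of class ids
    (a hypothetical format chosen to keep the sketch dependency-free).
    """
    inter = defaultdict(int)    # pixels where prediction and target agree
    pred_px = defaultdict(int)  # pixels predicted as each class
    gt_px = defaultdict(int)    # pixels labelled as each class
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            if t == ignore_label:
                continue
            pred_px[p] += 1
            gt_px[t] += 1
            if p == t:
                inter[p] += 1
    return inter, pred_px, gt_px


def mean_iou_and_accuracy(pred, target, ignore_label=None):
    """Return (mean IoU, mean per-class accuracy) over ground-truth classes."""
    inter, pred_px, gt_px = per_class_counts(pred, target, ignore_label)
    if not gt_px:
        raise ValueError("no labelled pixels to evaluate")
    ious, accs = [], []
    for c in gt_px:
        union = pred_px[c] + gt_px[c] - inter[c]
        ious.append(inter[c] / union)      # IoU = |P ∩ G| / |P ∪ G|
        accs.append(inter[c] / gt_px[c])   # accuracy = |P ∩ G| / |G|
    return sum(ious) / len(ious), sum(accs) / len(accs)


if __name__ == "__main__":
    pred = [[0, 0, 1], [1, 1, 2]]
    target = [[0, 1, 1], [1, 1, 2]]
    miou, macc = mean_iou_and_accuracy(pred, target)
    print(f"mean IoU = {miou:.3f}, mean accuracy = {macc:.3f}")
```

Averaging per class rather than per pixel keeps rare glass categories from being drowned out by large background regions, which is why class-wise means are the usual choice for this kind of benchmark.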

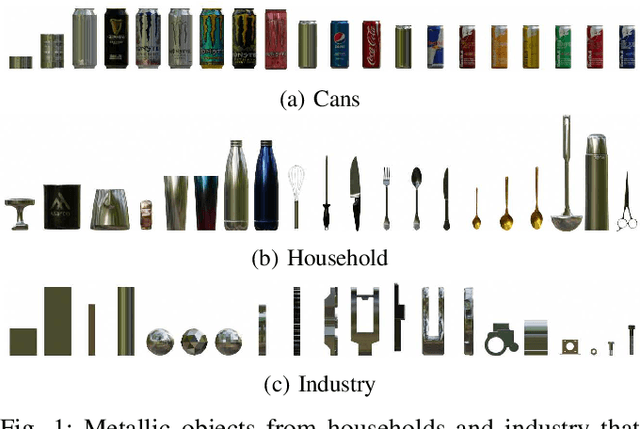

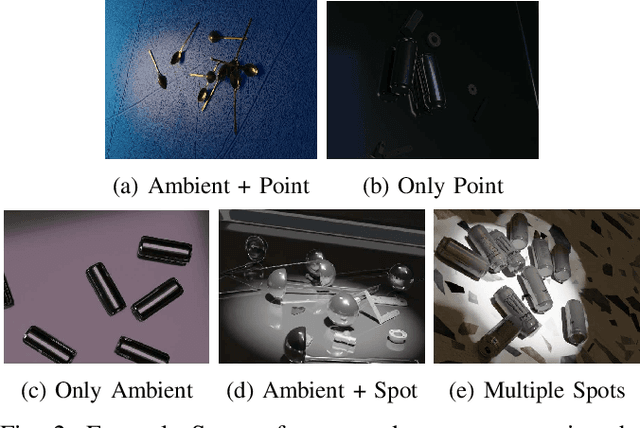

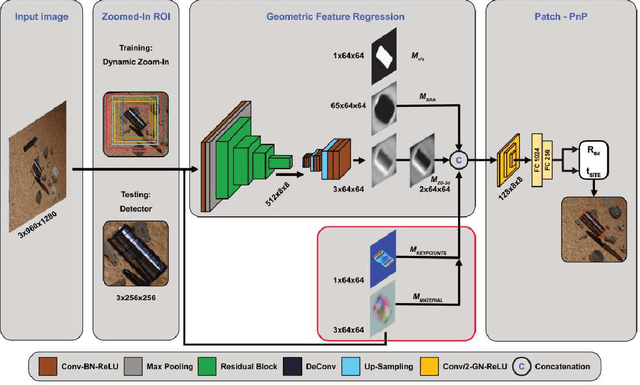

Improving 6D Object Pose Estimation of metallic Household and Industry Objects

Mar 05, 2025

Abstract: 6D object pose estimation suffers from reduced accuracy when applied to metallic objects. We set out to improve the state of the art by addressing challenges such as reflections and specular highlights in industrial applications. Our novel BOP-compatible dataset, featuring a diverse set of metallic objects (cans, household, and industrial items) under various lighting and background conditions, provides additional geometric and visual cues. We demonstrate that these cues can be effectively leveraged to enhance overall performance. To illustrate the usefulness of the additional features, we improve upon the GDRNPP algorithm by introducing an additional keypoint prediction and material estimator head in order to improve spatial scene understanding. Evaluations on the new dataset show improved accuracy for metallic objects, supporting the hypothesis that additional geometric and visual cues can improve learning.

EfficientPose 6D: Scalable and Efficient 6D Object Pose Estimation

Feb 19, 2025

Abstract: In industrial applications requiring real-time feedback, such as quality control and robotic manipulation, the demand for high-speed and accurate pose estimation remains critical. Despite advances improving speed and accuracy in pose estimation, finding a balance between computational efficiency and accuracy poses significant challenges in dynamic environments. Most current algorithms lack scalability in estimation time, especially for diverse datasets, and the state-of-the-art (SOTA) methods are often too slow. This study focuses on developing a fast and scalable set of pose estimators based on GDRNPP to meet or exceed current benchmarks in accuracy and robustness, particularly addressing the efficiency-accuracy trade-off essential in real-time scenarios. We propose the AMIS algorithm to tailor the utilized model according to an application-specific trade-off between inference time and accuracy. We further show the effectiveness of the AMIS-based model choice on four prominent benchmark datasets (LM-O, YCB-V, T-LESS, and ITODD).

YCB-LUMA: YCB Object Dataset with Luminance Keying for Object Localization

Nov 20, 2024

Abstract: Localizing target objects in images is an important task in computer vision. Often it is the first step towards solving a variety of applications in autonomous driving, maintenance, quality assurance, robotics, and augmented reality. Best-in-class solutions for this task rely on deep neural networks, which require a set of representative training data for best performance. Creating sets of sufficient quality, variety, and size is often difficult, error-prone, and expensive. This is where the method of luminance keying can help: it provides a simple yet effective solution to record high-quality data for training object detection and segmentation. We extend previous work that presented luminance keying on the common YCB-V set of household objects by recording the remaining objects of the YCB superset. The additional variety of objects (transparency, multiple color variations, non-rigid objects) further demonstrates the usefulness of luminance keying and might be used to test the applicability of the approach on new 2D object detection and segmentation algorithms.

End-to-End Probabilistic Geometry-Guided Regression for 6DoF Object Pose Estimation

Sep 18, 2024

Abstract: 6D object pose estimation is the problem of identifying the position and orientation of an object relative to a chosen coordinate system, which is a core technology for modern XR applications. State-of-the-art 6D object pose estimators directly predict an object pose given an object observation. Due to the ill-posed nature of the pose estimation problem, where multiple different poses can correspond to a single observation, generating additional plausible estimates per observation can be valuable. To address this, we reformulate the state-of-the-art algorithm GDRNPP and introduce EPRO-GDR (End-to-End Probabilistic Geometry-Guided Regression). Instead of predicting a single pose per detection, we estimate a probability density distribution of the pose. Using the evaluation procedure defined by the BOP (Benchmark for 6D Object Pose Estimation) Challenge, we test our approach on four of its core datasets and demonstrate superior quantitative results for EPRO-GDR on LM-O, YCB-V, and ITODD. Our probabilistic solution shows that predicting a pose distribution instead of a single pose can improve state-of-the-art single-view pose estimation while providing the additional benefit of being able to sample multiple meaningful pose candidates.

Transparency Distortion Robustness for SOTA Image Segmentation Tasks

May 21, 2024

Abstract: Semantic image segmentation facilitates a multitude of real-world applications, ranging from autonomous driving and industrial process supervision to vision aids for human beings. These models are usually trained in a supervised fashion using example inputs. Distribution shifts between these examples and the inputs in operation may cause erroneous segmentations. The robustness of semantic segmentation models against distribution shifts caused by differing camera or lighting setups, lens distortions, adversarial inputs, and image corruptions has been a topic of recent research. However, robustness against spatially varying radial distortion effects that can be caused by uneven glass structures (e.g., windows) or the chaotic refraction in heated air has not yet been addressed by the research community. We propose a method to synthetically augment existing datasets with spatially varying distortions. Our experiments show that these distortion effects degrade the performance of state-of-the-art segmentation models. Pretraining and enlarged model capacities prove to be suitable strategies for mitigating performance degradation to some degree, while fine-tuning on distorted images leads to only marginal performance improvements.

Influence of Water Droplet Contamination for Transparency Segmentation

May 21, 2024

Abstract: Computer vision techniques are on the rise for industrial applications, like process supervision and autonomous agents, e.g., in the healthcare domain and dangerous environments. While the general usability of these techniques is high, there are still challenging real-world use cases. Transparent structures in particular, which can appear in the form of glass doors, protective casings, or everyday objects like glasses, pose a challenge for computer vision methods. This paper evaluates transparent objects in combination with (naturally occurring) contamination caused by environmental effects like hazing. We introduce a novel publicly available dataset containing 489 images incorporating three grades of water droplet contamination on transparent structures and examine the resulting influence on transparency handling. Our findings show that contaminated transparent objects are easier to segment and that we are able to distinguish between different severity levels of contamination with a current state-of-the-art machine-learning model. This in turn opens up the possibility to enhance computer vision systems regarding resilience against, e.g., data shifts through contaminated protection casings, or to implement an automated cleaning alert.

Fast Training Data Acquisition for Object Detection and Segmentation using Black Screen Luminance Keying

May 13, 2024

Abstract: Deep Neural Networks (DNNs) require large amounts of annotated training data for good performance. Often this data is generated using manual labeling (error-prone and time-consuming) or rendering (requiring geometry and material information). Both approaches make it difficult or uneconomic to apply them to many small-scale applications. A fast and straightforward approach to acquiring the necessary training data would allow the adoption of deep learning in even the smallest of applications. Chroma keying is the process of replacing a color (usually blue or green) with another background. Instead of chroma keying, we propose luminance keying for fast and straightforward training image acquisition. We deploy a black screen with high light absorption (99.99%) to record roughly 1-minute-long videos of our target objects, circumventing typical problems of chroma keying, such as color bleeding or color overlap between background color and object color. Next, we automatically mask our objects using simple brightness thresholding, removing the need for manual annotation. Finally, we automatically place the objects on random backgrounds and train a 2D object detector. We extensively evaluate the performance on the widely used YCB-V object set and compare favourably to other conventional techniques such as rendering, without needing 3D meshes, materials, or any other information about our target objects, and in a fraction of the time needed for other approaches. Our work demonstrates highly accurate training data acquisition that allows training of state-of-the-art networks to start within minutes.
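The pipeline described in this abstract (brightness thresholding against a black screen, then pasting the object onto a random background) can be sketched in a few lines. This is only an illustrative toy in plain Python, not the authors' implementation: the function names, the nested-list image format, and the threshold value of 20 are assumptions for this example. The luma weights are the standard Rec. 601 coefficients.

```python
def luma(pixel):
    """Approximate perceived brightness of an (R, G, B) pixel (Rec. 601 weights)."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def luminance_mask(image, threshold=20.0):
    """Mark pixels brighter than `threshold` as object (True), the rest as screen."""
    return [[luma(px) > threshold for px in row] for row in image]


def bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max) of the masked pixels, or None if empty."""
    coords = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return min(xs), min(ys), max(xs), max(ys)


def composite(image, mask, background):
    """Paste masked object pixels onto a background of the same size."""
    return [
        [fg if on else bg for fg, on, bg in zip(img_row, mask_row, bg_row)]
        for img_row, mask_row, bg_row in zip(image, mask, background)
    ]


if __name__ == "__main__":
    black = (2, 2, 2)      # near-black screen pixel
    obj = (200, 50, 50)    # reddish object pixel
    frame = [
        [black, black, black],
        [black, obj, obj],
        [black, black, black],
    ]
    green = [[(0, 255, 0)] * 3 for _ in range(3)]  # stand-in "random" background

    mask = luminance_mask(frame)
    print("box:", bounding_box(mask))
    print("composited row 1:", composite(frame, mask, green)[1])
```

The mask doubles as the segmentation label and the bounding box as the detection label, which is what makes the recording usable as training data without manual annotation. Very dark object regions would fall below a fixed threshold, so a real pipeline would likely need some clean-up of the mask.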

One-to-many Reconstruction of 3D Geometry of cultural Artifacts using a synthetically trained Generative Model

Feb 13, 2024

Abstract: Estimating the 3D shape of an object using a single image is a difficult problem. Modern approaches achieve good results for general objects, based on real photographs, but worse results on less expressive representations such as historic sketches. Our automated approach generates a variety of detailed 3D representations from a single sketch depicting a medieval statue, and can be guided by multi-modal inputs, such as text prompts. It relies solely on synthetic data for training, making it adoptable even in cases with only a small number of training examples. Our solution allows domain experts such as curators to interactively reconstruct potential appearances of lost artifacts.

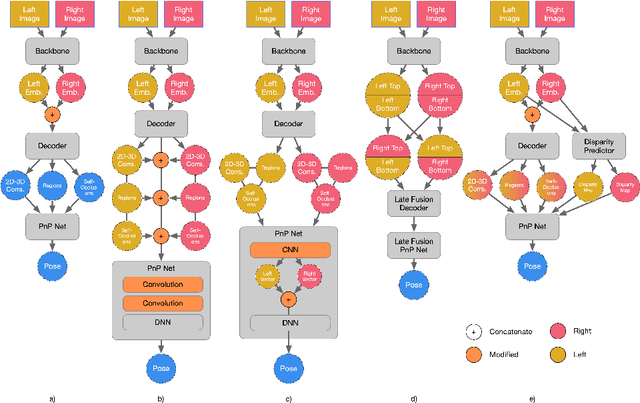

Extending 6D Object Pose Estimators for Stereo Vision

Feb 08, 2024

Abstract: Estimating the 6D pose of objects accurately, quickly, and robustly remains a difficult task. However, recent methods for directly regressing poses from RGB images using dense features have achieved state-of-the-art results. Stereo vision, which provides an additional perspective on the object, can help reduce pose ambiguity and occlusion. Moreover, stereo can directly infer the distance of an object, while mono-vision requires internalized knowledge of the object's size. To extend the state of the art in 6D object pose estimation to stereo, we created a BOP-compatible stereo version of the YCB-V dataset. Our method outperforms state-of-the-art 6D pose estimation algorithms by utilizing stereo vision and can easily be adopted for other dense feature-based algorithms.
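The remark that stereo can directly infer distance follows from textbook triangulation: for a rectified pinhole stereo pair, depth is Z = f · B / d, with focal length f in pixels, baseline B in metres, and disparity d in pixels. The sketch below illustrates only this geometric relation, not the paper's method. The function names and the example camera values are assumptions.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth along the optical axis for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def back_project(u, v, depth_m, focal_px, cx, cy):
    """Lift a left-image pixel (u, v) with known depth to 3D camera coordinates."""
    x = (u - cx) * depth_m / focal_px
    y = (v - cy) * depth_m / focal_px
    return x, y, depth_m


if __name__ == "__main__":
    # Hypothetical camera: 1000 px focal length, 10 cm baseline.
    z = depth_from_disparity(focal_px=1000.0, baseline_m=0.1, disparity_px=50.0)
    point = back_project(u=740.0, v=360.0, depth_m=z, focal_px=1000.0, cx=640.0, cy=360.0)
    print("depth [m]:", z)
    print("3D point [m]:", point)
```

A single camera has no such relation available: the same image can come from a small nearby object or a large distant one, which is why a monocular estimator has to rely on learned knowledge of object size.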
