Dieter Fellner

RangeSAM: Leveraging Visual Foundation Models for Range-View represented LiDAR segmentation

Sep 19, 2025

Abstract:Point cloud segmentation is central to autonomous driving and 3D scene understanding. While voxel- and point-based methods dominate recent research due to their compatibility with deep architectures and ability to capture fine-grained geometry, they often incur high computational cost, irregular memory access, and limited real-time efficiency. In contrast, range-view methods, though relatively underexplored, can leverage mature 2D semantic segmentation techniques for fast and accurate predictions. Motivated by the rapid progress in Visual Foundation Models (VFMs) for captioning, zero-shot recognition, and multimodal tasks, we investigate whether SAM2, the current state-of-the-art VFM for segmentation tasks, can serve as a strong backbone for LiDAR point cloud segmentation in the range view. We present RangeSAM, to our knowledge the first range-view framework that adapts SAM2 to 3D segmentation, coupling efficient 2D feature extraction with standard projection/back-projection to operate on point clouds. To optimize SAM2 for range-view representations, we implement several architectural modifications to the encoder: (1) a novel module that emphasizes horizontal spatial dependencies inherent in LiDAR range images, (2) a customized configuration of tailored to the geometric properties of spherical projections, and (3) an adapted mechanism in the encoder backbone specifically designed to capture the unique spatial patterns and discontinuities present in range-view pseudo-images. Our approach achieves competitive performance on SemanticKITTI while benefiting from the speed, scalability, and deployment simplicity of 2D-centric pipelines. This work highlights the viability of VFMs as general-purpose backbones for 3D perception and opens a path toward unified, foundation-model-driven LiDAR segmentation. Our results let us conclude that range-view segmentation methods using VFMs lead to promising results.
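The "standard projection/back-projection" step mentioned in the abstract can be illustrated with the spherical projection commonly used for range-view LiDAR processing. The following is a minimal sketch, not the paper's implementation: the function names, the 64 x 2048 image size, and the +3°/-25° vertical field of view (typical of the 64-beam sensor behind SemanticKITTI) are all assumptions chosen for illustration.

```python
import numpy as np

def range_projection(points, height=64, width=2048,
                     fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project (N, 3) LiDAR points onto a range image (hypothetical helper).

    Image size and field of view are assumed values, not taken from the paper.
    Returns the range image plus the (row, col) pixel of every point.
    """
    fov_up = np.radians(fov_up_deg)
    fov_down = np.radians(fov_down_deg)
    fov = fov_up - fov_down

    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    depth = np.maximum(np.linalg.norm(points, axis=1), 1e-8)

    yaw = np.arctan2(y, x)        # horizontal angle
    pitch = np.arcsin(z / depth)  # vertical angle

    # Normalise both angles to pixel coordinates.
    u = 0.5 * (1.0 - yaw / np.pi) * width
    v = (1.0 - (pitch - fov_down) / fov) * height
    cols = np.clip(np.floor(u), 0, width - 1).astype(np.int64)
    rows = np.clip(np.floor(v), 0, height - 1).astype(np.int64)

    # Write far points first so that the nearest point wins per pixel
    # (with repeated indices, NumPy keeps the last assigned value).
    range_image = np.full((height, width), -1.0, dtype=np.float32)
    order = np.argsort(depth)[::-1]
    range_image[rows[order], cols[order]] = depth[order]
    return range_image, rows, cols

def back_project(label_image, rows, cols):
    """Look up a per-point label from a 2D per-pixel prediction."""
    return label_image[rows, cols]

# Three toy points: two share a pixel, one lies 90 degrees to the left.
points = np.array([[10.0, 0.0, 0.0], [5.0, 0.0, 0.0], [0.0, 10.0, 0.0]])
range_image, rows, cols = range_projection(points)

# Stand-in for a 2D segmentation output: label every pixel by its column parity.
label_image = np.tile(np.arange(2048) % 2, (64, 1))
point_labels = back_project(label_image, rows, cols)
```

Because several points can fall into one pixel, back-projection assigns them all the same predicted label; pipelines of this kind often add a post-processing step to refine such cases.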

Fast Training Data Acquisition for Object Detection and Segmentation using Black Screen Luminance Keying

May 13, 2024

Abstract:Deep Neural Networks (DNNs) require large amounts of annotated training data for good performance. Often this data is generated using manual labeling (error-prone and time-consuming) or rendering (requiring geometry and material information). Both approaches are difficult or uneconomic to apply to many small-scale applications. A fast and straightforward approach to acquiring the necessary training data would allow the adoption of deep learning in even the smallest of applications. Chroma keying is the process of replacing a color (usually blue or green) with another background. Instead of chroma keying, we propose luminance keying for fast and straightforward training image acquisition. We deploy a black screen with high light absorption (99.99%) to record roughly 1-minute-long videos of our target objects, circumventing typical problems of chroma keying, such as color bleeding or color overlap between background color and object color. Next, we automatically mask our objects using simple brightness thresholding, removing the need for manual annotation. Finally, we automatically place the objects on random backgrounds and train a 2D object detector. We extensively evaluate performance on the widely used YCB-V object set and compare favourably to other conventional techniques such as rendering, without needing 3D meshes, materials, or any other information about our target objects, and in a fraction of the time needed for other approaches. Our work demonstrates highly accurate training data acquisition that allows training of state-of-the-art networks to start within minutes.
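The masking and compositing steps described in the abstract can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions rather than the authors' pipeline: the function names, the Rec. 709 luma weights, and the threshold of 0.05 are hypothetical choices, and a real recording would likely need a tuned threshold and some mask clean-up.

```python
import numpy as np

def luminance_mask(frame_rgb, threshold=0.05):
    """Boolean foreground mask: True where a pixel is brighter than the
    black screen. The threshold is an assumed value, not from the paper."""
    rgb = frame_rgb.astype(np.float32) / 255.0
    # Rec. 709 luma weights (an assumption; any brightness measure would do).
    luma = 0.2126 * rgb[..., 0] + 0.7152 * rgb[..., 1] + 0.0722 * rgb[..., 2]
    return luma > threshold

def composite(frame_rgb, mask, background_rgb):
    """Paste the masked object pixels onto a background of the same shape."""
    out = background_rgb.copy()
    out[mask] = frame_rgb[mask]
    return out

def bounding_box(mask):
    """Axis-aligned box (x_min, y_min, x_max, y_max) around the mask,
    usable as a detection label; None if the mask is empty."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())

# Synthetic frame: a bright 2 x 3 "object" in front of a black screen.
frame = np.zeros((6, 8, 3), dtype=np.uint8)
frame[2:4, 3:6] = (200, 120, 40)

# Random background standing in for an arbitrary background image.
rng = np.random.default_rng(0)
background = rng.integers(0, 256, size=frame.shape, dtype=np.uint8)

mask = luminance_mask(frame)
image = composite(frame, mask, background)
box = bounding_box(mask)
```

The mask doubles as a segmentation label and the box as a detection label, which is why no manual annotation is needed in this setup.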

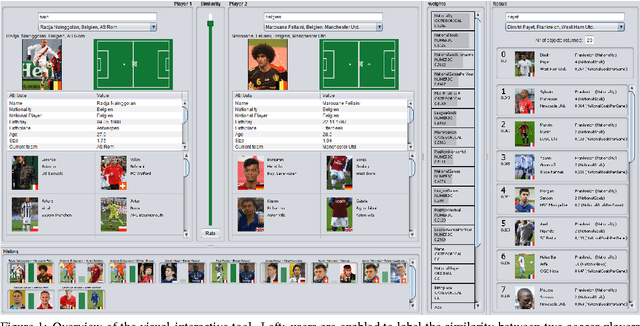

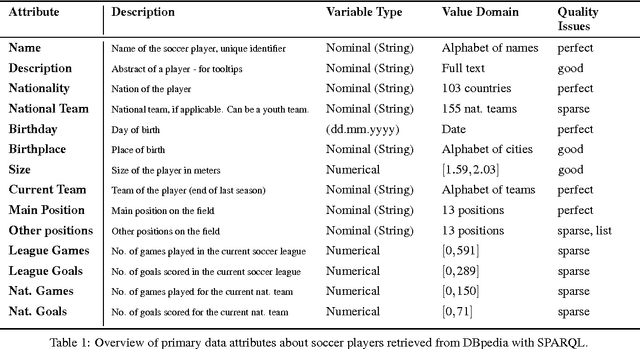

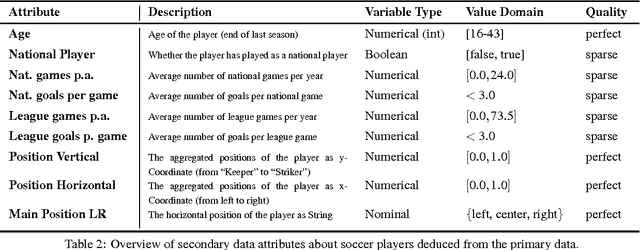

Visual-Interactive Similarity Search for Complex Objects by Example of Soccer Player Analysis

Mar 09, 2017

Abstract:The definition of similarity is a key prerequisite when analyzing complex data types in data mining, information retrieval, or machine learning. However, a meaningful definition is often hampered by the complexity of data objects and particularly by different notions of subjective similarity latent in targeted user groups. Taking the example of soccer players, we present a visual-interactive system that learns users' mental models of similarity. In a visual-interactive interface, users are able to label pairs of soccer players with respect to their subjective notion of similarity. Our proposed similarity model automatically learns the respective concept of similarity using an active learning strategy. A visual-interactive retrieval technique is provided to validate the model and to execute downstream retrieval tasks for soccer player analysis. The applicability of the approach is demonstrated in different evaluation strategies, including usage scenarios and cross-validation tests.
