Thomas Oberlin

Multi-Objective Optimization for Synthetic-to-Real Style Transfer

Feb 03, 2026

Abstract:Semantic segmentation networks require large amounts of pixel-level annotated data, which are costly to obtain for real-world images. Computer graphics engines can generate synthetic images alongside their ground-truth annotations. However, models trained on such images can perform poorly on real images due to the domain gap between real and synthetic images. Style transfer methods can reduce this difference by applying a realistic style to synthetic images. Choosing effective data transformations and their sequence is difficult due to the large combinatorial search space of style transfer operators. Using multi-objective genetic algorithms, we optimize pipelines to balance structural coherence and style similarity to target domains. We study the use of paired-image metrics on individual image samples during evolution to enable rapid pipeline evaluation, as opposed to standard distributional metrics that require the generation of many images. After optimization, we evaluate the resulting Pareto front using distributional metrics and segmentation performance. We apply this approach to standard datasets in synthetic-to-real domain adaptation: from the video game GTA5 to real image datasets Cityscapes and ACDC, focusing on adverse conditions. Results demonstrate that evolutionary algorithms can propose diverse augmentation pipelines adapted to different objectives. The contribution of this work is the formulation of style transfer as a sequencing problem suitable for evolutionary optimization and the study of efficient metrics that enable feasible search in this space. The source code is available at: https://github.com/echigot/MOOSS.
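As a rough illustration of the Pareto front mentioned in this abstract, the sketch below keeps the non-dominated candidates among pipelines scored on two objectives to be minimized. The score pairs and the names `dominates` and `pareto_front` are hypothetical and chosen for illustration only; they are not taken from the MOOSS code, and the genetic algorithm itself is not shown.

```python
from typing import List, Sequence, Tuple


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if `a` is no worse than `b` on every objective and strictly
    better on at least one (all objectives are minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(scores: List[Tuple[float, ...]]) -> List[int]:
    """Return the indices of the entries that no other entry dominates."""
    return [
        i
        for i, s in enumerate(scores)
        if not any(dominates(t, s) for j, t in enumerate(scores) if j != i)
    ]


# Hypothetical (structure_loss, style_distance) pairs for five pipelines.
candidates = [(0.10, 0.90), (0.20, 0.50), (0.30, 0.60), (0.40, 0.20), (0.15, 0.95)]
front = pareto_front(candidates)
print(front)
```

Each pipeline kept on the front represents a different trade-off between the two objectives, which is why a multi-objective search returns a set of pipelines rather than a single best one.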

Synthetic Data for Robust Runway Detection

Oct 23, 2025

Abstract:Deep vision models are now mature enough to be integrated in industrial and possibly critical applications such as autonomous navigation. Yet, data collection and labeling to train such models require too much effort and cost for a single company or product. This drawback is more significant in critical applications, where training data must include all possible conditions, including rare scenarios. From this perspective, generating synthetic images is an appealing solution, since it allows cheap yet reliable coverage of all conditions and environments, provided that the impact of the synthetic-to-real distribution shift is mitigated. In this article, we consider the case of runway detection, which is a critical part of autonomous landing systems developed by aircraft manufacturers. We propose an image generation approach based on a commercial flight simulator that complements a few annotated real images. By controlling the image generation and the integration of real and synthetic data, we show that standard object detection models can achieve accurate prediction. We also evaluate their robustness with respect to adverse conditions, in our case nighttime images, that were not represented in the real data, and show the benefit of using a customized domain adaptation strategy.

Style Transfer with Diffusion Models for Synthetic-to-Real Domain Adaptation

May 22, 2025

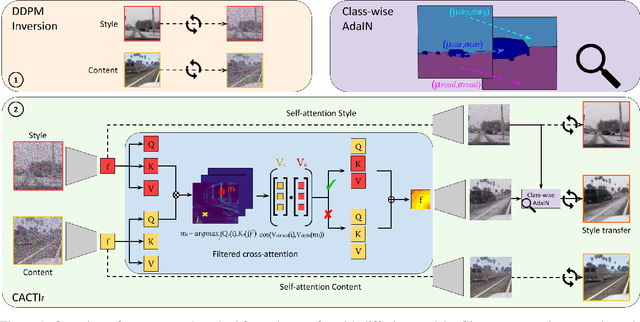

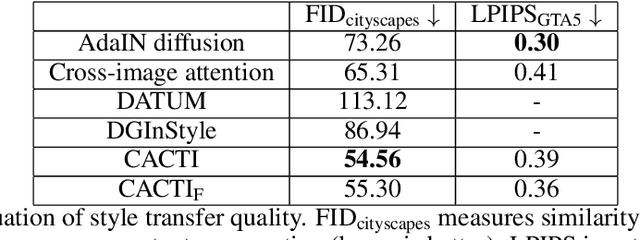

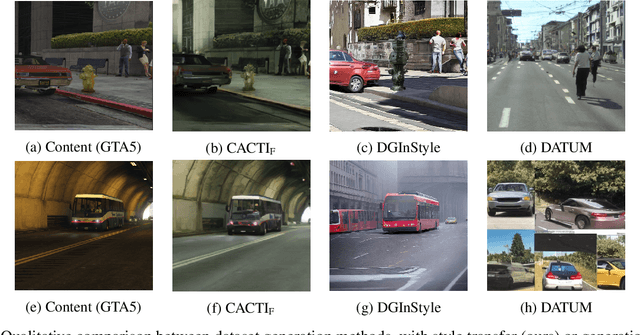

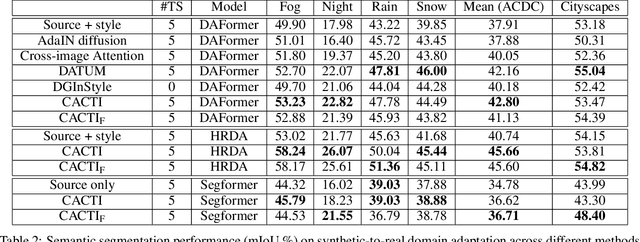

Abstract:Semantic segmentation models trained on synthetic data often perform poorly on real-world images due to domain gaps, particularly in adverse conditions where labeled data is scarce. Yet recent foundation models can generate realistic images without any training. This paper proposes to leverage such diffusion models to improve the performance of vision models trained on synthetic data. We introduce two novel techniques for semantically consistent style transfer using diffusion models: Class-wise Adaptive Instance Normalization and Cross-Attention (CACTI) and its extension with selective attention Filtering (CACTIF). CACTI applies statistical normalization selectively based on semantic classes, while CACTIF further filters cross-attention maps based on feature similarity, preventing artifacts in regions with weak cross-attention correspondences. Our methods transfer style characteristics while preserving semantic boundaries and structural coherence, unlike approaches that apply global transformations or generate content without constraints. Experiments using GTA5 as source and Cityscapes/ACDC as target domains show that our approach produces higher-quality images with lower FID scores and better content preservation. Our work demonstrates that class-aware diffusion-based style transfer effectively bridges the synthetic-to-real domain gap even with minimal target domain data, advancing robust perception systems for challenging real-world applications. The source code is available at: https://github.com/echigot/cactif.
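To make the idea of class-wise statistical normalization more concrete, here is a minimal sketch of adaptive instance normalization (AdaIN) applied separately within each semantic class mask. It is an assumption-laden toy on plain NumPy arrays: the function name `classwise_adain`, the array shapes, and the random inputs are hypothetical, and the sketch covers neither the diffusion model nor the cross-attention components of CACTI/CACTIF.

```python
import numpy as np


def classwise_adain(content, style, content_labels, style_labels, eps=1e-5):
    """Match per-class, per-channel mean and std of `content` to `style`.

    content, style: float arrays of shape (C, H, W).
    content_labels, style_labels: integer class maps of shape (H, W).
    """
    out = content.astype(float)  # astype returns a copy
    for cls in np.unique(content_labels):
        c_mask = content_labels == cls
        s_mask = style_labels == cls
        if not s_mask.any():
            continue  # class absent from the style image: leave it unchanged
        c_feat = content[:, c_mask]  # shape (C, number of pixels in class)
        s_feat = style[:, s_mask]
        c_mean = c_feat.mean(axis=1, keepdims=True)
        c_std = c_feat.std(axis=1, keepdims=True) + eps
        s_mean = s_feat.mean(axis=1, keepdims=True)
        s_std = s_feat.std(axis=1, keepdims=True)
        out[:, c_mask] = (c_feat - c_mean) / c_std * s_std + s_mean
    return out


# Toy inputs (hypothetical): 3 channels, 4x4 maps, two classes.
rng = np.random.default_rng(0)
content = rng.normal(size=(3, 4, 4))
style = rng.normal(loc=2.0, size=(3, 4, 4))
content_labels = np.zeros((4, 4), dtype=int)
content_labels[:, 2:] = 1
style_labels = np.zeros((4, 4), dtype=int)
style_labels[2:, :] = 1
stylized = classwise_adain(content, style, content_labels, style_labels)
```

Restricting the statistics to pixels of the same class keeps, for example, sky statistics from leaking into road regions, which a single global normalization cannot guarantee.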

PG-DPIR: An efficient plug-and-play method for high-count Poisson-Gaussian inverse problems

Apr 14, 2025

Abstract:Poisson-Gaussian noise describes the noise of various imaging systems, hence the need for efficient algorithms for Poisson-Gaussian image restoration. Deep learning methods offer state-of-the-art performance but often require sensor-specific training when used in a supervised setting. A promising alternative is given by plug-and-play (PnP) methods, which learn only a regularization through a denoiser, making it possible to restore images from several sources with the same network. This paper introduces PG-DPIR, an efficient PnP method for high-count Poisson-Gaussian inverse problems, adapted from DPIR. While DPIR is designed for white Gaussian noise, a naive adaptation to Poisson-Gaussian noise leads to prohibitively slow algorithms due to the absence of a closed-form proximal operator. To address this, we adapt DPIR to the specificities of Poisson-Gaussian noise and propose in particular an efficient initialization of the gradient descent required for the proximal step that accelerates convergence by several orders of magnitude. Experiments are conducted on satellite image restoration and super-resolution problems. High-resolution realistic Pleiades images are simulated for the experiments, which demonstrate that PG-DPIR achieves state-of-the-art performance with improved efficiency, which seems promising for on-ground satellite processing chains.

Deep priors for satellite image restoration with accurate uncertainties

Dec 05, 2024

Abstract:Satellite optical images, upon their on-ground receipt, offer a distorted view of the observed scene. Their restoration, classically including denoising, deblurring, and sometimes super-resolution, is required before their exploitation. Moreover, quantifying the uncertainty related to this restoration could be valuable by lowering the risk of hallucination and avoiding propagating these biases in downstream applications. Deep learning methods are now state-of-the-art for satellite image restoration. However, they require training a specific network for each sensor and they do not provide the associated uncertainties. This paper proposes a generic method involving a single network to restore images from several sensors and a scalable way to derive the uncertainties. We focus on deep regularization (DR) methods, which learn a deep prior on target images before plugging it into a model-based optimization scheme. First, we introduce VBLE-xz, which solves the inverse problem in the latent space of a variational compressive autoencoder, estimating the uncertainty jointly in the latent and in the image spaces. It enables scalable posterior sampling with relevant and calibrated uncertainties. Second, we propose the denoiser-based method SatDPIR, adapted from DPIR, which efficiently computes accurate point estimates. We conduct a comprehensive set of experiments on very high resolution simulated and real Pleiades images, asserting both the performance and robustness of the proposed methods. VBLE-xz and SatDPIR achieve state-of-the-art results compared to direct inversion methods. In particular, VBLE-xz is a scalable method to get realistic posterior samples and accurate uncertainties, while SatDPIR represents a compelling alternative to direct inversion methods when uncertainty quantification is not required.

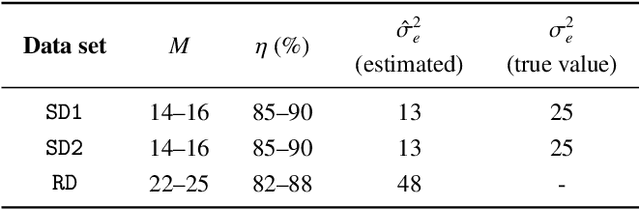

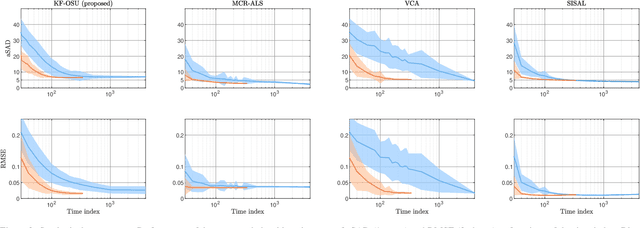

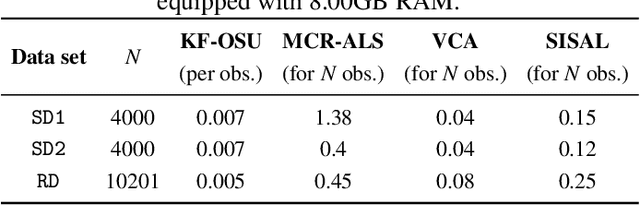

On-the-fly spectral unmixing based on Kalman filtering

Jul 22, 2024

Abstract:This work introduces an on-the-fly (i.e., online) linear unmixing method which is able to sequentially analyze spectral data acquired on a spectrum-by-spectrum basis. After deriving a sequential counterpart of the conventional linear mixing model, the proposed approach recasts the linear unmixing problem into a linear state-space estimation framework. Under Gaussian noise and state models, the estimation of the pure spectra can be efficiently conducted by resorting to Kalman filtering. Interestingly, it is shown that this Kalman filter can operate in a lower-dimensional subspace while ensuring the nonnegativity constraint inherent to pure spectra. This dimensionality reduction significantly lightens the computational burden, while leveraging recent advances related to the representation of essential spectral information. The proposed method is evaluated through extensive numerical experiments conducted on synthetic and real Raman data sets. The results show that this Kalman filter-based method offers a convenient trade-off between unmixing accuracy and computational efficiency, which is crucial for operating in an on-the-fly setting. To the best of the authors' knowledge, this is the first operational method which is able to solve the spectral unmixing problem efficiently in a dynamic fashion. It also constitutes a valuable building block for benefiting from acquisition and processing frameworks recently proposed in the microscopy literature, which are motivated by practical issues such as reducing acquisition time and avoiding potential damage to photosensitive samples.
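For readers unfamiliar with the linear state-space setting this abstract refers to, the following is a minimal sketch of a textbook Kalman filter with a random-walk state model, processing one measurement at a time. It is a generic illustration under assumed toy dimensions and noise levels; the function `kalman_step` and all numerical values are hypothetical, and the sketch includes neither the subspace reduction nor the nonnegativity handling described in the abstract.

```python
import numpy as np


def kalman_step(x, P, y, H, Q, R):
    """One predict/update cycle for the model
    x_t = x_{t-1} + w_t,  y_t = H x_t + v_t,
    with w_t ~ N(0, Q) and v_t ~ N(0, R)."""
    # Predict: identity transition, so only the covariance grows.
    x_pred = x
    P_pred = P + Q
    # Update with the new measurement.
    S = H @ P_pred @ H.T + R              # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)   # Kalman gain
    x_new = x_pred + K @ (y - H @ x_pred)
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new


# Toy experiment (hypothetical values): a static 2-D state observed with noise.
rng = np.random.default_rng(0)
x_true = np.array([1.0, 2.0])
H = np.eye(2)
Q = 1e-6 * np.eye(2)
R = 0.1**2 * np.eye(2)

x_est, P_est = np.zeros(2), np.eye(2)
for _ in range(200):
    y = H @ x_true + rng.normal(scale=0.1, size=2)
    x_est, P_est = kalman_step(x_est, P_est, y, H, Q, R)
```

Because each step only needs the previous estimate and the newest measurement, the cost per incoming spectrum stays constant, which is the property that makes this family of filters attractive for on-the-fly processing.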

Leveraging Self-Supervised Instance Contrastive Learning for Radar Object Detection

Feb 13, 2024

Abstract:In recent years, driven by the need for safer and more autonomous transport systems, the automotive industry has shifted toward integrating a growing number of Advanced Driver Assistance Systems (ADAS). Among the array of sensors employed for object recognition tasks, radar sensors have emerged as a formidable contender due to their capabilities in adverse weather conditions or low-light scenarios and their robustness in maintaining consistent performance across diverse environments. However, the small size of radar datasets and the complexity of labelling those data limit the performance of radar object detectors. Driven by the promising results of self-supervised learning in computer vision, this paper presents RiCL, an instance contrastive learning framework to pre-train radar object detectors. We propose to exploit the detection from the radar and the temporal information to pre-train the radar object detection model in a self-supervised way using contrastive learning. We aim to pre-train an object detector's backbone, head and neck to learn with less data. Experiments on the CARRADA and the RADDet datasets show the effectiveness of our approach in learning generic representations of objects in range-Doppler maps. Notably, our pre-training strategy allows us to use only 20% of the labelled data to reach a mAP@0.5 similar to that of a supervised approach using the whole training set.
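The abstract does not specify which contrastive objective RiCL uses. As a generic illustration of instance contrastive learning, the sketch below computes an InfoNCE-style loss, which is an assumption here, on toy embeddings: row `i` of `anchors` and row `i` of `positives` are treated as two views of the same object instance, and all other rows act as negatives. The function name `info_nce` and the data are hypothetical.

```python
import numpy as np


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE-style loss: row i of `positives` is the positive for row i of
    `anchors`; every other row of `positives` serves as a negative."""
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = a @ p.T / temperature                 # (N, N) cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))


# Toy embeddings (hypothetical): 8 instances, 16-dimensional features.
rng = np.random.default_rng(0)
anchors = rng.normal(size=(8, 16))
positives = anchors + 0.05 * rng.normal(size=(8, 16))

loss_matched = info_nce(anchors, positives)
loss_shuffled = info_nce(anchors, positives[::-1])  # every pair mismatched
```

The loss is low when each anchor is closest to its own positive and high when pairs are mismatched, which is the signal a pre-training stage of this kind relies on in place of labels.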

Variational Bayes image restoration with compressive autoencoders

Nov 29, 2023

Abstract:Regularization of inverse problems is of paramount importance in computational imaging. The ability of neural networks to learn efficient image representations has been recently exploited to design powerful data-driven regularizers. While state-of-the-art plug-and-play methods rely on an implicit regularization provided by neural denoisers, alternative Bayesian approaches consider Maximum A Posteriori (MAP) estimation in the latent space of a generative model, thus with an explicit regularization. However, state-of-the-art deep generative models require a huge amount of training data compared to denoisers. Besides, their complexity hampers the optimization of the latent MAP. In this work, we propose to use compressive autoencoders for latent estimation. These networks, which can be seen as variational autoencoders with a flexible latent prior, are smaller and easier to train than state-of-the-art generative models. We then introduce the Variational Bayes Latent Estimation (VBLE) algorithm, which performs this estimation within the framework of variational inference. This allows for fast and easy (approximate) posterior sampling. Experimental results on image datasets BSD and FFHQ demonstrate that VBLE reaches performance similar to that of state-of-the-art plug-and-play methods, while being able to quantify uncertainties faster than other existing posterior sampling techniques.

A recurrent CNN for online object detection on raw radar frames

Dec 21, 2022

Abstract:Automotive radar sensors provide valuable information for advanced driving assistance systems (ADAS). Radars can reliably estimate the distance to an object and the relative velocity, regardless of weather and light conditions. However, radar sensors suffer from low resolution and huge intra-class variations in the shape of objects. Exploiting the time information (e.g., multiple frames) has been shown to help better capture the dynamics of objects and, therefore, the variation in the shape of objects. Most temporal radar object detectors use 3D convolutions to learn spatial and temporal information. However, these methods are often non-causal and unsuitable for real-time applications. This work presents RECORD, a new recurrent CNN architecture for online radar object detection. We propose an end-to-end trainable architecture mixing convolutions and ConvLSTMs to learn spatio-temporal dependencies between successive frames. Our model is causal and requires only the past information encoded in the memory of the ConvLSTMs to detect objects. Our experiments show the relevance of this method for detecting objects in different radar representations (range-Doppler, range-angle); it outperforms state-of-the-art models on the ROD2021 and CARRADA datasets while being less computationally expensive. The code will be available soon.

Algorithms for audio inpainting based on probabilistic nonnegative matrix factorization

Jun 28, 2022

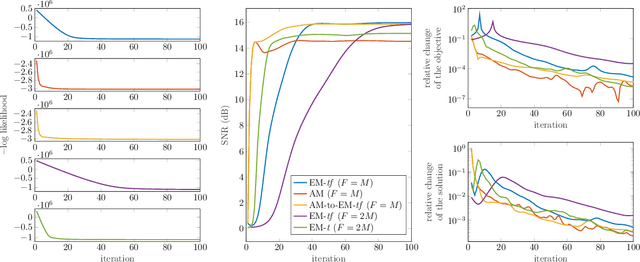

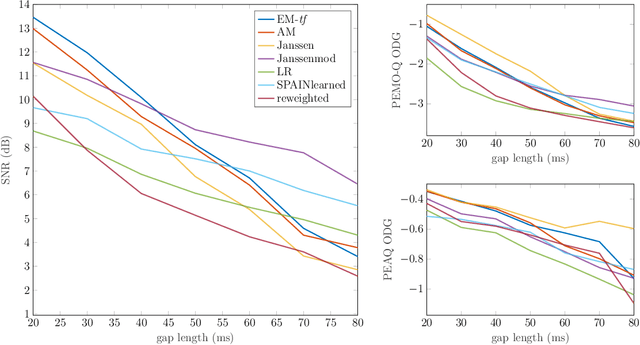

Abstract:Audio inpainting, i.e., the task of restoring missing or occluded audio signal samples, usually relies on sparse representations or autoregressive modeling. In this paper, we propose to structure the spectrogram with nonnegative matrix factorization (NMF) in a probabilistic framework. First, we treat the missing samples as latent variables, and derive two expectation-maximization algorithms for estimating the parameters of the model, depending on whether we formulate the problem in the time- or time-frequency domain. Then, we treat the missing samples as parameters, and we address this novel problem by deriving an alternating minimization scheme. We assess the potential of these algorithms for the task of restoring short- to middle-length gaps in music signals. Experiments reveal great convergence properties of the proposed methods, as well as competitive performance when compared to state-of-the-art audio inpainting techniques.
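To give a feel for how a low-rank nonnegative factorization can fill in missing entries, here is a minimal sketch of masked NMF with standard multiplicative updates for the Euclidean cost. This is a swapped-in, simpler technique, not the expectation-maximization or alternating minimization algorithms derived in the paper: it works on a generic nonnegative matrix standing in for a magnitude spectrogram, and the function `masked_nmf`, the matrix sizes, and the missing-entry rate are hypothetical.

```python
import numpy as np


def masked_nmf(V, M, rank, n_iter=500, seed=0, eps=1e-12):
    """Fit V ~= W @ H on the observed entries only (M == 1), using
    multiplicative updates for the masked Euclidean cost."""
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], rank)) + 0.1
    H = rng.random((rank, V.shape[1])) + 0.1
    losses = []
    for _ in range(n_iter):
        losses.append(float(np.sum(M * (V - W @ H) ** 2)))
        W *= ((M * V) @ H.T) / ((M * (W @ H)) @ H.T + eps)
        H *= (W.T @ (M * V)) / (W.T @ (M * (W @ H)) + eps)
    losses.append(float(np.sum(M * (V - W @ H) ** 2)))
    return W, H, losses


# Toy data (hypothetical): a rank-2 nonnegative matrix with ~20% missing entries.
rng = np.random.default_rng(0)
V = rng.random((6, 2)) @ rng.random((2, 8))
M = (rng.random(V.shape) > 0.2).astype(float)

W, H, losses = masked_nmf(V, M, rank=2)
V_filled = M * V + (1 - M) * (W @ H)  # keep observed values, fill the gaps
```

Observed entries are kept as they are and only the masked positions are replaced by the model's reconstruction, mirroring the general inpainting setting in which reliable samples should not be altered.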
