Tetsuya Matsuda

Spatio-temporal reconstruction of substance dynamics using compressed sensing in multi-spectral magnetic resonance spectroscopic imaging

Mar 01, 2024

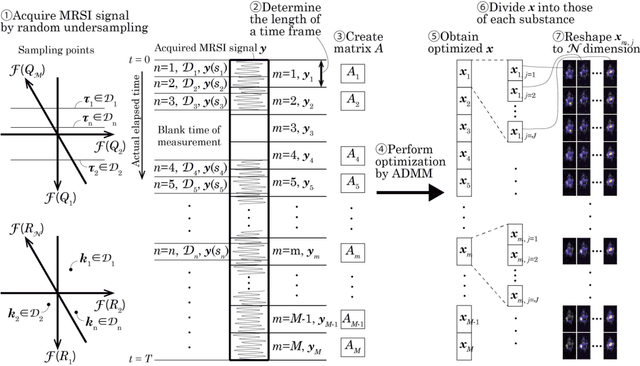

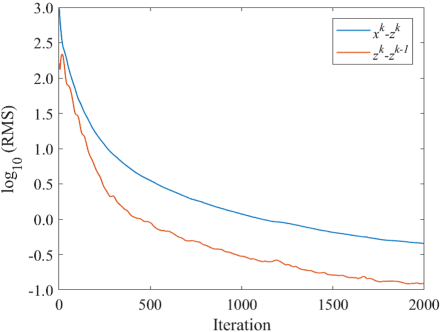

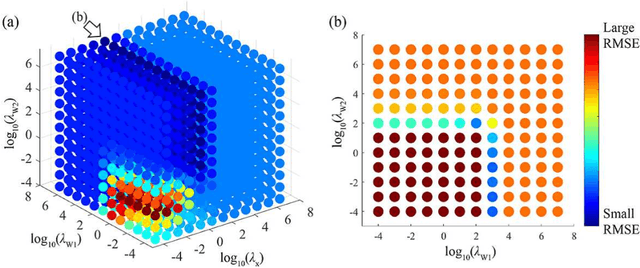

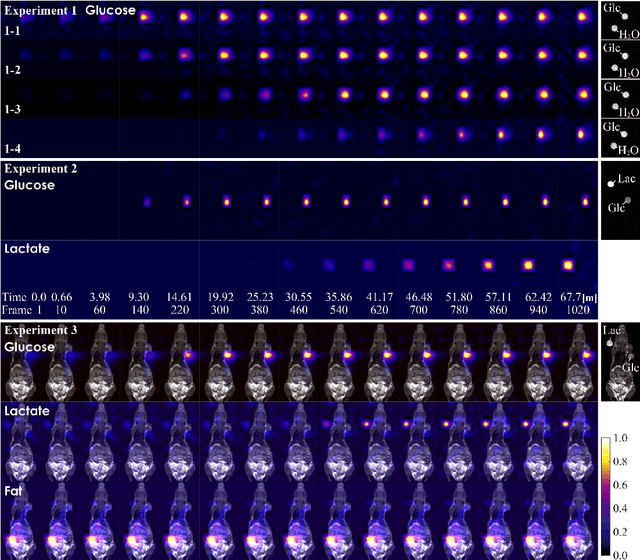

Abstract:The objective of our study is to observe the dynamics of multiple substances in vivo with high temporal resolution from multi-spectral magnetic resonance spectroscopic imaging (MRSI) data. Multi-spectral MRSI can effectively separate spectral peaks of multiple substances and is useful to measure spatial distributions of substances. However, it is difficult to measure time-varying substance distributions directly by ordinary full sampling because the measurement requires a significantly long time. In this study, we propose a novel method to reconstruct the spatio-temporal distributions of substances from randomly undersampled multi-spectral MRSI data on the basis of compressed sensing (CS) and the partially separable function model with base spectra of substances. In our method, we have employed spatio-temporal sparsity and temporal smoothness of the substance distributions as prior knowledge to perform CS. The effectiveness of our method has been evaluated using phantom data sets of glass tubes filled with glucose or lactate solution in increasing amounts over time and animal data sets of a tumor-bearing mouse to observe the metabolic dynamics involved in the Warburg effect in vivo. The reconstructed results are consistent with the expected behaviors, showing that our method can reconstruct the spatio-temporal distribution of substances with a temporal resolution of four seconds, which is an extremely short time scale compared with that of full sampling. Since this method utilizes only prior knowledge naturally assumed for the spatio-temporal distributions of substances and is independent of the number of spectral and spatial dimensions or the acquisition sequence of MRSI, it is expected to contribute to revealing the underlying substance dynamics in MRSI data already acquired or to be acquired in the future.
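The compressed sensing idea in this abstract, recovering a sparse signal from far fewer random measurements than unknowns, can be illustrated with a toy example. The sketch below is not the authors' method: it uses plain iterative shrinkage-thresholding (ISTA) with an L1 penalty only, a random Gaussian measurement matrix, and made-up problem sizes, and it omits the temporal-smoothness prior and the partially separable function model. All names (`soft_threshold`, `ista`, `lam`) are illustrative assumptions.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of the L1 norm (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(A, y, lam=0.05, n_iter=5000):
    """Minimise 0.5*||A x - y||^2 + lam*||x||_1 by iterative shrinkage."""
    # Step size = 1 / Lipschitz constant of the gradient (squared spectral norm).
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)                       # gradient of the data term
        x = soft_threshold(x - step * grad, step * lam)
    return x

rng = np.random.default_rng(0)
n, m, k = 100, 50, 5   # unknowns, measurements, nonzeros (toy sizes, assumed)

# Sparse ground truth with k nonzero entries of magnitude between 1 and 2.
x_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
x_true[support] = rng.choice([-1.0, 1.0], size=k) * rng.uniform(1.0, 2.0, size=k)

A = rng.standard_normal((m, n)) / np.sqrt(m)   # random measurement matrix (assumed)
y = A @ x_true                                  # undersampled, noiseless measurements

x_hat = ista(A, y)
rel_err = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
print(f"relative reconstruction error: {rel_err:.3f}")
```

The L1 penalty biases the recovered amplitudes slightly toward zero, so the error is small but not exactly zero even without noise.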

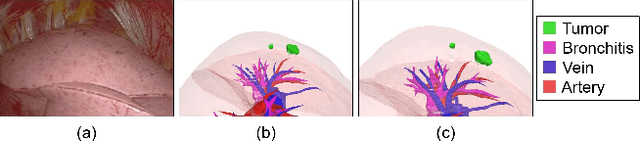

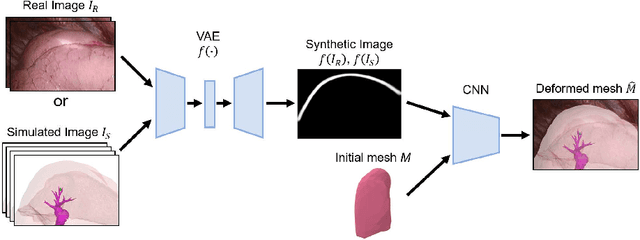

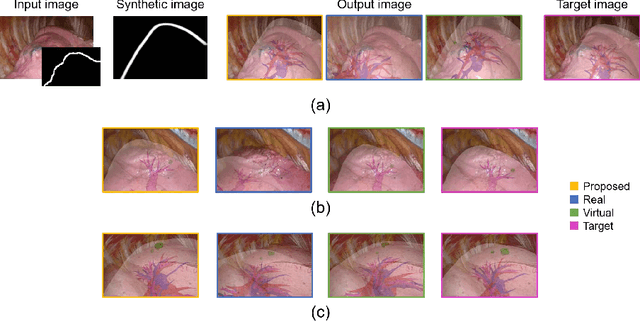

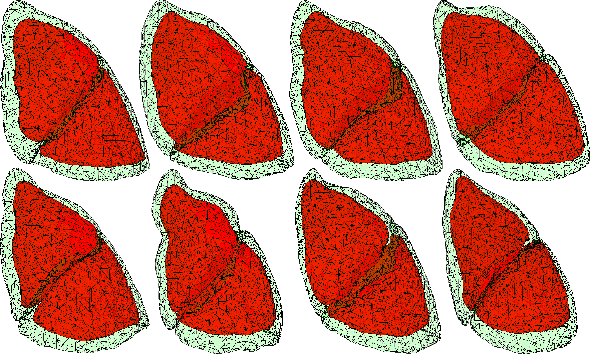

Shape Reconstruction from Thoracoscopic Images using Self-supervised Virtual Learning

Jan 25, 2023

Abstract:Intraoperative shape reconstruction of organs from endoscopic camera images is a complex yet indispensable technique for image-guided surgery. To address the uncertainty in reconstructing entire shapes from single-viewpoint occluded images, we propose a framework for generative virtual learning of shape reconstruction using image translation with common latent variables between simulated and real images. As it is difficult to prepare a sufficient amount of data to learn the relationship between endoscopic images and organ shapes, self-supervised virtual learning is performed using simulated images generated from statistical shape models. However, small differences between virtual and real images can degrade the estimation performance even if the simulated images are regarded as equivalent by humans. To address this issue, a Variational Autoencoder is used to convert real and simulated images into identical synthetic images. In this study, we targeted the shape reconstruction of collapsed lungs from thoracoscopic images and confirmed that virtual learning could improve the similarity between real and simulated images. Furthermore, the shape reconstruction error was reduced by 16.9%.

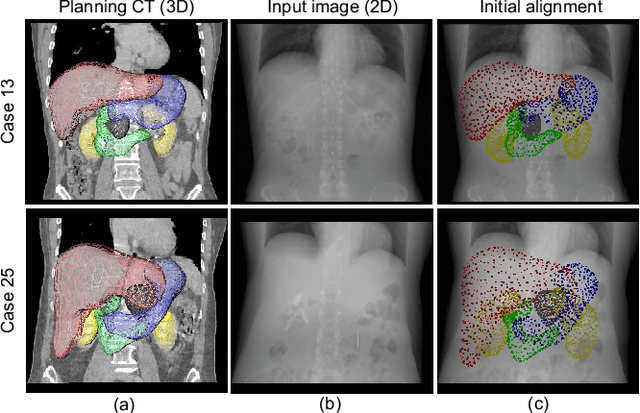

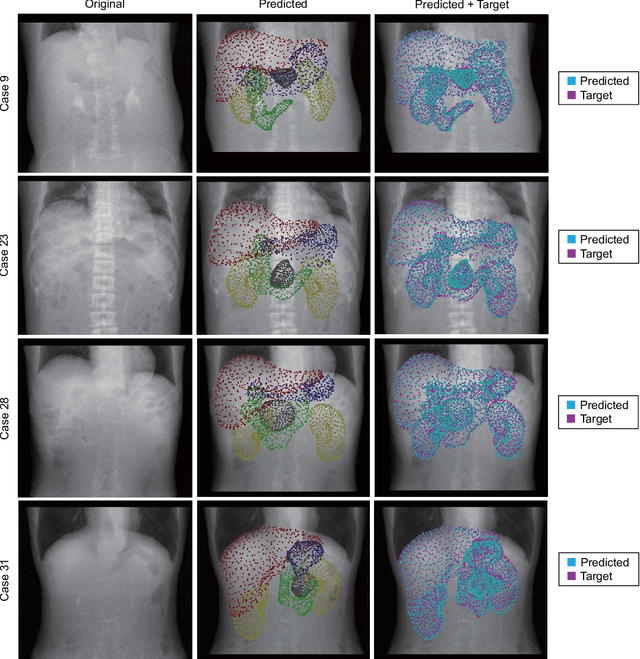

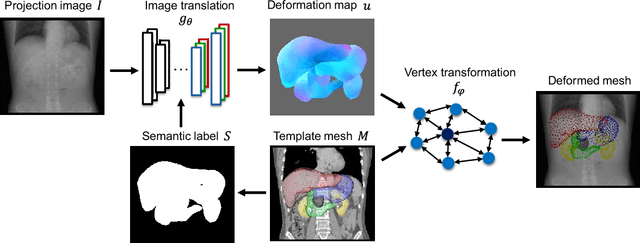

2D/3D Deep Image Registration by Learning 3D Displacement Fields for Abdominal Organs

Dec 11, 2022

Abstract:Deformable registration of two-dimensional/three-dimensional (2D/3D) images of abdominal organs is a complicated task because the abdominal organs deform significantly and their contours are not detected in two-dimensional X-ray images. We propose a supervised deep learning framework that achieves 2D/3D deformable image registration between 3D volumes and single-viewpoint 2D projected images. The proposed method learns the translation from the target 2D projection images and the initial 3D volume to 3D displacement fields. In experiments, we registered 3D computed tomography (CT) volumes to digitally reconstructed radiographs generated from abdominal 4D-CT volumes. For validation, we used 4D-CT volumes of 35 cases and confirmed that the 3D-CT volumes reflecting the nonlinear and local respiratory organ displacement were reconstructed. The proposed method demonstrates performance comparable to conventional methods, with a Dice similarity coefficient of 91.6% for the liver region and 85.9% for the stomach region, while estimating significantly more accurate CT values.
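For readers unfamiliar with the Dice similarity coefficient reported here, the following is a minimal sketch of how it is commonly computed for two binary region masks, 2|A∩B| / (|A| + |B|). The function name and the toy masks are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def dice_coefficient(mask_a, mask_b):
    """Dice similarity coefficient 2|A∩B| / (|A| + |B|) of two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * np.logical_and(a, b).sum() / total

# Toy 2D masks: the predicted region has 4 pixels, the reference has 6,
# and all 4 predicted pixels lie inside the reference.
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:3] = True
ref = np.zeros((4, 4), dtype=bool)
ref[1:3, 1:4] = True

score = dice_coefficient(pred, ref)
print(score)  # 2*4 / (4+6) = 0.8
```

A value of 0.8 corresponds to 80% when the coefficient is quoted as a percentage, as in the abstract.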

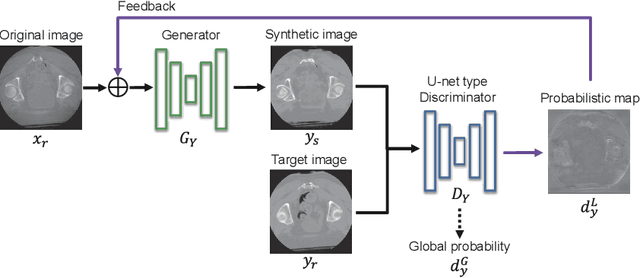

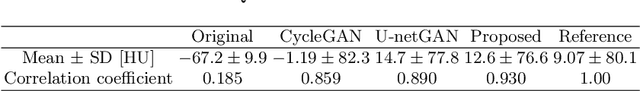

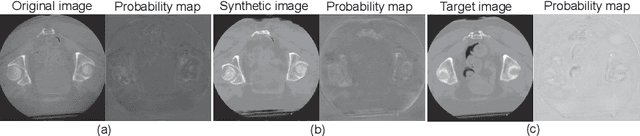

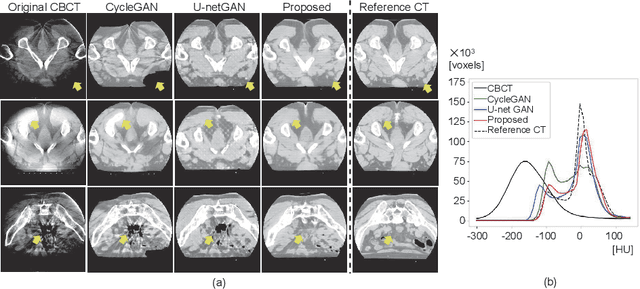

Feedback Assisted Adversarial Learning to Improve the Quality of Cone-beam CT Images

Oct 23, 2022

Abstract:Unsupervised image translation using adversarial learning has been attracting attention to improve the image quality of medical images. However, adversarial training based on the global evaluation values of discriminators does not provide sufficient translation performance for locally different image features. We propose adversarial learning with a feedback mechanism from a discriminator to improve the quality of CBCT images. This framework employs U-net as the discriminator and outputs a probability map representing the local discrimination results. The probability map is fed back to the generator and used for training to improve the image translation. Our experiments using 76 corresponding CT-CBCT images confirmed that the proposed framework could capture more diverse image features than conventional adversarial learning frameworks and produced synthetic images with pixel values close to the reference image and a correlation coefficient of 0.93.

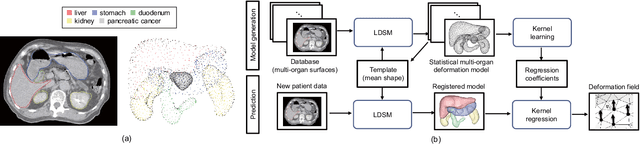

IGCN: Image-to-graph Convolutional Network for 2D/3D Deformable Registration

Oct 31, 2021

Abstract:Organ shape reconstruction based on a single-projection image during treatment has wide clinical scope, e.g., in image-guided radiotherapy and surgical guidance. We propose an image-to-graph convolutional network that achieves deformable registration of a 3D organ mesh for a single-viewpoint 2D projection image. This framework enables simultaneous training of two types of transformation: from the 2D projection image to a displacement map, and from the sampled per-vertex feature to a 3D displacement that satisfies the geometrical constraint of the mesh structure. Assuming application to radiation therapy, the 2D/3D deformable registration performance is verified for multiple abdominal organs that have not been targeted to date, i.e., the liver, stomach, duodenum, and kidney, and for pancreatic cancer. The experimental results show shape prediction considering relationships among multiple organs can be used to predict respiratory motion and deformation from digitally reconstructed radiographs with clinically acceptable accuracy.

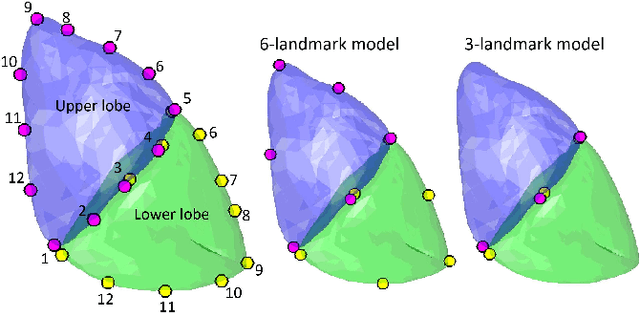

Kernel-based framework to estimate deformations of pneumothorax lung using relative position of anatomical landmarks

Feb 24, 2021

Abstract:In video-assisted thoracoscopic surgeries, successful procedures of nodule resection are highly dependent on the precise estimation of lung deformation between the inflated lung in the computed tomography (CT) images during preoperative planning and the deflated lung in the treatment views during surgery. Lungs in the pneumothorax state during surgery have a large volume change from normal lungs, making it difficult to build a mechanical model. The purpose of this study is to develop a deformation estimation method of the 3D surface of a deflated lung from a few partial observations. To estimate deformations for a largely deformed lung, a kernel regression-based solution was introduced. The proposed method used a few landmarks to capture the partial deformation between the 3D surface mesh obtained from preoperative CT and the intraoperative anatomical positions. The deformation of the entire mesh model was estimated per vertex as a position relative to the landmarks. The landmarks were placed in the anatomical position of the lung's outer contour. The method was applied to nine datasets of the left lungs of live Beagle dogs. Contrast-enhanced CT images of the lungs were acquired. The proposed method achieved a local positional error of vertices of 2.74 mm, Hausdorff distance of 6.11 mm, and Dice similarity coefficient of 0.94. Moreover, the proposed method could estimate lung deformations from a small number of training cases and a small observation area. This study contributes to the data-driven modeling of pneumothorax deformation of the lung.
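The general idea of kernel regression from a few landmarks to every mesh vertex can be sketched as follows. This is a generic Gaussian kernel ridge regression that interpolates landmark displacements, not the paper's formulation, which is based on relative positions of anatomical landmarks. The kernel choice, `sigma`, `reg`, and all coordinates are assumptions made for illustration.

```python
import numpy as np

def gaussian_kernel(P, Q, sigma):
    """Gaussian (RBF) kernel matrix between point sets P (n x 3) and Q (m x 3)."""
    d2 = ((P[:, None, :] - Q[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def fit_displacements(landmarks, displacements, sigma=1.0, reg=1e-8):
    """Solve (K + reg*I) W = D for the kernel weights W."""
    K = gaussian_kernel(landmarks, landmarks, sigma)
    return np.linalg.solve(K + reg * np.eye(len(landmarks)), displacements)

def predict_displacements(vertices, landmarks, weights, sigma=1.0):
    """Evaluate the fitted displacement field at arbitrary vertices."""
    return gaussian_kernel(vertices, landmarks, sigma) @ weights

# Hypothetical landmark positions and their observed displacements.
landmarks = np.array([[0.0, 0.0, 0.0],
                      [1.0, 0.0, 0.0],
                      [0.0, 1.0, 0.0],
                      [0.0, 0.0, 1.0]])
displacements = np.array([[0.0, 0.0, 0.0],
                          [0.1, 0.0, 0.0],
                          [0.0, -0.2, 0.0],
                          [0.0, 0.0, 0.3]])

weights = fit_displacements(landmarks, displacements)

# Estimate displacements at two unobserved (hypothetical) mesh vertices.
vertices = np.array([[0.5, 0.5, 0.5],
                     [0.2, 0.1, 0.0]])
estimated = predict_displacements(vertices, landmarks, weights)
print(estimated)
```

With a very small `reg`, the fitted field reproduces the landmark displacements almost exactly; a larger value trades that fidelity for a smoother field.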

Three-dimensional Generative Adversarial Nets for Unsupervised Metal Artifact Reduction

Nov 19, 2019

Abstract:The reduction of metal artifacts in computed tomography (CT) images, specifically for strong artifacts generated from multiple metal objects, is a challenging issue in medical imaging research. Although there have been some studies on supervised metal artifact reduction through the learning of synthesized artifacts, it is difficult for simulated artifacts to cover the complexity of the real physical phenomena that may be observed in X-ray propagation. In this paper, we introduce metal artifact reduction methods based on an unsupervised volume-to-volume translation learned from clinical CT images. We construct three-dimensional adversarial nets with a regularized loss function designed for metal artifacts from multiple dental fillings. The results of experiments using 915 CT volumes from real patients demonstrate that the proposed framework has an outstanding capacity to reduce strong artifacts and to recover underlying missing voxels, while preserving the anatomical features of soft tissues and tooth structures from the original images.

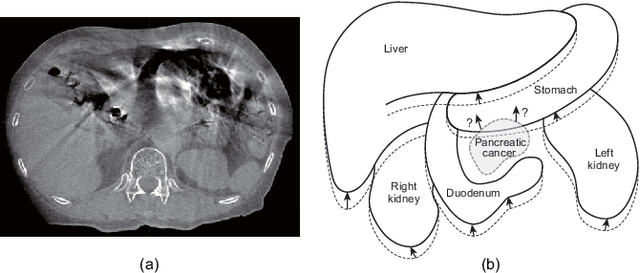

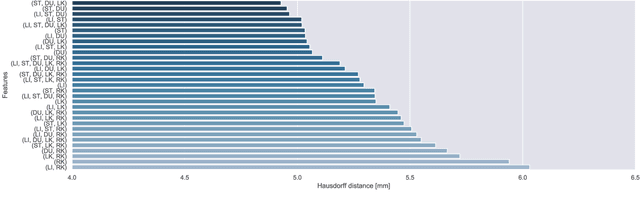

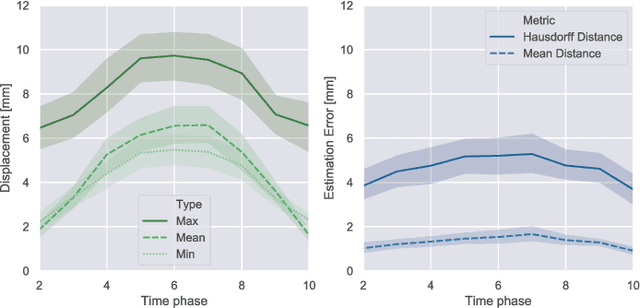

Statistical Deformation Reconstruction Using Multi-organ Shape Features for Pancreatic Cancer Localization

Nov 13, 2019

Abstract:Respiratory motion and the associated deformations of abdominal organs and tumors are essential information in clinical applications. However, inter- and intra-patient multi-organ deformations are complex and have not been statistically formulated, whereas single organ deformations have been widely studied. In this paper, we introduce a multi-organ deformation library and its application to deformation reconstruction based on the shape features of multiple abdominal organs. Statistical multi-organ motion/deformation models of the stomach, liver, left and right kidneys, and duodenum were generated by shape matching their region labels defined on four-dimensional computed tomography images. A total of 250 volumes were measured from 25 pancreatic cancer patients. This paper also proposes a per-region-based deformation learning using the reproducing kernel to predict the displacement of pancreatic cancer for adaptive radiotherapy. The experimental results show that the proposed concept estimates deformations better than general per-patient-based learning models and achieves a clinically acceptable estimation error with a mean distance of 1.2 $\pm$ 0.7 mm and a Hausdorff distance of 4.2 $\pm$ 2.3 mm throughout the respiratory motion.
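The two error measures quoted above can be illustrated on small point sets. The sketch below computes a symmetric Hausdorff distance and one common symmetric definition of mean surface distance by brute force. The exact definitions used in the paper are not stated in the abstract, so these are assumptions, as are the function names and the example points.

```python
import numpy as np

def pairwise_distances(P, Q):
    """Euclidean distances between every point of P (n x d) and Q (m x d)."""
    return np.linalg.norm(P[:, None, :] - Q[None, :, :], axis=-1)

def hausdorff_distance(P, Q):
    """Symmetric Hausdorff distance: the worst-case nearest-neighbour distance."""
    D = pairwise_distances(P, Q)
    return max(D.min(axis=1).max(), D.min(axis=0).max())

def mean_surface_distance(P, Q):
    """Average nearest-neighbour distance, symmetrised over both directions."""
    D = pairwise_distances(P, Q)
    return 0.5 * (D.min(axis=1).mean() + D.min(axis=0).mean())

# Two toy point sets that differ in one point.
P = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
Q = np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 0.0]])

hd = hausdorff_distance(P, Q)
md = mean_surface_distance(P, Q)
print(hd, md)  # 1.0 0.5
```

The brute-force distance matrix needs memory proportional to the product of the two point counts, so dense organ meshes would typically call for a spatial index such as a k-d tree.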

Sparse Elasticity Reconstruction and Clustering using Local Displacement Fields

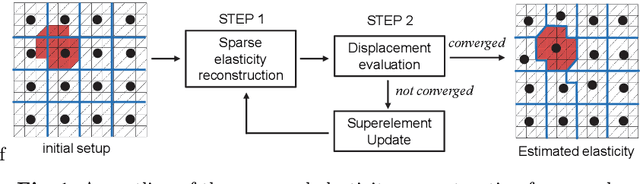

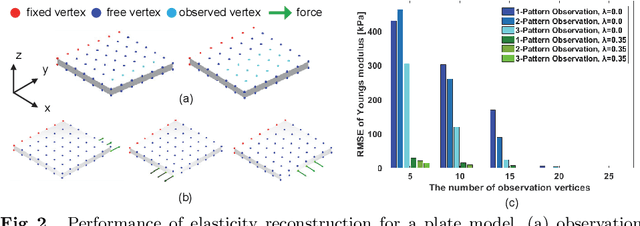

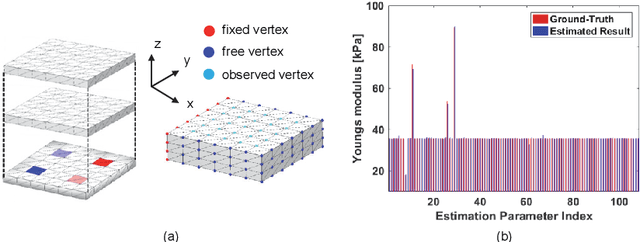

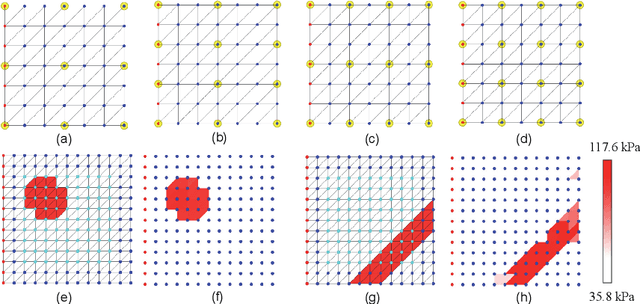

Feb 21, 2019

Abstract:This paper introduces an elasticity reconstruction method based on local displacement observations of elastic bodies. Sparse reconstruction theory is applied to formulate the underdetermined inverse problems of elasticity reconstruction including unobserved areas. An online local clustering scheme called a superelement is proposed to reduce the number of dimensions of the optimization parameters. Alternating the optimization of element boundaries and elasticity parameters enables the elasticity distribution to be estimated with a higher spatial resolution. The simulation experiments show that the elasticity distribution can be reconstructed from observations of approximately 10% of the total body. The estimation error was reduced when the sparseness of the elasticity distribution was considered.

Deformation estimation of an elastic object by partial observation using a neural network

Nov 28, 2017

Abstract:Estimating the deformation of an elastic object that models an internal organ is important for computer-assisted surgical navigation. The aim of this study is to estimate the deformation of an entire three-dimensional elastic object using displacement information of very few observation points. A learning approach with a neural network was introduced to estimate the entire deformation of an object. We applied our method to two elastic objects: a rectangular parallelepiped model and a human liver model reconstructed from computed tomography data. The average estimation error for the human liver model was 0.041 mm when the object was deformed by up to 66.4 mm and only around 3% of the object was observed. These results indicate that the deformation of an entire elastic object can be estimated with an acceptable level of error from limited observations by applying a trained neural network to a new deformation.
