Taro Sekiyama

Learning Weighted Finite Automata over the Max-Plus Semiring and its Termination

Jul 13, 2024

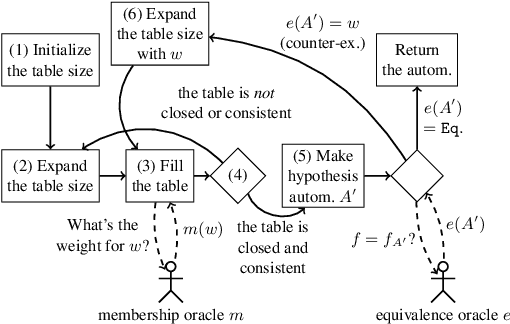

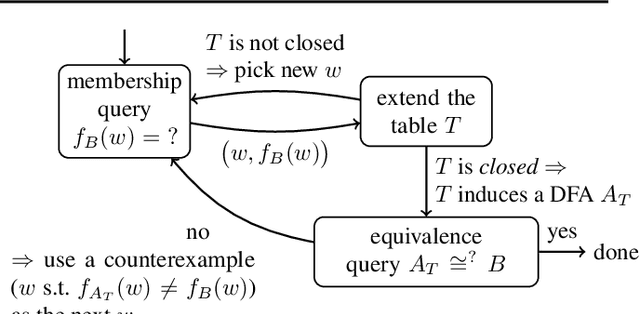

Abstract:Active learning of finite automata has been vigorously pursued for the purposes of analysis and explanation of black-box systems. In this paper, we study an L*-style learning algorithm for weighted automata over the max-plus semiring. The max-plus setting exposes a "consistency" issue in the previously studied semiring-generic extension of L*: we show that it can fail to maintain consistency of tables, and can thus make equivalence queries on obviously wrong hypothesis automata. We present a theoretical fix by a mathematically clean notion of column-closedness. We also present a nontrivial and reasonably broad class of weighted languages over the max-plus semiring in which our algorithm terminates.

Enhancing Loop-Invariant Synthesis via Reinforcement Learning

Aug 14, 2021

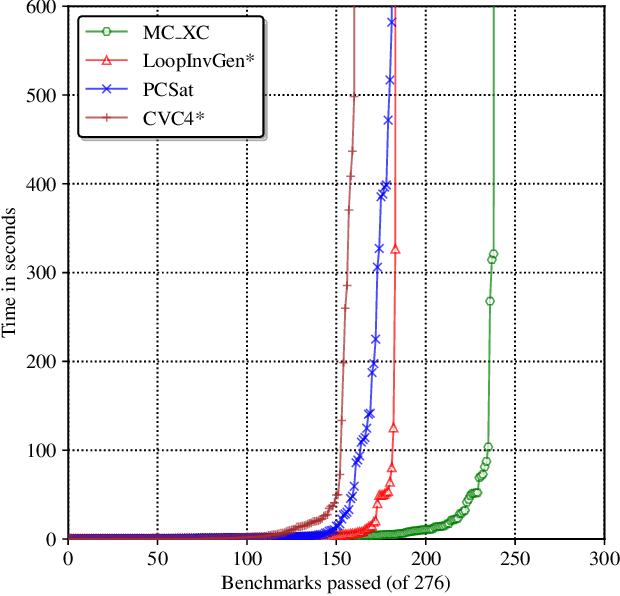

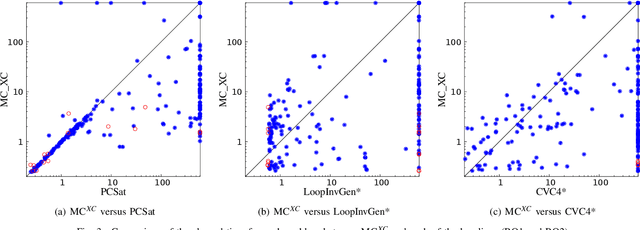

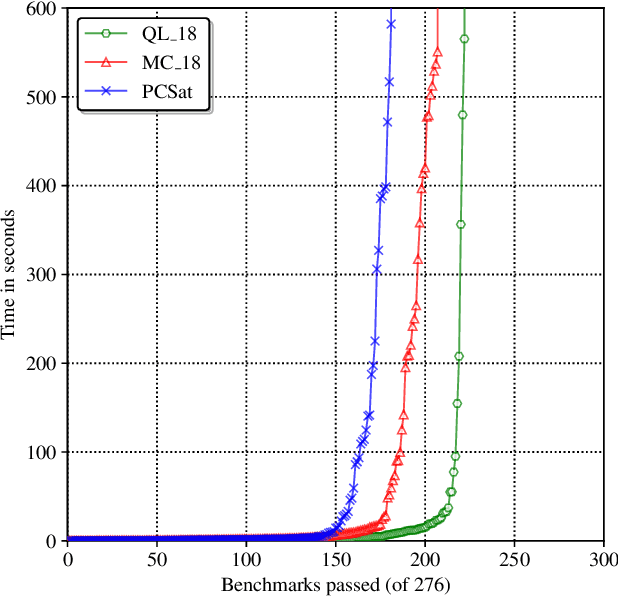

Abstract:Loop-invariant synthesis is the basis of every program verification procedure. Due to its undecidability in general, a tool for invariant synthesis necessarily uses heuristics. Despite the common belief that the design of heuristics is vital for the effective performance of a verifier, little work has been performed toward obtaining the optimal heuristics for each invariant-synthesis tool. Instead, developers have hand-tuned the heuristics of tools. This study demonstrates that we can effectively and automatically learn a good heuristic via reinforcement learning for an invariant synthesizer PCSat. Our experiment shows that PCSat combined with the heuristic learned by reinforcement learning outperforms the state-of-the-art solvers for this task. To the best of our knowledge, this is the first work that investigates learning the heuristics of an invariant synthesis tool.

Toward Neural-Network-Guided Program Synthesis and Verification

Mar 17, 2021

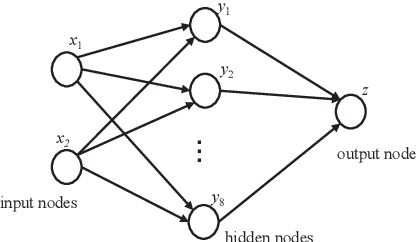

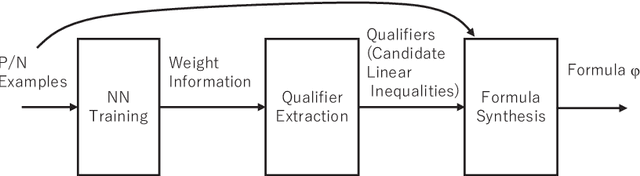

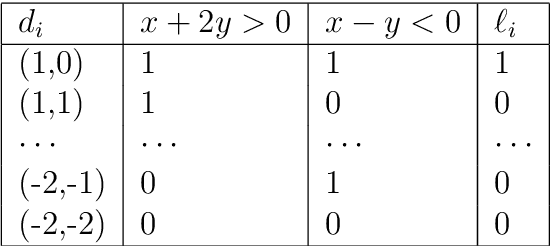

Abstract:We propose a novel framework of program and invariant synthesis called neural network-guided synthesis. We first show that, by suitably designing and training neural networks, we can extract logical formulas over integers from the weights and biases of the trained neural networks. Based on the idea, we have implemented a tool to synthesize formulas from positive/negative examples and implication constraints, and obtained promising experimental results. We also discuss two applications of our synthesis method. One is the use of our tool for qualifier discovery in the framework of ICE-learning-based CHC solving, which can in turn be applied to program verification and inductive invariant synthesis. Another application is to a new program development framework called oracle-based programming, which is a neural-network-guided variation of Solar-Lezama's program synthesis by sketching.

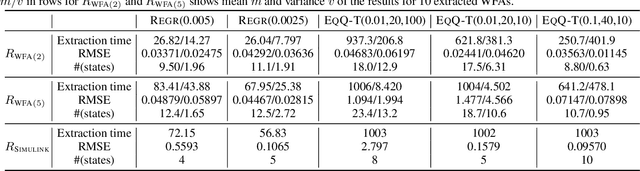

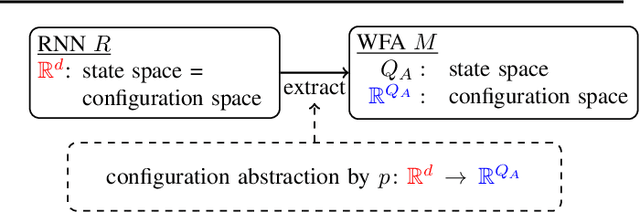

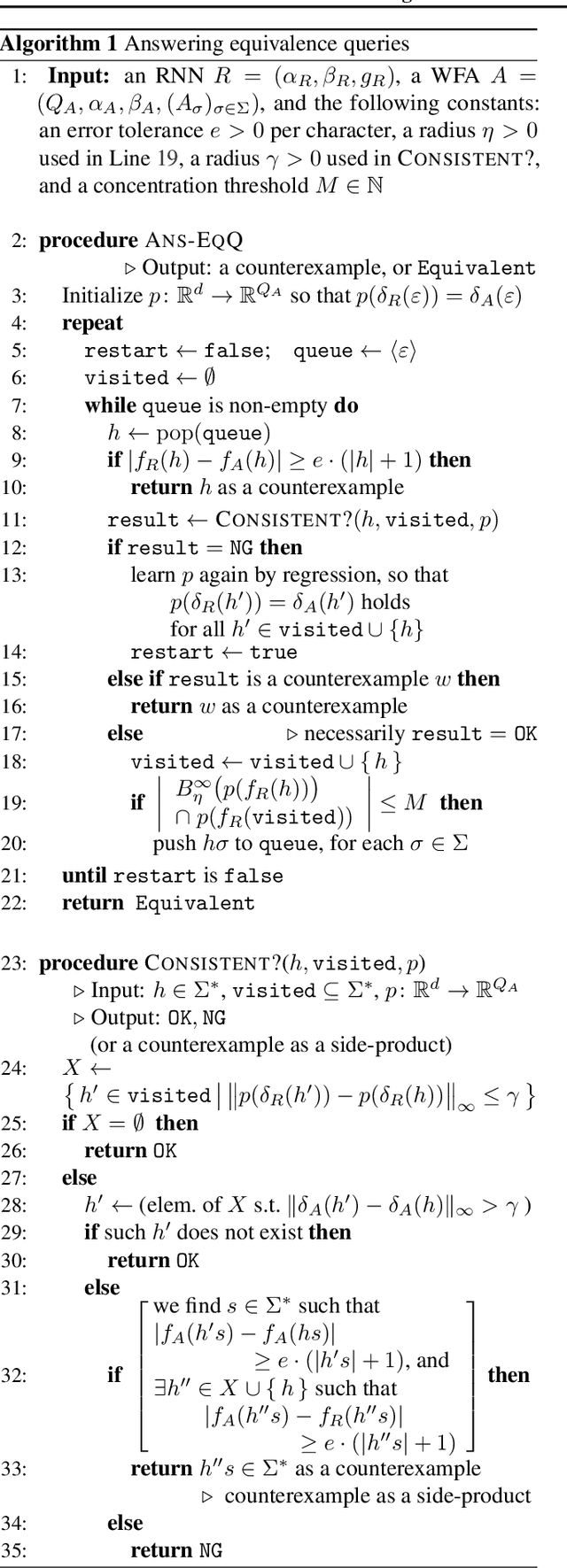

Weighted Automata Extraction from Recurrent Neural Networks via Regression on State Spaces

Apr 08, 2019

Abstract:We present a method to extract a weighted finite automaton (WFA) from a recurrent neural network (RNN). Our algorithm is based on the WFA learning algorithm by Balle and Mohri, which is in turn an extension of Angluin's classic \lstar algorithm. Our technical novelty is in the use of \emph{regression} methods for the so-called equivalence queries, thus exploiting the internal state space of an RNN. This way we achieve a quantitative extension of the recent work by Weiss, Goldberg and Yahav that extracts DFAs. Experiments demonstrate that our algorithm's practicality.

Automated proof synthesis for propositional logic with deep neural networks

May 30, 2018

Abstract:This work explores the application of deep learning, a machine learning technique that uses deep neural networks (DNN) in its core, to an automated theorem proving (ATP) problem. To this end, we construct a statistical model which quantifies the likelihood that a proof is indeed a correct one of a given proposition. Based on this model, we give a proof-synthesis procedure that searches for a proof in the order of the likelihood. This procedure uses an estimator of the likelihood of an inference rule being applied at each step of a proof. As an implementation of the estimator, we propose a proposition-to-proof architecture, which is a DNN tailored to the automated proof synthesis problem. To empirically demonstrate its usefulness, we apply our model to synthesize proofs of propositional logic. We train the proposition-to-proof model using a training dataset of proposition-proof pairs. The evaluation against a benchmark set shows the very high accuracy and an improvement to the recent work of neural proof synthesis.

Profile-guided memory optimization for deep neural networks

Apr 26, 2018

Abstract:Recent years have seen deep neural networks (DNNs) becoming wider and deeper to achieve better performance in many applications of AI. Such DNNs however require huge amounts of memory to store weights and intermediate results (e.g., activations, feature maps, etc.) in propagation. This requirement makes it difficult to run the DNNs on devices with limited, hard-to-extend memory, degrades the running time performance, and restricts the design of network models. We address this challenge by developing a novel profile-guided memory optimization to efficiently and quickly allocate memory blocks during the propagation in DNNs. The optimization utilizes a simple and fast heuristic algorithm based on the two-dimensional rectangle packing problem. Experimenting with well-known neural network models, we confirm that our method not only reduces the memory consumption by up to $49.5\%$ but also accelerates training and inference by up to a factor of four thanks to the rapidity of the memory allocation and the ability to use larger mini-batch sizes.

Lung Nodule Classification by the Combination of Fusion Classifier and Cascaded Convolutional Neural Networks

Dec 18, 2017

Abstract:Lung nodule classification is a class imbalanced problem, as nodules are found with much lower frequency than non-nodules. In the class imbalanced problem, conventional classifiers tend to be overwhelmed by the majority class and ignore the minority class. We showed that cascaded convolutional neural networks can classify the nodule candidates precisely for a class imbalanced nodule candidate data set in our previous study. In this paper, we propose Fusion classifier in conjunction with the cascaded convolutional neural network models. To fuse the models, nodule probabilities are calculated by using the convolutional neural network models at first. Then, Fusion classifier is trained and tested by the nodule probabilities. The proposed method achieved the sensitivity of 94.4% and 95.9% at 4 and 8 false positives per scan in Free Receiver Operating Characteristics (FROC) curve analysis, respectively.

Towards Proof Synthesis Guided by Neural Machine Translation for Intuitionistic Propositional Logic

Jun 20, 2017

Abstract:Inspired by the recent evolution of deep neural networks (DNNs) in machine learning, we explore their application to PL-related topics. This paper is the first step towards this goal; we propose a proof-synthesis method for the negation-free propositional logic in which we use a DNN to obtain a guide of proof search. The idea is to view the proof-synthesis problem as a translation from a proposition to its proof. We train seq2seq, which is a popular network in neural machine translation, so that it generates a proof encoded as a $\lambda$-term of a given proposition. We implement the whole framework and empirically observe that a generated proof term is close to a correct proof in terms of the tree edit distance of AST. This observation justifies using the output from a trained seq2seq model as a guide for proof search.

Multi-stage Neural Networks with Single-sided Classifiers for False Positive Reduction and its Evaluation using Lung X-ray CT Images

Mar 21, 2017

Abstract:Lung nodule classification is a class imbalanced problem because nodules are found with much lower frequency than non-nodules. In the class imbalanced problem, conventional classifiers tend to be overwhelmed by the majority class and ignore the minority class. We therefore propose cascaded convolutional neural networks to cope with the class imbalanced problem. In the proposed approach, multi-stage convolutional neural networks that perform as single-sided classifiers filter out obvious non-nodules. Successively, a convolutional neural network trained with a balanced data set calculates nodule probabilities. The proposed method achieved the sensitivity of 92.4\% and 94.5% at 4 and 8 false positives per scan in Free Receiver Operating Characteristics (FROC) curve analysis, respectively.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge