Rudy Raymond

Decentralized Collaborative Learning Framework with External Privacy Leakage Analysis

Apr 01, 2024

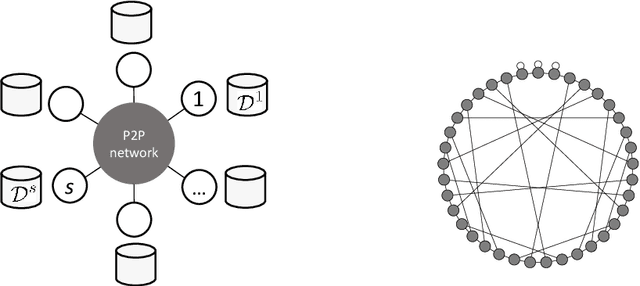

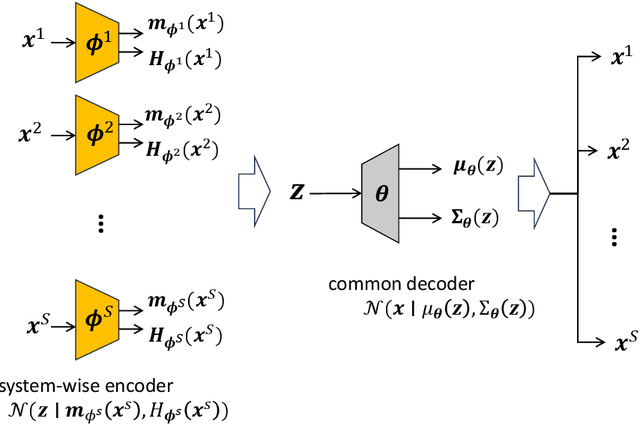

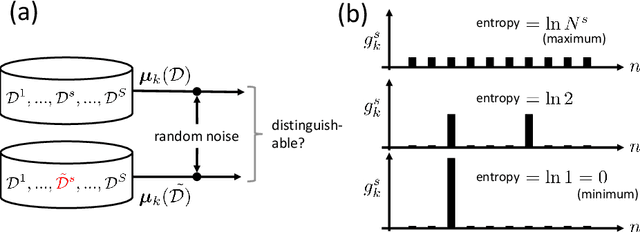

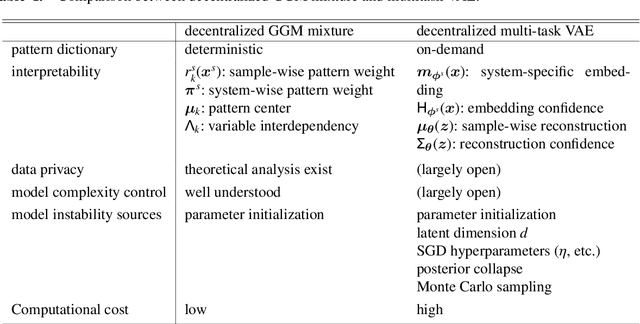

Abstract: This paper presents two methodological advancements in decentralized multi-task learning under privacy constraints, aiming to pave the way for future developments in next-generation Blockchain platforms. First, we expand the existing framework for collaborative dictionary learning (CollabDict), which has previously been limited to Gaussian mixture models, by incorporating deep variational autoencoders (VAEs) into the framework, with a particular focus on anomaly detection. We demonstrate that the VAE-based anomaly score function shares the same mathematical structure as the non-deep model, and provide a comprehensive qualitative comparison. Second, considering the widespread use of "pre-trained models," we provide a mathematical analysis of data privacy leakage when models trained with CollabDict are shared externally. We show that the CollabDict approach, when applied to Gaussian mixtures, adheres to a Rényi differential privacy criterion. Additionally, we propose a practical metric for monitoring internal privacy breaches during the learning process.

Quantum Machine Learning on Near-Term Quantum Devices: Current State of Supervised and Unsupervised Techniques for Real-World Applications

Jul 03, 2023

Abstract: The past decade has seen considerable progress in quantum hardware in terms of speed, number of qubits, and quantum volume, which is defined as the maximum size of a quantum circuit that can be effectively implemented on a near-term quantum device. Consequently, there has also been a rise in the number of works applying Quantum Machine Learning (QML) on real hardware to attain quantum advantage over classical counterparts. In this survey, our primary focus is on selected supervised and unsupervised learning applications implemented on quantum hardware, specifically targeting real-world scenarios. Our survey explores and highlights the current limitations of QML implementations on quantum hardware. We delve into various techniques to overcome these limitations, such as encoding techniques, ansatz structure, error mitigation, and gradient methods. Additionally, we assess the performance of these QML implementations in comparison to their classical counterparts. Finally, we conclude our survey with a discussion of the existing bottlenecks associated with applying QML on real quantum devices and propose potential solutions for overcoming these challenges in the future.

Decentralized Collaborative Learning with Probabilistic Data Protection

Aug 24, 2022

Abstract: We discuss future directions of Blockchain as a collaborative value co-creation platform, in which network participants can gain extra insights that cannot be accessed when disconnected from the others. To this end, we propose a decentralized machine learning framework that is carefully designed to respect the values of democracy, diversity, and privacy. Specifically, we propose a federated multi-task learning framework that integrates a privacy-preserving dynamic consensus algorithm. We show that a specific network topology called the expander graph dramatically improves the scalability of global consensus building. We conclude the paper with some remarks on open problems.

Trainable Discrete Feature Embeddings for Variational Quantum Classifier

Jun 17, 2021

Abstract: Quantum classifiers provide sophisticated embeddings of input data in Hilbert space, promising quantum advantage. The advantage stems from quantum feature maps encoding the inputs into quantum states with variational quantum circuits. A recent work shows how to map discrete features with fewer quantum bits using Quantum Random Access Coding (QRAC), an important primitive to encode binary strings into quantum states. We propose a new method to embed discrete features with trainable quantum circuits by combining QRAC and a recently proposed strategy for training quantum feature maps called quantum metric learning. We show that the proposed trainable embedding not only requires as few qubits as QRAC but also overcomes the limitations of QRAC in classifying inputs whose classes are based on hard Boolean functions. We numerically demonstrate its use in variational quantum classifiers to achieve better performance in classifying real-world datasets, and thus its potential to leverage near-term quantum computers for quantum machine learning.
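As a rough illustration of the QRAC primitive mentioned in the abstract, the sketch below works through the textbook (2,1)-QRAC, which packs two bits into one qubit. It is not the paper's trainable embedding. The function names `qrac21_bloch` and `decode_probability` are hypothetical, and the state is represented only by its Bloch-vector components in the X-Z plane rather than through any quantum SDK.

```python
import math


def qrac21_bloch(x1: int, x2: int):
    """Bloch-vector components (rx, rz) of the qubit state encoding bits (x1, x2).

    Textbook (2,1)-QRAC: the four code states sit on the X-Z plane of the
    Bloch sphere, with bit x1 stored in the Z component and x2 in the X component.
    """
    s = 1 / math.sqrt(2)
    return ((-1) ** x2 * s, (-1) ** x1 * s)


def decode_probability(x1: int, x2: int, which: int) -> float:
    """Probability of recovering bit `which` (1 or 2) with a single measurement.

    Bit 1 is read out by measuring along Z, bit 2 by measuring along X.
    For a pure qubit state, P(outcome +1 along an axis) = (1 + r_axis) / 2,
    and the +1 outcome is interpreted as bit value 0.
    """
    rx, rz = qrac21_bloch(x1, x2)
    component = rz if which == 1 else rx
    bit = x1 if which == 1 else x2
    p_zero = (1 + component) / 2
    return p_zero if bit == 0 else 1 - p_zero


if __name__ == "__main__":
    for x1 in (0, 1):
        for x2 in (0, 1):
            p1 = decode_probability(x1, x2, 1)
            p2 = decode_probability(x1, x2, 2)
            print(f"bits=({x1},{x2})  P(recover x1)={p1:.4f}  P(recover x2)={p2:.4f}")
```

Every bit is recovered with the same probability, 1/2 + 1/(2√2), which is why the encoding is described as random access: either bit can be read, but only one per copy of the state and only probabilistically.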

Profile-guided memory optimization for deep neural networks

Apr 26, 2018

Abstract: Recent years have seen deep neural networks (DNNs) becoming wider and deeper to achieve better performance in many applications of AI. Such DNNs, however, require huge amounts of memory to store weights and intermediate results (e.g., activations and feature maps) during propagation. This requirement makes it difficult to run the DNNs on devices with limited, hard-to-extend memory, degrades the running time performance, and restricts the design of network models. We address this challenge by developing a novel profile-guided memory optimization to efficiently and quickly allocate memory blocks during the propagation in DNNs. The optimization utilizes a simple and fast heuristic algorithm based on the two-dimensional rectangle packing problem. Experimenting with well-known neural network models, we confirm that our method not only reduces the memory consumption by up to $49.5\%$ but also accelerates training and inference by up to a factor of four thanks to the rapidity of the memory allocation and the ability to use larger mini-batch sizes.
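To make the rectangle-packing view concrete, the following is a minimal sketch under stated assumptions, not the heuristic from the paper. Each profiled memory block is treated as a rectangle whose width is its lifetime and whose height is its size, and a generic largest-first, lowest-offset greedy rule assigns offsets inside one shared buffer. The `Block` record, the `plan_offsets` function, and the sample profile are all hypothetical.

```python
from typing import NamedTuple


class Block(NamedTuple):
    name: str
    size: int   # bytes requested
    start: int  # step at which the block is allocated (inclusive)
    end: int    # step at which the block is freed (exclusive)


def lifetimes_overlap(a: Block, b: Block) -> bool:
    """True if the two blocks are alive at the same time."""
    return a.start < b.end and b.start < a.end


def plan_offsets(blocks):
    """Assign each block an offset in one shared buffer.

    Greedy rule (an assumption for illustration): take blocks from largest to
    smallest and give each the lowest offset that does not collide with an
    already placed block whose lifetime overlaps.
    Returns ({name: offset}, peak_bytes).
    """
    placed = []   # list of (offset, block)
    offsets = {}
    for blk in sorted(blocks, key=lambda b: b.size, reverse=True):
        # Address ranges occupied at some point during this block's lifetime.
        busy = sorted(
            (off, off + other.size)
            for off, other in placed
            if lifetimes_overlap(blk, other)
        )
        offset = 0
        for lo, hi in busy:
            if offset + blk.size <= lo:
                break  # the gap below this range is large enough
            offset = max(offset, hi)
        placed.append((offset, blk))
        offsets[blk.name] = offset
    peak = max((off + b.size for off, b in placed), default=0)
    return offsets, peak


if __name__ == "__main__":
    # Hypothetical profile collected from one propagation pass.
    profile = [
        Block("a", 4, 0, 2),
        Block("b", 2, 1, 3),
        Block("c", 4, 2, 4),
        Block("d", 2, 3, 5),
    ]
    offsets, peak = plan_offsets(profile)
    print(offsets, "peak bytes:", peak, "naive bytes:", sum(b.size for b in profile))
```

Because the plan is computed once from the profile, later iterations can reuse the precomputed offsets instead of calling a general-purpose allocator, which is the kind of saving the abstract attributes to fast allocation.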

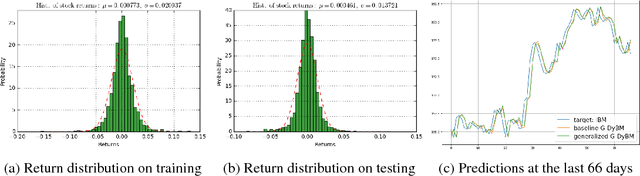

Dynamic Boltzmann Machines for Second Order Moments and Generalized Gaussian Distributions

Dec 17, 2017

Abstract: The Dynamic Boltzmann Machine (DyBM) has been shown to be highly efficient at predicting time-series data. Gaussian DyBM is a DyBM that assumes the predicted data is generated by a Gaussian distribution whose first-order moment (mean) changes dynamically over time while its second-order moment (variance) is fixed. However, in many financial applications, this assumption is quite limiting in two respects. First, even when the data follows a Gaussian distribution, its variance may change over time. Such variance is also related to important temporal economic indicators such as market volatility. Second, financial time-series data often requires learning from datasets generated by the generalized Gaussian distribution, which has an additional shape parameter that is important for approximating heavy-tailed distributions. Addressing these aspects, we show how to extend DyBM in a way that results in significant performance improvement in predicting financial time-series data.
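For readers unfamiliar with the generalized Gaussian distribution, the sketch below evaluates its log-density in the standard textbook parameterization with location `mu`, scale `alpha`, and shape `beta`. This parameterization and the function name `gen_gaussian_logpdf` are assumptions for illustration; the paper may use a different form, and nothing here reproduces the DyBM learning rule.

```python
import math


def gen_gaussian_logpdf(x: float, mu: float, alpha: float, beta: float) -> float:
    """Log-density of the generalized Gaussian distribution.

    p(x) = beta / (2 * alpha * Gamma(1 / beta)) * exp(-(|x - mu| / alpha) ** beta)

    beta = 2 gives a Gaussian with variance alpha**2 / 2, beta = 1 gives a
    Laplace distribution, and beta < 2 gives tails heavier than Gaussian.
    """
    if alpha <= 0 or beta <= 0:
        raise ValueError("alpha and beta must be positive")
    log_norm = math.log(beta) - math.log(2 * alpha) - math.lgamma(1 / beta)
    return log_norm - (abs(x - mu) / alpha) ** beta


if __name__ == "__main__":
    # Compare tail log-densities four scale units from the mean.
    for beta in (2.0, 1.0, 0.5):
        print(f"beta={beta}: log p(4) = {gen_gaussian_logpdf(4.0, 0.0, 1.0, beta):.3f}")
```

Lowering `beta` raises the log-density far from `mu`, which is the heavy-tail behavior the abstract refers to.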

A Deep-Learning Approach for Operation of an Automated Realtime Flare Forecast

Jun 06, 2016

Abstract: Automated forecasts serve an important role in space weather science, by providing statistical insights into flare-trigger mechanisms, and by enabling tailor-made forecasts and high-frequency forecasts. Only through real-time forecasting can we experimentally measure the performance of flare-forecasting methods while confidently avoiding overlearning. Since August 2015, we have been operating an unmanned flare forecast service that provides a 24-hour-ahead forecast of solar flares every 12 minutes. We report the method and prediction results of the system.
