Tanja Schneeberger

Recognizing Emotion Regulation Strategies from Human Behavior with Large Language Models

Aug 08, 2024

Abstract: Human emotions are often not expressed directly, but regulated according to internal processes and social display rules. For affective computing systems, an understanding of how users regulate their emotions can be highly useful, for example to provide feedback in job interview training or in psychotherapeutic scenarios. However, at present no method exists to automatically classify different emotion regulation strategies in a cross-user scenario. At the same time, recent studies showed that instruction-tuned Large Language Models (LLMs) can reach impressive performance across a variety of affect recognition tasks such as categorical emotion recognition or sentiment analysis. While these results are promising, it remains unclear to what extent the representational power of LLMs can be utilized in the more subtle task of classifying users' internal emotion regulation strategy. To close this gap, we make use of the recently introduced Deep corpus for modeling the social display of the emotion shame, where each point in time is annotated with one of seven different emotion regulation classes. We fine-tune Llama2-7B as well as the recently introduced Gemma model using Low-rank Optimization on prompts generated from different sources of information on the Deep corpus. These include verbal and nonverbal behavior, person factors, as well as the results of an in-depth interview after the interaction. Our results show that a fine-tuned Llama2-7B LLM is able to classify the utilized emotion regulation strategy with high accuracy (0.84) without needing access to data from post-interaction interviews. This represents a significant improvement over previous approaches based on Bayesian Networks and highlights the importance of modeling verbal behavior in emotion regulation.

"GAN I hire you?" -- A System for Personalized Virtual Job Interview Training

Jun 08, 2022

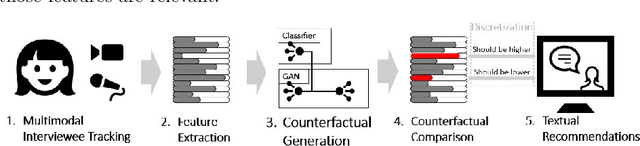

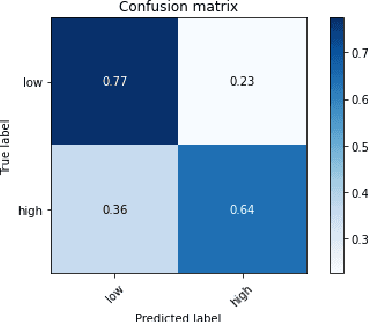

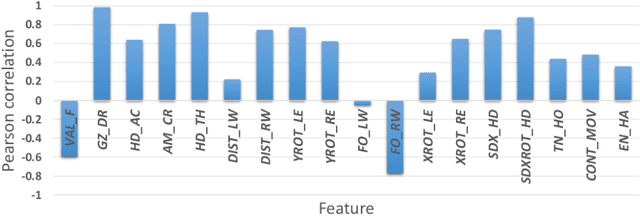

Abstract: Job interviews are usually high-stakes social situations where professional and behavioral skills are required for a satisfactory outcome. Professional job interview trainers give educative feedback about the shown behavior according to common standards. This feedback can be helpful concerning the improvement of behavioral skills needed for job interviews. A technological approach for generating such feedback might be a playful and low-key starting point for job interview training. Therefore, we extended an interactive virtual job interview training system with a Generative Adversarial Network (GAN)-based approach that first detects behavioral weaknesses and subsequently generates personalized feedback. To evaluate the usefulness of the generated feedback, we conducted a mixed-methods pilot study using mock-ups from the job interview training system. The overall study results indicate that the GAN-based generated behavioral feedback is helpful. Moreover, participants assessed that the feedback would improve their job interview performance.

Designing a Mobile Social and Vocational Reintegration Assistant for Burn-out Outpatient Treatment

Dec 15, 2020

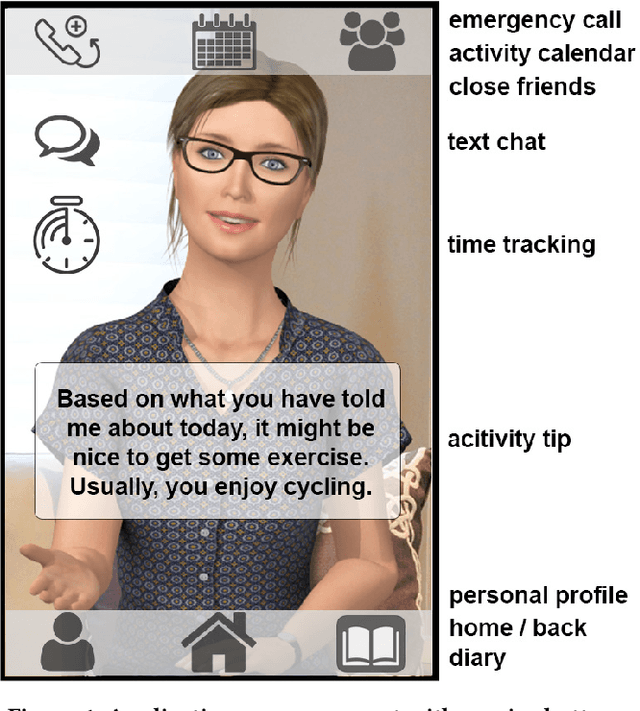

Abstract: Using Social Agents as health-care assistants or trainers is one focus area of IVA research. While their use as physical health-care agents is well established, their employment in the field of psychotherapeutic care comes with daunting challenges. This paper presents our mobile Social Agent EmmA in the role of a vocational reintegration assistant for burn-out outpatient treatment. We follow a typical participatory design approach including experts and patients in order to address requirements from both sides. Since the success of such treatments is related to a patient's emotion regulation capabilities, we employ a real-time social signal interpretation together with a computational simulation of emotion regulation that influences the agent's social behavior as well as the situational selection of verbal treatment strategies. Overall, our interdisciplinary approach enables a novel integrative concept for Social Agents as assistants for burn-out patients.
