Takeshi Oishi

Stereo-LiDAR Fusion by Semi-Global Matching With Discrete Disparity-Matching Cost and Semidensification

Apr 07, 2025

Abstract:We present a real-time, non-learning depth estimation method that fuses Light Detection and Ranging (LiDAR) data with stereo camera input. Our approach comprises three key techniques: Semi-Global Matching (SGM) stereo with Discrete Disparity-matching Cost (DDC), semidensification of LiDAR disparity, and a consistency check that combines stereo images and LiDAR data. Each of these components is designed for parallelization on a GPU to realize real-time performance. Evaluated on the KITTI dataset, the proposed method achieved an error rate of 2.79%, outperforming the previous state-of-the-art real-time stereo-LiDAR fusion method, which had an error rate of 3.05%. Furthermore, we tested the proposed method in various scenarios, including different LiDAR point densities, varying weather conditions, and indoor environments, to demonstrate its high adaptability. We believe that the real-time and non-learning nature of our method makes it highly practical for applications in robotics and automation.
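For readers unfamiliar with SGM, the following is a minimal sketch of the textbook cost aggregation along a single left-to-right path on one scanline. It is not the paper's DDC or its GPU implementation; the function name `aggregate_left_to_right` and the penalty values `p1` and `p2` are assumptions chosen for illustration.

```python
import numpy as np

def aggregate_left_to_right(cost, p1=1.0, p2=8.0):
    """Textbook SGM aggregation along one path (hypothetical helper).

    cost: array of shape (width, ndisp) holding the matching cost of each
    pixel of one scanline for each disparity candidate.
    p1 penalizes a disparity change of 1, p2 penalizes larger jumps.
    """
    cost = np.asarray(cost, dtype=np.float64)
    agg = np.empty_like(cost)
    agg[0] = cost[0]  # the first pixel has no predecessor on this path
    for x in range(1, cost.shape[0]):
        prev = agg[x - 1]
        prev_min = prev.min()
        # Costs of arriving from disparity d-1 and d+1 (inf outside the range).
        from_minus = np.concatenate(([np.inf], prev[:-1])) + p1
        from_plus = np.concatenate((prev[1:], [np.inf])) + p1
        best = np.minimum(np.minimum(prev, from_minus),
                          np.minimum(from_plus, prev_min + p2))
        # Subtracting prev_min keeps the values bounded along the path.
        agg[x] = cost[x] + best - prev_min
    return agg
```

A full SGM pipeline would sum such aggregated costs over several path directions and then pick the disparity with the lowest total cost at each pixel.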

* 8 pages, 8 figures, 7 tables

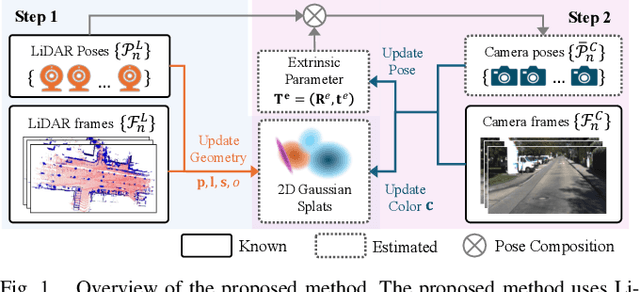

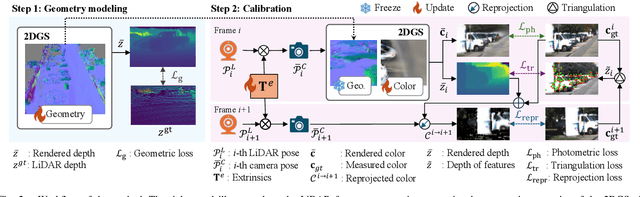

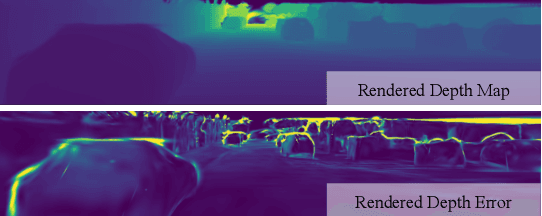

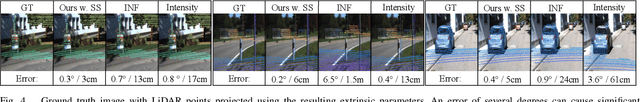

Robust LiDAR-Camera Calibration with 2D Gaussian Splatting

Apr 01, 2025

Abstract:LiDAR-camera systems have become increasingly popular in robotics recently. A critical and initial step in integrating the LiDAR and camera data is the calibration of the LiDAR-camera system. Most existing calibration methods rely on auxiliary target objects, which often involve complex manual operations, whereas targetless methods have yet to achieve practical effectiveness. Recognizing that 2D Gaussian Splatting (2DGS) can reconstruct geometric information from camera image sequences, we propose a calibration method that estimates LiDAR-camera extrinsic parameters using geometric constraints. The proposed method begins by reconstructing colorless 2DGS using LiDAR point clouds. Subsequently, we update the colors of the Gaussian splats by minimizing the photometric loss. The extrinsic parameters are optimized during this process. Additionally, we address the limitations of the photometric loss by incorporating the reprojection and triangulation losses, thereby enhancing the calibration robustness and accuracy.
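To make the role of the extrinsic parameters concrete, here is a minimal sketch of how LiDAR points map into an image under a standard pinhole model. It is not the paper's 2DGS pipeline or its loss functions; the function name `project_lidar_points` and the argument layout are assumptions for illustration.

```python
import numpy as np

def project_lidar_points(points_lidar, rotation, translation, intrinsics):
    """Project LiDAR points into the image plane (hypothetical helper).

    points_lidar: (N, 3) points in the LiDAR frame.
    rotation (3x3) and translation (3,): extrinsics mapping LiDAR to camera.
    intrinsics: 3x3 pinhole camera matrix.
    Returns the pixel coordinates of the points in front of the camera and
    a boolean mask telling which input points those are.
    """
    pts = np.asarray(points_lidar, dtype=np.float64)
    cam = pts @ np.asarray(rotation).T + np.asarray(translation)
    in_front = cam[:, 2] > 0  # points behind the camera cannot be imaged
    uvw = cam[in_front] @ np.asarray(intrinsics).T
    pixels = uvw[:, :2] / uvw[:, 2:3]  # perspective division
    return pixels, in_front
```

A calibration method adjusts `rotation` and `translation` until the projected geometry agrees with what the camera observes.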

* Accepted in IEEE Robotics and Automation Letters. Code available at: https://github.com/ShuyiZhou495/RobustCalibration

NeAS: 3D Reconstruction from X-ray Images using Neural Attenuation Surface

Mar 10, 2025

Abstract:Reconstructing three-dimensional (3D) structures from two-dimensional (2D) X-ray images is a valuable and efficient technique in medical applications that requires less radiation exposure than computed tomography scans. Recent approaches that use implicit neural representations have enabled the synthesis of novel views from sparse X-ray images. However, although these approaches have improved the accuracy of image synthesis, the accuracy of surface shape estimation remains insufficient. Therefore, we propose a novel approach for reconstructing 3D scenes using a Neural Attenuation Surface (NeAS) that simultaneously captures the surface geometry and attenuation coefficient fields. NeAS incorporates a signed distance function (SDF), which defines the attenuation field and aids in extracting the 3D surface within the scene. We conducted experiments using simulated and authentic X-ray images, and the results demonstrated that NeAS could accurately extract 3D surfaces within a scene using only 2D X-ray images.
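As background on why an attenuation coefficient field matters, X-ray image formation is commonly modeled with the Beer-Lambert law: the intensity reaching the detector decays exponentially with the attenuation integrated along the ray. The sketch below discretizes that integral. It does not reproduce how NeAS derives attenuation from the SDF, which the abstract does not specify; the function name and the uniform sampling are assumptions.

```python
import numpy as np

def transmitted_intensity(attenuation_samples, step_length, source_intensity=1.0):
    """Beer-Lambert transmission along one ray (hypothetical helper).

    attenuation_samples: attenuation coefficients sampled at equal
    spacing along the ray; step_length: spacing between the samples.
    """
    # Riemann-sum approximation of the line integral of the attenuation.
    optical_depth = np.sum(attenuation_samples) * step_length
    return source_intensity * np.exp(-optical_depth)
```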

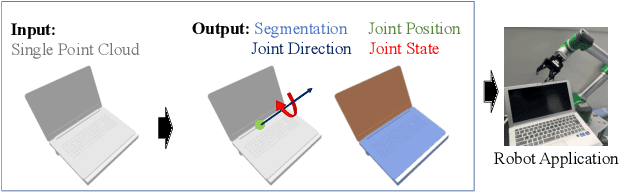

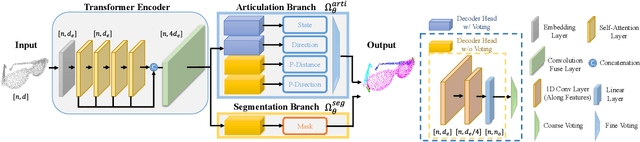

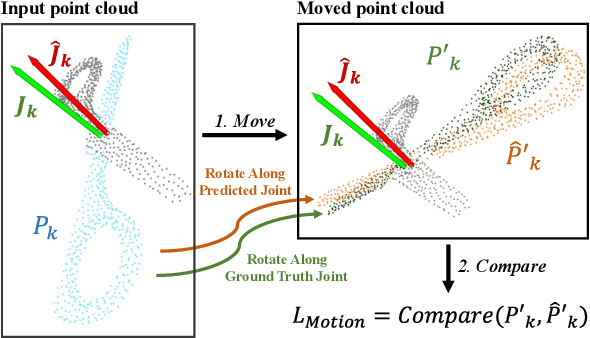

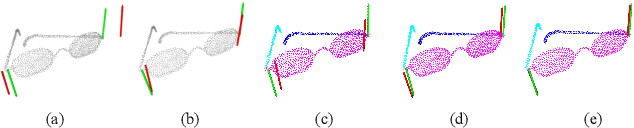

CAPT: Category-level Articulation Estimation from a Single Point Cloud Using Transformer

Feb 27, 2024

Abstract:The ability to estimate joint parameters is essential for various applications in robotics and computer vision. In this paper, we propose CAPT: category-level articulation estimation from a point cloud using Transformer. CAPT uses an end-to-end transformer-based architecture for joint parameter and state estimation of articulated objects from a single point cloud. CAPT estimates joint parameters and states for various articulated objects with high precision and robustness. The paper also introduces a motion loss approach, which improves articulation estimation performance by emphasizing the dynamic features of articulated objects. Additionally, the paper presents a double voting strategy to provide the framework with coarse-to-fine parameter estimation. Experimental results on several category datasets demonstrate that our methods outperform existing alternatives for articulation estimation. Our research provides a promising solution for applying Transformer-based architectures in articulated object analysis.

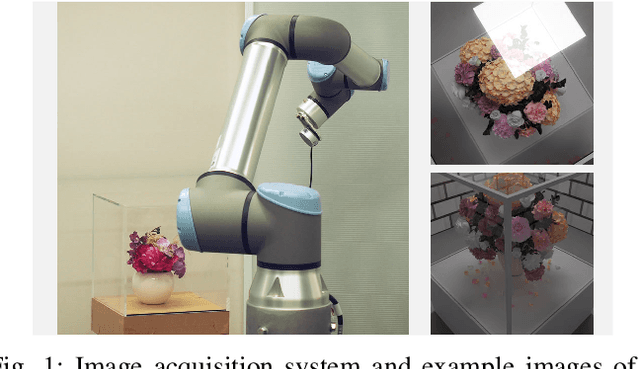

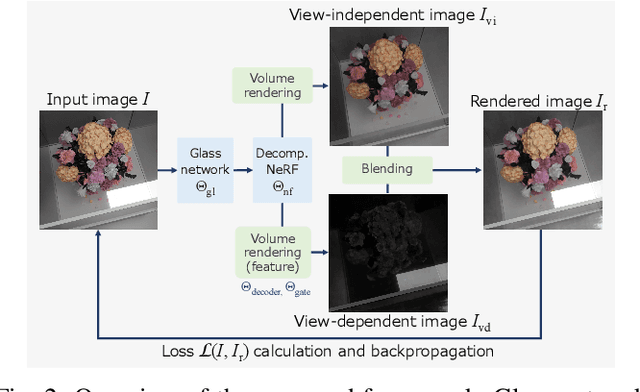

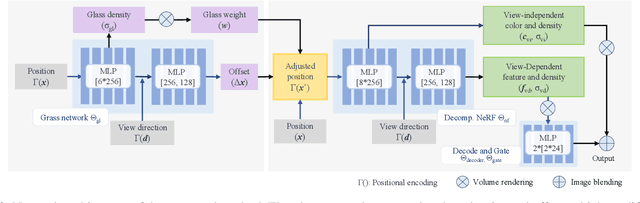

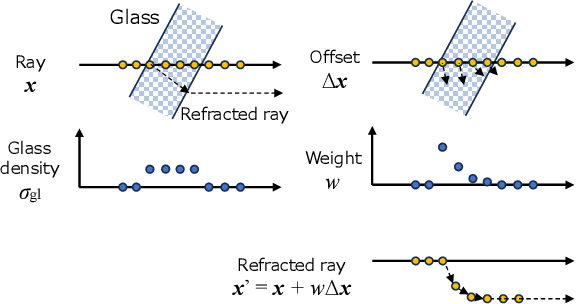

REF$^2$-NeRF: Reflection and Refraction aware Neural Radiance Field

Nov 30, 2023

Abstract:Recently, significant progress has been made in the study of methods for 3D reconstruction from multiple images using implicit neural representations, exemplified by the neural radiance field (NeRF) method. Such methods, which are based on volume rendering, can model various light phenomena, and various extended methods have been proposed to accommodate different scenes and situations. However, when handling scenes with multiple glass objects, e.g., objects in a glass showcase, modeling the target scene accurately has been challenging due to the presence of multiple reflection and refraction effects. Thus, this paper proposes a NeRF-based modeling method for scenes containing a glass case. In the proposed method, refraction and reflection are modeled using elements that are dependent and independent of the viewer's perspective. This approach allows us to estimate the surfaces where refraction occurs, i.e., glass surfaces, and enables the separation and modeling of both direct and reflected light components. Compared to existing methods, the proposed method enables more accurate modeling of both glass refraction and the overall scene.

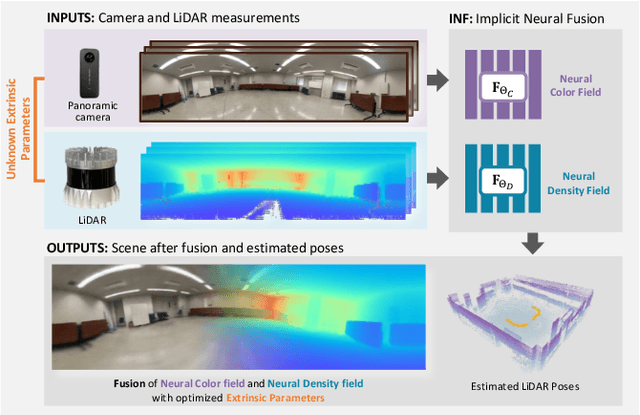

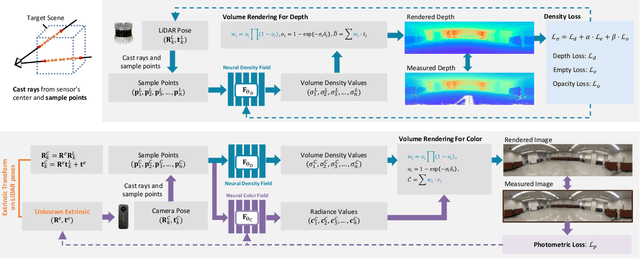

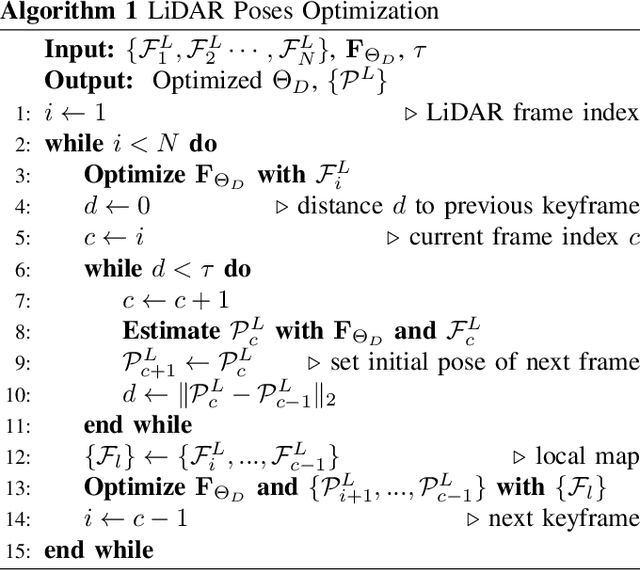

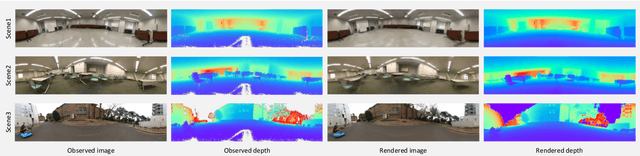

INF: Implicit Neural Fusion for LiDAR and Camera

Aug 28, 2023

Abstract:Sensor fusion has become a popular topic in robotics. However, conventional fusion methods encounter many difficulties, such as data representation differences, sensor variations, and extrinsic calibration. For example, the calibration methods used for LiDAR-camera fusion often require manual operation and auxiliary calibration targets. Implicit neural representations (INRs) have been developed for 3D scenes, and the volume density distribution involved in an INR unifies the scene information obtained by different types of sensors. Therefore, we propose implicit neural fusion (INF) for LiDAR and camera. INF first trains a neural density field of the target scene using LiDAR frames. Then, a separate neural color field is trained using camera images and the trained neural density field. Along with the training process, INF both estimates LiDAR poses and optimizes extrinsic parameters. Our experiments demonstrate the high accuracy and stable performance of the proposed method.

Non-learning Stereo-aided Depth Completion under Mis-projection via Selective Stereo Matching

Oct 04, 2022

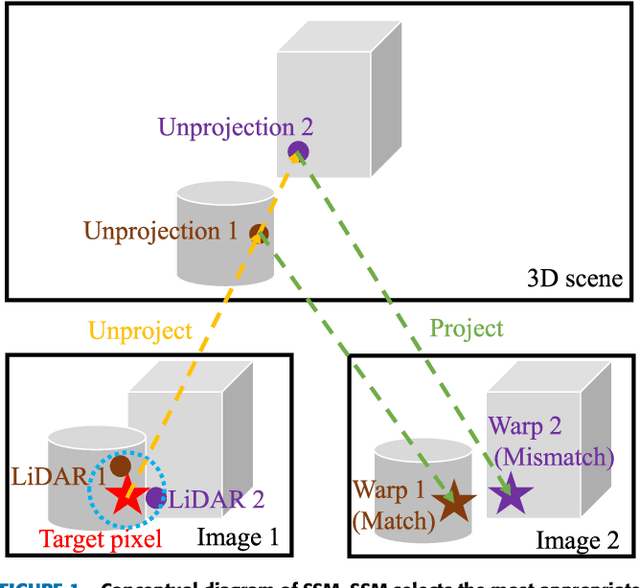

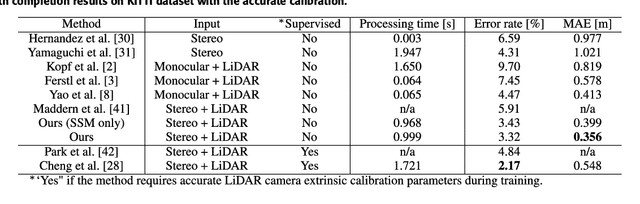

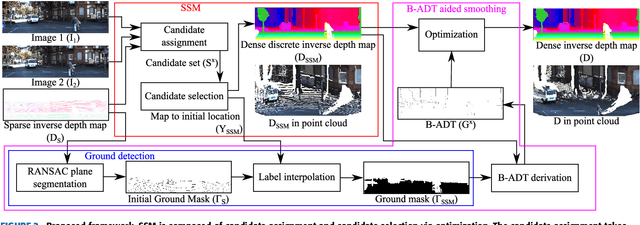

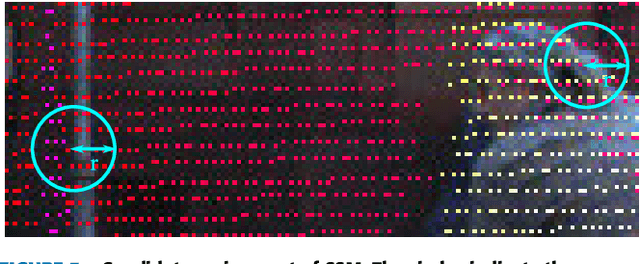

Abstract:We propose a non-learning depth completion method for a sparse depth map captured using a light detection and ranging (LiDAR) sensor guided by a pair of stereo images. Generally, conventional stereo-aided depth completion methods have two limitations. (i) They assume the given sparse depth map is accurately aligned to the input image, whereas the alignment is difficult to achieve in practice. (ii) They have limited accuracy in the long range because the depth is estimated by pixel disparity. To solve these limitations, we propose selective stereo matching (SSM) that searches for the most appropriate depth value for each image pixel among the LiDAR points projected in its neighborhood, based on an energy minimization framework. This depth selection approach can handle any type of mis-projection. Moreover, SSM has an advantage in terms of long-range depth accuracy because it directly uses the LiDAR measurement rather than the depth acquired from the stereo. SSM is a discrete process; thus, we apply variational smoothing with binary anisotropic diffusion tensor (B-ADT) to generate a continuous depth map while preserving depth discontinuity across object boundaries. Experimentally, the proposed method reduced the mean absolute error (MAE) of the depth estimation to 0.65 times that of the previous state-of-the-art stereo-aided depth completion and was approximately twice as accurate in the long range. Moreover, under various LiDAR-camera calibration errors, the proposed method reduced the depth estimation MAE to 0.34-0.93 times that of previous depth completion methods.
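Limitation (ii) follows from the standard rectified-stereo relation Z = f·B/d: a fixed disparity error translates into a depth error that grows with the square of the depth. The sketch below works through that arithmetic. The function names and the example focal length and baseline are assumptions for illustration, not values from the paper.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth of a point in a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px

def depth_error(depth_m, focal_px, baseline_m, disparity_error_px=1.0):
    """First-order depth error caused by a disparity error.

    Differentiating Z = f * B / d gives |dZ| = Z**2 / (f * B) * |dd|.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px

# Illustrative (assumed) camera: 700 px focal length, 0.5 m baseline.
for depth in (10.0, 35.0, 70.0):
    print(depth, depth_error(depth, focal_px=700.0, baseline_m=0.5))
```

Doubling the depth quadruples the error for the same disparity error, which is why a direct LiDAR range measurement is attractive at long range.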

* 15 pages, 13 figures

Learning 6DoF Grasping Using Reward-Consistent Demonstration

Mar 23, 2021

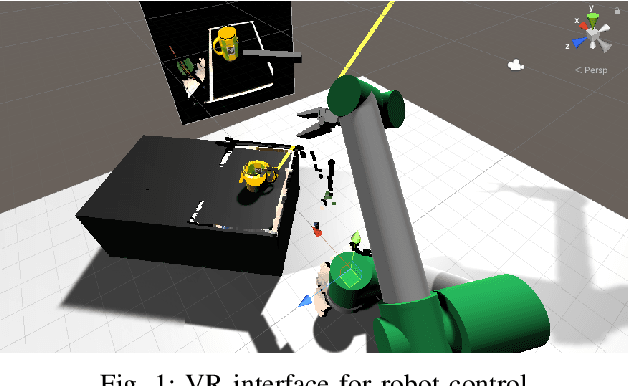

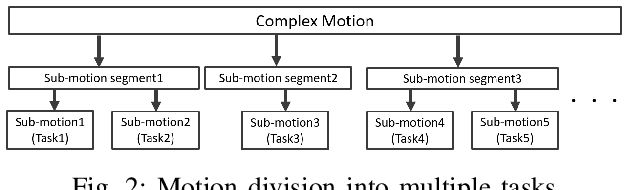

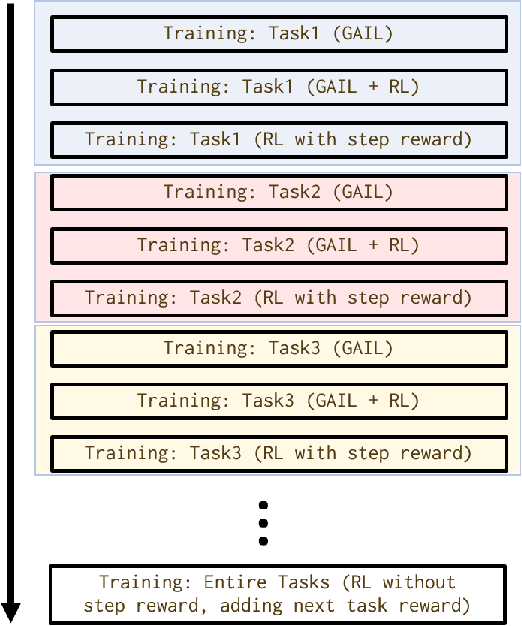

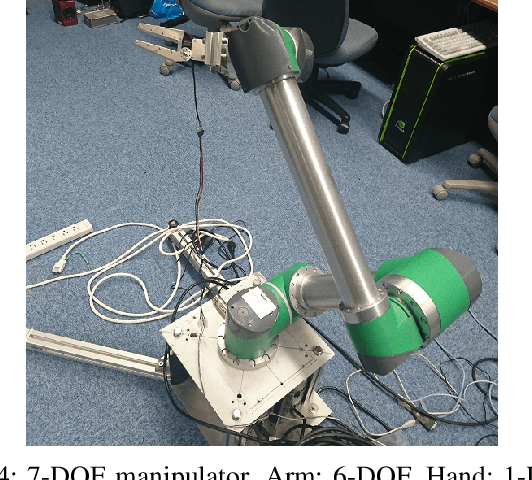

Abstract:As the number of a robot's degrees of freedom increases, the implementation of robot motion becomes more complex and difficult. In this study, we focus on learning 6DoF grasping motion and consider dividing the grasping motion into multiple tasks. We propose to combine imitation and reinforcement learning to facilitate more efficient learning of the desired motion. To collect demonstration data as teacher data for the imitation learning, we created a virtual reality (VR) interface that allows humans to operate the robot intuitively. Moreover, by dividing the motion into simpler tasks, we simplify the design of reward functions for reinforcement learning and show in our experiments a reduction in the steps required to learn the grasping motion.

Relative Drone-Ground Vehicle Localization using LiDAR and Fisheye Cameras through Direct and Indirect Observations

Nov 17, 2020

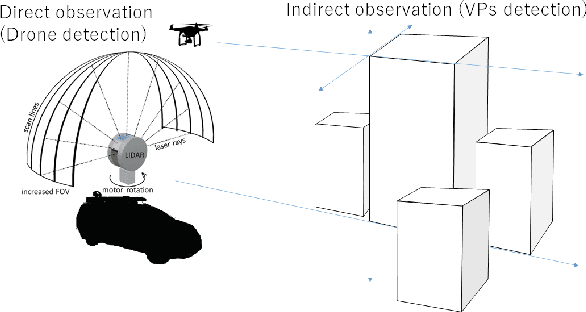

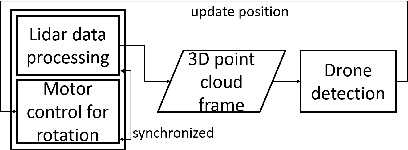

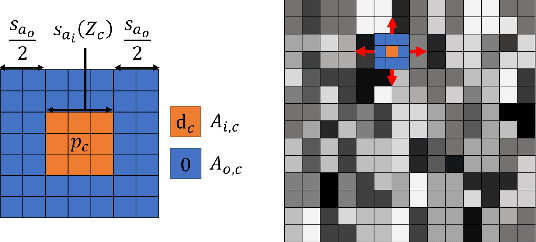

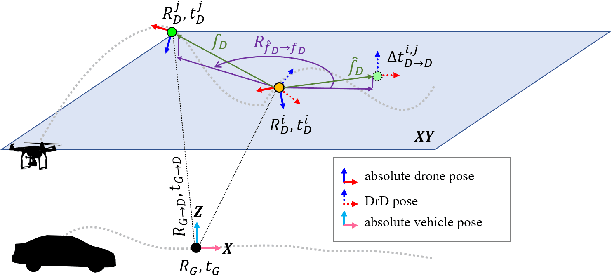

Abstract:Estimating the pose of an unmanned aerial vehicle (UAV) or drone is a challenging task. It is useful for many applications such as navigation, surveillance, tracking objects on the ground, and 3D reconstruction. In this work, we present a LiDAR-camera-based relative pose estimation method between a drone and a ground vehicle, using a LiDAR sensor and a fisheye camera on the vehicle's roof and another fisheye camera mounted under the drone. The LiDAR sensor directly observes the drone and measures its position, and the two cameras estimate the relative orientation using indirect observation of the surrounding objects. We propose a dynamically adaptive kernel-based method for drone detection and tracking using the LiDAR. We detect vanishing points in both cameras and find their correspondences to estimate the relative orientation. Additionally, we propose a rotation correction technique by relying on the observed motion of the drone through the LiDAR. In our experiments, we were able to achieve very fast initial detection and real-time tracking of the drone. Our method is fully automatic.
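Once vanishing points have been matched between the two cameras, each pair gives a corresponding 3D direction in both camera frames, and a relative rotation can be fitted to those directions. The abstract does not state which solver is used; the sketch below uses the standard SVD-based orthogonal Procrustes (Kabsch) solution as an assumed stand-in, and `rotation_from_directions` is a hypothetical name.

```python
import numpy as np

def rotation_from_directions(dirs_a, dirs_b):
    """Least-squares rotation R such that R @ a_i is close to b_i.

    dirs_a, dirs_b: (N, 3) arrays of corresponding unit directions,
    with at least two non-parallel pairs. The sign ambiguity of
    vanishing directions is assumed to be resolved beforehand.
    """
    a = np.asarray(dirs_a, dtype=np.float64)
    b = np.asarray(dirs_b, dtype=np.float64)
    h = a.T @ b  # 3x3 correlation matrix, sum of a_i b_i^T
    u, _, vt = np.linalg.svd(h)
    # Flip the last axis if needed so the result is a proper rotation.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    return vt.T @ np.diag([1.0, 1.0, d]) @ u.T
```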

Discontinuous and Smooth Depth Completion with Binary Anisotropic Diffusion Tensor

Jun 25, 2020

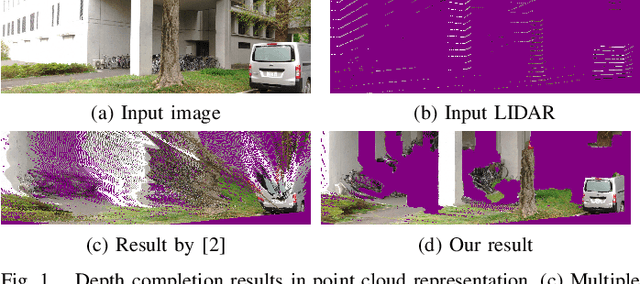

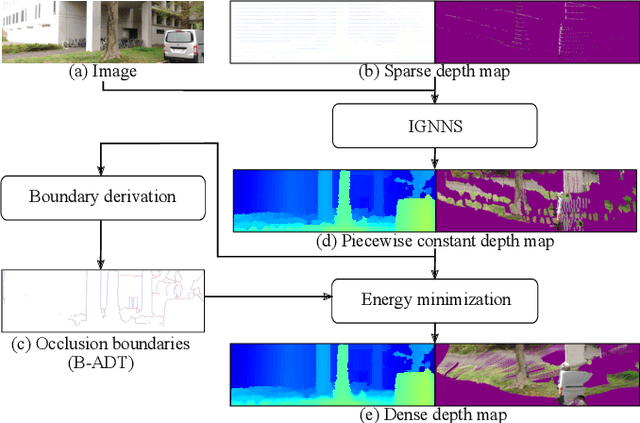

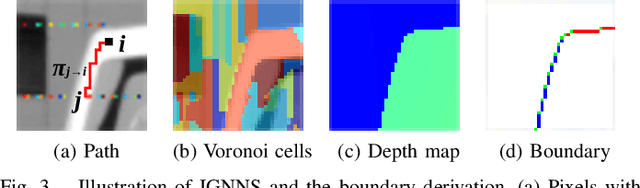

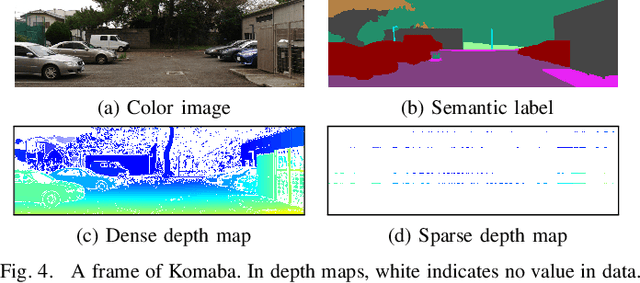

Abstract:We propose an unsupervised real-time dense depth completion from a sparse depth map guided by a single image. Our method generates a smooth depth map while preserving discontinuity between different objects. Our key idea is a Binary Anisotropic Diffusion Tensor (B-ADT) which can completely eliminate smoothness constraint at intended positions and directions by applying it to variational regularization. We also propose an Image-guided Nearest Neighbor Search (IGNNS) to derive a piecewise constant depth map which is used for B-ADT derivation and in the data term of the variational energy. Our experiments show that our method can outperform previous unsupervised and semi-supervised depth completion methods in terms of accuracy. Moreover, since our resulting depth map preserves the discontinuity between objects, the result can be converted to a visually plausible point cloud. This is remarkable since previous methods generate unnatural surface-like artifacts between discontinuous objects.
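The idea of switching off the smoothness constraint at chosen positions can be illustrated with a one-dimensional analogue. The sketch below minimizes a data term plus a smoothness term whose neighbor links carry binary weights, so a weight of 0 removes smoothing across that link entirely. It is not the paper's 2D tensor formulation or its solver; the energy, the Jacobi iteration, and all names are assumptions for illustration.

```python
import numpy as np

def smooth_with_binary_weights(data, link, lam=10.0, iterations=200):
    """Minimize sum (u_i - f_i)^2 + lam * sum w_i (u_{i+1} - u_i)^2.

    data: samples f_i; link: binary weights w_i, where w_i joins sample
    i and sample i + 1 (length len(data) - 1). Hypothetical 1-D sketch.
    """
    f = np.asarray(data, dtype=np.float64)
    w = np.asarray(link, dtype=np.float64)
    w_left = np.concatenate(([0.0], w))   # weight toward the left neighbor
    w_right = np.concatenate((w, [0.0]))  # weight toward the right neighbor
    u = f.copy()
    for _ in range(iterations):
        left = np.concatenate(([0.0], u[:-1]))
        right = np.concatenate((u[1:], [0.0]))
        # Jacobi update from setting the energy gradient to zero.
        u = (f + lam * (w_left * left + w_right * right)) / (
            1.0 + lam * (w_left + w_right))
    return u
```

With a step signal such as `[1, 1, 1, 5, 5, 5]`, cutting the link between the third and fourth samples keeps the step sharp, whereas leaving every link active blurs it.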
