Tajwar Abrar Aleef

Quasi-Real Time Multi-Frequency 3D Shear Wave Absolute Vibro-Elastography System for Prostate

May 09, 2022

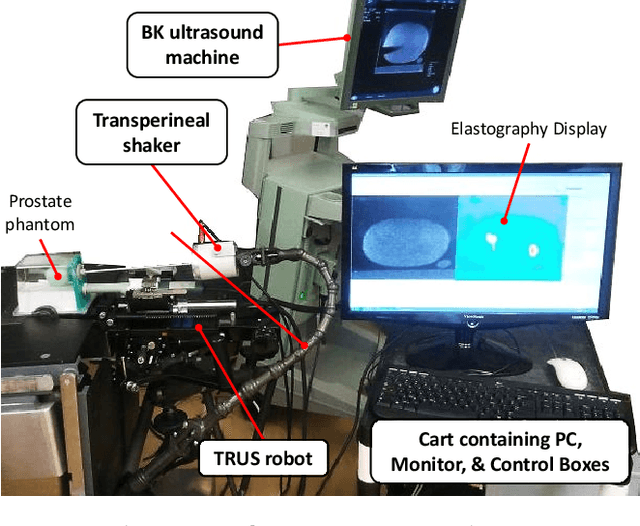

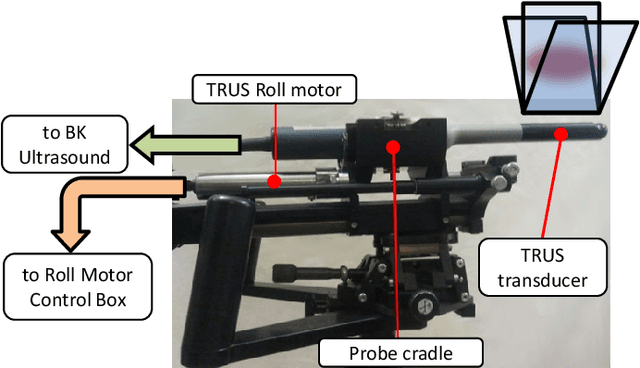

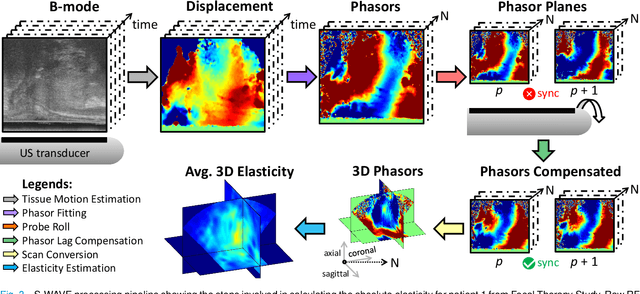

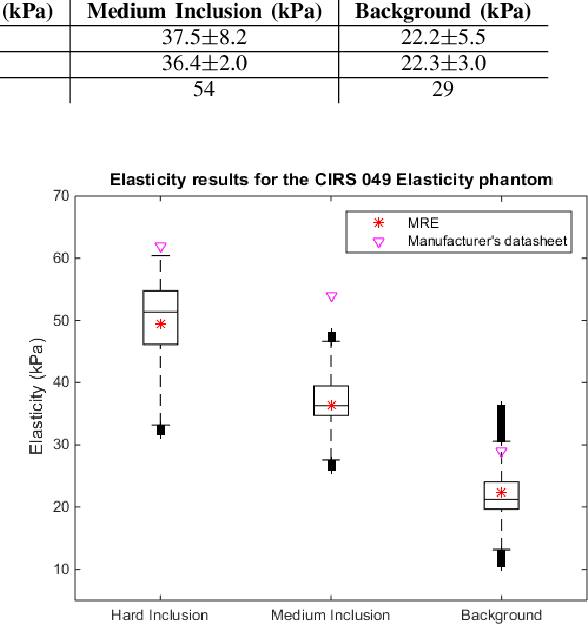

Abstract: This article describes a novel quasi-real-time system for quantitative, volumetric measurement of tissue elasticity in the prostate. Tissue elasticity is computed by using a local frequency estimator to measure the three-dimensional local wavelengths of a steady-state shear wave within the prostate gland. The shear wave is created using a mechanical voice coil shaker, which transmits multi-frequency vibrations transperineally. Radio frequency data is streamed directly from a BK Medical 8848 transrectal ultrasound transducer to an external computer, where tissue displacement due to the excitation is measured using a speckle tracking algorithm. Bandpass sampling eliminates the need for an ultrafast frame rate to track the tissue motion and allows accurate reconstruction at a sampling frequency below the Nyquist rate. A computer-controlled roll motor rotates the sagittal array of the transducer to obtain the 3D data. Two CIRS phantoms were used to validate both the accuracy of the elasticity measurement and the functional feasibility of using the system for in vivo prostate imaging. The system has been used in two separate clinical studies as a method for cancer identification. The results presented here show numerical and visual correlations between our stiffness measurements and cancer likelihood as determined from pathology results. Initial published results using this system include an area under the receiver operating characteristic curve of 0.82 ± 0.01 for prostate cancer identification in the peripheral zone.
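As a rough illustration of the two ideas in this abstract, the sketch below shows (a) the apparent frequency of a vibration sampled below the Nyquist rate and (b) the textbook conversion from a measured shear wavelength to Young's modulus, E = 3ρ(fλ)². It is not the authors' implementation. The function names, the example frequencies, the wavelength, and the density of 1000 kg/m³ are all assumptions, and the formula assumes incompressible, isotropic, linearly elastic tissue.

```python
def aliased_frequency(f_signal_hz, f_sample_hz):
    """Apparent frequency of a pure tone at f_signal_hz sampled at f_sample_hz.

    With bandpass sampling, a narrow-band excitation of known frequency folds
    down to this lower frequency, so it can still be tracked at a low frame rate.
    """
    remainder = f_signal_hz % f_sample_hz
    return min(remainder, f_sample_hz - remainder)


def youngs_modulus_pa(freq_hz, wavelength_m, density_kg_m3=1000.0):
    """Young's modulus from a local shear wavelength.

    Assumes incompressible, isotropic, linearly elastic tissue, so that
    shear speed c = f * wavelength and E = 3 * rho * c**2.
    The default density is an assumed value close to that of water.
    """
    shear_speed = freq_hz * wavelength_m
    return 3.0 * density_kg_m3 * shear_speed ** 2


# Hypothetical numbers: a 200 Hz excitation sampled at 90 frames per second
# appears at 20 Hz; a 15 mm wavelength at 200 Hz gives about 27 kPa.
print(aliased_frequency(200.0, 90.0))
print(youngs_modulus_pa(200.0, 0.015) / 1000.0, "kPa")
```

In a multi-frequency setup, the sampling rate would need to be chosen so that each excitation frequency folds to a distinct apparent frequency; the values here were picked only to keep the arithmetic easy to follow.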

Rapid treatment planning for low-dose-rate prostate brachytherapy with TP-GAN

Mar 18, 2021

Abstract: Treatment planning in low-dose-rate prostate brachytherapy (LDR-PB) aims to produce an arrangement of implantable radioactive seeds that delivers a minimum prescribed dose to the prostate whilst minimizing toxicity to healthy tissues. Multiple seed arrangements can satisfy this dosimetric criterion, but not all are deemed 'acceptable' for implant from a physician's perspective. This leads to plans that depend on the physician's or centre's preference, planning style, and expertise. We propose a method that aims to reduce this variability by training a model to learn from a large pool of successful retrospective LDR-PB data (961 patients) and create consistent plans that mimic the high-quality manual plans. Our model is based on conditional generative adversarial networks that use a novel loss function for penalizing the model on spatial constraints of the seeds. An optional optimizer based on a simulated annealing (SA) algorithm can be used to further fine-tune the plans if necessary (as determined by the treating physician). Performance analysis was conducted on 150 test cases, demonstrating results comparable to those of the manual historical plans. On average, the percentage of the clinical target volume receiving 100% of the prescribed dose was 98.9% for our method, compared to 99.4% for manual plans. Moreover, using our model, the planning time was significantly reduced to an average of 2.5 mins/plan with SA and less than 3 seconds without SA. In comparison, manual planning at our centre takes around 20 mins/plan.
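The coverage figure reported above is commonly called V100: the share of the target volume that receives at least the prescribed dose. A minimal sketch of that calculation follows, assuming the dose has already been sampled at equally sized voxels inside the clinical target volume. The function name, the voxel doses, and the 144 Gy prescription are hypothetical and are not taken from the paper.

```python
def v100_percent(target_voxel_doses, prescribed_dose):
    """Percentage of target voxels receiving at least the prescribed dose.

    target_voxel_doses: doses (same unit as prescribed_dose) sampled at
    equally sized voxels inside the clinical target volume.
    """
    if not target_voxel_doses:
        raise ValueError("target_voxel_doses must not be empty")
    covered = sum(1 for dose in target_voxel_doses if dose >= prescribed_dose)
    return 100.0 * covered / len(target_voxel_doses)


# Hypothetical example: three of four voxels reach the prescription.
doses_gy = [150.0, 144.0, 143.0, 160.0]
print(v100_percent(doses_gy, prescribed_dose=144.0))  # 75.0
```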

Co-Generation and Segmentation for Generalized Surgical Instrument Segmentation on Unlabelled Data

Mar 16, 2021

Abstract: Surgical instrument segmentation for robot-assisted surgery is needed for accurate instrument tracking and augmented reality overlays. The topic has therefore been the subject of a number of recent papers in the CAI community. Deep learning-based methods have shown state-of-the-art performance for surgical instrument segmentation, but their results depend on labelled data. Labelled surgical data, however, is of limited availability and is a bottleneck in the surgical translation of these methods. In this paper, we demonstrate the limited generalizability of these methods on different datasets, including human robot-assisted surgeries. We then propose a novel joint generation and segmentation strategy to learn a segmentation model with better generalization capability to domains that have no labelled data. The method leverages the availability of labelled data in a different domain. The generator performs domain translation from the labelled domain to the unlabelled domain while, simultaneously, the segmentation model learns from the generated data and regularizes the generative model. We compared our method with state-of-the-art methods and showed its generalizability on publicly available datasets and on our own recorded video frames from robot-assisted prostatectomies. Our method shows consistently high mean Dice scores on both labelled and unlabelled domains when labelled data is available for only one of the domains. *M. Kalia and T. Aleef contributed equally to the manuscript.
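For readers unfamiliar with the metric named in this abstract, the Dice score between a predicted and a reference binary mask is 2|A∩B| / (|A| + |B|). The sketch below computes it for flattened 0/1 masks. The function name and the convention of returning 1.0 when both masks are empty are assumptions, not details from the paper.

```python
def dice_score(predicted_mask, reference_mask):
    """Dice coefficient between two flattened binary masks (sequences of 0/1)."""
    if len(predicted_mask) != len(reference_mask):
        raise ValueError("masks must have the same number of pixels")
    overlap = sum(1 for p, r in zip(predicted_mask, reference_mask) if p and r)
    total = sum(1 for p in predicted_mask if p) + sum(1 for r in reference_mask if r)
    if total == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * overlap / total


# Two foreground pixels in each mask, one of them shared.
print(dice_score([1, 1, 0, 0], [1, 0, 1, 0]))  # 0.5
```

A mean Dice score, as reported in the abstract, would be the average of this value over all evaluated frames.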

Automatic Mass Detection in Breast Using Deep Convolutional Neural Network and SVM Classifier

Jul 09, 2019

Abstract: Mammography is the most widely used gold standard for breast cancer screening, in which mass detection is a prominent step. Detecting masses in the breast is, however, an arduous problem, as they vary widely in shape, size, boundary, and texture. In this paper, mass detection is automated using transfer learning with deep convolutional neural networks (DCNNs). A pre-trained VGG19 network is used to extract features, a bagged decision tree then performs feature selection, and a support vector machine (SVM) classifier is trained to classify between mass and non-mass. The area under the ROC curve (AUC) is chosen as the performance metric and is maximized during classifier selection and hyper-parameter tuning. The robustness of the two selected types of classifier, C-SVM and ν-SVM, is investigated with extensive experiments before the best-performing classifier is selected. All experiments in this paper were conducted using the INbreast dataset. The best AUC obtained from the experimental results is 0.994 ± 0.003, i.e. [0.991, 0.997]. Our results show that, with a pre-trained VGG19 network, high-level distinctive features can be extracted from mammograms which, when used with the proposed SVM classifier, robustly distinguish between mass and non-mass in the breast.
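The performance metric named in this abstract, the area under the ROC curve, equals the probability that a randomly chosen positive sample receives a higher classifier score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it directly from that definition. It is a plain illustration of the metric, not the paper's evaluation code, and the labels and scores are made up.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: sequence of 0 (non-mass) or 1 (mass).
    scores: classifier outputs, higher meaning more likely mass.
    """
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    correct = 0.0
    for pos_score in positives:
        for neg_score in negatives:
            if pos_score > neg_score:
                correct += 1.0
            elif pos_score == neg_score:
                correct += 0.5  # ties count as half a correct ranking
    return correct / (len(positives) * len(negatives))


# Made-up scores: three of the four positive/negative pairs are ordered correctly.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

The pairwise loop is quadratic in the number of samples, which is fine for illustration; a rank-based formulation would be preferable for large test sets.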

Smoothness-based Edge Detection using Low-SNR Camera for Robot Navigation

Oct 03, 2017

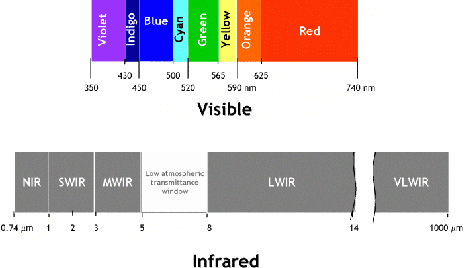

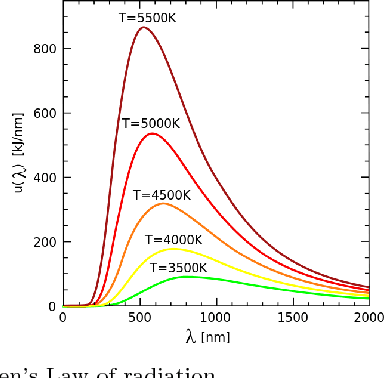

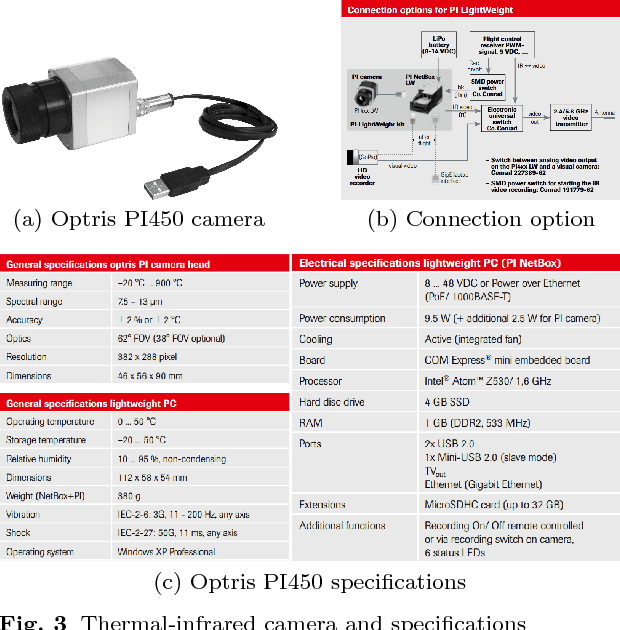

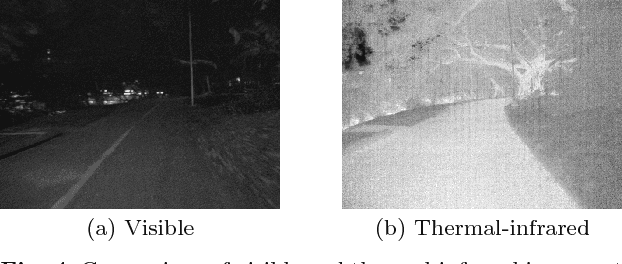

Abstract: With the ongoing advancement of autonomous robotics, the ability of a robot to efficiently localize itself and construct maps of its surroundings is crucial. This paper deals with using thermal-infrared cameras, as opposed to conventional cameras, as the primary sensor to capture images of the robot's surroundings. For localization, the images need to be processed further before they are fed to a navigation system. The main motivation of this paper was to develop an edge detection methodology capable of taking the poor, low-SNR output of such a thermal camera and effectively detecting smooth edges of the surrounding environment. The enhanced edge detector proposed in this paper takes the raw image from the thermal sensor, denoises it, and applies Canny edge detection followed by the CSS method. The edges are ranked to remove any noise, and only edges of the highest rank are kept. The broken edges are then linked by computing edge metrics, and a smooth edge of the surroundings is displayed in a binary image. The paper also makes several comparisons between the proposed technique and existing techniques.
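The abstract does not say how edges are ranked, so the sketch below only illustrates the general idea of discarding low-ranked edge fragments from a binary edge map. It assumes, purely for illustration, that an edge's rank is its length in pixels under 8-connectivity. The function name and the threshold are hypothetical, and the denoising, Canny, CSS, and edge-linking stages are not reproduced.

```python
from collections import deque


def keep_long_edges(edge_map, min_length):
    """Keep only connected edge fragments with at least min_length pixels.

    edge_map: list of rows of 0/1 values, e.g. the output of an edge detector.
    Returns a new binary map of the same size.
    """
    rows, cols = len(edge_map), len(edge_map[0])
    visited = [[False] * cols for _ in range(rows)]
    result = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not edge_map[r][c] or visited[r][c]:
                continue
            # Breadth-first search collects one 8-connected edge fragment.
            fragment = []
            queue = deque([(r, c)])
            visited[r][c] = True
            while queue:
                y, x = queue.popleft()
                fragment.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and edge_map[ny][nx] and not visited[ny][nx]):
                            visited[ny][nx] = True
                            queue.append((ny, nx))
            # Assumed ranking rule: longer fragments rank higher.
            if len(fragment) >= min_length:
                for y, x in fragment:
                    result[y][x] = 1
    return result


noisy = [
    [1, 1, 1, 0, 0],
    [0, 0, 0, 0, 1],  # isolated pixel, treated as noise
    [0, 0, 0, 0, 0],
]
for row in keep_long_edges(noisy, min_length=2):
    print(row)
```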
