Taiping Zeng

ELA-ZSON: Efficient Layout-Aware Zero-Shot Object Navigation Agent with Hierarchical Planning

May 09, 2025

Abstract: We introduce ELA-ZSON, an efficient layout-aware zero-shot object navigation (ZSON) approach designed for complex multi-room indoor environments. By planning hierarchically, combining a global topological map that carries layout information with a local imperative approach backed by a detailed scene representation memory, ELA-ZSON achieves both efficient and effective navigation. The process is managed by an LLM-powered agent, ensuring seamless and effective planning and navigation without the need for human interaction, complex rewards, or costly training. Our experiments on the MP3D benchmark achieve an 85% object navigation success rate (SR) and a 79% success rate weighted by path length (SPL), an improvement of over 40 percentage points in SR and 60% in SPL compared to existing methods. Furthermore, we validate the robustness of our approach through virtual agent and real-world robotic deployment, showcasing its capability in practical scenarios. See https://anonymous.4open.science/r/ELA-ZSON-C67E/ for details.

LOP-Field: Brain-inspired Layout-Object-Position Fields for Robotic Scene Understanding

Jun 11, 2024

Abstract: Spatial cognition empowers animals with remarkably efficient navigation abilities, which largely depend on scene-level understanding of spatial environments. Recently, it has been found that a neural population in the postrhinal cortex of rat brains is more strongly tuned to the spatial layout than to the objects in a scene. Inspired by the way representations of spatial layout in local scenes encode different regions separately, we propose LOP-Field, which realizes the Layout-Object-Position (LOP) association to model hierarchical representations for robotic scene understanding. Powered by foundation models and implicit scene representation, a neural field is implemented as a scene memory for robots, storing a queryable representation of scenes with position-wise, object-wise, and layout-wise information. To validate the built LOP association, the model is tested on inferring region information from 3D positions with quantitative metrics, achieving an average accuracy of more than 88%. It is also shown that the proposed method, by using region information, achieves improved object and view localization results with text and RGB input compared to state-of-the-art localization methods.

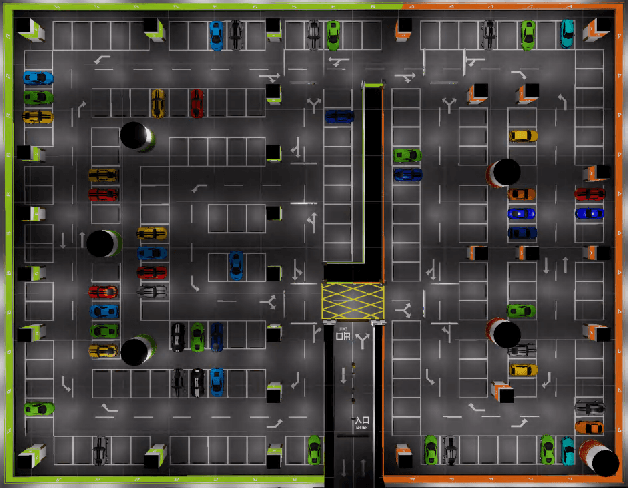

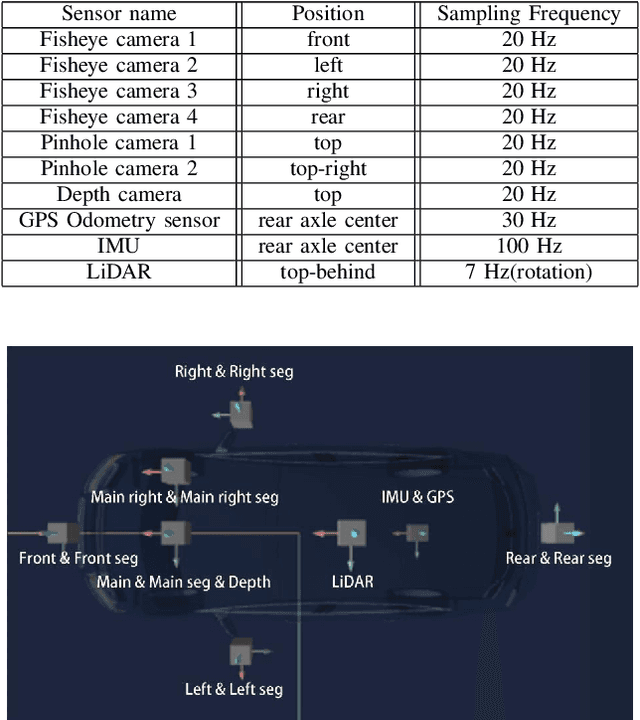

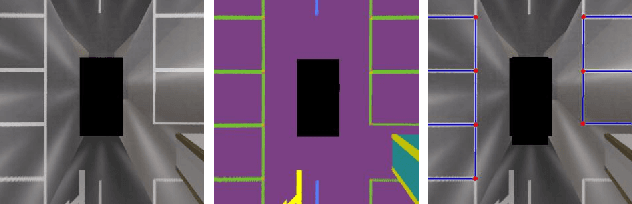

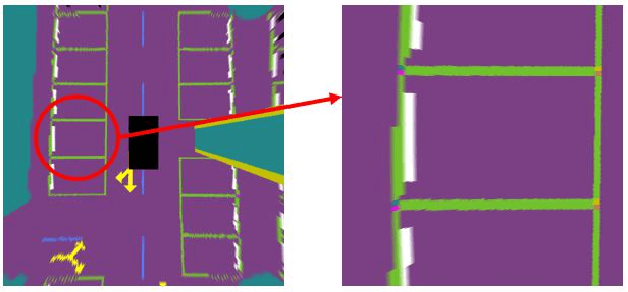

SUPS: A Simulated Underground Parking Scenario Dataset for Autonomous Driving

Feb 25, 2023

Abstract: Automatic underground parking has attracted considerable attention as the scope of autonomous driving expands. The autonomous vehicle is expected to obtain environmental information, track its location, and build a reliable map of the scenario. Mainstream solutions consist of well-trained neural networks and simultaneous localization and mapping (SLAM) methods, which need numerous carefully labeled images and multiple sensor estimations. However, there is a lack of underground parking scenario datasets with multiple sensors and well-labeled images that support both SLAM tasks and perception tasks, such as semantic segmentation and parking slot detection. In this paper, we present SUPS, a simulated dataset for underground automatic parking, which supports multiple tasks with multiple sensors and multiple semantic labels aligned with successive images according to timestamps. We intend to address the shortcomings of existing datasets through the variability of environments and the diversity and accessibility of sensors in the virtual scene. Specifically, the dataset records frames from four surrounding fisheye cameras, two forward pinhole cameras, and a depth camera, along with data from LiDAR, an inertial measurement unit (IMU), and GNSS. Pixel-level semantic labels are provided for objects, especially ground signs such as arrows, parking lines, lanes, and speed bumps. Perception, 3D reconstruction, depth estimation, SLAM, and other related tasks are supported by our dataset. We also evaluate state-of-the-art SLAM algorithms and perception models on our dataset. Finally, we open source our virtual 3D scene built with the Unity Engine and release our dataset at https://github.com/jarvishou829/SUPS.

StereoNeuroBayesSLAM: A Neurobiologically Inspired Stereo Visual SLAM System Based on Direct Sparse Method

Mar 06, 2020

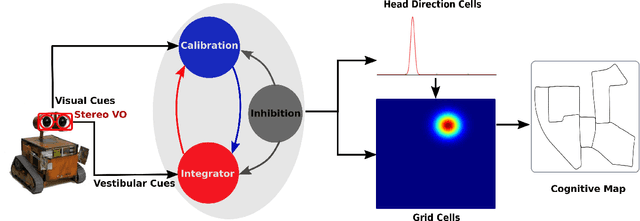

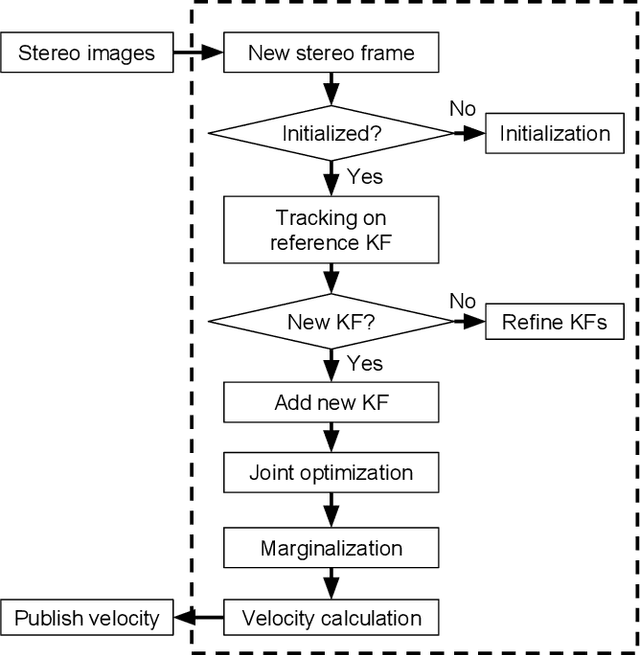

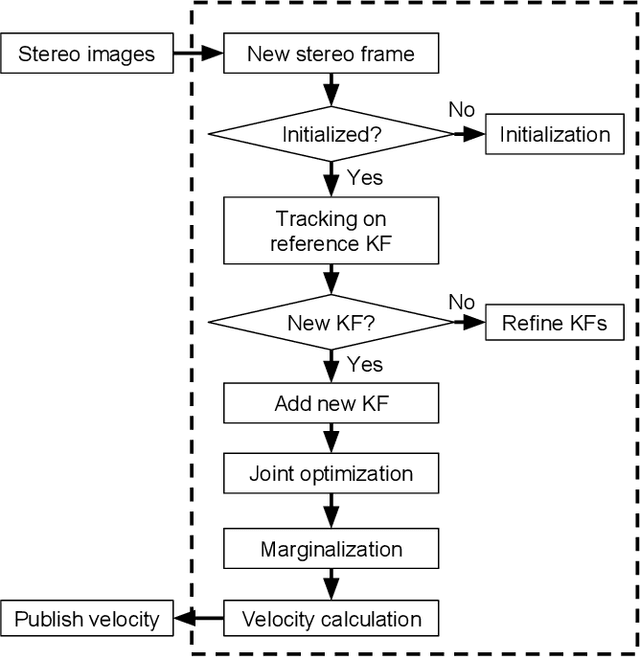

Abstract: We propose a neurobiologically inspired visual simultaneous localization and mapping (SLAM) system based on the direct sparse method to build cognitive maps of large-scale environments in real time from a moving stereo camera. The core SLAM system mainly comprises a Bayesian attractor network, which utilizes neural responses of head direction (HD) cells in the hippocampus and grid cells in the medial entorhinal cortex (MEC) to represent the head direction and the position of the robot in the environment, respectively. The direct sparse method is employed to accurately and robustly estimate velocity information from a stereo camera. Input rotational and translational velocities are integrated by the HD cell and grid cell networks, respectively. We demonstrate our neurobiologically inspired stereo visual SLAM system on the KITTI odometry benchmark datasets. Our proposed SLAM system robustly builds a coherent semi-metric topological map in real time from a stereo camera. Qualitative evaluation of the cognitive maps shows that our proposed neurobiologically inspired stereo visual SLAM system outperforms our previous brain-inspired algorithms and the neurobiologically inspired monocular visual SLAM system in terms of both tracking accuracy and robustness, and that its performance is closer to that of traditional state-of-the-art systems.

Learning Sparse Spatial Codes for Cognitive Mapping Inspired by Entorhinal-Hippocampal Neurocircuit

Oct 10, 2019

Abstract: The entorhinal-hippocampal circuit plays a critical role in higher brain functions, especially spatial cognition. Grid cells in the medial entorhinal cortex (MEC) fire periodically with different grid spacings and orientations, which helps place cells in the hippocampus uniquely encode locations in an environment. However, how sparsely firing granule cells in the dentate gyrus are formed from grid cells in the MEC remains to be determined. Recently, the fruit fly olfactory circuit has been shown to provide a variant algorithm (called locality-sensitive hashing) for solving this problem. To investigate how sparse place firing generated in the dentate gyrus can help animals resolve perceptual ambiguity during environment exploration, we build a biologically relevant computational model from grid cells to place cells. The weights from grid cells to dentate gyrus granule cells are learned by competitive Hebbian learning. We demonstrate our cognitive mapping model on a robot system using the KITTI odometry benchmark dataset. The experimental results show that our model is able to stably and robustly build a coherent semi-metric topological map in a large-scale outdoor environment. The results also suggest that the entorhinal-hippocampal circuit, acting as a variant locality-sensitive hashing algorithm, is capable of generating sparse encodings that make it easy to distinguish different locations in the environment. Our experiments further provide theoretical support for the idea that this analogous hashing algorithm may be a general principle of computation across different brain regions and species.

A Brain-Inspired Compact Cognitive Mapping System

Oct 09, 2019

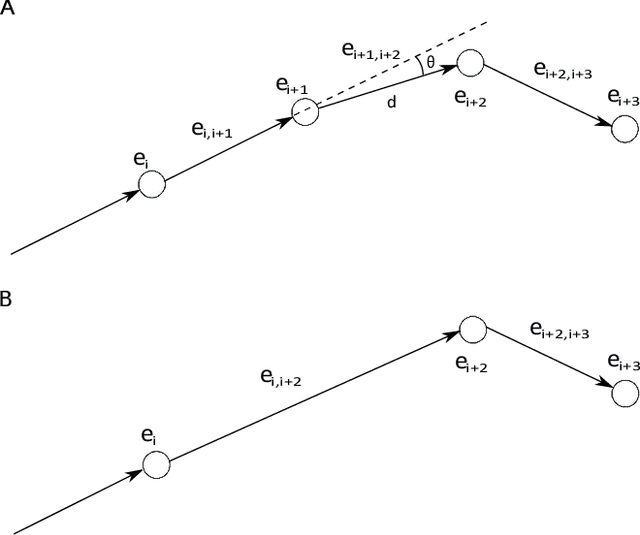

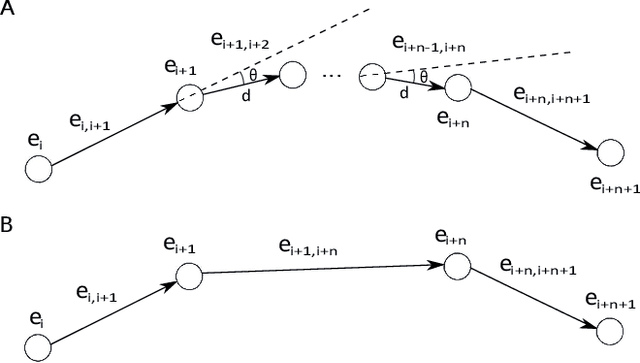

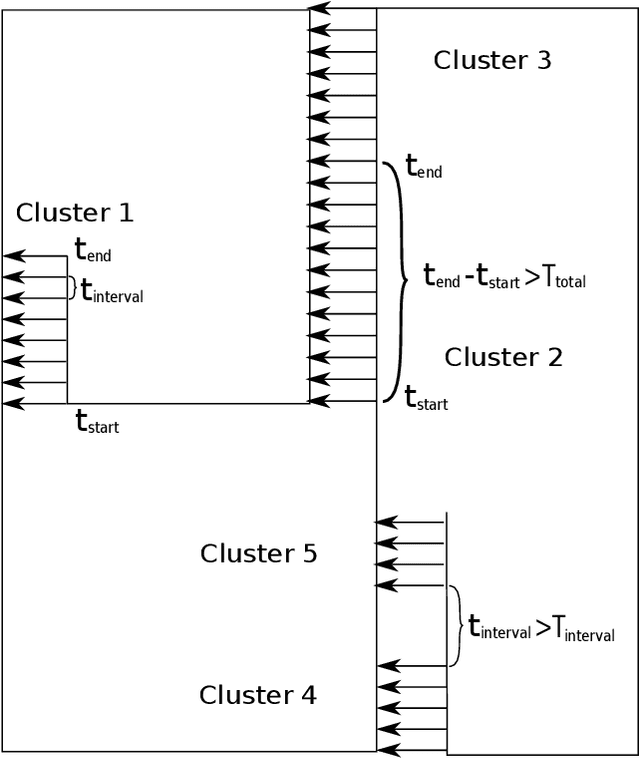

Abstract: As a robot explores its environment, the map in a simultaneous localization and mapping (SLAM) system grows over time, especially in large-scale environments. The ever-growing map prevents long-term mapping. In this paper, we develop a compact cognitive mapping approach inspired by neurobiological experiments. Inspired by neighborhood cells, we propose neighborhood fields, determined by movement information (i.e., translation and rotation), to describe distinct segments of the explored environment. Vertices and edges whose movement information falls below the threshold of the neighborhood fields are not added to the cognitive map. The optimization of the cognitive map is formulated as a robust non-linear least squares problem, which can be efficiently solved as a general problem by fast open linear solvers. Following the cognitive decision-making used in familiar environments, loop closure edges are clustered according to time intervals, and parallel computing is then applied to perform batch global optimization of the cognitive map, ensuring computational efficiency and real-time performance. After the loop closure process, scene integration is performed, in which revisited vertices are removed to further reduce the size of the cognitive map. A monocular visual SLAM system is developed to test our approach in a rat-like maze environment. Our results suggest that the method largely restricts the growth of the cognitive map over time, while the compact cognitive map still represents the overall layout of the environment as correctly as the standard one. Experiments demonstrate that our method is well suited to compact cognitive mapping in support of long-term robot mapping. Our approach is simple, yet pragmatic and efficient for achieving a compact cognitive map.
