Susan Chan

Gemma 2: Improving Open Language Models at a Practical Size

Aug 02, 2024

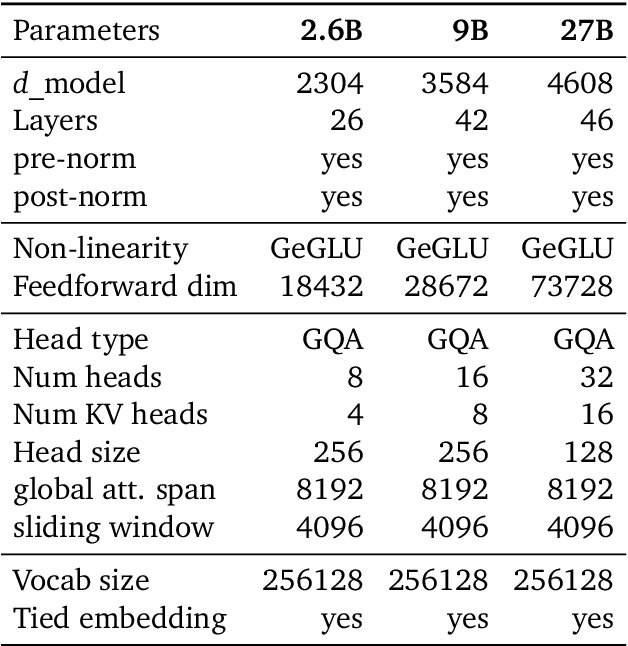

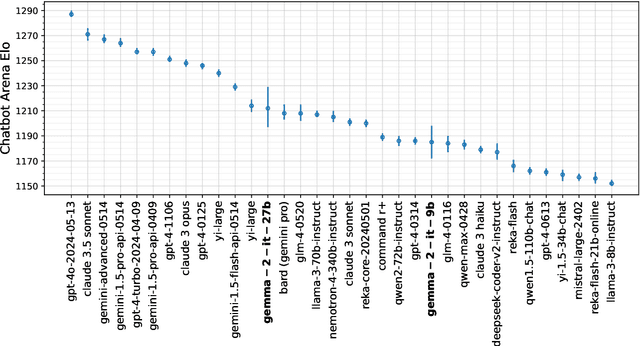

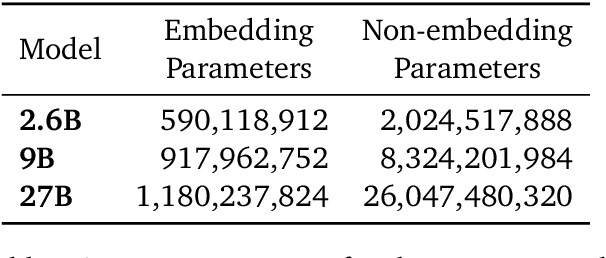

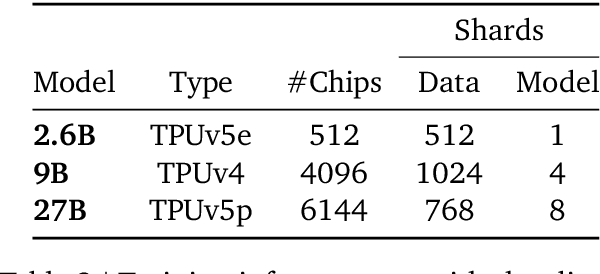

Abstract: In this work, we introduce Gemma 2, a new addition to the Gemma family of lightweight, state-of-the-art open models, ranging in scale from 2 billion to 27 billion parameters. In this new version, we apply several known technical modifications to the Transformer architecture, such as interleaving local-global attentions (Beltagy et al., 2020a) and group-query attention (Ainslie et al., 2023). We also train the 2B and 9B models with knowledge distillation (Hinton et al., 2015) instead of next token prediction. The resulting models deliver the best performance for their size, and even offer competitive alternatives to models that are 2-3 times bigger. We release all our models to the community.

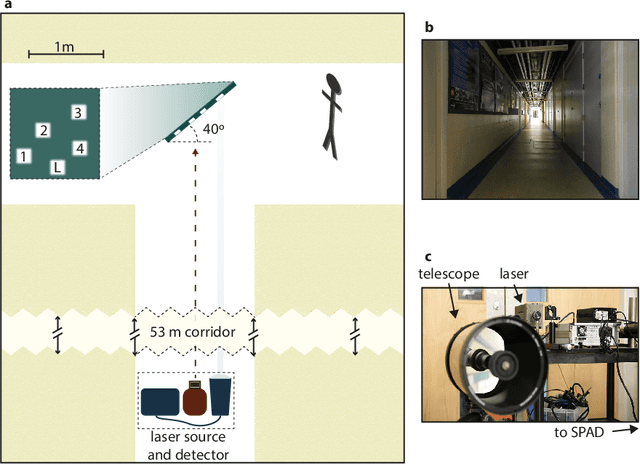

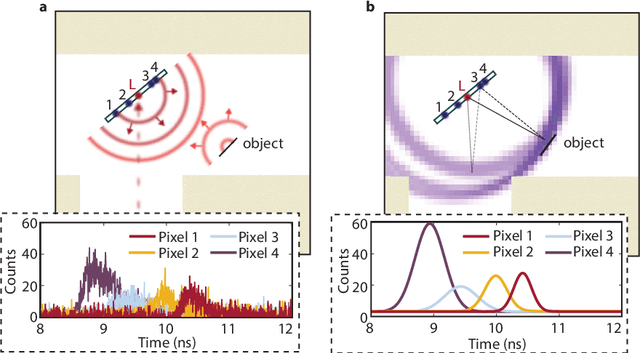

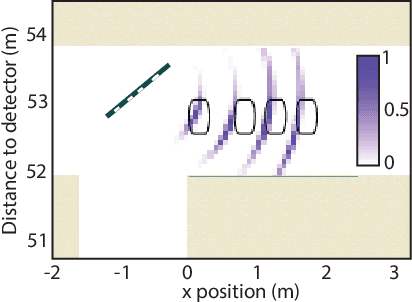

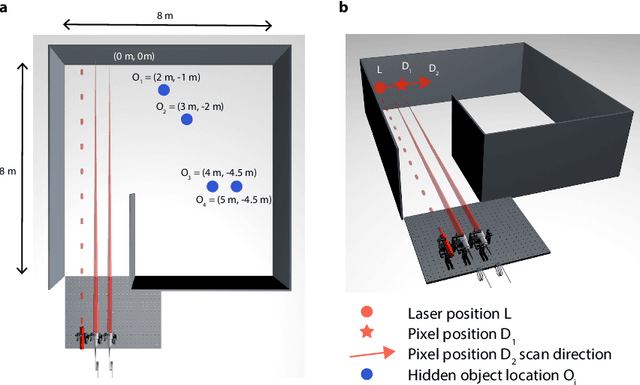

Non-line-of-sight tracking of people at long range

Mar 02, 2017

Abstract: A remote-sensing system that can determine the position of hidden objects has applications in many critical real-life scenarios, such as search and rescue missions and safe autonomous driving. Previous work has shown the ability to range and image objects hidden from the direct line of sight, employing advanced optical imaging technologies aimed at small objects at short range. In this work we demonstrate a long-range tracking system based on single laser illumination and single-pixel single-photon detection. This enables us to track one or more people hidden from view at a stand-off distance of over 50 m. These results pave the way towards next generation LiDAR systems that will reconstruct not only the direct-view scene but also the main elements hidden behind walls or corners.
