Suril Mehta

"Can't Take the Pressure?": Examining the Challenges of Blood Pressure Estimation via Pulse Wave Analysis

Apr 23, 2023

Abstract: The use of observed wearable sensor data (e.g., photoplethysmograms [PPG]) to infer health measures (e.g., glucose level or blood pressure) is a very active area of research. Such technology can have a significant impact on health screening, chronic disease management and remote monitoring. A common approach is to collect sensor data and corresponding labels from a clinical-grade device (e.g., a blood pressure cuff), and train deep learning models to map one to the other. Although well intentioned, this approach often skips a principled analysis of whether the input sensor data contains enough information to predict the desired metric. We analyze the task of predicting blood pressure from PPG pulse wave analysis. Our review of the prior work reveals that many papers fall prey to data leakage and to unrealistic constraints on the task and the preprocessing steps. We propose a set of tools to help determine whether the input signal in question (e.g., PPG) is indeed a good predictor of the desired label (e.g., blood pressure). Using our proposed tools, we have found that blood pressure prediction using PPG has a high multi-valued mapping factor of 33.2% and low mutual information of 9.8%. In comparison, heart rate prediction using PPG, a well-established task, has a very low multi-valued mapping factor of 0.75% and high mutual information of 87.7%. We argue that these results provide a more realistic representation of the current progress towards the goal of wearable blood pressure measurement via PPG pulse wave analysis.

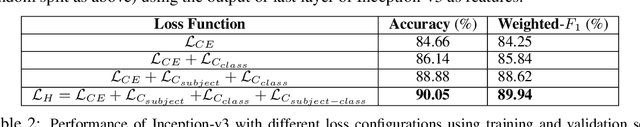

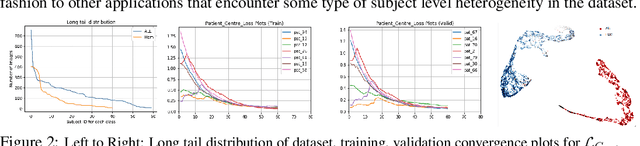

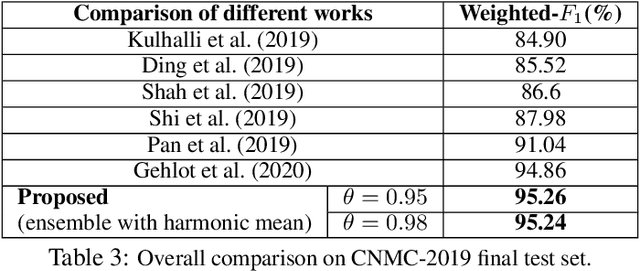

Heterogeneity Loss to Handle Intersubject and Intrasubject Variability in Cancer

Mar 19, 2020

Abstract: Developing nations lack an adequate number of hospitals with modern equipment and skilled doctors. Hence, a significant proportion of these nations' population, particularly in rural areas, is not able to access specialized and timely healthcare facilities. In recent years, deep learning (DL) models, a class of artificial intelligence (AI) methods, have shown impressive results in the medical domain. These AI methods can provide immense support to developing nations as affordable healthcare solutions. This work focuses on one such application, blood cancer diagnosis. However, DL models face challenges in cancer research because of the unavailability of large datasets for adequate training and the difficulty of capturing heterogeneity in data at different levels, ranging from acquisition characteristics and session to the subject level (within subjects and across subjects). These challenges render DL models prone to overfitting, and hence the models generalize poorly to prospective subjects' data. In this work, we address these problems in the application of B-cell Acute Lymphoblastic Leukemia (B-ALL) diagnosis using deep learning. We propose a heterogeneity loss that captures subject-level heterogeneity, thereby forcing the neural network to learn subject-independent features. We also propose an unorthodox ensemble strategy that provides improved classification over models trained on 7 folds, giving a weighted-$F_1$ score of 95.26% on unseen (test) subjects' data, which is, so far, the best result on the C-NMC 2019 dataset for B-ALL classification.

Synthetic Video Generation for Robust Hand Gesture Recognition in Augmented Reality Applications

Dec 06, 2019

Abstract: Hand gestures are a natural means of interaction in Augmented Reality and Virtual Reality (AR/VR) applications. Recently, there has been an increased focus on removing the dependence of accurate hand gesture recognition on the complex sensor setups found in expensive proprietary devices such as the Microsoft HoloLens, Daqri and Meta Glasses. Most such solutions rely either on multi-modal sensor data or on deep neural networks that benefit greatly from an abundance of labelled data. Datasets are an integral part of any deep learning based research. They have been the principal reason for the substantial progress in this field, both in providing enough data for training these models and in benchmarking competing algorithms. However, it is becoming increasingly difficult to generate enough labelled data for complex tasks such as hand gesture recognition. The goal of this work is to introduce a framework capable of generating photo-realistic videos with labelled hand bounding boxes and fingertips, which can help in designing, training, and benchmarking models for hand gesture recognition in AR/VR applications. We demonstrate the efficacy of our framework in generating videos with diverse backgrounds.
