Sung-Min Gho

Complex Swin Transformer for Accelerating Enhanced SMWI Reconstruction

Dec 21, 2025

Abstract:Susceptibility Map Weighted Imaging (SMWI) is an advanced magnetic resonance imaging technique used to detect nigral hyperintensity in Parkinson's disease. However, full-resolution SMWI acquisition is limited by long scan times. Efficient reconstruction methods are therefore required to generate high-quality SMWI from reduced k-space data while preserving diagnostic relevance. In this work, we propose a complex-valued Swin Transformer-based network for super-resolution reconstruction of multi-echo MRI data. The proposed method reconstructs high-quality SMWI images from low-resolution k-space inputs. Experimental results demonstrate that the method achieves a structural similarity index of 0.9116 and a mean squared error of 0.076 when reconstructing SMWI from 256 × 256 k-space data, while maintaining critical diagnostic features. This approach enables high-quality SMWI reconstruction from reduced k-space sampling, leading to shorter scan times without compromising diagnostic detail. The proposed method has the potential to improve the clinical applicability of SMWI for Parkinson's disease and support faster and more efficient neuroimaging workflows.
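As a rough illustration of what a "low-resolution k-space input" can look like, the sketch below keeps only the central 256 × 256 block of a complex image's k-space. The abstract does not say how the reduced k-space data were obtained, so the center-cropping scheme, the 512 × 512 full size, and the function names `center_crop_kspace` and `lowres_image` are all assumptions made for illustration, not the authors' pipeline.

```python
import numpy as np

def center_crop_kspace(image, out_shape):
    """Return the central out_shape block of the centered 2D k-space of image.

    Assumption: the reduced data are modeled as a low-frequency crop.
    """
    rows, cols = image.shape
    r, c = out_shape
    # Move the zero-frequency (DC) sample to index (rows // 2, cols // 2).
    k_full = np.fft.fftshift(np.fft.fft2(image))
    # Choose offsets so that DC lands at (r // 2, c // 2) in the crop.
    r0 = rows // 2 - r // 2
    c0 = cols // 2 - c // 2
    return k_full[r0:r0 + r, c0:c0 + c]

def lowres_image(k_low, full_shape):
    """Inverse-transform cropped k-space into a complex low-resolution image."""
    # Rescale so the mean intensity matches that of the full-size image.
    scale = k_low.size / (full_shape[0] * full_shape[1])
    return np.fft.ifft2(np.fft.ifftshift(k_low)) * scale

# Hypothetical complex-valued "full-resolution" image (random stand-in data).
rng = np.random.default_rng(0)
full = rng.standard_normal((512, 512)) + 1j * rng.standard_normal((512, 512))

k_low = center_crop_kspace(full, (256, 256))
low = lowres_image(k_low, full.shape)
print(k_low.shape, low.dtype)
```

The low-resolution image stays complex-valued, which is the kind of input a complex-valued network would consume; magnitude and phase can be recovered with `np.abs` and `np.angle` if needed.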

Biological Brain Age Estimation using Sex-Aware Adversarial Variational Autoencoder with Multimodal Neuroimages

Dec 07, 2024

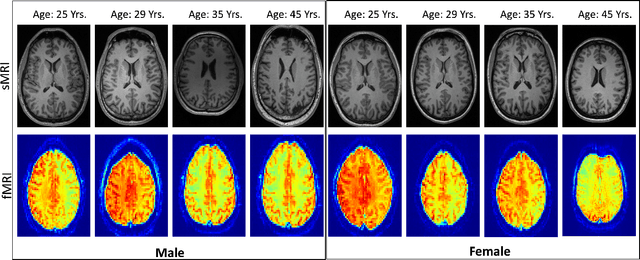

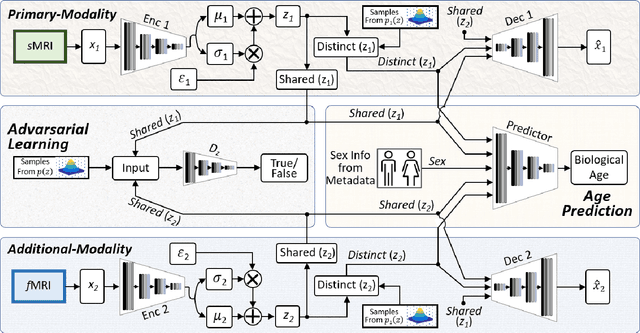

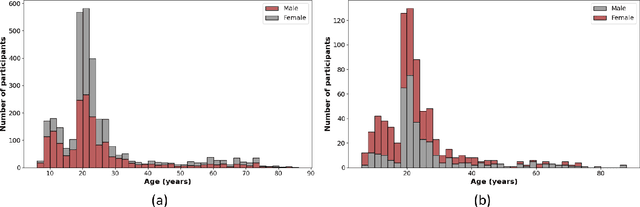

Abstract:Brain aging involves structural and functional changes and therefore serves as a key biomarker for brain health. Combining structural magnetic resonance imaging (sMRI) and functional magnetic resonance imaging (fMRI) has the potential to improve brain age estimation by leveraging complementary data. However, fMRI data, being noisier than sMRI, complicates multimodal fusion. Traditional fusion methods often introduce more noise than useful information, which can reduce accuracy compared to using sMRI alone. In this paper, we propose a novel multimodal framework for biological brain age estimation, utilizing a sex-aware adversarial variational autoencoder (SA-AVAE). Our framework integrates adversarial and variational learning to effectively disentangle the latent features from both modalities. Specifically, we decompose the latent space into modality-specific codes and shared codes to represent complementary and common information across modalities, respectively. To enhance the disentanglement, we introduce cross-reconstruction and shared-distinct distance ratio loss as regularization terms. Importantly, we incorporate sex information into the learned latent code, enabling the model to capture sex-specific aging patterns for brain age estimation via an integrated regressor module. We evaluate our model using the publicly available OpenBHB dataset, a comprehensive multi-site dataset for brain age estimation. The results from ablation studies and comparisons with state-of-the-art methods demonstrate that our framework outperforms existing approaches and shows significant robustness across various age groups, highlighting its potential for real-time clinical applications in the early detection of neurodegenerative diseases.

Multi-Task Adversarial Variational Autoencoder for Estimating Biological Brain Age with Multimodal Neuroimaging

Nov 15, 2024

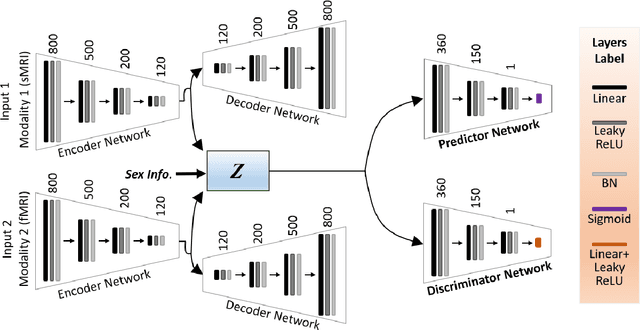

Abstract:Despite advances in deep learning for estimating brain age from structural MRI data, incorporating functional MRI data is challenging due to its complex structure and the noisy nature of functional connectivity measurements. To address this, we present the Multitask Adversarial Variational Autoencoder (M-AVAE), a custom deep learning framework designed to improve brain age predictions through multimodal MRI data integration. This model separates latent variables into generic and unique codes, isolating shared and modality-specific features. By integrating multitask learning with sex classification as an additional task, the model captures sex-specific aging patterns. Evaluated on the OpenBHB dataset, a large multisite brain MRI collection, the model achieves a mean absolute error of 2.77 years, outperforming traditional methods. This success positions M-AVAE as a powerful tool for metaverse-based healthcare applications in brain age estimation.

Stacked U-Nets with Self-Assisted Priors Towards Robust Correction of Rigid Motion Artifact in Brain MRI

Nov 11, 2021

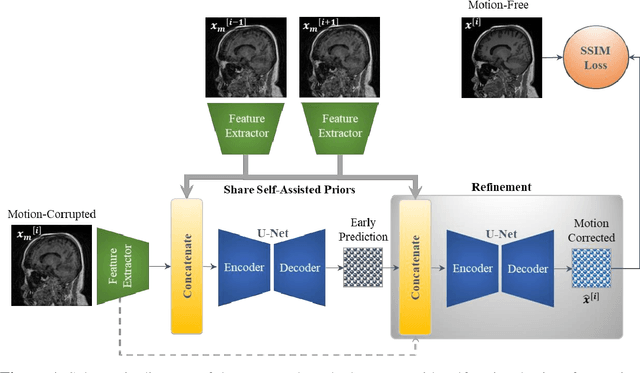

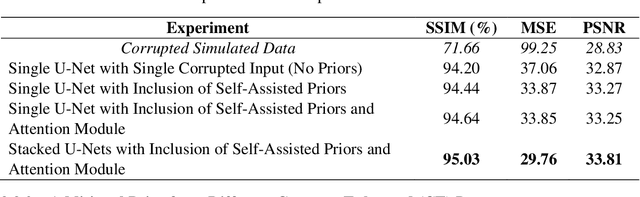

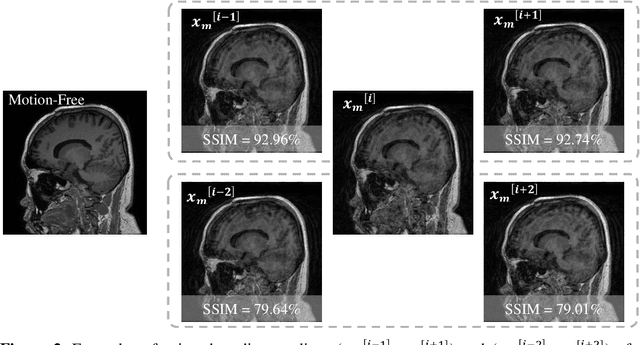

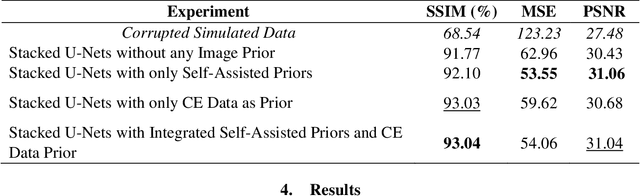

Abstract:In this paper, we develop an efficient retrospective deep learning method called stacked U-Nets with self-assisted priors to address the problem of rigid motion artifacts in MRI. The proposed work exploits additional prior knowledge from the corrupted images themselves, without the need for additional contrast data. The proposed network learns missed structural details by sharing auxiliary information from the contiguous slices of the same distorted subject. We further design refinement stacked U-Nets that help preserve the spatial details of the image and hence improve the pixel-to-pixel dependency. Training the network requires simulating MRI motion artifacts. We present an intensive analysis using various types of image priors: the proposed self-assisted priors and priors from other image contrasts of the same subject. The experimental analysis demonstrates the effectiveness and feasibility of our self-assisted priors, since they do not require any further data scans.
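The abstract notes that training relies on simulated motion artifacts but does not describe the simulation. One common way to approximate rigid in-plane translation is the Fourier shift theorem: a shift in image space is a linear phase ramp in k-space, so corrupting only some phase-encode lines mimics motion during part of the acquisition. The sketch below covers translation only (no rotation), and the function name `simulate_translation_artifact` and its parameters are hypothetical rather than taken from the paper.

```python
import numpy as np

def simulate_translation_artifact(image, corrupted_rows, shifts):
    """Corrupt selected k-space rows as if the object had moved.

    image          : 2D real-valued array (one slice).
    corrupted_rows : indices of k-space rows acquired "during motion".
    shifts         : one (dy, dx) translation in pixels per corrupted row.
    Assumption: rigid motion is modeled as pure in-plane translation.
    """
    ny, nx = image.shape
    k = np.fft.fft2(image)
    fy = np.fft.fftfreq(ny)  # row frequencies in cycles per pixel
    fx = np.fft.fftfreq(nx)  # column frequencies in cycles per pixel
    k_corrupt = k.copy()
    for row, (dy, dx) in zip(corrupted_rows, shifts):
        # Shift theorem: x[n - d] <-> X[f] * exp(-2j * pi * f * d)
        phase = np.exp(-2j * np.pi * (fy[row] * dy + fx * dx))
        k_corrupt[row, :] = k[row, :] * phase
    return np.abs(np.fft.ifft2(k_corrupt))

# Hypothetical usage: corrupt a random quarter of the rows of a toy slice.
rng = np.random.default_rng(0)
slice_ = rng.random((64, 64))
rows = rng.choice(64, size=16, replace=False)
shifts = [(2.0, -3.0)] * len(rows)
corrupted = simulate_translation_artifact(slice_, rows, shifts)
print(corrupted.shape)
```

Pairs of `(corrupted, slice_)` produced this way could serve as input and target for a correction network; a fuller simulation would also model rotation and time-varying motion.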
