Subhrajit Nag

FedDPC: Handling Data Heterogeneity and Partial Client Participation in Federated Learning

Dec 23, 2025

Abstract:Data heterogeneity is a significant challenge in modern federated learning (FL) as it creates variance in local model updates, causing the aggregated global model to shift away from the true global optimum. Partial client participation in FL further exacerbates this issue by skewing the aggregation of local models towards the data distribution of participating clients. This creates additional variance in the global model updates, causing the global model to converge away from the optima of the global objective. These variances lead to instability in FL training, which degrades global model performance and slows down FL training. While existing literature primarily focuses on addressing data heterogeneity, the impact of partial client participation has received less attention. In this paper, we propose FedDPC, a novel FL method, designed to improve FL training and global model performance by mitigating both data heterogeneity and partial client participation. FedDPC addresses these issues by projecting each local update onto the previous global update, thereby controlling variance in both local and global updates. To further accelerate FL training, FedDPC employs adaptive scaling for each local update before aggregation. Extensive experiments on image classification tasks with multiple heterogeneously partitioned datasets validate the effectiveness of FedDPC. The results demonstrate that FedDPC outperforms state-of-the-art FL algorithms by achieving faster reduction in training loss and improved test accuracy across communication rounds.
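The abstract describes projecting each local update onto the previous global update but does not state the formula. As a rough illustration only, the sketch below shows the textbook vector projection of one flattened update onto another. The function name `project_onto` and the plain-list representation are assumptions made for this example, and the paper's actual projection and adaptive scaling steps may differ.

```python
def project_onto(update, direction):
    """Return the component of `update` that lies along `direction`.

    Both arguments are flat lists of floats of equal length. This is the
    standard projection (<u, d> / <d, d>) * d, not necessarily the exact
    operation used by FedDPC.
    """
    if len(update) != len(direction):
        raise ValueError("update and direction must have the same length")
    denom = sum(d * d for d in direction)
    if denom == 0.0:
        # A zero direction (e.g. the first round) carries no information.
        return [0.0] * len(update)
    coeff = sum(u * d for u, d in zip(update, direction)) / denom
    return [coeff * d for d in direction]


# Hypothetical values: a local update and the previous global update.
local_update = [3.0, 4.0]
previous_global_update = [1.0, 0.0]
projected = project_onto(local_update, previous_global_update)
```

In this toy case only the part of the local update that points along the previous global direction is kept; the orthogonal part is discarded.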

ACLNet: An Attention and Clustering-based Cloud Segmentation Network

Jul 13, 2022

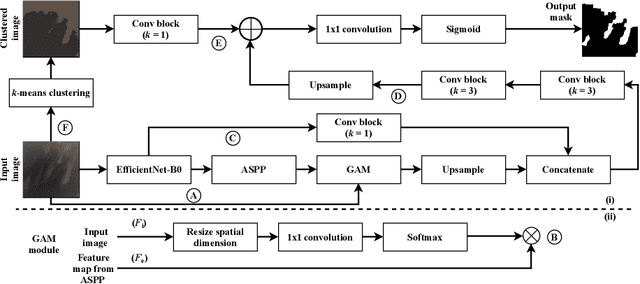

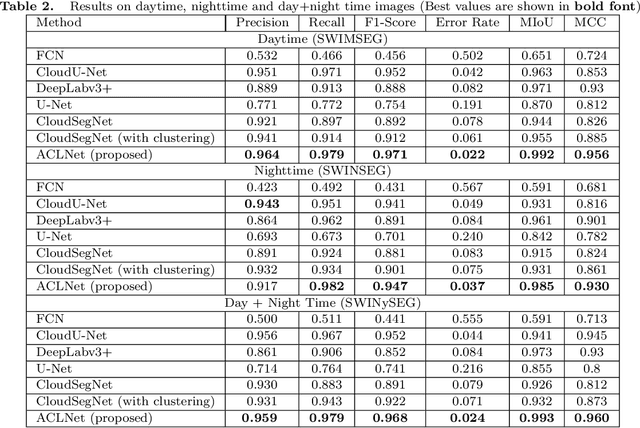

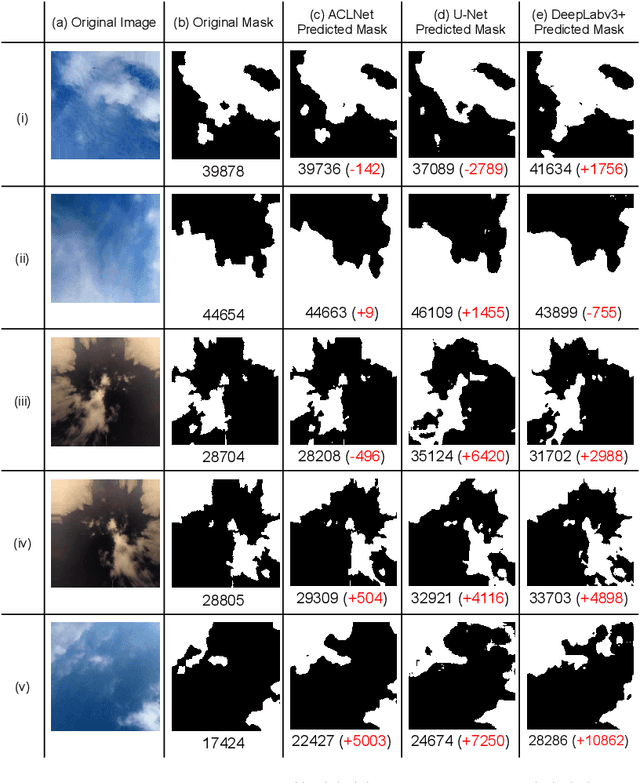

Abstract:We propose a novel deep learning model named ACLNet, for cloud segmentation from ground images. ACLNet uses both a deep neural network and a machine learning (ML) algorithm to extract complementary features. Specifically, it uses EfficientNet-B0 as the backbone, "à trous spatial pyramid pooling" (ASPP) to learn at multiple receptive fields, and a "global attention module" (GAM) to extract fine-grained details from the image. ACLNet also uses k-means clustering to extract cloud boundaries more precisely. ACLNet is effective for both daytime and nighttime images. It provides a lower error rate, higher recall and higher F1-score than state-of-the-art cloud segmentation models. The source code of ACLNet is available here: https://github.com/ckmvigil/ACLNet.

* 11 pages, 3 figures, 5 tables, Published in Remote Sensing Letters

WaferSegClassNet -- A Light-weight Network for Classification and Segmentation of Semiconductor Wafer Defects

Jul 03, 2022

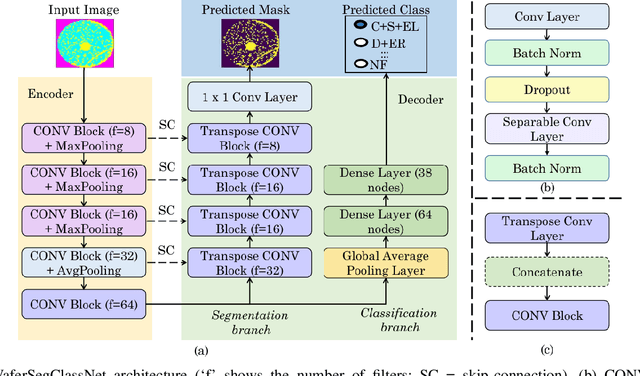

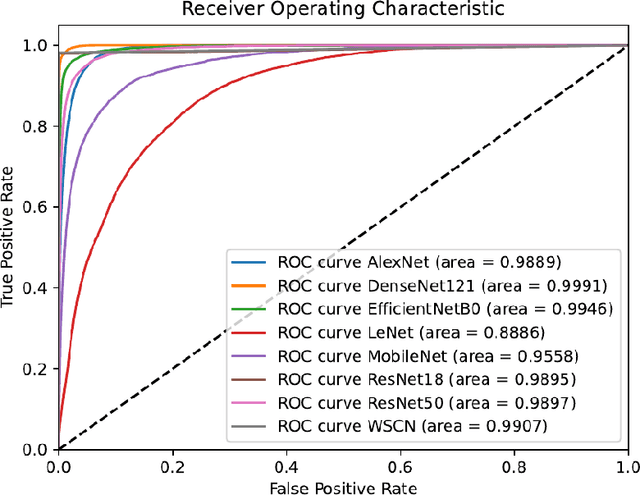

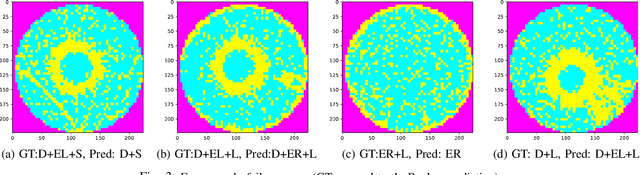

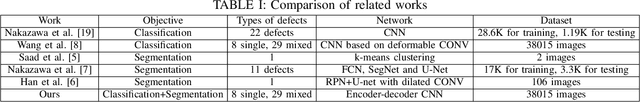

Abstract:As the integration density and design intricacy of semiconductor wafers increase, the magnitude and complexity of defects in them are also on the rise. Since the manual inspection of wafer defects is costly, an automated artificial intelligence (AI) based computer-vision approach is highly desired. The previous works on defect analysis have several limitations, such as low accuracy and the need for separate models for classification and segmentation. For analyzing mixed-type defects, some previous works require separately training one model for each defect type, which is non-scalable. In this paper, we present WaferSegClassNet (WSCN), a novel network based on encoder-decoder architecture. WSCN performs simultaneous classification and segmentation of both single and mixed-type wafer defects. WSCN uses a "shared encoder" for classification and segmentation, which allows training WSCN end-to-end. We use N-pair contrastive loss to first pretrain the encoder and then use BCE-Dice loss for segmentation and categorical cross-entropy loss for classification. The N-pair contrastive loss yields a better embedding of wafer maps in the latent space. WSCN has a model size of only 0.51MB and performs only 0.2M FLOPS. Thus, it is much lighter than other state-of-the-art models. Also, it requires only 150 epochs for convergence, compared to 4,000 epochs needed by a previous work. We evaluate our model on the MixedWM38 dataset, which has 38,015 images. WSCN achieves an average classification accuracy of 98.2% and a dice coefficient of 0.9999. We are the first to show segmentation results on the MixedWM38 dataset. The source code can be obtained from https://github.com/ckmvigil/WaferSegClassNet.

* 11 pages, 2 figures, 7 tables, Published in Computers in Industry
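The WaferSegClassNet abstract reports a Dice coefficient and mentions a BCE-Dice segmentation loss without defining either. The sketch below uses the common definitions on flattened masks: Dice as 2|A∩B| / (|A| + |B|), and BCE-Dice as an unweighted sum of binary cross-entropy and (1 - Dice). The equal weighting, the smoothing constant `eps`, and all function names are assumptions for illustration; the paper's implementation may differ.

```python
import math


def dice_coefficient(pred, target, eps=1e-7):
    """Dice overlap between two flat masks with values in [0, 1]."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # eps keeps the ratio defined when both masks are empty.
    return (2.0 * intersection + eps) / (total + eps)


def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean binary cross-entropy; predictions are clamped away from 0 and 1."""
    loss = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        loss -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return loss / len(pred)


def bce_dice_loss(pred, target):
    """Assumed combination: BCE plus (1 - Dice), equally weighted."""
    return binary_cross_entropy(pred, target) + (1.0 - dice_coefficient(pred, target))


# Hypothetical 4-pixel example: one of two predicted pixels is correct.
score = dice_coefficient([1, 1, 0, 0], [1, 0, 0, 0])
```

Here the intersection is 1 and the two masks contain 2 and 1 foreground pixels, so the score is close to 2/3.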

E2GC: Energy-efficient Group Convolution in Deep Neural Networks

Jun 26, 2020

Abstract:The number of groups ($g$) in group convolution (GConv) is selected to boost the predictive performance of deep neural networks (DNNs) in a compute- and parameter-efficient manner. However, we show that naive selection of $g$ in GConv creates an imbalance between the computational complexity and degree of data reuse, which leads to suboptimal energy efficiency in DNNs. We devise an optimum group size model, which enables a balance between computational cost and data movement cost, thereby optimizing the energy efficiency of DNNs. Based on the insights from this model, we propose an "energy-efficient group convolution" (E2GC) module where, unlike the previous implementations of GConv, the group size ($G$) remains constant. Further, to demonstrate the efficacy of the E2GC module, we incorporate this module in the design of MobileNet-V1 and ResNeXt-50 and perform experiments on two GPUs, P100 and P4000. We show that, at comparable computational complexity, DNNs with constant group size (E2GC) are more energy-efficient than DNNs with a fixed number of groups (F$g$GC). For example, on the P100 GPU, the energy efficiency of MobileNet-V1 and ResNeXt-50 is increased by 10.8% and 4.73%, respectively, when E2GC modules substitute the F$g$GC modules in both DNNs. Furthermore, through our extensive experimentation with the ImageNet-1K and Food-101 image classification datasets, we show that the E2GC module enables a trade-off between the generalization ability and representational power of a DNN. Thus, the predictive performance of DNNs can be optimized by selecting an appropriate $G$. The code and trained models are available at https://github.com/iithcandle/E2GC-release.

* Accepted as a conference paper in 2020 33rd International Conference on VLSI Design and 2020 19th International Conference on Embedded Systems (VLSID)
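The E2GC abstract contrasts a fixed number of groups ($g$) with a constant group size ($G$). The arithmetic below illustrates that difference with the standard weight count of a grouped convolution, k·k·(C_in/g)·C_out. It assumes $G$ means input channels per group, which the abstract does not spell out; the function names and the channel widths are hypothetical.

```python
def gconv_weights(c_in, c_out, kernel, groups):
    """Weight count of a grouped convolution (bias terms ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return kernel * kernel * (c_in // groups) * c_out


def groups_for_size(c_in, group_size):
    """Number of groups implied by a constant group size (assumed: channels per group)."""
    if c_in % group_size:
        raise ValueError("c_in must be divisible by group_size")
    return c_in // group_size


# Hypothetical layer widths, 3x3 kernels, equal input and output channels.
widths = [64, 128, 256]

# Fixed number of groups (g = 4): the group size grows with layer width.
fixed_g = [(c, c // 4, gconv_weights(c, c, 3, 4)) for c in widths]

# Constant group size (G = 16): the number of groups grows instead.
const_G = [(c, groups_for_size(c, 16), gconv_weights(c, c, 3, groups_for_size(c, 16)))
           for c in widths]
```

With a fixed $g$ the weights per layer scale quadratically with width, while with a constant $G$ they scale linearly, which is one way to see why the two choices trade computation and data reuse differently.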
