Sai Chandra Teja R

SPEEDNet: Salient Pyramidal Enhancement Encoder-Decoder Network for Colonoscopy Images

Dec 02, 2023

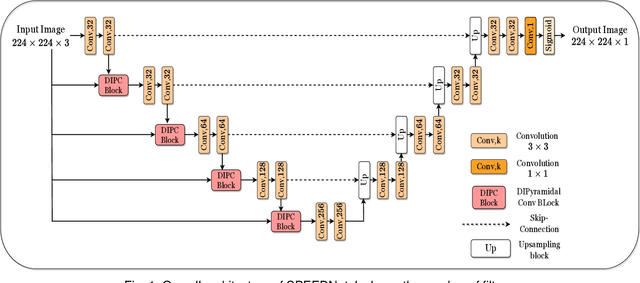

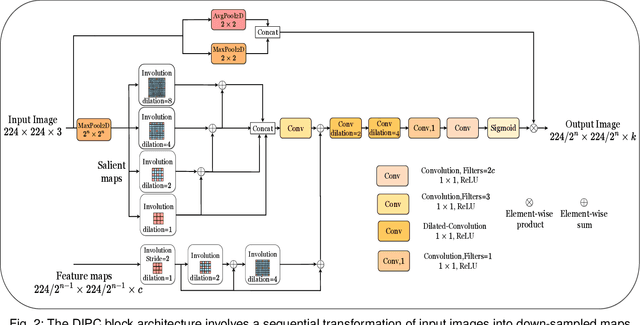

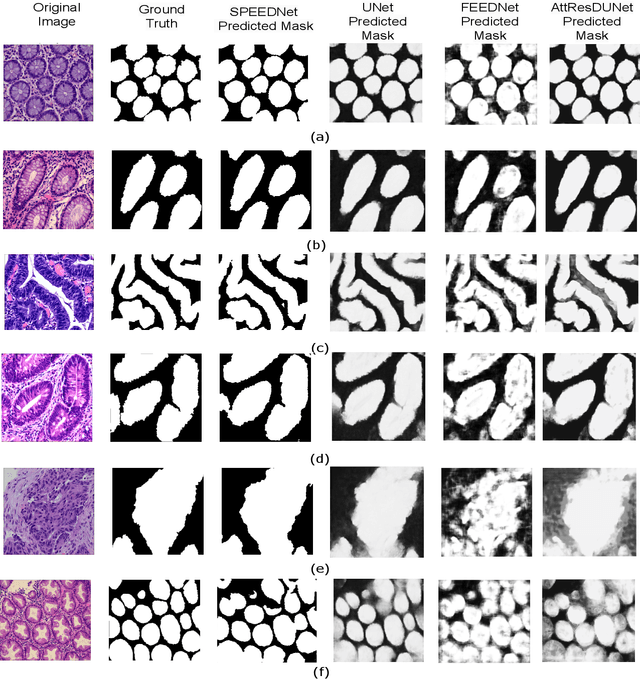

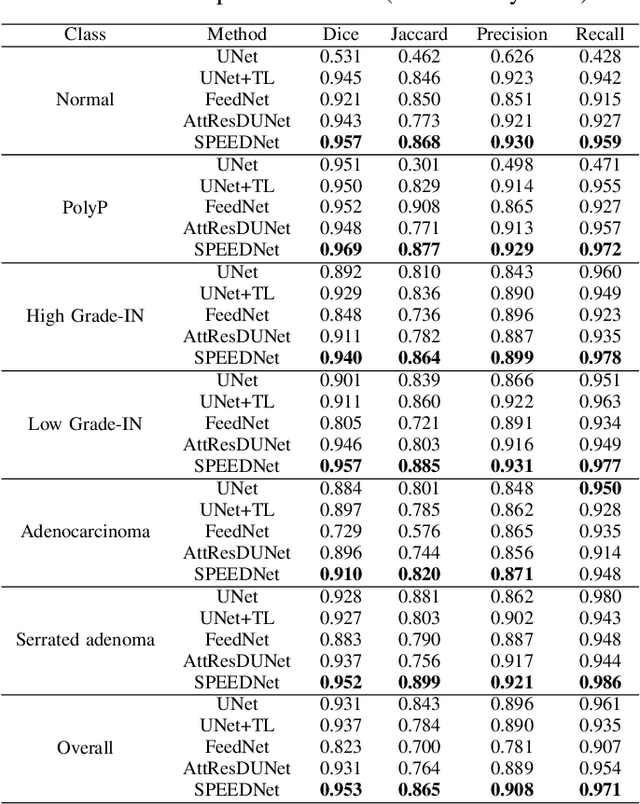

Abstract: Accurate identification and precise delineation of regions of significance, such as tumors or lesions, are pivotal goals in medical imaging analysis. This paper proposes SPEEDNet, a novel architecture for precisely segmenting lesions within colonoscopy images. SPEEDNet uses a novel block named Dilated-Involutional Pyramidal Convolution Fusion (DIPC). A DIPC block combines the dilated involution layers pairwise into a pyramidal structure to convert the feature maps into a compact space. This lowers the total number of parameters while improving the learning of representations across an optimal receptive field, thereby reducing the blurring effect. On the EBHISeg dataset, SPEEDNet outperforms three previous networks: UNet, FeedNet, and AttesResDUNet. Specifically, SPEEDNet attains an average dice score of 0.952 and a recall of 0.971. Qualitative results and ablation studies provide additional insights into the effectiveness of SPEEDNet. The model size of SPEEDNet is 9.81 MB, significantly smaller than that of UNet (22.84 MB), FeedNet (185.58 MB), and AttesResDUNet (140.09 MB).
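The dice score and recall quoted in the abstract are standard overlap metrics for binary segmentation masks. The sketch below shows how they are commonly computed from a predicted mask and a ground-truth mask. The function name `dice_and_recall` and the flat 0/1 list representation are assumptions made for illustration, not the authors' evaluation code.

```python
def dice_and_recall(pred, target):
    """Compute Dice score and recall for two flat binary masks (lists of 0/1).

    Illustrative helper only; not taken from the SPEEDNet code base.
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")

    # Count true positives, false positives and false negatives.
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)

    # Dice = 2*TP / (2*TP + FP + FN); define it as 1.0 when both masks are empty.
    denom = 2 * tp + fp + fn
    dice = 2 * tp / denom if denom else 1.0

    # Recall = TP / (TP + FN); define it as 1.0 when there is no lesion pixel.
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    return dice, recall


if __name__ == "__main__":
    predicted = [1, 1, 0, 0]
    ground_truth = [1, 0, 1, 0]
    print(dice_and_recall(predicted, ground_truth))
```

Dice penalizes both false positives and false negatives, while recall only penalizes missed lesion pixels, which is why a model can report a higher recall than dice score.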

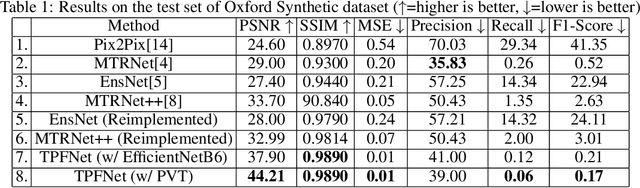

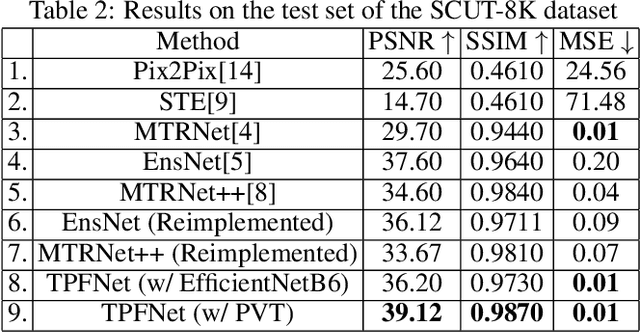

TPFNet: A Novel Text In-painting Transformer for Text Removal

Oct 27, 2022

Abstract: Text erasure from an image is helpful for various tasks such as image editing and privacy preservation. In this paper, we present TPFNet, a novel one-stage (end-to-end) network for text removal from images. Our network has two parts: feature synthesis and image generation. Since noise can be more effectively removed from low-resolution images, part 1 operates on low-resolution images. The output of part 1 is a low-resolution text-free image. Part 2 uses the features learned in part 1 to predict a high-resolution text-free image. In part 1, we use "pyramidal vision transformer" (PVT) as the encoder. Further, we use a novel multi-headed decoder that generates a high-pass filtered image and a segmentation map, in addition to a text-free image. The segmentation branch helps locate the text precisely, and the high-pass branch helps in learning the image structure. To precisely locate the text, TPFNet employs an adversarial loss that is conditional on the segmentation map rather than the input image. On the Oxford, SCUT, and SCUT-EnsText datasets, our network outperforms recently proposed networks on nearly all the metrics. For example, on the SCUT-EnsText dataset, TPFNet has a PSNR (higher is better) of 39.0 and text-detection precision (lower is better) of 21.1, compared to the best previous technique, which has a PSNR of 32.3 and precision of 53.2. The source code can be obtained from https://github.com/CandleLabAI/TPFNet
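PSNR, the image-quality metric cited above, is derived from the mean squared error between a reference image and a restored image. A minimal sketch follows, assuming 8-bit pixel values (peak of 255) stored as flat lists. The function name `psnr` and its parameters are illustrative and are not taken from the TPFNet repository.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two flat pixel sequences.

    Illustrative helper; assumes both inputs share the same length and scale.
    """
    if len(reference) != len(restored) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")

    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)

    # Identical images have zero error, so PSNR is unbounded.
    if mse == 0:
        return math.inf

    # PSNR = 10 * log10(MAX^2 / MSE)
    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    print(psnr([100, 100], [110, 90]))
```

Because PSNR is logarithmic, the gap between 32.3 and 39.0 reported in the abstract corresponds to a substantially lower mean squared error, not a proportional one.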

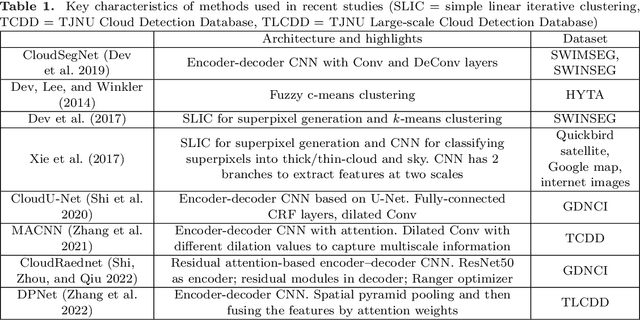

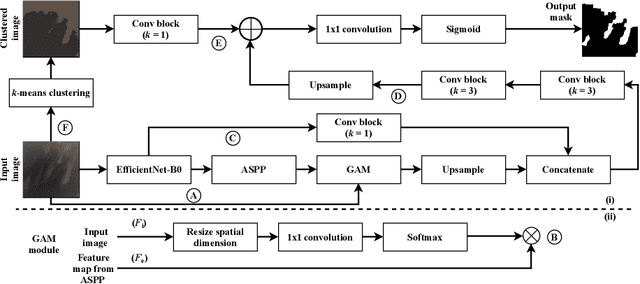

ACLNet: An Attention and Clustering-based Cloud Segmentation Network

Jul 13, 2022

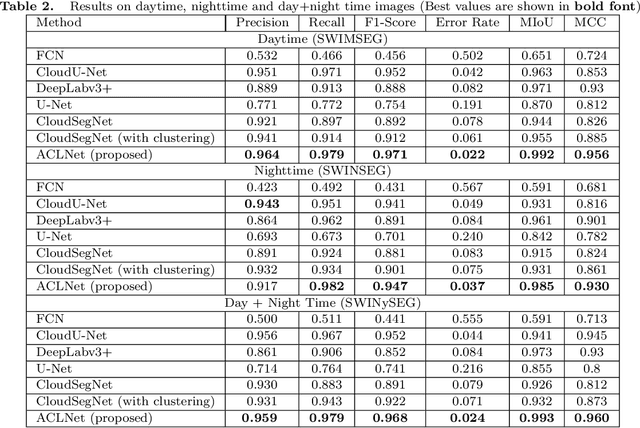

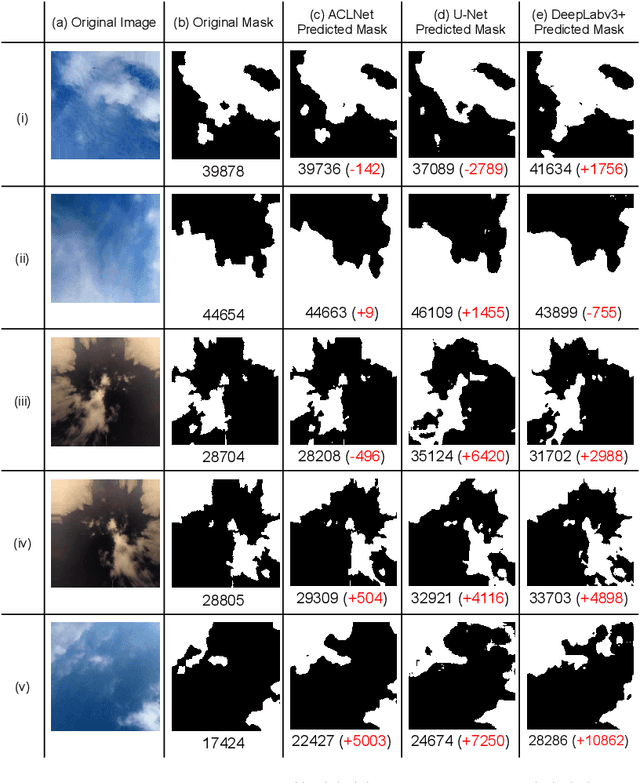

Abstract: We propose a novel deep learning model named ACLNet for cloud segmentation from ground images. ACLNet uses both a deep neural network and a machine learning (ML) algorithm to extract complementary features. Specifically, it uses EfficientNet-B0 as the backbone, "à trous spatial pyramid pooling" (ASPP) to learn at multiple receptive fields, and "global attention module" (GAM) to extract fine-grained details from the image. ACLNet also uses k-means clustering to extract cloud boundaries more precisely. ACLNet is effective for both daytime and nighttime images. It provides a lower error rate, higher recall and higher F1-score than state-of-the-art cloud segmentation models. The source code of ACLNet is available here: https://github.com/ckmvigil/ACLNet.

* 11 pages, 3 figures, 5 tables, Published in Remote Sensing Letters
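The ACLNet abstract mentions k-means clustering without describing how it is applied. As a generic illustration of the technique only, the sketch below runs plain k-means on a hypothetical scalar per-pixel feature (for example, a brightness value) to split pixels into groups. The function names, the deterministic initialization and the choice of feature are all assumptions and do not reflect the ACLNet implementation.

```python
def assign(values, centers):
    """Label each value with the index of its nearest center."""
    return [min(range(len(centers)), key=lambda c: abs(v - centers[c]))
            for v in values]


def kmeans_1d(values, k=2, iterations=20):
    """Plain k-means on scalar values (requires k >= 2).

    Centers are initialized evenly between min and max so the result is
    deterministic; this is an illustrative choice, not ACLNet's.
    """
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]

    for _ in range(iterations):
        labels = assign(values, centers)
        new_centers = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            # Keep the old center if a cluster ends up empty.
            new_centers.append(sum(members) / len(members) if members else centers[c])
        if new_centers == centers:  # converged
            break
        centers = new_centers

    # Return labels that match the final centers.
    return assign(values, centers), centers


if __name__ == "__main__":
    pixels = [0.1, 0.2, 0.15, 0.8, 0.9, 0.85]  # hypothetical feature values
    print(kmeans_1d(pixels))
```

In a segmentation setting, the resulting cluster labels could serve as an extra input channel or a coarse mask; which of these ACLNet does is not stated in the abstract.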

WaferSegClassNet -- A Light-weight Network for Classification and Segmentation of Semiconductor Wafer Defects

Jul 03, 2022

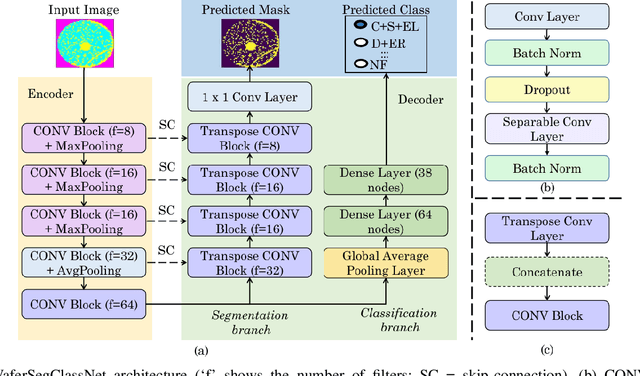

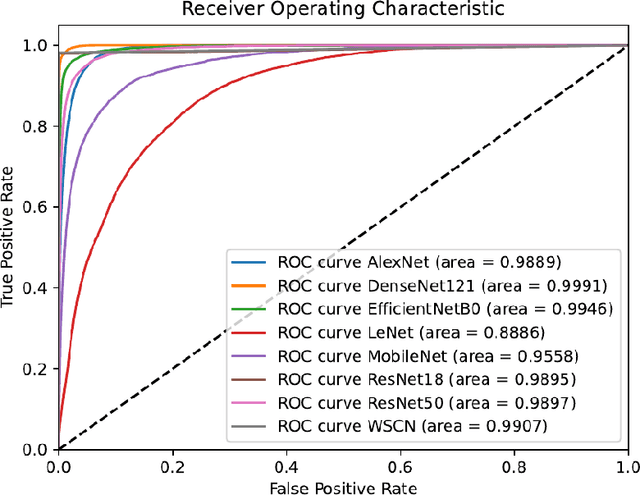

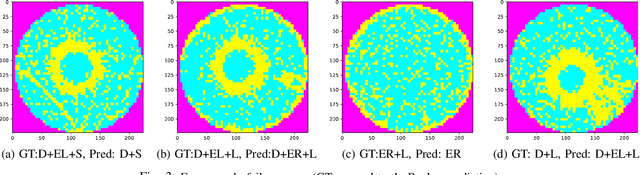

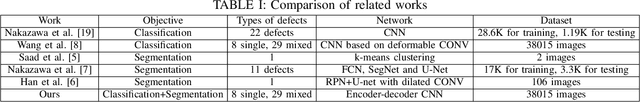

Abstract: As the integration density and design intricacy of semiconductor wafers increase, the magnitude and complexity of defects in them are also on the rise. Since the manual inspection of wafer defects is costly, an automated artificial intelligence (AI) based computer-vision approach is highly desired. The previous works on defect analysis have several limitations, such as low accuracy and the need for separate models for classification and segmentation. For analyzing mixed-type defects, some previous works require separately training one model for each defect type, which is non-scalable. In this paper, we present WaferSegClassNet (WSCN), a novel network based on encoder-decoder architecture. WSCN performs simultaneous classification and segmentation of both single and mixed-type wafer defects. WSCN uses a "shared encoder" for classification and segmentation, which allows training WSCN end-to-end. We use N-pair contrastive loss to first pretrain the encoder and then use BCE-Dice loss for segmentation and categorical cross-entropy loss for classification. The use of N-pair contrastive loss helps in better embedding representation in the latent dimension of wafer maps. WSCN has a model size of only 0.51 MB and performs only 0.2M FLOPS. Thus, it is much lighter than other state-of-the-art models. Also, it requires only 150 epochs for convergence, compared to 4,000 epochs needed by a previous work. We evaluate our model on the MixedWM38 dataset, which has 38,015 images. WSCN achieves an average classification accuracy of 98.2% and a dice coefficient of 0.9999. We are the first to show segmentation results on the MixedWM38 dataset. The source code can be obtained from https://github.com/ckmvigil/WaferSegClassNet.

* 11 pages, 2 figures, 7 tables, Published in Computers in Industry
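The BCE-Dice loss named in the WaferSegClassNet abstract is commonly formed by adding binary cross-entropy to one minus a soft Dice coefficient. The sketch below assumes an unweighted sum, a smoothing constant of 1.0 and flat lists of probabilities. These choices, and the function name `bce_dice_loss`, are assumptions for illustration, since the abstract does not specify the exact formulation.

```python
import math


def bce_dice_loss(probs, targets, eps=1e-7, smooth=1.0):
    """Binary cross-entropy plus soft Dice loss over flat per-pixel values.

    probs are predicted foreground probabilities in [0, 1]; targets are 0/1.
    The equal weighting of the two terms is an assumption.
    """
    if len(probs) != len(targets) or not probs:
        raise ValueError("inputs must be non-empty and of equal length")

    # Binary cross-entropy, with probabilities clipped to avoid log(0).
    bce = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)
        bce -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    bce /= len(probs)

    # Soft Dice coefficient computed on probabilities rather than hard labels.
    intersection = sum(p * t for p, t in zip(probs, targets))
    dice = (2.0 * intersection + smooth) / (sum(probs) + sum(targets) + smooth)

    return bce + (1.0 - dice)


if __name__ == "__main__":
    print(bce_dice_loss([0.9, 0.1, 0.8, 0.2], [1, 0, 1, 0]))
```

Combining the two terms is a common way to get per-pixel gradients from cross-entropy together with a region-level overlap signal from Dice, which helps when defect pixels are a small fraction of the wafer map.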
