Nandan Kumar Jha

Spectral Scaling Laws in Language Models: How Effectively Do Feed-Forward Networks Use Their Latent Space?

Oct 01, 2025

Abstract:As large language models (LLMs) scale, the question is not only how large they become, but how much of their capacity is effectively utilized. Existing scaling laws relate model size to loss, yet overlook how components exploit their latent space. We study feed-forward networks (FFNs) and recast width selection as a spectral utilization problem. Using a lightweight diagnostic suite -- Hard Rank (participation ratio), Soft Rank (Shannon rank), Spectral Concentration, and the composite Spectral Utilization Index (SUI) -- we quantify how many latent directions are meaningfully activated across LLaMA, GPT-2, and nGPT families. Our key finding is an asymmetric spectral scaling law: soft rank follows an almost perfect power law with FFN width, while hard rank grows only sublinearly and with high variance. This asymmetry suggests that widening FFNs mostly adds low-energy tail directions, while dominant-mode subspaces saturate early. Moreover, at larger widths, variance further collapses into a narrow subspace, leaving much of the latent space under-utilized. These results recast FFN width selection as a principled trade-off between tail capacity and dominant-mode capacity, offering concrete guidance for inference-efficient LLM design.
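
The two rank measures named in this abstract can be illustrated with a minimal sketch. It assumes the textbook definitions of participation ratio and Shannon (entropy-based) rank applied to a list of non-negative eigenvalues; the paper's exact normalization, and its Spectral Concentration and SUI formulas, are not given here and are not reproduced. The function names and the toy spectra are hypothetical.

```python
import math

def participation_ratio(eigenvalues):
    """Hard rank (assumed form): (sum of eigenvalues)^2 / sum of squared eigenvalues."""
    total = sum(eigenvalues)
    sum_sq = sum(v * v for v in eigenvalues)
    return (total * total) / sum_sq

def shannon_rank(eigenvalues):
    """Soft rank (assumed form): exp of the Shannon entropy of the normalized spectrum."""
    total = sum(eigenvalues)
    probs = [v / total for v in eigenvalues if v > 0]
    entropy = -sum(p * math.log(p) for p in probs)
    return math.exp(entropy)

# Toy spectra (illustrative only): one flat, one with a dominant mode and a weak tail.
flat = [1.0] * 8
peaked = [1.0] + [0.01] * 7

flat_hard, flat_soft = participation_ratio(flat), shannon_rank(flat)
peaked_hard, peaked_soft = participation_ratio(peaked), shannon_rank(peaked)
```

On the flat spectrum both measures equal the dimension. On the peaked spectrum the soft rank exceeds the hard rank, because the entropy-based measure gives more credit to low-energy tail directions.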

Entropy-Guided Attention for Private LLMs

Jan 07, 2025

Abstract:The pervasiveness of proprietary language models has raised critical privacy concerns, necessitating advancements in private inference (PI), where computations are performed directly on encrypted data without revealing users' sensitive information. While PI offers a promising solution, its practical deployment is hindered by substantial communication and latency overheads, primarily stemming from nonlinear operations. To address this, we introduce an information-theoretic framework to characterize the role of nonlinearities in decoder-only language models, laying a principled foundation for optimizing transformer architectures tailored to the demands of PI. By leveraging Shannon's entropy as a quantitative measure, we uncover the previously unexplored dual significance of nonlinearities: beyond ensuring training stability, they are crucial for maintaining attention head diversity. Specifically, we find that their removal triggers two critical failure modes: *entropy collapse* in deeper layers that destabilizes training, and *entropic overload* in earlier layers that leads to under-utilization of Multi-Head Attention's (MHA) representational capacity. We propose an entropy-guided attention mechanism paired with a novel entropy regularization technique to mitigate entropic overload. Additionally, we explore PI-friendly alternatives to layer normalization for preventing entropy collapse and stabilizing the training of LLMs with reduced nonlinearities. Our study bridges the gap between information theory and architectural design, establishing entropy dynamics as a principled guide for developing efficient PI architectures. The code and implementation are available at [entropy-guided-llm](https://github.com/Nandan91/entropy-guided-attention-llm).
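
As a rough illustration of the quantity this abstract relies on, the sketch below computes the Shannon entropy of a single attention row. It is not taken from the linked repository; the function names, the context length, and the two score vectors are assumptions chosen to show the two extremes the abstract names: near-zero entropy (collapse) and near-maximal entropy (overload).

```python
import math

def softmax(scores):
    """Numerically stable softmax over one row of attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def attention_entropy(scores):
    """Shannon entropy (in nats) of the attention distribution for one query."""
    probs = softmax(scores)
    return -sum(p * math.log(p) for p in probs if p > 0)

context = 8                      # hypothetical context length
max_entropy = math.log(context)  # entropy of a uniform attention row

# One score dominates: attention is nearly one-hot, entropy is close to 0.
collapsed = attention_entropy([50.0] + [0.0] * (context - 1))
# All scores equal: attention is uniform, entropy sits at its maximum.
overloaded = attention_entropy([0.0] * context)
```

Averaging this value per head and per layer would give one possible view of the entropy dynamics the abstract describes; how the authors aggregate it is not stated here.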

TruncFormer: Private LLM Inference Using Only Truncations

Dec 02, 2024

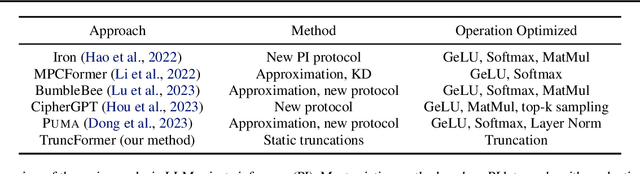

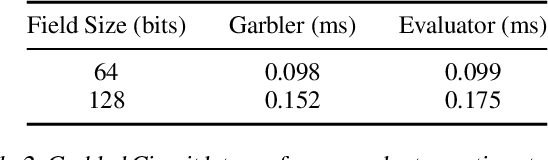

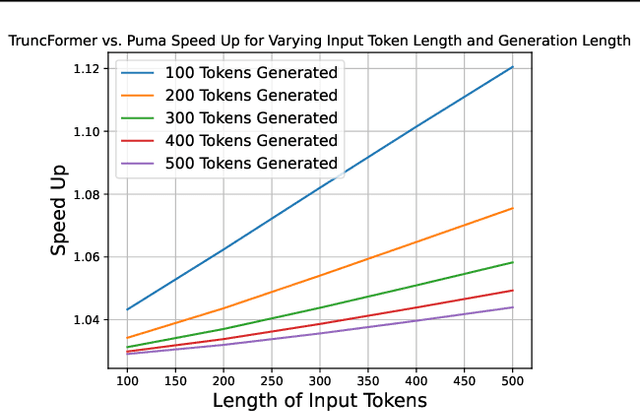

Abstract:Private inference (PI) plays an important role in guaranteeing the privacy of user data when interfacing with proprietary machine learning models such as LLMs. However, PI remains practically intractable due to the massive latency costs associated with nonlinear functions present in LLMs. Existing works have focused on improving the latency of specific LLM nonlinearities (such as Softmax or GeLU) via approximations. However, new types of nonlinearities are regularly introduced with new LLM architectures, and this has led to a constant game of catch-up where PI researchers attempt to optimize the newest nonlinear function. We introduce TruncFormer, a framework for taking any LLM and transforming it into a plaintext emulation of PI. Our framework leverages the fact that nonlinearities in LLMs are differentiable and can be accurately approximated with a sequence of additions, multiplications, and truncations. Further, we decouple the add/multiply and truncation operations, and statically determine where truncations should be inserted based on a given field size and input representation size. This leads to latency improvements over existing cryptographic protocols that enforce truncation after every multiplication operation. We open source our code for community use.
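
The idea of decoupling multiplication from truncation can be sketched in plaintext fixed-point arithmetic. This is not the TruncFormer code; the precision `FRAC_BITS`, the helper names, and the example vectors are assumptions. The sketch compares a dot product that truncates after every multiplication with one that accumulates at double scale and truncates once, which is only valid while the accumulator still fits in the available field.

```python
FRAC_BITS = 8  # hypothetical number of fractional bits in the fixed-point encoding

def encode(x):
    """Map a real number to a fixed-point integer with scale 2^FRAC_BITS."""
    return int(round(x * (1 << FRAC_BITS)))

def decode(v):
    """Map a fixed-point integer with scale 2^FRAC_BITS back to a float."""
    return v / (1 << FRAC_BITS)

def truncate(v):
    """Drop FRAC_BITS low bits, bringing a product from scale 2^(2f) back to 2^f."""
    return v >> FRAC_BITS

def dot_truncate_each(xs, ws):
    """Truncate after every multiplication; returns (result, truncation count)."""
    acc, truncations = 0, 0
    for x, w in zip(xs, ws):
        acc += truncate(x * w)
        truncations += 1
    return acc, truncations

def dot_truncate_once(xs, ws):
    """Accumulate products at double scale, then truncate a single time."""
    acc = sum(x * w for x, w in zip(xs, ws))
    return truncate(acc), 1

xs = [encode(v) for v in (1.5, -2.25, 0.75)]
ws = [encode(v) for v in (0.5, 1.0, -4.0)]
each_result, each_count = dot_truncate_each(xs, ws)
once_result, once_count = dot_truncate_once(xs, ws)
```

Both variants decode to the same value on this input, while the deferred variant performs one truncation instead of three. Deciding statically where such deferral is safe for a given field size is the part the abstract attributes to the framework.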

AERO: Softmax-Only LLMs for Efficient Private Inference

Oct 16, 2024

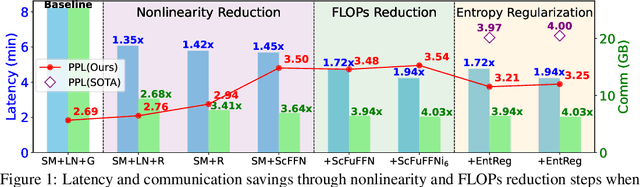

Abstract:The pervasiveness of proprietary language models has raised privacy concerns for users' sensitive data, emphasizing the need for private inference (PI), where inference is performed directly on encrypted inputs. However, current PI methods face prohibitively high communication and latency overheads, primarily due to nonlinear operations. In this paper, we present a comprehensive analysis to understand the role of nonlinearities in transformer-based decoder-only language models. We introduce AERO, a four-step architectural optimization framework that refines the existing LLM architecture for efficient PI by systematically removing nonlinearities such as LayerNorm and GELU and reducing FLOP counts. For the first time, we propose a Softmax-only architecture with significantly fewer FLOPs tailored for efficient PI. Furthermore, we devise a novel entropy regularization technique to improve the performance of Softmax-only models. AERO achieves up to 4.23$\times$ communication and 1.94$\times$ latency reduction. We validate the effectiveness of AERO by benchmarking it against the state-of-the-art.

ReLU's Revival: On the Entropic Overload in Normalization-Free Large Language Models

Oct 12, 2024

Abstract:LayerNorm is a critical component in modern large language models (LLMs) for stabilizing training and ensuring smooth optimization. However, it introduces significant challenges in mechanistic interpretability, outlier feature suppression, faithful signal propagation, and computational and communication complexity of private inference. This work explores desirable activation functions in normalization-free decoder-only LLMs. Contrary to the conventional preference for the GELU in transformer-based models, our empirical findings demonstrate an *opposite trend* -- ReLU significantly outperforms GELU in LayerNorm-free models, leading to an **8.2%** perplexity improvement. We discover a key issue with GELU, where early layers experience entropic overload, leading to the under-utilization of the representational capacity of attention heads. This highlights that smoother activations like GELU are *ill-suited* for LayerNorm-free architectures, whereas ReLU's geometrical properties -- specialization in input space and intra-class selectivity -- lead to improved learning dynamics and better information retention in the absence of LayerNorm. This study offers key insights for optimizing transformer architectures where LayerNorm introduces significant challenges.

Sisyphus: A Cautionary Tale of Using Low-Degree Polynomial Activations in Privacy-Preserving Deep Learning

Jul 26, 2021

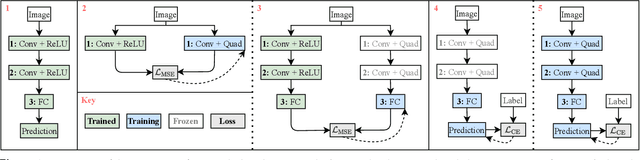

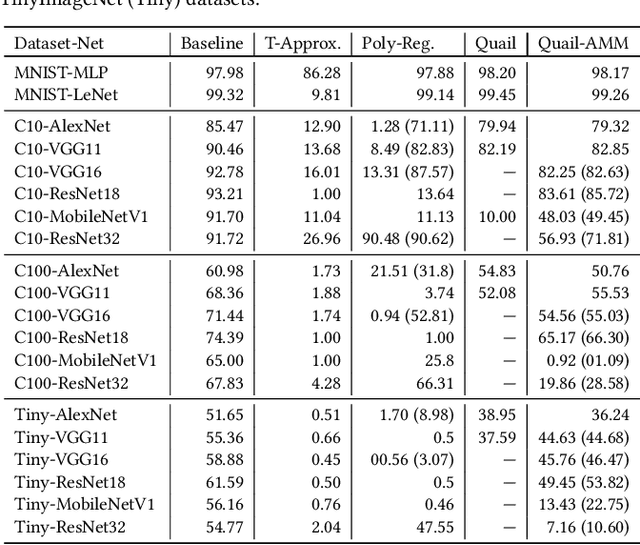

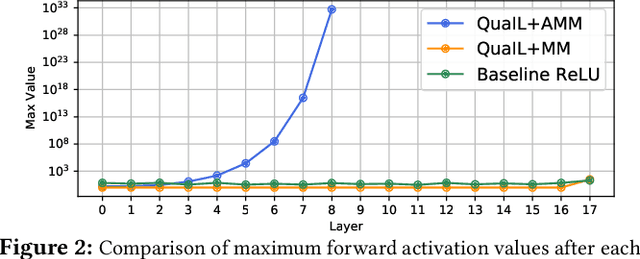

Abstract:Privacy concerns in client-server machine learning have given rise to private inference (PI), where neural inference occurs directly on encrypted inputs. PI protects clients' personal data and the server's intellectual property. A common practice in PI is to use garbled circuits to compute nonlinear functions privately, namely ReLUs. However, garbled circuits suffer from high storage, bandwidth, and latency costs. To mitigate these issues, PI-friendly polynomial activation functions have been employed to replace ReLU. In this work, we ask: Is it feasible to substitute all ReLUs with low-degree polynomial activation functions for building deep, privacy-friendly neural networks? We explore this question by analyzing the challenges of substituting ReLUs with polynomials, ranging from simple drop-and-replace solutions to novel, more involved replace-and-retrain strategies. We examine the limitations of each method and provide commentary on the use of polynomial activation functions for PI. We find all evaluated solutions suffer from the escaping activation problem: forward activation values inevitably begin to expand at an exponential rate away from stable regions of the polynomials, which leads to exploding values (NaNs) or poor approximations.
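
The escaping activation problem can be reproduced in a toy setting. The sketch below is an illustration, not an experiment from the paper: it applies a hypothetical degree-2 polynomial repeatedly, with identity weights between layers, and shows that an input inside the polynomial's stable region settles while a slightly larger input blows up within a dozen layers. The coefficients, depth, and starting values are all assumptions.

```python
def poly_act(x):
    """Hypothetical degree-2 stand-in for ReLU; coefficients are illustrative only."""
    return 0.125 * x * x + 0.5 * x + 0.25

def forward(x, depth):
    """Apply the activation `depth` times, assuming identity weights between layers."""
    for _ in range(depth):
        x = poly_act(x)
    return x

# This polynomial has an attracting fixed point near 0.586 and a repelling one near 3.414.
stable = forward(1.0, 12)   # starts below the repelling point and settles
escaped = forward(4.0, 12)  # starts above it and grows without bound
```

Real networks have learned weights and many channels, so the boundary of the stable region is not a single number, but the same mechanism applies: once an activation leaves the region, the squared term dominates and the value grows layer after layer.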

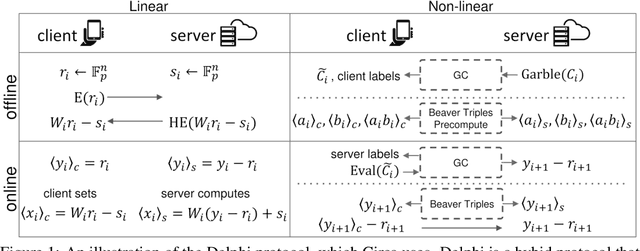

Circa: Stochastic ReLUs for Private Deep Learning

Jun 15, 2021

Abstract:The simultaneous rise of machine learning as a service and concerns over user privacy have increasingly motivated the need for private inference (PI). While recent work demonstrates PI is possible using cryptographic primitives, the computational overheads render it impractical. The community is largely unprepared to address these overheads, as the source of slowdown in PI stems from the ReLU operator whereas optimizations for plaintext inference focus on optimizing FLOPs. In this paper we re-think the ReLU computation and propose optimizations for PI tailored to properties of neural networks. Specifically, we reformulate ReLU as an approximate sign test and introduce a novel truncation method for the sign test that significantly reduces the cost per ReLU. These optimizations result in a specific type of stochastic ReLU. The key observation is that the stochastic fault behavior is well suited for the fault-tolerant properties of neural network inference. Thus, we provide significant savings without impacting accuracy. We collectively call the optimizations Circa and demonstrate improvements of up to 4.7x storage and 3x runtime over baseline implementations; we further show that Circa can be used on top of recent PI optimizations to obtain 1.8x additional speedup.
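
To make the phrase "stochastic ReLU" concrete, here is a toy sketch of a sign test on truncated additive secret shares. It is not the Circa protocol: the ring size `N_BITS`, the truncation width `K_BITS`, the two's-complement encoding, and all function names are assumptions, and the shares are reconstructed in the clear purely for illustration. Truncating each share independently can lose a carry, so the sign is occasionally wrong, but in this toy only for small positive inputs.

```python
import random

N_BITS = 32          # assumed ring Z_{2^32} with two's-complement encoding
K_BITS = 8           # assumed number of low bits dropped before the sign test
MOD = 1 << N_BITS

def share(x, rng):
    """Split x into two additive shares modulo 2^N_BITS."""
    a = rng.randrange(MOD)
    b = (x - a) % MOD
    return a, b

def truncated_sign_is_nonneg(a, b):
    """Sign test on independently truncated shares (a lost carry can shift the sum by 1)."""
    small_mod = 1 << (N_BITS - K_BITS)
    s = ((a >> K_BITS) + (b >> K_BITS)) % small_mod
    return s < (small_mod >> 1)

def stochastic_relu(x, rng):
    """ReLU whose sign decision comes from the truncated-share test above."""
    a, b = share(x, rng)
    return x if truncated_sign_is_nonneg(a, b) else 0

rng = random.Random(0)
# Inputs with magnitude at least 2^K_BITS, and all negative inputs, are handled exactly here.
exact = all(
    stochastic_relu(x, rng) == max(x, 0)
    for x in (256, 1000, 10**6, -1, -255, -256, -10**6)
    for _ in range(200)
)
# A tiny positive input is frequently zeroed out: this is the stochastic fault.
faults_on_small_input = sum(stochastic_relu(1, rng) == 0 for _ in range(200))
```

In this toy, faults are confined to inputs in the range 0 < x < 2^K_BITS, where the correct output is itself small, which is consistent with the abstract's observation that such faults suit the fault tolerance of neural network inference.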

DeepReDuce: ReLU Reduction for Fast Private Inference

Mar 02, 2021

Abstract:The recent rise of privacy concerns has led researchers to devise methods for private neural inference -- where inferences are made directly on encrypted data, never seeing inputs. The primary challenge facing private inference is that computing on encrypted data levies an impractically high latency penalty, stemming mostly from non-linear operators like ReLU. Enabling practical and private inference requires new optimization methods that minimize network ReLU counts while preserving accuracy. This paper proposes DeepReDuce: a set of optimizations for the judicious removal of ReLUs to reduce private inference latency. The key insight is that not all ReLUs contribute equally to accuracy. We leverage this insight to drop, or remove, ReLUs from classic networks to significantly reduce inference latency and maintain high accuracy. Given a target network, DeepReDuce outputs a Pareto frontier of networks that trade off the number of ReLUs and accuracy. Compared to the state-of-the-art for private inference, DeepReDuce improves accuracy and reduces ReLU count by up to 3.5% (iso-ReLU count) and 3.5$\times$ (iso-accuracy), respectively.

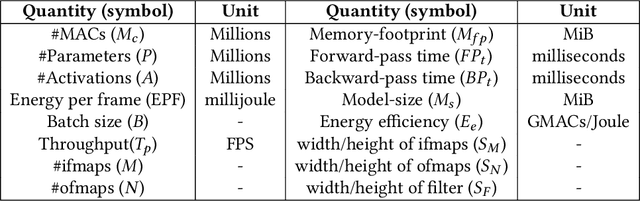

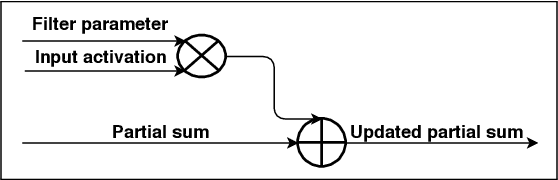

Modeling Data Reuse in Deep Neural Networks by Taking Data-Types into Cognizance

Aug 06, 2020

Abstract:In recent years, researchers have focused on reducing the model size and number of computations (measured as "multiply-accumulate" or MAC operations) of DNNs. The energy consumption of a DNN depends on both the number of MAC operations and the energy efficiency of each MAC operation. The former can be estimated at design time; however, the latter depends on the intricate data reuse patterns and underlying hardware architecture. Hence, estimating it at design time is challenging. This work shows that the conventional approach to estimate the data reuse, viz. arithmetic intensity, does not always correctly estimate the degree of data reuse in DNNs since it gives equal importance to all the data types. We propose a novel model, termed "data type aware weighted arithmetic intensity" ($DI$), which accounts for the unequal importance of different data types in DNNs. We evaluate our model on 25 state-of-the-art DNNs on two GPUs. We show that our model accurately models data-reuse for all possible data reuse patterns for different types of convolution and different types of layers. We show that our model is a better indicator of the energy efficiency of DNNs. We also show its generality using the central limit theorem.
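
For context, the conventional arithmetic intensity that this abstract argues against can be sketched as MACs divided by the total number of data elements touched, with weights, input activations, and output activations all counted equally. The sketch below assumes stride-1, same-padded convolutions and that each element is accessed once; the layer shapes are hypothetical. The weighted $DI$ model itself is not specified in the abstract and is not reproduced here.

```python
def conv_stats(h, w, c_in, c_out, k, depthwise=False):
    """MACs and element counts for a stride-1, same-padded convolution layer."""
    if depthwise:
        c_out = c_in                       # one k x k filter per input channel
        macs = h * w * c_in * k * k
        weights = c_in * k * k
    else:
        macs = h * w * c_in * c_out * k * k
        weights = c_in * c_out * k * k
    ifmap = h * w * c_in                   # input activation elements
    ofmap = h * w * c_out                  # output activation elements
    return macs, weights, ifmap, ofmap

def arithmetic_intensity(macs, weights, ifmap, ofmap):
    """Conventional form: every data type carries equal weight in the denominator."""
    return macs / (weights + ifmap + ofmap)

# Hypothetical 56x56 layer with 64 channels and 3x3 kernels.
standard_ai = arithmetic_intensity(*conv_stats(56, 56, 64, 64, 3))
depthwise_ai = arithmetic_intensity(*conv_stats(56, 56, 64, 64, 3, depthwise=True))
```

The depthwise layer has far lower intensity than the standard one under this metric. Because the denominator lumps all three data types together, the metric cannot distinguish layers whose traffic is dominated by weights from layers dominated by activations, which is the gap the abstract says its weighted model addresses.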

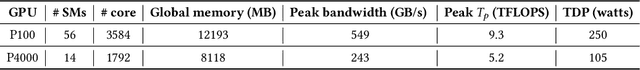

DeepPeep: Exploiting Design Ramifications to Decipher the Architecture of Compact DNNs

Jul 30, 2020

Abstract:The remarkable predictive performance of deep neural networks (DNNs) has led to their adoption in service domains of unprecedented scale and scope. However, the widespread adoption and growing commercialization of DNNs have underscored the importance of intellectual property (IP) protection. Devising techniques to ensure IP protection has become necessary due to the increasing trend of outsourcing DNN computations to untrusted accelerators in cloud-based services. The design methodologies and hyper-parameters of DNNs are crucial information, and leaking them may cause massive economic loss to the organization. Furthermore, knowledge of a DNN's architecture can increase the success probability of an adversarial attack in which an adversary perturbs the inputs and alters the prediction. In this work, we devise a two-stage attack methodology, "DeepPeep", which exploits the distinctive characteristics of design methodologies to reverse-engineer the architecture of building blocks in compact DNNs. We show the efficacy of "DeepPeep" on P100 and P4000 GPUs. Additionally, we propose intelligent design maneuvering strategies for thwarting IP theft through the DeepPeep attack and propose "Secure MobileNet-V1". Interestingly, compared to vanilla MobileNet-V1, Secure MobileNet-V1 provides a significant reduction in inference latency ($\approx$60%) and improvement in predictive performance ($\approx$2%) with very low memory and computation overheads.
