Steven D. Morad

Graph Convolutional Memory for Deep Reinforcement Learning

Jun 27, 2021

Abstract: Solving partially-observable Markov decision processes (POMDPs) is critical when applying deep reinforcement learning (DRL) to real-world robotics problems, where agents have an incomplete view of the world. We present graph convolutional memory (GCM) for solving POMDPs using deep reinforcement learning. Unlike recurrent neural networks (RNNs) or transformers, GCM embeds domain-specific priors into the memory recall process via a knowledge graph. By encapsulating priors in the graph, GCM adapts to specific tasks but remains applicable to any DRL task. Using graph convolutions, GCM extracts hierarchical graph features, analogous to image features in a convolutional neural network (CNN). We show GCM outperforms long short-term memory (LSTM), gated transformers for reinforcement learning (GTrXL), and differentiable neural computers (DNCs) on control, long-term non-sequential recall, and 3D navigation tasks while using significantly fewer parameters.
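
To make the idea of convolving over a graph of stored observations concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the temporal-edge prior, the sum aggregation, the `tanh` nonlinearity, and every name (`temporal_edges`, `graph_conv`, `memory`) are assumptions chosen for illustration only.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def temporal_edges(num_nodes):
    """Hypothetical prior: connect each observation to its predecessor."""
    return [(t - 1, t) for t in range(1, num_nodes)]


def graph_conv(features, edges, w_self, w_neigh):
    """One layer: h_i = tanh(W_self x_i + W_neigh * sum of neighbor x_j)."""
    dim = len(features[0])
    neighbor_sum = [[0.0] * dim for _ in features]
    for i, j in edges:  # edges are treated as undirected
        for k in range(dim):
            neighbor_sum[i][k] += features[j][k]
            neighbor_sum[j][k] += features[i][k]
    out = []
    for x, agg in zip(features, neighbor_sum):
        pre = [a + b for a, b in zip(matvec(w_self, x), matvec(w_neigh, agg))]
        out.append([math.tanh(v) for v in pre])
    return out


# Three stored observations, each a 2-D feature vector (illustrative values).
memory = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned weights
edges = temporal_edges(len(memory))

layer1 = graph_conv(memory, edges, identity, identity)
# A second layer lets each node see two hops away, giving hierarchical features.
layer2 = graph_conv(layer1, edges, identity, identity)
```

Swapping `temporal_edges` for a different edge rule (for example, linking observations that are spatially close) is how a task-specific prior would enter a sketch like this one.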

Embodied Visual Navigation with Automatic Curriculum Learning in Real Environments

Sep 11, 2020

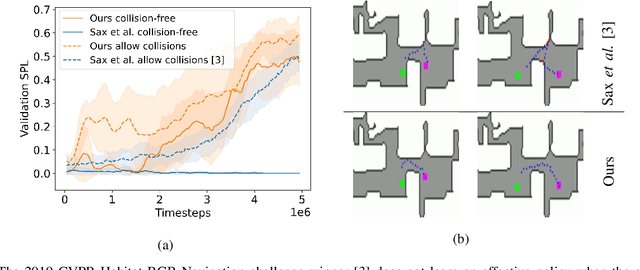

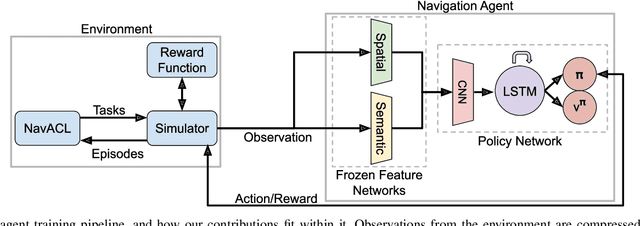

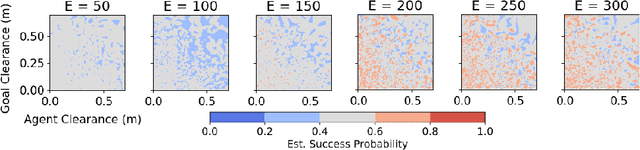

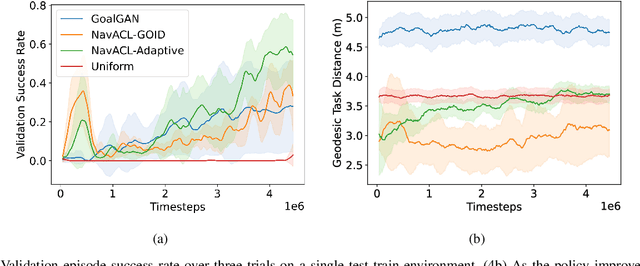

Abstract: We present NavACL, a method of automatic curriculum learning tailored to the navigation task. NavACL is simple to train and efficiently selects relevant tasks using geometric features. In our experiments, deep reinforcement learning agents trained using NavACL in collision-free environments significantly outperform state-of-the-art agents trained with uniform sampling -- the current standard. Furthermore, our agents are able to navigate through unknown cluttered indoor environments to semantically-specified targets using only RGB images. Collision avoidance policies and frozen feature networks support transfer to unseen real-world environments without any modification or retraining. We evaluate our policies in simulation, and in the real world on a ground robot and a quadrotor drone. Videos of real-world results are available in the supplementary material.

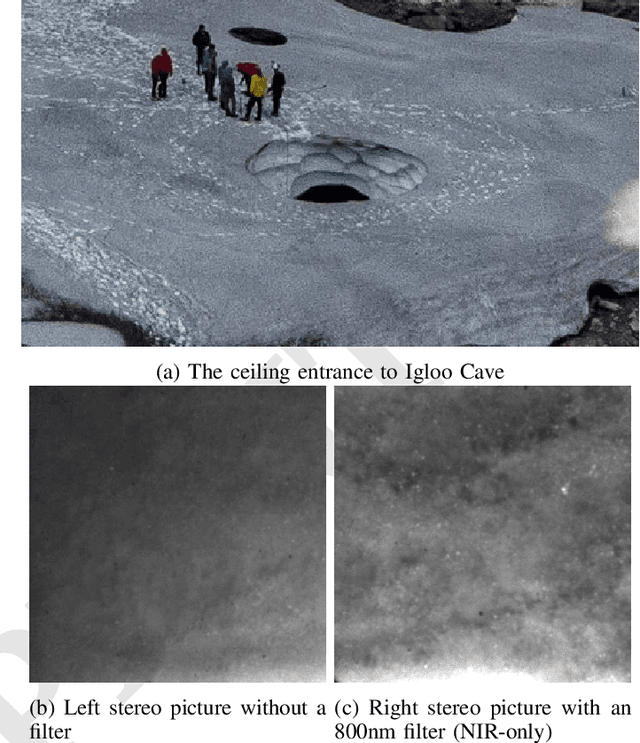

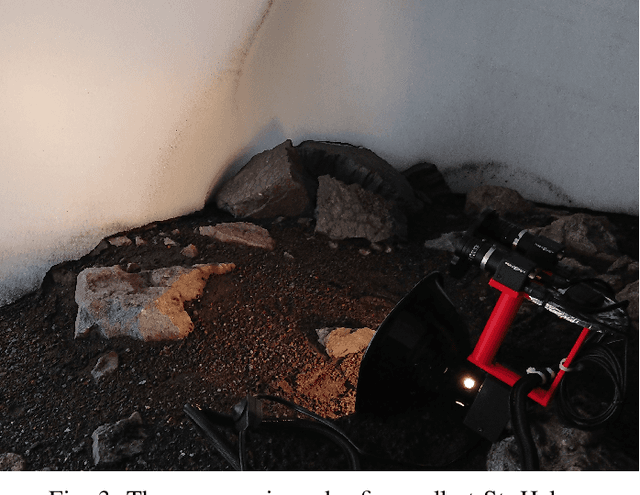

Improving Visual Feature Extraction in Glacial Environments

Aug 27, 2019

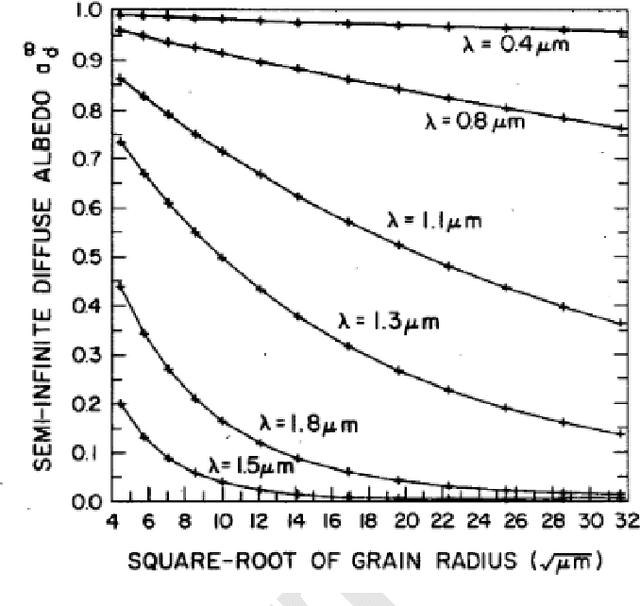

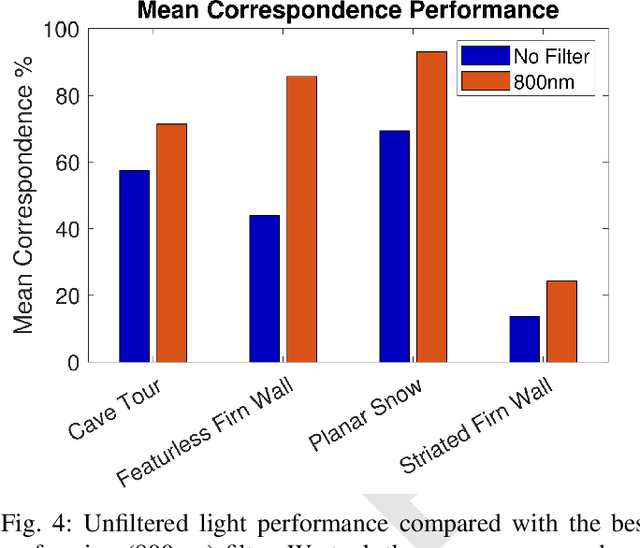

Abstract: Glacial science could benefit tremendously from autonomous robots, but previous glacial robots have had perception issues in these colorless and featureless environments, specifically with visual feature extraction. Glaciologists use near-infrared imagery to reveal the underlying heterogeneous spatial structure of snow and ice, and we theorize that this hidden near-infrared structure could produce more and higher-quality features than are available in visible light. We took a custom camera rig to Igloo Cave at Mt. St. Helens to test our theory. The camera rig contains two identical machine vision cameras, one of which was outfitted with multiple filters to see only near-infrared light. We extracted features from short video clips taken inside the cave using three popular feature extractors (FAST, SIFT, and SURF). We quantified the number of features and their quality for visual navigation using feature correspondence and the epipolar constraint. Our results indicate that near-infrared imagery produces more features, and that these tend to be of higher quality than those from visible-light imagery.
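
As an illustration of how the epipolar constraint can score feature correspondences, the sketch below computes the algebraic residual |x2^T F x1| for each matched pair and reports the fraction under a threshold. The abstract does not give the exact metric, so this is an assumption: the fundamental matrix `F` for a purely horizontal camera offset, the pixel threshold, the sample matches, and the function names are all hypothetical.

```python
def epipolar_residual(F, p1, p2):
    """Return |x2^T F x1| for pixel points p1, p2 in homogeneous form (scale 1)."""
    x1 = (p1[0], p1[1], 1.0)
    x2 = (p2[0], p2[1], 1.0)
    Fx1 = [sum(F[r][c] * x1[c] for c in range(3)) for r in range(3)]
    return abs(sum(x2[r] * Fx1[r] for r in range(3)))


def inlier_ratio(F, matches, threshold):
    """Fraction of matches whose epipolar residual is below the threshold."""
    if not matches:
        return 0.0
    inliers = sum(1 for p1, p2 in matches if epipolar_residual(F, p1, p2) < threshold)
    return inliers / len(matches)


# Assumed geometry: a purely horizontal offset between views, so F is the
# skew-symmetric matrix of (1, 0, 0) and the residual reduces to |v1 - v2|.
F = [[0.0, 0.0, 0.0],
     [0.0, 0.0, -1.0],
     [0.0, 1.0, 0.0]]

# Hypothetical matched keypoints: (point in view 1, point in view 2).
matches = [
    ((10.0, 20.0), (14.0, 20.0)),   # same row: residual 0
    ((30.0, 40.0), (33.0, 40.5)),   # half a pixel off
    ((50.0, 60.0), (52.0, 75.0)),   # 15 pixels off: likely a bad match
]

ratio = inlier_ratio(F, matches, threshold=1.0)
```

A higher inlier ratio suggests that more of the extracted features are geometrically consistent between views. The algebraic residual used here is a simplification; a geometric distance such as point-to-epipolar-line distance is a common alternative.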
