Shoya Higa

Improving Visual Feature Extraction in Glacial Environments

Aug 27, 2019

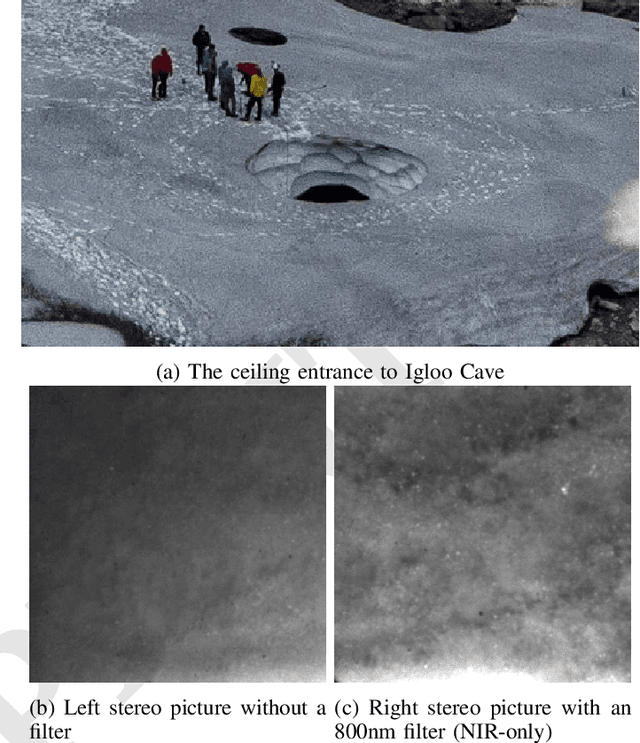

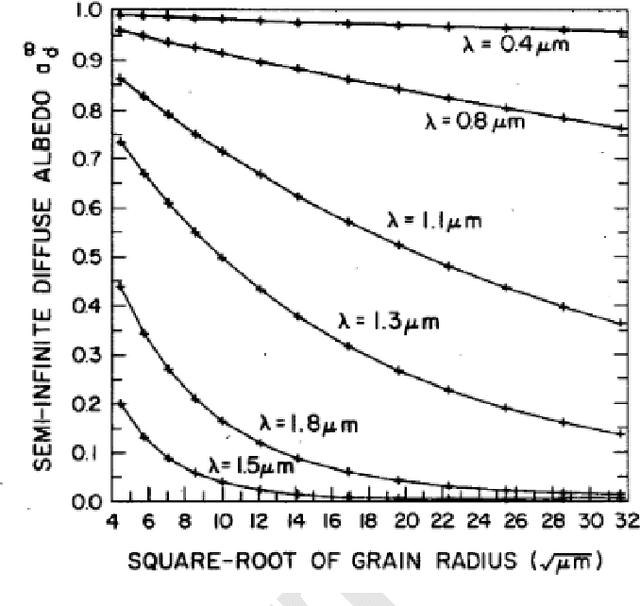

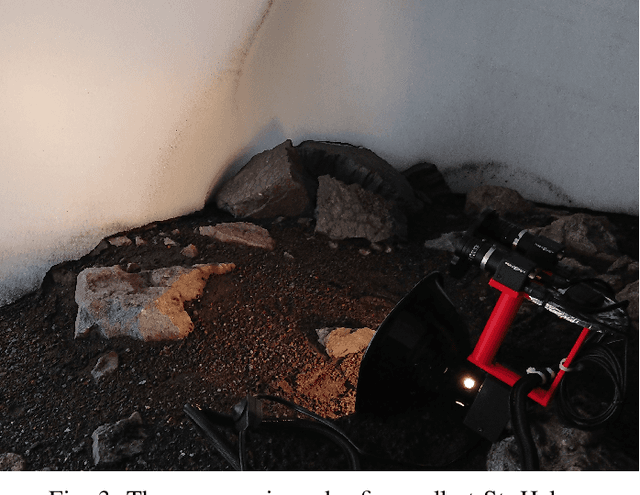

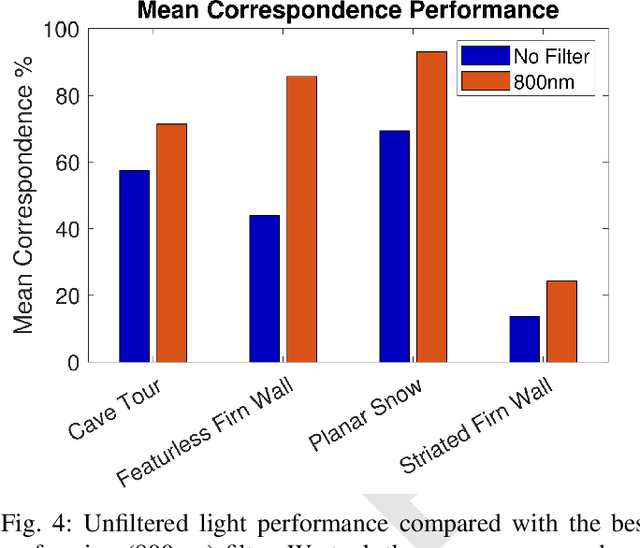

Abstract: Glacial science could benefit tremendously from autonomous robots, but previous glacial robots have struggled with perception in these colorless, featureless environments, specifically with visual feature extraction. Glaciologists use near-infrared imagery to reveal the underlying heterogeneous spatial structure of snow and ice, and we theorize that this hidden near-infrared structure could yield more features, and higher-quality ones, than are available in visible light. To test this theory, we took a custom camera rig to Igloo Cave at Mt. St. Helens. The rig contains two identical machine vision cameras, one of which was outfitted with multiple filters so that it sees only near-infrared light. We extracted features from short video clips taken inside the cave using three popular feature extractors (FAST, SIFT, and SURF). We quantified the number of features and their quality for visual navigation using feature correspondence and the epipolar constraint. Our results indicate that near-infrared imagery produces more features than visible-light imagery, and that those features tend to be of higher quality.
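
The abstract does not say how the epipolar constraint was turned into a quality score, so the following is only a minimal sketch of one plausible approach, not the author's method. It assumes matched feature locations from two frames are already available as pixel coordinates and that a fundamental matrix `F` relating the frames is known or has been estimated. The function names `epipolar_residuals` and `inlier_fraction` and the tolerance `tol` are hypothetical, introduced here for illustration.

```python
import numpy as np

def epipolar_residuals(F, pts1, pts2):
    """Algebraic epipolar residual |x2^T F x1| for each correspondence.

    F    : 3x3 fundamental matrix (assumed given; not from the paper)
    pts1 : Nx2 pixel coordinates in the first frame
    pts2 : Nx2 pixel coordinates of the matched features in the second frame
    """
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    ones = np.ones((pts1.shape[0], 1))
    x1 = np.hstack([pts1, ones])  # homogeneous coordinates, Nx3
    x2 = np.hstack([pts2, ones])
    # For each row i, compute x2_i^T F x1_i.
    return np.abs(np.einsum("ij,jk,ik->i", x2, F, x1))

def inlier_fraction(F, pts1, pts2, tol=1e-3):
    """Fraction of matches whose residual is below tol (hypothetical threshold)."""
    return float(np.mean(epipolar_residuals(F, pts1, pts2) < tol))

# Synthetic example: a pure sideways camera translation with identity
# intrinsics, for which correct matches keep the same image row.
F = np.array([[0.0, 0.0, 0.0],
              [0.0, 0.0, -1.0],
              [0.0, 1.0, 0.0]])
pts1 = [[10, 20], [30, 40], [50, 60]]
pts2 = [[12, 20], [33, 40], [50, 75]]  # the last match violates the constraint
residuals = epipolar_residuals(F, pts1, pts2)
fraction = inlier_fraction(F, pts1, pts2)
```

Under these assumptions, a higher inlier fraction suggests that more of the extracted features were matched consistently with the camera geometry. The algebraic residual is not a pixel distance, so a real evaluation would more likely threshold a geometric error such as the point-to-epipolar-line distance.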
