Stanislav Panev

Adapting Vehicle Detectors for Aerial Imagery to Unseen Domains with Weak Supervision

Jul 28, 2025

Abstract: Detecting vehicles in aerial imagery is a critical task with applications in traffic monitoring, urban planning, and defense intelligence. Deep learning methods have provided state-of-the-art (SOTA) results for this application. However, a significant challenge arises when models trained on data from one geographic region fail to generalize effectively to other areas. Variability in factors such as environmental conditions, urban layouts, road networks, vehicle types, and image acquisition parameters (e.g., resolution, lighting, and angle) leads to domain shifts that degrade model performance. This paper proposes a novel method that uses generative AI to synthesize high-quality aerial images and their labels, improving detector training through data augmentation. Our key contribution is the development of a multi-stage, multi-modal knowledge transfer framework utilizing fine-tuned latent diffusion models (LDMs) to mitigate the distribution gap between the source and target environments. Extensive experiments across diverse aerial imagery domains show consistent performance improvements in AP50 over supervised learning on source domain data, weakly supervised adaptation methods, unsupervised domain adaptation methods, and open-set object detectors by 4-23%, 6-10%, 7-40%, and more than 50%, respectively. Furthermore, we introduce two newly annotated aerial datasets from New Zealand and Utah to support further research in this field. Project page is available at: https://humansensinglab.github.io/AGenDA
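The abstract reports its gains in AP50, that is, average precision where a detection counts as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5. The following is a minimal sketch of that metric for a single class and a single image, written for illustration only. The function names `iou` and `average_precision_50` are hypothetical, and the non-interpolated averaging used here is an assumption; the abstract does not state which evaluation protocol the paper follows.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision_50(detections, ground_truth):
    """Non-interpolated AP at IoU >= 0.5 (an assumed, simplified protocol).

    detections: list of (score, box); ground_truth: list of boxes.
    """
    if not ground_truth:
        return 0.0
    matched = set()   # indices of ground-truth boxes already claimed
    tp_flags = []     # one flag per detection, in descending score order
    for _score, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt_box in enumerate(ground_truth):
            if idx in matched:
                continue
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= 0.5:
            matched.add(best_idx)
            tp_flags.append(True)
        else:
            tp_flags.append(False)
    # Sum the precision at the rank of each true positive, divide by #GT.
    ap, true_positives = 0.0, 0
    for rank, is_tp in enumerate(tp_flags, start=1):
        if is_tp:
            true_positives += 1
            ap += true_positives / rank
    return ap / len(ground_truth)


ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [
    (0.9, (0, 0, 10, 10)),    # exact match
    (0.8, (50, 50, 60, 60)),  # false positive
    (0.7, (20, 20, 30, 30)),  # exact match
]
ap50 = average_precision_50(detections, ground_truth)
```

Benchmark toolkits typically use interpolated precision and aggregate over a whole dataset, so their numbers will differ from this simplified version.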

Texture- and Shape-based Adversarial Attacks for Vehicle Detection in Synthetic Overhead Imagery

Dec 20, 2024

Abstract: Detecting vehicles in aerial images can be very challenging due to complex backgrounds, low resolution, shadows, and occlusions. Despite the effectiveness of SOTA detectors such as YOLO, they remain vulnerable to adversarial attacks (AAs), which compromises their reliability. Traditional AA strategies often overlook the practical constraints of physical implementation, focusing solely on attack performance. Our work addresses this issue by proposing practical implementation constraints for AAs in texture and/or shape. These constraints include pixelation, masking, limiting the color palette of the textures, and constraining the shape modifications. We evaluated the proposed constraints through extensive experiments using three widely used object detector architectures, and compared them to previous works. The results demonstrate the effectiveness of our solutions and reveal a trade-off between practicality and performance. Additionally, we introduce a labeled dataset of overhead images featuring vehicles of various categories. We will make the code/dataset public upon paper acceptance.
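Two of the constraints named above, pixelation and a limited color palette, can be illustrated with a short sketch. This is not the paper's implementation: the abstract does not say how the constraints enter the attack optimization, so the code below simply applies them as post-processing to an RGB texture stored as nested lists. The helper names `pixelate` and `limit_palette` are hypothetical.

```python
def pixelate(texture, block):
    """Replace every block x block tile of an RGB texture with its mean color."""
    height, width = len(texture), len(texture[0])
    out = [[None] * width for _ in range(height)]
    for y0 in range(0, height, block):
        for x0 in range(0, width, block):
            ys = range(y0, min(y0 + block, height))
            xs = range(x0, min(x0 + block, width))
            tile = [texture[y][x] for y in ys for x in xs]
            mean = tuple(sum(p[c] for p in tile) / len(tile) for c in range(3))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


def limit_palette(texture, palette):
    """Snap every pixel to the nearest palette color (squared RGB distance)."""
    def nearest(pixel):
        return min(
            palette,
            key=lambda color: sum((a - b) ** 2 for a, b in zip(pixel, color)),
        )
    return [[nearest(pixel) for pixel in row] for row in texture]


# A 2 x 4 texture: a dark region on the left, a bright region on the right.
texture = [
    [(0, 0, 0), (20, 20, 20), (250, 250, 250), (255, 255, 255)],
    [(10, 10, 10), (10, 10, 10), (240, 240, 240), (255, 255, 255)],
]
coarse = pixelate(texture, block=2)
printable = limit_palette(coarse, palette=[(0, 0, 0), (255, 255, 255)])
```

Coarser blocks and smaller palettes make a texture easier to reproduce physically but leave the attack fewer degrees of freedom, which is one way to read the trade-off between practicality and performance that the abstract reports.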

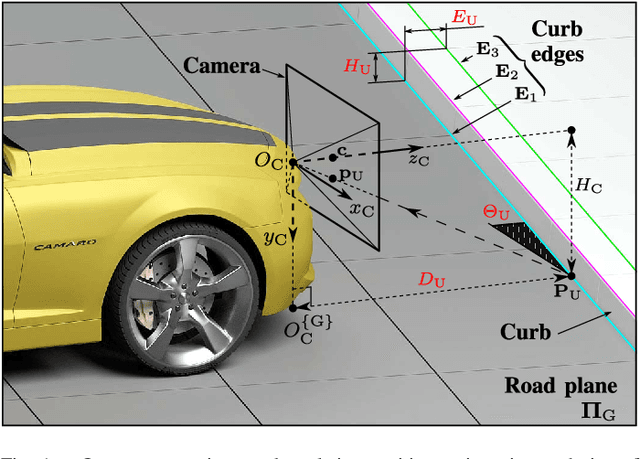

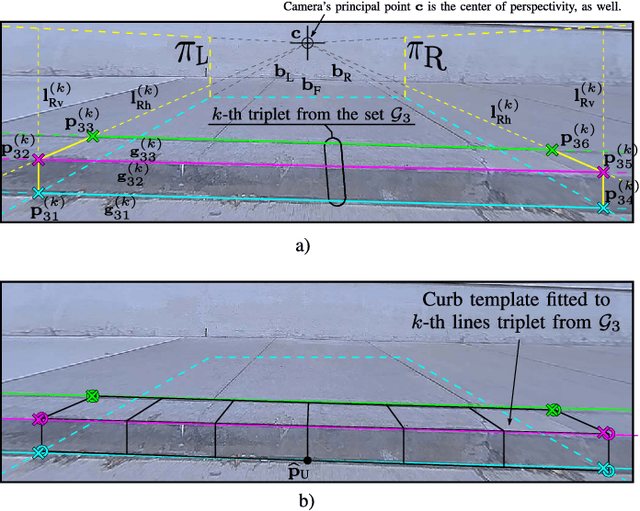

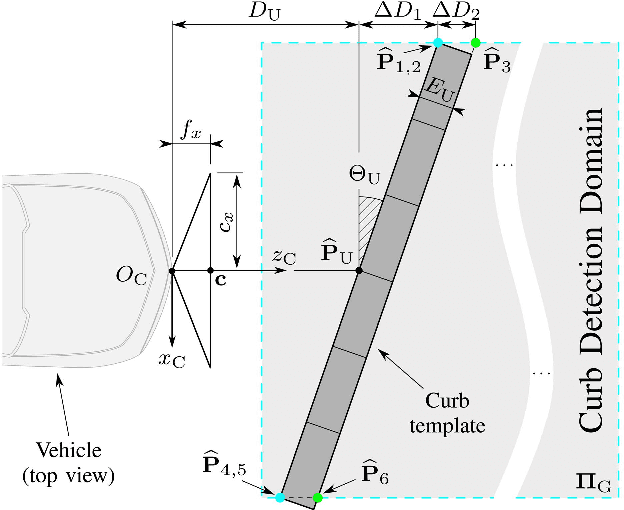

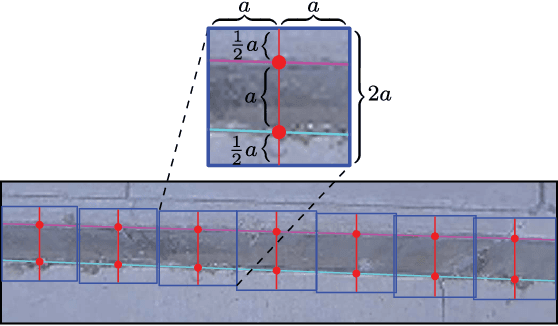

Road Curb Detection and Localization with Monocular Forward-view Vehicle Camera

Feb 28, 2020

Abstract: We propose a robust method for estimating road curb 3D parameters (size, location, orientation) using a calibrated monocular camera equipped with a fisheye lens. Automatic curb detection and localization is particularly important in the context of Advanced Driver Assistance Systems (ADAS), e.g., to prevent possible collision and damage to the vehicle's bumper during perpendicular and diagonal parking maneuvers. Combining 3D geometric reasoning with advanced vision-based detection methods, our approach is able to estimate the vehicle-to-curb distance in real time with a mean accuracy of more than 90%, as well as the curb's orientation, height, and depth. Our approach consists of two distinct components: curb detection in each individual video frame and temporal analysis. The first part comprises curb edge extraction and parametrized 3D curb template fitting. Using a few assumptions regarding the real-world geometry, we can thus retrieve the curb's height and its position relative to the moving vehicle on which the camera is mounted. A Support Vector Machine (SVM) classifier fed with Histograms of Oriented Gradients (HOG) is used for appearance-based outlier filtering. In the second part, the detected curb regions are tracked in the temporal domain, so as to perform a second pass of false-positive rejection. We have validated our approach on a newly collected database of 11 videos recorded under different conditions. We have used point-wise LIDAR measurements and exhaustive manual labels as ground truth.

* 17 pages, 21 figures, IEEE Transactions on Intelligent Transportation Systems

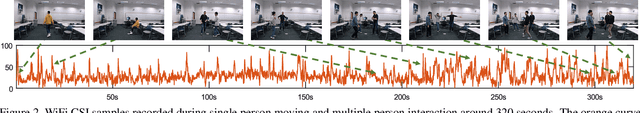

Can WiFi Estimate Person Pose?

Apr 02, 2019

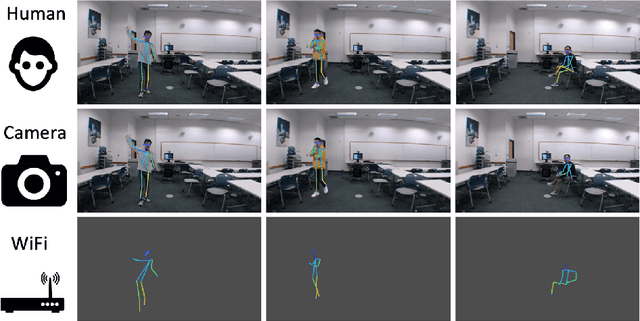

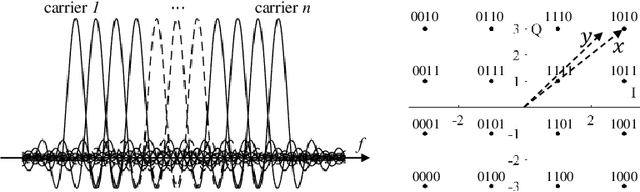

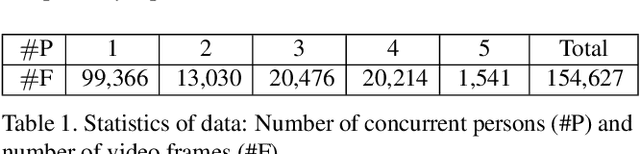

Abstract: WiFi human sensing has achieved great progress in indoor localization, activity classification, etc. Retracing the development of this work, we have a natural question: can WiFi devices work like cameras for vision applications? In this paper, we try to answer this question by exploring the ability of WiFi to estimate single-person pose. We use a 3-antenna WiFi sender and a 3-antenna receiver to generate WiFi data. Meanwhile, we use a synchronized camera to capture person videos for corresponding keypoint annotations. We further propose a fully convolutional network (FCN), termed WiSPPN, to estimate single-person pose from the collected data and annotations. Evaluation on over 80k images (16 sites and 8 persons) answers this question positively. Code has been made publicly available at https://github.com/geekfeiw/WiSPPN.

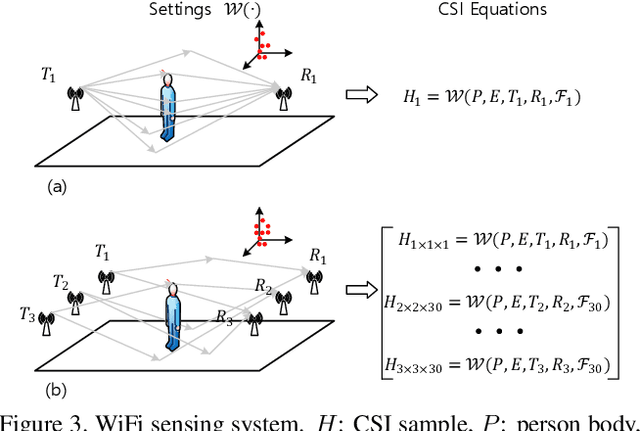

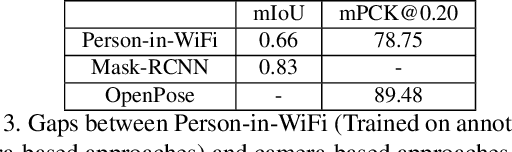

Person-in-WiFi: Fine-grained Person Perception using WiFi

Mar 30, 2019

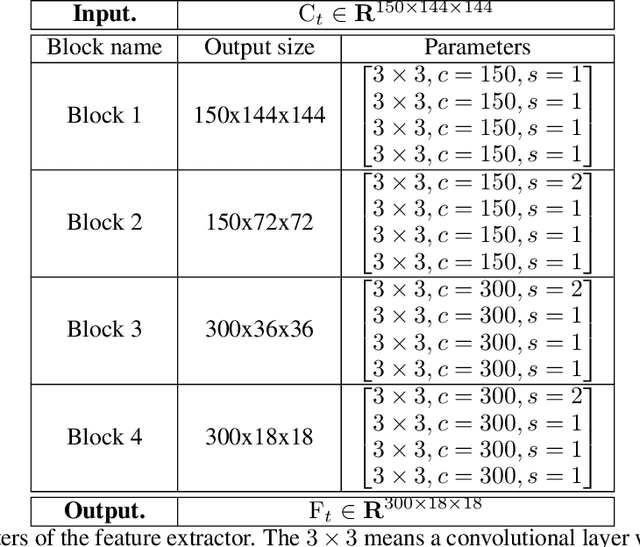

Abstract: Fine-grained person perception such as body segmentation and pose estimation has been achieved with many 2D and 3D sensors such as RGB/depth cameras, radars (e.g., RF-Pose) and LiDARs. These sensors capture 2D pixels or 3D point clouds of human bodies with high spatial resolution, such that existing Convolutional Neural Networks can be directly applied for perception. In this paper, we take one step forward to show that fine-grained person perception is possible even with 1D sensors: WiFi antennas. To our knowledge, this is the first work to perceive persons with pervasive WiFi devices, which are cheaper and more power-efficient than radars and LiDARs, invariant to illumination, and raise fewer privacy concerns than cameras. We used two sets of off-the-shelf WiFi antennas to acquire signals, i.e., one transmitter set and one receiver set. Each set contains three antennas lined up as in a regular household WiFi router. The WiFi signal generated by a transmitter antenna penetrates through and reflects off human bodies, furniture, and walls, and then superposes at a receiver antenna as a 1D signal sample (instead of 2D pixels or 3D point clouds). We developed a deep learning approach that uses annotations on 2D images, takes the received 1D WiFi signals as inputs, and performs body segmentation and pose estimation in an end-to-end manner. Experimental results on over 100,000 frames under 16 indoor scenes demonstrate that Person-in-WiFi achieved person perception comparable to approaches using 2D images.
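The superposition described above can be sketched with a textbook narrowband multipath model, in which each propagation path contributes its amplitude rotated by a phase that depends on the path delay. This is an illustrative assumption rather than the paper's signal model, and the function name `received_sample`, the carrier frequency, and the path values are all hypothetical.

```python
import cmath
import math


def received_sample(paths, carrier_hz):
    """Complex sample at one receiver antenna for one carrier frequency.

    paths: list of (amplitude, delay_seconds), one entry per propagation
    path (direct, reflected off a body, reflected off a wall, ...).
    """
    return sum(
        amplitude * cmath.exp(-2j * math.pi * carrier_hz * delay)
        for amplitude, delay in paths
    )


carrier_hz = 2.4e9  # assumed 2.4 GHz carrier, chosen only for illustration

# A single direct path with zero delay arrives unchanged.
direct_only = received_sample([(1.0, 0.0)], carrier_hz)

# A second path delayed by half a carrier period arrives in antiphase,
# so two equal-amplitude paths cancel at the receiver.
cancelled = received_sample([(1.0, 0.0), (1.0, 0.5 / carrier_hz)], carrier_hz)

# Lengthening the reflected path, as when a person moves, changes the sample.
shifted = received_sample([(1.0, 0.0), (0.5, 0.25 / carrier_hz)], carrier_hz)
```

Because every sample mixes all paths into one complex number, the spatial layout of the scene is not directly visible in the signal, which is why the abstract pairs the WiFi input with annotations taken from 2D images.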
