Stéphane Gentric

Mitigating Gender Bias in Face Recognition Using the von Mises-Fisher Mixture Model

Oct 24, 2022

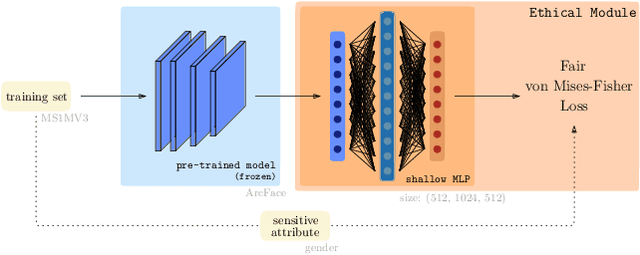

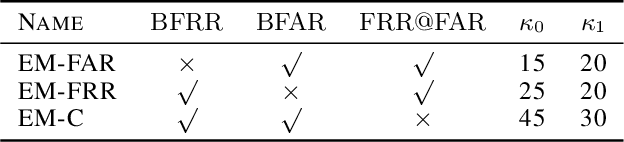

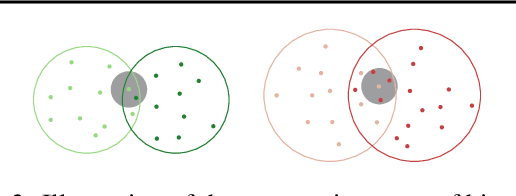

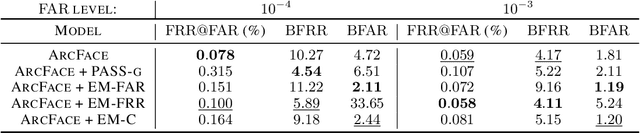

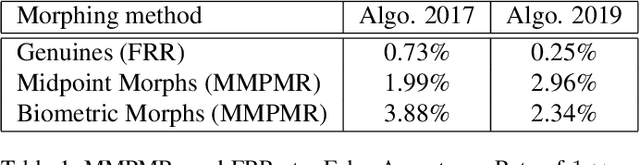

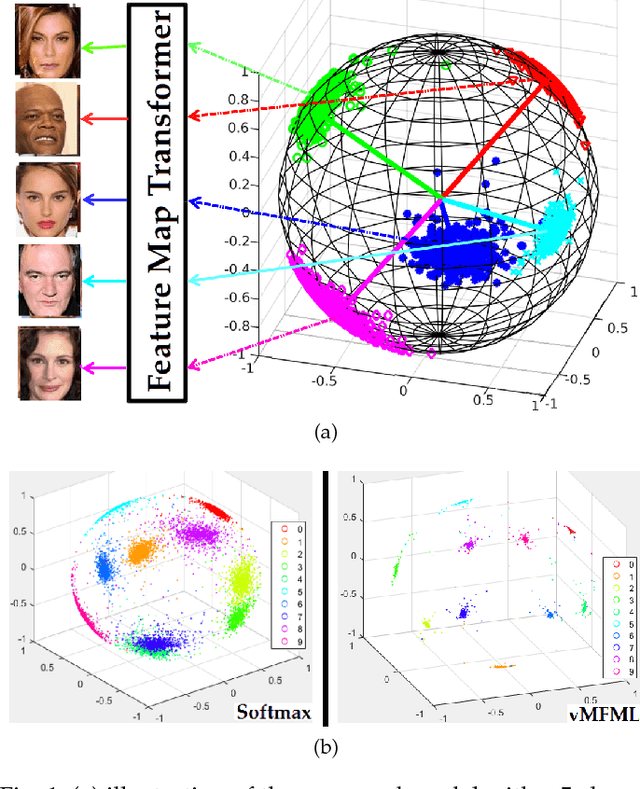

Abstract: In spite of the high performance and reliability of deep learning algorithms in a wide range of everyday applications, many investigations tend to show that a lot of models exhibit biases, discriminating against specific subgroups of the population (e.g. gender, ethnicity). This urges the practitioner to develop fair systems with a uniform/comparable performance across sensitive groups. In this work, we investigate the gender bias of deep Face Recognition networks. In order to measure this bias, we introduce two new metrics, $\mathrm{BFAR}$ and $\mathrm{BFRR}$, that better reflect the inherent deployment needs of Face Recognition systems. Motivated by geometric considerations, we mitigate gender bias through a new post-processing methodology which transforms the deep embeddings of a pre-trained model to give more representation power to discriminated subgroups. It consists in training a shallow neural network by minimizing a Fair von Mises-Fisher loss whose hyperparameters account for the intra-class variance of each gender. Interestingly, we empirically observe that these hyperparameters are correlated with our fairness metrics. In fact, extensive numerical experiments on a variety of datasets show that a careful selection significantly reduces gender bias.

An Assessment of GANs for Identity-related Applications

Dec 18, 2020

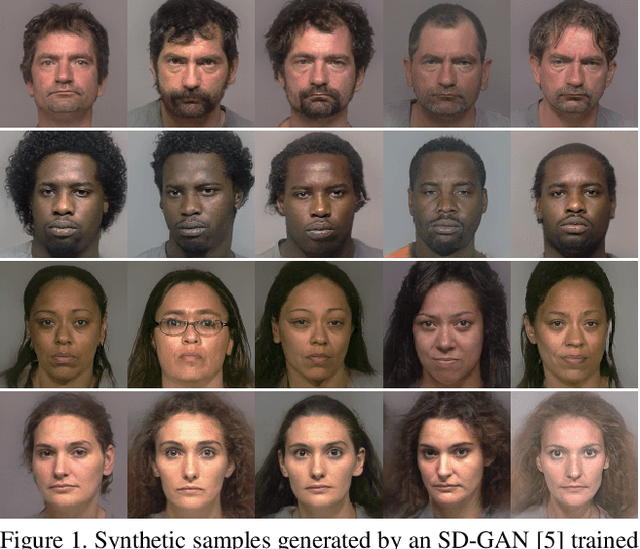

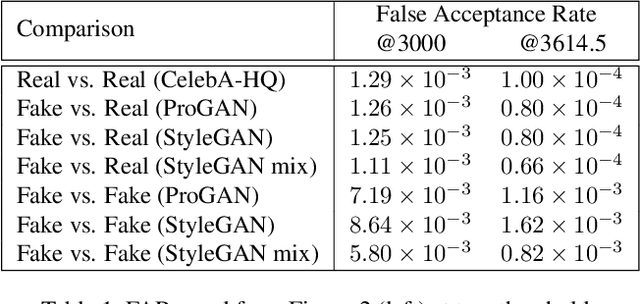

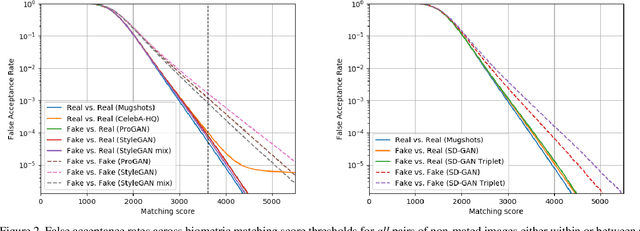

Abstract: Generative Adversarial Networks (GANs) are now capable of producing synthetic face images of exceptionally high visual quality. In parallel to the development of GANs themselves, efforts have been made to develop metrics to objectively assess the characteristics of the synthetic images, mainly focusing on visual quality and the variety of images. Little work has been done, however, to assess overfitting of GANs and their ability to generate new identities. In this paper we apply a state-of-the-art biometric network to various datasets of synthetic images and perform a thorough assessment of their identity-related characteristics. We conclude that GANs can indeed be used to generate new, imagined identities, meaning that applications such as anonymisation of image sets and augmentation of training datasets with distractor images are viable. We also assess the ability of GANs to disentangle identity from other image characteristics and propose a novel GAN triplet loss that we show to improve this disentanglement.

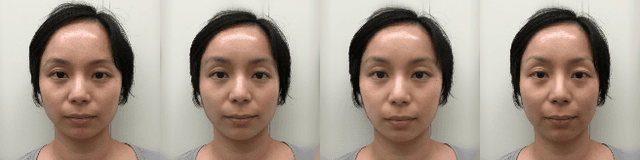

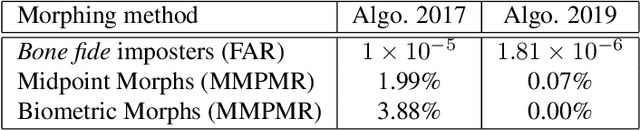

Robustness of Facial Recognition to GAN-based Face-morphing Attacks

Dec 18, 2020

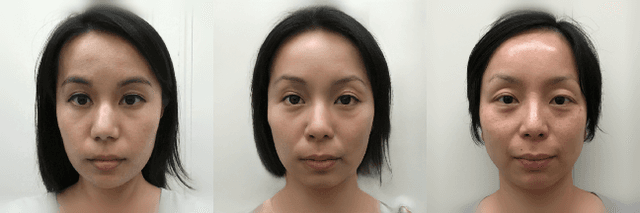

Abstract: Face-morphing attacks have been a cause for concern for a number of years. Striving to remain one step ahead of attackers, researchers have proposed many methods of both creating and detecting morphed images. These detection methods, however, have generally proven to be inadequate. In this work we identify two new, GAN-based methods that an attacker may already have in his arsenal. Each method is evaluated against state-of-the-art facial recognition (FR) algorithms, and we demonstrate that improvements to the fidelity of FR algorithms do lead to a reduction in the success rate of attacks, provided morphed images are considered when setting operational acceptance thresholds.

von Mises-Fisher Mixture Model-based Deep learning: Application to Face Verification

Dec 31, 2017

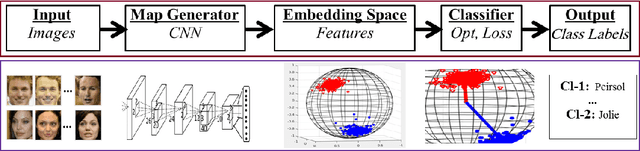

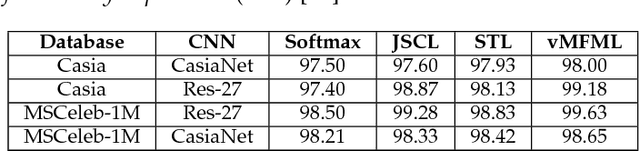

Abstract: A number of pattern recognition tasks, e.g., face verification, can be boiled down to classification or clustering of unit length directional feature vectors whose distance can be simply computed by their angle. In this paper, we propose the von Mises-Fisher (vMF) mixture model as the theoretical foundation for an effective deep-learning of such directional features and derive a novel vMF Mixture Loss and its corresponding vMF deep features. The proposed vMF feature learning achieves the characteristics of discriminative learning, i.e., compacting the instances of the same class while increasing the distance of instances from different classes. Moreover, it subsumes a number of popular loss functions as well as an effective method in deep learning, namely normalization. We conduct extensive experiments on face verification using 4 different challenging face datasets, i.e., LFW, YouTube faces, CACD and IJB-A. Results show the effectiveness and excellent generalization ability of the proposed approach as it achieves state-of-the-art results on the LFW, YouTube faces and CACD datasets and competitive results on the IJB-A dataset.
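The abstract's starting point, unit-length feature vectors compared by their angle, can be illustrated with a minimal NumPy sketch. This is not the paper's vMF Mixture Loss: the function names `l2_normalize`, `angular_distance`, and `vmf_logit` are hypothetical, and `vmf_logit` only computes the exponent $\kappa\,\mu^\top x$ of a single von Mises-Fisher density, leaving out the normalizing constant and the mixture.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Project vectors onto the unit sphere (last axis is the feature axis)."""
    x = np.asarray(x, dtype=np.float64)
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norm, eps)

def angular_distance(a, b):
    """Angle in radians between two feature vectors after normalization."""
    cos = np.sum(l2_normalize(a) * l2_normalize(b), axis=-1)
    # Clip guards against values slightly outside [-1, 1] from rounding.
    return np.arccos(np.clip(cos, -1.0, 1.0))

def vmf_logit(x, mu, kappa):
    """Exponent kappa * mu^T x of a vMF density on the unit sphere.

    Illustrative only: the normalizing constant is omitted, and kappa
    (the concentration) is simply passed in by the caller.
    """
    return kappa * np.sum(l2_normalize(x) * l2_normalize(mu), axis=-1)

if __name__ == "__main__":
    a = np.array([1.0, 0.0])
    b = np.array([0.0, 1.0])
    print(angular_distance(a, b))       # orthogonal vectors
    print(vmf_logit(a, a, kappa=10.0))  # feature aligned with the class mean
```

Because both inputs are normalized first, the magnitude of a raw feature has no effect on the distance; only its direction matters, which is the property the abstract relies on.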

DeepVisage: Making face recognition simple yet with powerful generalization skills

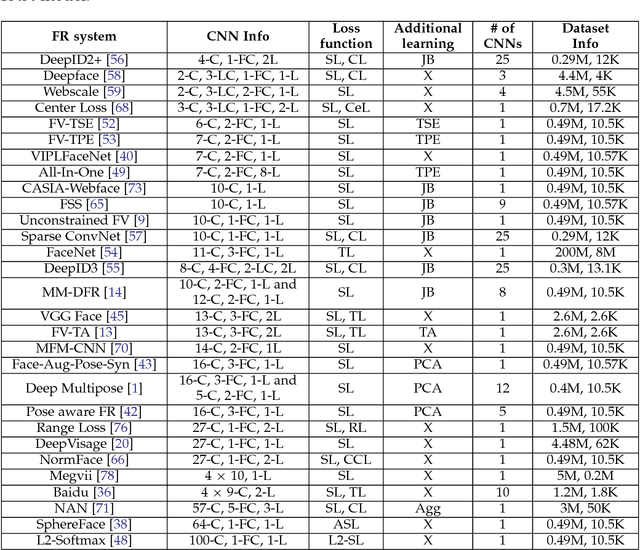

Apr 07, 2017

Abstract: Face recognition (FR) methods report significant performance by adopting the convolutional neural network (CNN) based learning methods. Although CNNs are mostly trained by optimizing the softmax loss, the recent trend shows an improvement of accuracy with different strategies, such as task-specific CNN learning with different loss functions, fine-tuning on target dataset, metric learning and concatenating features from multiple CNNs. Incorporating these tasks obviously requires additional efforts. Moreover, it demotivates the discovery of efficient CNN models for FR which are trained only with identity labels. We focus on this fact and propose an easily trainable and single CNN based FR method. Our CNN model exploits the residual learning framework. Additionally, it uses normalized features to compute the loss. Our extensive experiments show excellent generalization on different datasets. We obtain very competitive and state-of-the-art results on the LFW, IJB-A, YouTube faces and CACD datasets.
