Jean-Rémy Conti

Mitigating Bias in Facial Recognition Systems: Centroid Fairness Loss Optimization

Apr 27, 2025

Abstract: The urging societal demand for fair AI systems has put pressure on the research community to develop predictive models that are not only globally accurate but also meet new fairness criteria, reflecting the lack of disparate mistreatment with respect to sensitive attributes ($\textit{e.g.}$ gender, ethnicity, age). In particular, the variability of the errors made by certain Facial Recognition (FR) systems across specific segments of the population compromises the deployment of the latter, and was judged unacceptable by regulatory authorities. Designing fair FR systems is a very challenging problem, mainly due to the complex and functional nature of the performance measure used in this domain ($\textit{i.e.}$ ROC curves) and because of the huge heterogeneity of the face image datasets usually available for training. In this paper, we propose a novel post-processing approach to improve the fairness of pre-trained FR models by optimizing a regression loss which acts on centroid-based scores. Beyond the computational advantages of the method, we present numerical experiments providing strong empirical evidence of the gain in fairness and of the ability to preserve global accuracy.

Assessing Performance and Fairness Metrics in Face Recognition - Bootstrap Methods

Nov 14, 2022

Abstract: The ROC curve is the major tool for assessing not only the performance but also the fairness properties of a similarity scoring function in Face Recognition. In order to draw reliable conclusions based on empirical ROC analysis, evaluating accurately the uncertainty related to statistical versions of the ROC curves of interest is necessary. For this purpose, we explain in this paper that, because the True/False Acceptance Rates are of the form of U-statistics in the case of similarity scoring, the naive bootstrap approach is not valid here and that a dedicated recentering technique must be used instead. This is illustrated on real data of face images, when applied to several ROC-based metrics such as popular fairness metrics.
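To make the U-statistic remark concrete, here is a minimal sketch of how empirical False and True Acceptance Rates are typically computed from a similarity scoring function. It is an illustration under stated assumptions, not the paper's code: the function name `pairwise_rates`, the use of cosine similarity, and the strict `>` acceptance rule are all choices made for this example. Each rate is an average over pairs of images, so every image contributes to many terms and the terms are not independent. The sketch computes only the plain empirical rates; it does not implement the recentered bootstrap described in the abstract.

```python
import numpy as np


def pairwise_rates(embeddings, labels, threshold):
    """Empirical (FAR, TAR) at a cosine-similarity threshold.

    Hypothetical helper for illustration. Both rates are averages over
    all unordered pairs i < j, which is what gives them the form of
    U-statistics. Requires at least one genuine pair and one impostor
    pair, otherwise the corresponding mean is undefined.
    """
    x = np.asarray(embeddings, dtype=float)
    # Normalize rows so that the dot product equals cosine similarity.
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    labels = np.asarray(labels)

    sims = x @ x.T
    # Indices of all unordered pairs (i, j) with i < j.
    i, j = np.triu_indices(len(labels), k=1)

    same_identity = labels[i] == labels[j]
    accepted = sims[i, j] > threshold

    far = accepted[~same_identity].mean()  # impostor pairs accepted
    tar = accepted[same_identity].mean()   # genuine pairs accepted
    return far, tar
```

Calling `pairwise_rates(embeddings, labels, threshold)` on an `(n, d)` array of embeddings with `n` identity labels returns the two rates at one threshold; sweeping the threshold traces an empirical ROC curve.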

Mitigating Gender Bias in Face Recognition Using the von Mises-Fisher Mixture Model

Oct 24, 2022

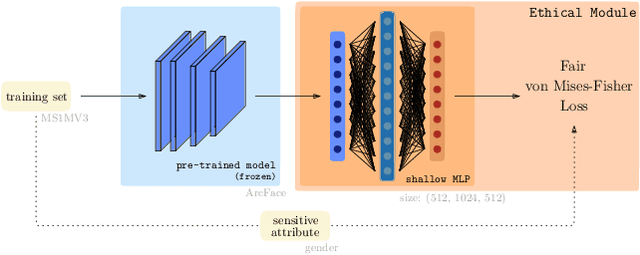

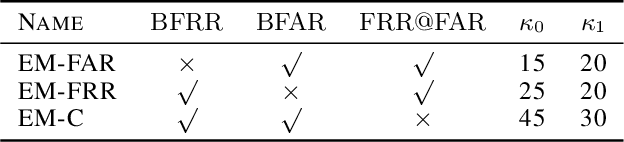

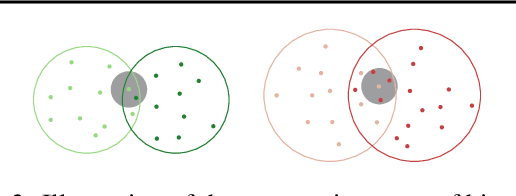

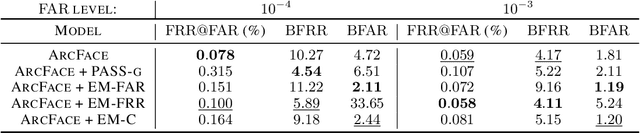

Abstract: In spite of the high performance and reliability of deep learning algorithms in a wide range of everyday applications, many investigations tend to show that a lot of models exhibit biases, discriminating against specific subgroups of the population (e.g. gender, ethnicity). This urges the practitioner to develop fair systems with a uniform/comparable performance across sensitive groups. In this work, we investigate the gender bias of deep Face Recognition networks. In order to measure this bias, we introduce two new metrics, $\mathrm{BFAR}$ and $\mathrm{BFRR}$, that better reflect the inherent deployment needs of Face Recognition systems. Motivated by geometric considerations, we mitigate gender bias through a new post-processing methodology which transforms the deep embeddings of a pre-trained model to give more representation power to discriminated subgroups. It consists in training a shallow neural network by minimizing a Fair von Mises-Fisher loss whose hyperparameters account for the intra-class variance of each gender. Interestingly, we empirically observe that these hyperparameters are correlated with our fairness metrics. In fact, extensive numerical experiments on a variety of datasets show that a careful selection significantly reduces gender bias.
