Richard T. Marriott

An Assessment of GANs for Identity-related Applications

Dec 18, 2020

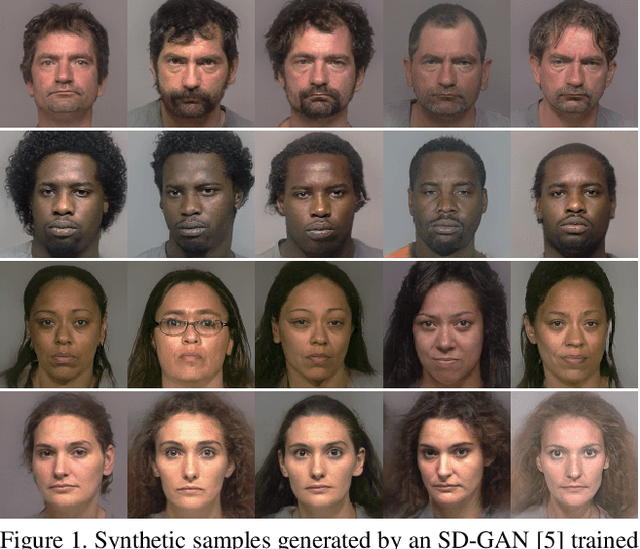

Abstract: Generative Adversarial Networks (GANs) are now capable of producing synthetic face images of exceptionally high visual quality. In parallel with the development of GANs themselves, efforts have been made to develop metrics that objectively assess the characteristics of synthetic images, focusing mainly on visual quality and image variety. Little work has been done, however, to assess overfitting of GANs and their ability to generate new identities. In this paper we apply a state-of-the-art biometric network to various datasets of synthetic images and perform a thorough assessment of their identity-related characteristics. We conclude that GANs can indeed be used to generate new, imagined identities, meaning that applications such as anonymisation of image sets and augmentation of training datasets with distractor images are viable. We also assess the ability of GANs to disentangle identity from other image characteristics and propose a novel GAN triplet loss that we show improves this disentanglement.
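Identity-related assessment of this kind rests on comparing face embeddings produced by a biometric network. The sketch below shows two generic ingredients, assuming embeddings are already available as NumPy arrays: a standard triplet margin loss (the common FaceNet-style form, not necessarily the GAN triplet loss proposed in the paper) and a check of how close each synthetic face is to its nearest real identity. The function names and the default margin of 0.2 are illustrative assumptions.

```python
import numpy as np


def l2_normalise(x, eps=1e-12):
    """Scale each row of x to unit Euclidean length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard triplet margin loss on L2-normalised embeddings.

    Each row of `anchor` and `positive` shares an identity; each row of
    `negative` has a different identity. The loss is zero once the negative
    is at least `margin` further away (in squared distance) than the positive.
    """
    a, p, n = (l2_normalise(v) for v in (anchor, positive, negative))
    d_ap = np.sum((a - p) ** 2, axis=-1)  # squared distance to positive
    d_an = np.sum((a - n) ** 2, axis=-1)  # squared distance to negative
    return float(np.mean(np.maximum(d_ap - d_an + margin, 0.0)))


def nearest_real_similarity(synthetic, real):
    """Cosine similarity between each synthetic embedding and its closest real one."""
    sims = l2_normalise(synthetic) @ l2_normalise(real).T  # (n_synth, n_real)
    return sims.max(axis=1)


# Toy 2-D embeddings, purely for illustration
anchor = np.array([[1.0, 0.0]])
same_id = np.array([[0.9, 0.1]])
other_id = np.array([[0.0, 1.0]])
print(triplet_loss(anchor, same_id, other_id))  # prints 0.0
```

Under this kind of check, a synthetic face whose nearest-real similarity stays below the biometric network's verification threshold would count as a new identity at that operating point; the threshold itself is an assumption that would come from the network being used.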

Robustness of Facial Recognition to GAN-based Face-morphing Attacks

Dec 18, 2020

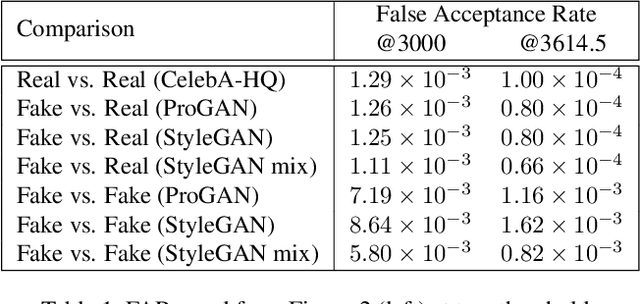

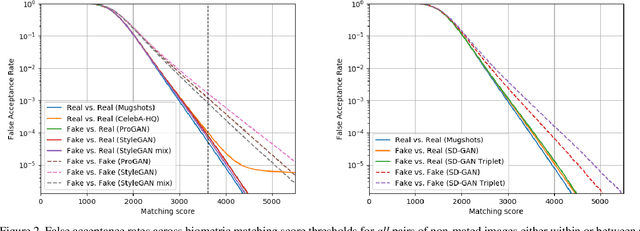

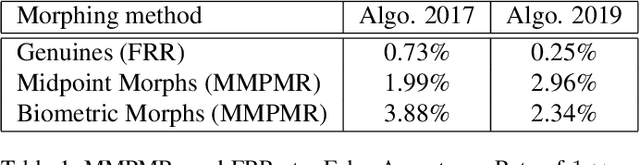

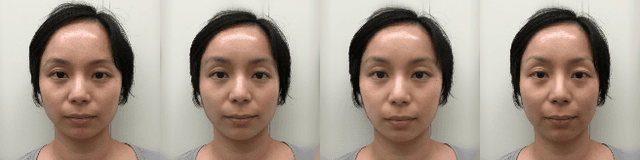

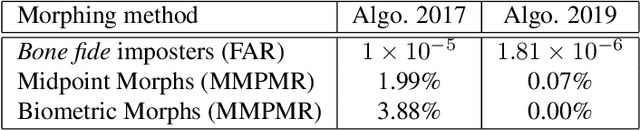

Abstract: Face-morphing attacks have been a cause for concern for a number of years. Striving to remain one step ahead of attackers, researchers have proposed many methods of both creating and detecting morphed images. These detection methods, however, have generally proven inadequate. In this work we identify two new GAN-based methods that an attacker may already have in their arsenal. Each method is evaluated against state-of-the-art facial recognition (FR) algorithms, and we demonstrate that improvements to the fidelity of FR algorithms do reduce the success rate of attacks, provided that morphed images are considered when setting operational acceptance thresholds.
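The link between acceptance thresholds and attack success can be made concrete. The sketch below, assuming that higher comparison scores mean greater similarity, picks an acceptance threshold for a target false-match rate (FMR) from impostor scores and then measures the fraction of morphs accepted against both contributing subjects. The function names and this particular success criterion are illustrative assumptions, not the evaluation protocol used in the paper.

```python
import numpy as np


def threshold_at_fmr(impostor_scores, target_fmr):
    """Lowest acceptance threshold whose false-match rate is <= target_fmr.

    A comparison is accepted when score >= threshold. Requires 0 <= target_fmr < 1.
    """
    s = np.sort(np.asarray(impostor_scores, dtype=float))[::-1]  # descending
    k = int(np.floor(target_fmr * len(s)))  # max number of impostors we may accept
    # Place the threshold just above the (k+1)-th highest impostor score,
    # so at most k impostor comparisons are accepted.
    return float(np.nextafter(s[k], np.inf))


def morph_success_rate(scores_vs_subject_a, scores_vs_subject_b, threshold):
    """Fraction of morphs accepted as *both* contributing subjects."""
    accepted_a = np.asarray(scores_vs_subject_a) >= threshold
    accepted_b = np.asarray(scores_vs_subject_b) >= threshold
    return float(np.mean(accepted_a & accepted_b))


impostor = np.arange(1.0, 11.0)  # toy impostor scores 1..10
t = threshold_at_fmr(impostor, target_fmr=0.1)
rate = morph_success_rate([9.5, 9.5, 5.0], [9.5, 5.0, 9.5], t)  # 1 of 3 morphs accepted
```

Including morph comparisons in the score set used to pick the threshold can raise it, which is one way the morph success rate can be traded off against the bona fide operating point.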

A 3D GAN for Improved Large-pose Facial Recognition

Dec 18, 2020

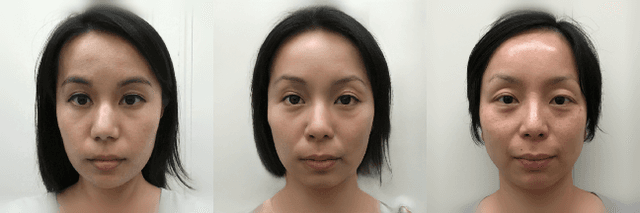

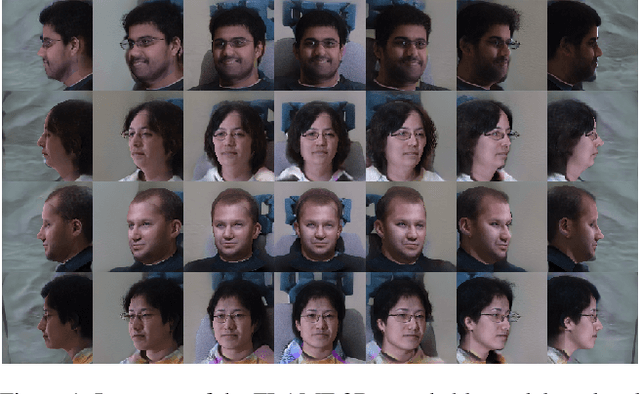

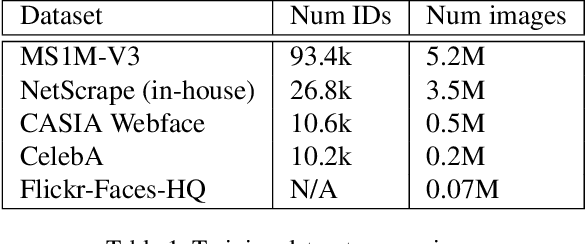

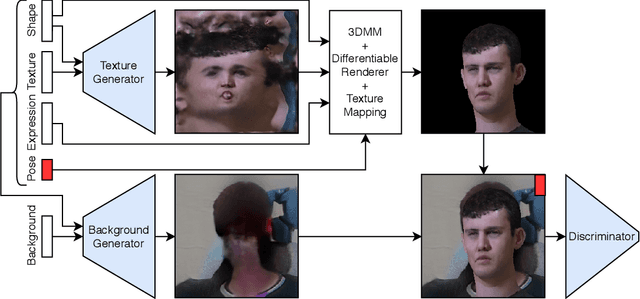

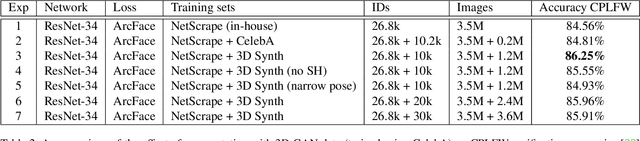

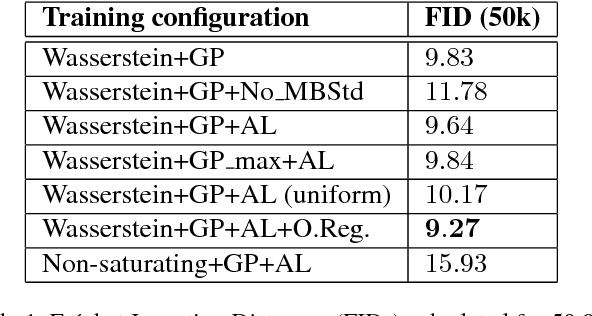

Abstract: Facial recognition using deep convolutional neural networks relies on the availability of large datasets of face images. Many examples of identities are needed, and for each identity a large variety of images is needed for the network to learn robustness to intra-class variation. In practice, such datasets are difficult to obtain, particularly those containing adequate variation of pose. Generative Adversarial Networks (GANs) offer a potential solution to this problem because of their ability to generate realistic synthetic images. However, recent studies have shown that current methods of disentangling pose from identity are inadequate. In this work we incorporate a 3D morphable model into the generator of a GAN in order to learn a nonlinear texture model from in-the-wild images. This allows generation of new, synthetic identities and manipulation of pose and expression without compromising identity. Our synthesised data is used to augment training of facial recognition networks, with performance evaluated on the challenging CFPW and Cross-Pose LFW datasets.
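The geometric side of incorporating a 3D morphable model (3DMM) can be illustrated with the classical linear model: shape is a mean plus linear combinations of identity and expression bases, and pose is applied as a rotation before projection. Holding the identity coefficients fixed while varying expression and yaw is what allows pose to change without altering identity. This is a toy sketch in which randomly generated bases stand in for a real 3DMM; the function names, the yaw-only rotation and the weak-perspective camera are assumptions, and the paper's learned nonlinear texture model is not shown.

```python
import numpy as np


def rotation_y(yaw):
    """Rotation about the vertical (y) axis; yaw in radians."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])


def synthesise_shape(mean_shape, id_basis, exp_basis, alpha, beta):
    """Linear 3DMM: S = mean + B_id @ alpha + B_exp @ beta, returned as (N, 3) vertices."""
    s = mean_shape + id_basis @ alpha + exp_basis @ beta
    return s.reshape(-1, 3)


def project(vertices, yaw, scale=1.0, translation=(0.0, 0.0)):
    """Rotate vertices by `yaw`, then apply a weak-perspective (scaled orthographic) projection."""
    posed = vertices @ rotation_y(yaw).T
    return scale * posed[:, :2] + np.asarray(translation)


# Toy model: random bases stand in for a real 3DMM (hypothetical sizes).
rng = np.random.default_rng(0)
n_vertices, n_id, n_exp = 5, 4, 2
mean_shape = rng.normal(size=3 * n_vertices)
id_basis = rng.normal(size=(3 * n_vertices, n_id))
exp_basis = rng.normal(size=(3 * n_vertices, n_exp))

alpha = rng.normal(size=n_id)  # identity coefficients: held fixed
for yaw_deg, beta in [(0, np.zeros(n_exp)), (60, rng.normal(size=n_exp))]:
    verts = synthesise_shape(mean_shape, id_basis, exp_basis, alpha, beta)
    points_2d = project(verts, np.deg2rad(yaw_deg))
    print(yaw_deg, points_2d.shape)
```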

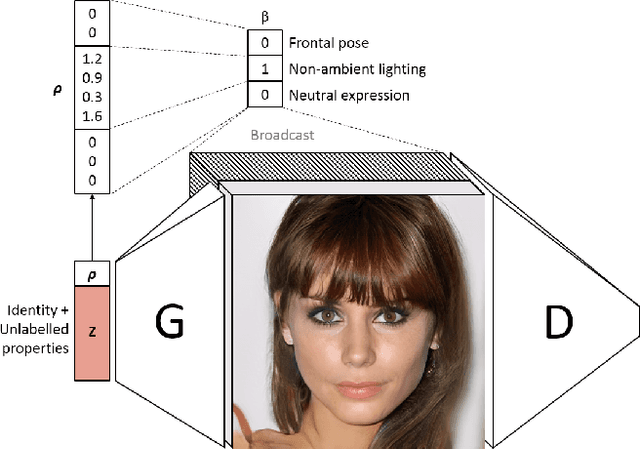

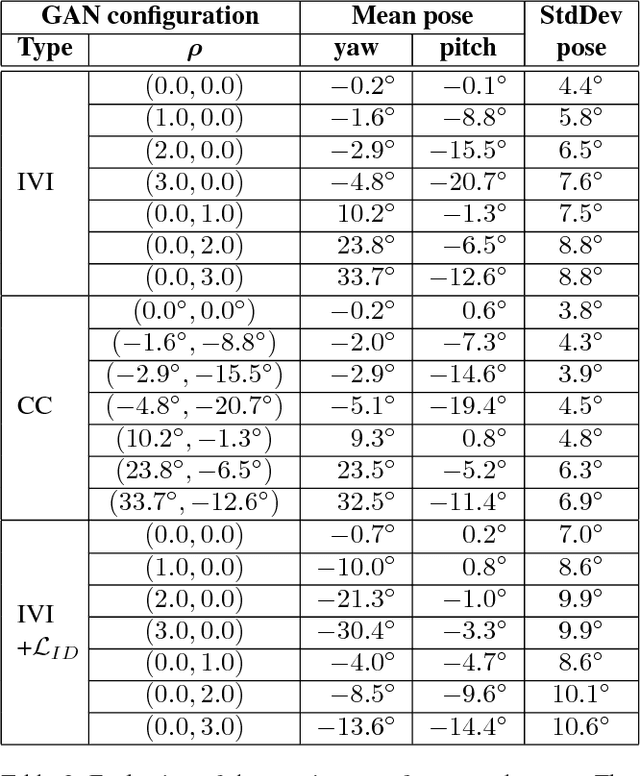

Intra-class Variation Isolation in Conditional GANs

Nov 27, 2018

Abstract: Current state-of-the-art conditional generative adversarial networks (C-GANs) require strong supervision via labeled datasets in order to generate images with continuously adjustable, disentangled semantics. In this paper we introduce a new formulation of the C-GAN that is able to learn realistic models with continuous, semantically meaningful input parameters and which has the advantage of requiring only the weak supervision of binary attribute labels. We coin the method intra-class variation isolation (IVI) and the resulting network the IVI-GAN. The method allows continuous control over attributes of synthesised images even where precise labels are not readily available. For example, given only labels obtained from a simple ambient/non-ambient lighting classifier, IVI has enabled us to learn a generative face-image model with controllable lighting that is disentangled from other factors in the synthesised images, such as identity. We evaluate IVI-GAN on the CelebA and CelebA-HQ datasets, learning to disentangle attributes such as lighting, pose, expression and age, and provide a quantitative comparison of IVI-GAN with a classical continuous C-GAN.

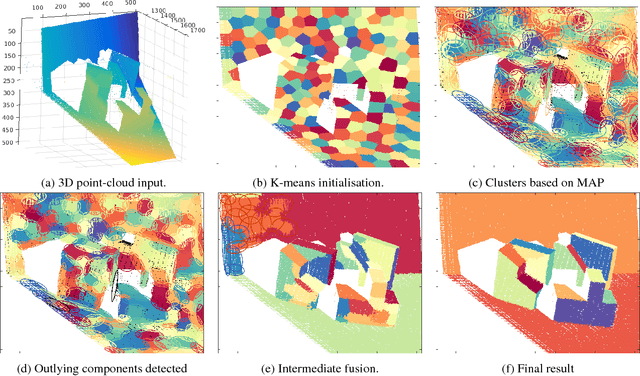

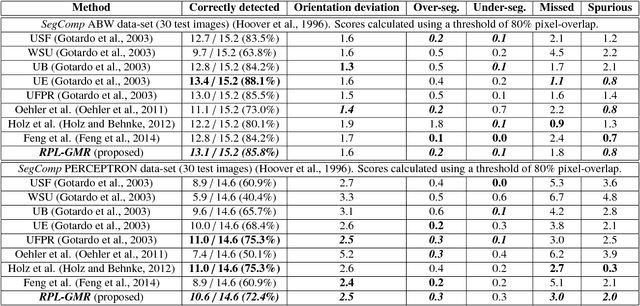

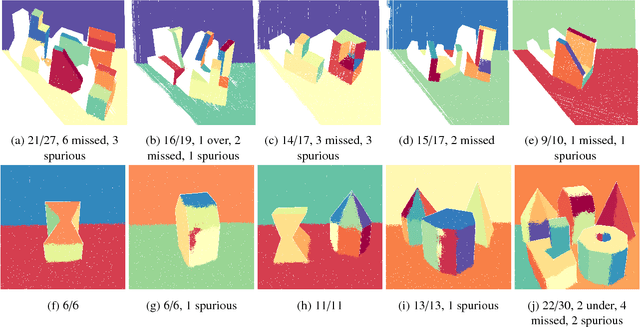

Plane-extraction from depth-data using a Gaussian mixture regression model

Mar 30, 2018

Abstract: We propose a novel algorithm for unsupervised extraction of piecewise planar models from depth data. Among other applications, such models are a good way of enabling autonomous agents (robots, cars, drones, etc.) to perceive their surroundings effectively and to navigate in three dimensions. We propose to do this by fitting the data with a piecewise-linear Gaussian mixture regression model whose components are skewed over planes, making them flat in appearance rather than ellipsoidal; by embedding an outlier-trimming process that is formally incorporated into the proposed expectation-maximization algorithm; and by selectively fusing contiguous, coplanar components. Part of our motivation is to achieve more accurate plane extraction by allowing each model component to make use of all available data through probabilistic clustering. The algorithm is thoroughly evaluated against a standard benchmark and is shown to rank among the best of the existing state-of-the-art methods.

* 11 pages, 2 figures, 1 table
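The core of such a fitting procedure is an expectation-maximization (EM) loop over a mixture of planar regressions. The sketch below is a deliberately simplified stand-in, not the paper's algorithm: it assumes residuals measured along z only (z = a·x + b·y + c plus Gaussian noise) rather than plane-skewed components, a fixed number of components k, and trimming of a fixed fraction of the least likely points before each M-step; the fusion of contiguous, coplanar components is omitted. The function name and its parameters are hypothetical.

```python
import numpy as np


def fit_plane_mixture(points, k, n_iter=50, trim_fraction=0.05, seed=0):
    """EM for a mixture of k planes z = a*x + b*y + c with Gaussian noise in z.

    points: (N, 3) array of (x, y, z). Before each M-step after the first,
    the `trim_fraction` least likely points are excluded (outlier trimming).
    Returns (coeffs (k, 3), sigma (k,), weights (k,), resp (N, k)).
    """
    rng = np.random.default_rng(seed)
    n = len(points)
    X = np.column_stack([points[:, 0], points[:, 1], np.ones(n)])  # design matrix
    z = points[:, 2]
    resp = rng.dirichlet(np.ones(k), size=n)  # random soft initial assignment
    coeffs = np.zeros((k, 3))
    sigma = np.ones(k)
    weights = np.full(k, 1.0 / k)
    keep = np.ones(n, dtype=bool)
    for _ in range(n_iter):
        # M-step: weighted least squares per plane on the retained points
        Xk, zk = X[keep], z[keep]
        for j in range(k):
            w = resp[keep, j]
            A = (Xk * w[:, None]).T @ Xk + 1e-9 * np.eye(3)  # X^T W X (tiny ridge)
            b = (Xk * w[:, None]).T @ zk                     # X^T W z
            coeffs[j] = np.linalg.solve(A, b)
            r = zk - Xk @ coeffs[j]
            sigma[j] = np.sqrt(np.sum(w * r ** 2) / max(w.sum(), 1e-12)) + 1e-6
            weights[j] = w.sum() / keep.sum()
        # E-step: log of pi_j * N(z | plane_j, sigma_j^2), dropping the shared constant
        res = z[:, None] - X @ coeffs.T  # (n, k) vertical residuals
        log_p = np.log(weights + 1e-12) - np.log(sigma) - 0.5 * (res / sigma) ** 2
        log_norm = np.logaddexp.reduce(log_p, axis=1)  # per-point log-likelihood
        resp = np.exp(log_p - log_norm[:, None])
        # Outlier trimming: exclude the least likely points from the next M-step
        keep = np.ones(n, dtype=bool)
        keep[np.argsort(log_norm)[: int(trim_fraction * n)]] = False
    return coeffs, sigma, weights, resp


# Toy depth data: two planar patches (hypothetical example)
rng = np.random.default_rng(1)
xy = rng.uniform(0.0, 1.0, size=(200, 2))
z = np.where(xy[:, 0] < 0.5, 0.2 * xy[:, 1], 1.0 + xy[:, 0])
coeffs, sigma, weights, resp = fit_plane_mixture(np.column_stack([xy, z]), k=2)
labels = resp.argmax(axis=1)  # hard plane assignment per point
```

Because every point carries a responsibility for every component, each plane estimate draws on all retained data, in the spirit of the probabilistic-clustering motivation above. Random initialisation can leave EM in a poor local optimum, so in practice one would restart from several seeds or initialise from RANSAC-style plane hypotheses.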
